Open-Assistant

Leverage Initial Prompts from ChatGPT

There are datasets being built based on prompts and responses of ChatGPT, for instance:

- ChatGPT-Comparison-Detection Project

- How Close is ChatGPT to Human Experts? Comparison Corpus, Evaluation, and Detection

I thought this might be helpful. Collecting ChatGPT responses to some complex prompts (coding, puzzles) that are hard to generate might also be very helpful?

We are not using output from ChatGPT. It is against their ToS, and we want to build a better bot. Thank you for bringing this up. We can, however, research bot-output detectors and compare our bot's output to human output as an eval. If you want to work on this, lmk.

Related to this issue: https://github.com/LAION-AI/Open-Assistant/issues/792

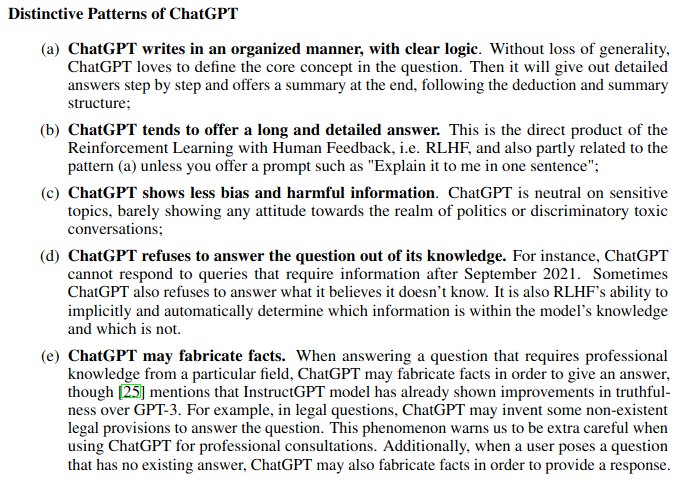

@hooman650 Thanks for linking the paper. The analysis on p. 6 is interesting. We could think about including points a) - d) rephrased as instructions in our prompting-guide:

Oh - @andreaskoepf you gave me a great idea. @Rallio67 - we could include these as part of our sparrow prompts actually and try to see if our answers are more informative.

You are a kind and wise chatbot ... blah blah... When asked questions, you define the core concept of the question and give detailed answers step by step and then offer a summary at the end. ...

Then you give examples like this.

Same for points b-d.

Then we run some data through a QG/QA generator with this prompt and some background text.
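A rough sketch of how such a prompt could be put together before it goes to the generator. Everything here is an assumption for illustration: the `PREAMBLE` wording is paraphrased from the comment above, and `Example` and `build_prompt` are hypothetical names, not part of the Open-Assistant codebase. The generator call itself is left out, since the thread does not say which one would be used.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical preamble, paraphrasing the instruction proposed in the thread.
PREAMBLE = (
    "You are a kind and wise chatbot. When asked questions, you define the "
    "core concept of the question, give detailed answers step by step, "
    "and then offer a summary at the end."
)


@dataclass
class Example:
    """One few-shot question/answer pair shown to the generator."""
    question: str
    answer: str


def build_prompt(background: str, examples: List[Example],
                 preamble: str = PREAMBLE) -> str:
    """Join the preamble, few-shot examples, and background text into one prompt.

    The prompt ends with an open "Question:" line so the generator
    continues with a new question (and answer) about the background text.
    """
    parts = [preamble, ""]
    for ex in examples:
        parts.append(f"Question: {ex.question}")
        parts.append(f"Answer: {ex.answer}")
        parts.append("")
    parts.append(f"Background: {background}")
    parts.append("Question:")
    return "\n".join(parts)
```

The same function could be reused for points b-d by passing a different `preamble`, which would make it easy to compare how informative the generated answers are under each instruction.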