Open-Assistant

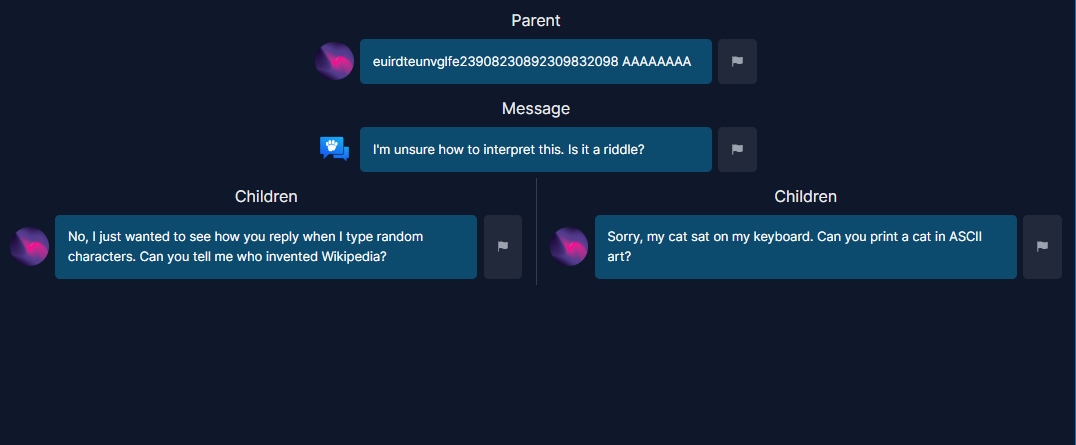

Create a page to view a message in the context of its entire conversation

Given a unique message id, we should be able to fetch that message's full conversation and then render all the messages before and after.

To finish this we need:

- [ ] An API route to call, `/api/v1/messages/{message_id}/conversation`, given a `message_id`
- [ ] A page like `/messages/{message_id}` that includes the message ID in the URL and then fetches the conversation and presents it.
- [ ] The messages should display which message is from the assistant and which is from a prompter.
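As a rough sketch of what the page could do once the conversation has been fetched, the snippet below splits an ordered list of messages around the one named in the URL and labels each message's role. The `ConversationMessage` shape and its `isAssistant` field are assumptions made for illustration, not the project's actual schema.

```typescript
// Hypothetical message shape; field names are assumptions, not the real schema.
interface ConversationMessage {
  id: string;
  text: string;
  isAssistant: boolean;
}

interface ConversationView {
  before: ConversationMessage[];
  target: ConversationMessage;
  after: ConversationMessage[];
}

// Split an already-ordered conversation around the message from the URL.
// Returns null when the message ID is not part of the conversation.
function splitConversation(
  messages: ConversationMessage[],
  messageId: string,
): ConversationView | null {
  const index = messages.findIndex((m) => m.id === messageId);
  const target = messages[index];
  if (index === -1 || target === undefined) return null;
  return {
    before: messages.slice(0, index),
    target,
    after: messages.slice(index + 1),
  };
}

// Label used when rendering, so each message shows who wrote it.
function roleLabel(message: ConversationMessage): "assistant" | "prompter" {
  return message.isAssistant ? "assistant" : "prompter";
}
```

The page would render `before`, then highlight `target`, then render `after`, using `roleLabel` for each entry.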

Blocked by #307

Hey! I'd like to contribute to the project. This idea seems cool, and I think I could start by creating the endpoint first.

Great! I'll assign this to you. If you're excited, #307 is also a good and highly related starting point.

Thanks! Will start with this one now, as I had to finish another Issue.

I think we might need to use the `/api/v1/frontend_messages/{message_id}/conversation` backend call so that we can use the web's version of the task ID. Alternatively, we can fetch the task's details from the Task table and get the backend's version of the ID.

Also, to clarify, we need a website API handler in website/src/pages/api/conversations that takes a message ID and then gets the data from the backend.

Right now we have both a web NextJS API layer and a FastAPI layer. The web API layer mostly does user authentication and then acts as a proxy to the FastAPI layer.
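To make the "authenticate, then proxy" split concrete, here is a minimal sketch of the decision the web API layer makes before forwarding a request. The `WebSession` and `ProxyPlan` types and the `planConversationRequest` function are hypothetical names for illustration; only the backend path comes from this issue.

```typescript
// Hypothetical types; none of these names come from the Open-Assistant codebase.
interface WebSession {
  userId: string;
}

type ProxyPlan =
  | { kind: "reject"; status: 401; error: string }
  | { kind: "forward"; backendPath: string };

// Decide what the web layer should do: refuse unauthenticated callers,
// otherwise forward to the FastAPI route named in this issue.
function planConversationRequest(
  session: WebSession | null,
  messageId: string,
): ProxyPlan {
  if (session === null) {
    return { kind: "reject", status: 401, error: "Not authenticated" };
  }
  const safeId = encodeURIComponent(messageId);
  return {
    kind: "forward",
    backendPath: `/api/v1/messages/${safeId}/conversation`,
  };
}
```

A real handler would then perform the HTTP call for the `forward` case and relay the backend's status and body to the browser.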

Actually, tracking the backend conversation, the frontend_message endpoint might go away.

So we should fetch the true task content from the RegisteredTask table within the web API handler and then pull out the true ID from the task field.
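A minimal sketch of pulling the backend ID out of a stored task follows. It assumes the `task` field holds a JSON object with a string `id` property; that layout, along with the `RegisteredTaskRow` type and `backendTaskId` helper, is an assumption for illustration and should be checked against the real table.

```typescript
// Hypothetical row shape: `task` is assumed to be stored JSON with an `id` key.
interface RegisteredTaskRow {
  id: string; // the web's own ID for the row
  task: unknown; // the backend task payload as stored
}

// Return the backend's ID from the stored task, or null if it is missing
// or not a string.
function backendTaskId(row: RegisteredTaskRow): string | null {
  const task = row.task;
  if (typeof task !== "object" || task === null) return null;
  const id = (task as Record<string, unknown>)["id"];
  return typeof id === "string" ? id : null;
}
```

Treating `task` as `unknown` and narrowing it keeps the handler from trusting a payload whose shape may change on the backend side.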

I think I have implemented some of the API endpoints for #309, which is now draft #440. Maybe it's helpful :)

Yup! I think you knocked out all the API types there

could this be closed? I think we already have the required feature:

or do we need to recursively go through the parents?
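If walking the parents did turn out to be necessary, it could look roughly like the sketch below, which follows parent links from a message up to the root. The `ThreadMessage` shape with a `parentId` field is an assumption for illustration, and the loop is written iteratively with a cycle guard rather than recursively.

```typescript
// Hypothetical shape: each message is assumed to know its parent's ID.
interface ThreadMessage {
  id: string;
  parentId: string | null;
}

// Follow parent links from `id` up to the root and return the chain
// root-first, ending with the starting message itself.
function threadFromRoot(
  byId: Map<string, ThreadMessage>,
  id: string,
): ThreadMessage[] {
  const chain: ThreadMessage[] = [];
  const seen = new Set<string>(); // guards against accidental cycles
  let current = byId.get(id);
  while (current !== undefined && !seen.has(current.id)) {
    seen.add(current.id);
    chain.unshift(current);
    current = current.parentId === null ? undefined : byId.get(current.parentId);
  }
  return chain;
}
```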

We've gotten far enough for the MVP and more. We'll make more issues later after getting user feedback.

Okay, thanks! Yeah, I was looking and we seem to have enough features.

When we need to work more on that, I will happily do it!