Open-Assistant

Ability to tag and rate chat responses, similar to how we do in the label reply task

I think being able to flag, tag, and rate chat responses in the chat UI could be a very useful capability for a few different things:

- It could be useful for training various workflows around safety and the like.

- It could also help create a good flywheel of training data from users of the chat.

We have #2475 logged for message reporting (which I think is already supported in the backend), and of course we have thumbs up/down. Maybe that could be expanded to specific tags.

Yeah, I guess that's what I mean too: the ability to expand the thumbs data into more granular labels if a user is so inclined.
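As a rough illustration of "thumbs plus optional granular labels", here is a minimal sketch of a feedback record. The `ChatFeedback` class, the `Rating` enum, and the tag names in `ALLOWED_TAGS` are all hypothetical placeholders, not Open-Assistant's actual backend schema; the point is only that the thumbs rating stays optional and tags are checked against a fixed vocabulary.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import FrozenSet, Optional

# Hypothetical tag vocabulary; the real set would need to be agreed on.
ALLOWED_TAGS: FrozenSet[str] = frozenset(
    {"spam", "fails_task", "not_appropriate", "factually_incorrect", "helpful", "creative"}
)


class Rating(Enum):
    THUMBS_DOWN = -1
    THUMBS_UP = 1


@dataclass
class ChatFeedback:
    """Hypothetical feedback record: an optional thumbs rating plus optional tags."""

    message_id: str
    rating: Optional[Rating] = None
    tags: FrozenSet[str] = field(default_factory=frozenset)

    def __post_init__(self) -> None:
        # Reject tags outside the agreed vocabulary so the collected data stays consistent.
        unknown = set(self.tags) - ALLOWED_TAGS
        if unknown:
            raise ValueError(f"unknown tags: {sorted(unknown)}")
        # Normalize whatever iterable was passed (e.g. a set) to an immutable frozenset.
        self.tags = frozenset(self.tags)
```

For example, `ChatFeedback("msg-123", Rating.THUMBS_DOWN, {"factually_incorrect"})` records a thumbs down with one extra label, while `ChatFeedback("msg-456", Rating.THUMBS_UP)` is just the plain thumbs data we already collect.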

It could also be good (as a separate feature request) to "donate" or share individual chats to the public training data in some way.

For example, I'd actually feel good about donating a lot of my chats to the public data and then tagging them as best I can.

We should definitely collect more feedback about the quality of generated messages. We should also integrate inference into the message generation, so that users can correct the output of the system.

I found these classes interesting, which John Schulman mentioned in a presentation. It is not trivial to automate this rating, but maybe we could give users the chance to give feedback along these lines:

Just watching that part now; he says "this requires some oracle to tell you if correct or not".

I wonder, then, whether such tagged and donated conversations could become candidate tasks for labelers to provide that ground truth. Just as the data effort so far has been based on human inputs, now that we have chat, some of the input tasks could naturally fall out of the back end of chat usage and become even more useful data once they are "ground truthed" by the OA community itself in some way.
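One way to picture this is a filter that turns donated, tagged conversations into candidate labeling tasks. This is a minimal sketch under stated assumptions: `Conversation`, `LabelingTask`, `candidate_tasks`, and the `NEEDS_GROUND_TRUTH` tag set are hypothetical names, and the rule "only donated chats whose tags suggest an oracle check is needed" is just one possible selection policy.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Conversation:
    """Hypothetical chat conversation as the chat backend might store it."""

    conversation_id: str
    donated: bool  # user opted in to sharing it as public training data
    tags: FrozenSet[str]  # tags the user attached to responses


@dataclass(frozen=True)
class LabelingTask:
    """Hypothetical task asking community labelers to supply ground truth."""

    conversation_id: str
    reason: str  # which tags triggered the task, comma-separated


# Hypothetical: tags suggesting a response needs an "oracle" to confirm correctness.
NEEDS_GROUND_TRUTH: FrozenSet[str] = frozenset({"factually_incorrect", "fails_task"})


def candidate_tasks(conversations: List[Conversation]) -> List[LabelingTask]:
    tasks: List[LabelingTask] = []
    for conv in conversations:
        if not conv.donated:
            continue  # never turn private chats into public labeling tasks
        flagged = conv.tags & NEEDS_GROUND_TRUTH
        if flagged:
            tasks.append(LabelingTask(conv.conversation_id, ",".join(sorted(flagged))))
    return tasks
```

Keeping the donation check first reflects the idea above that only chats users chose to share would feed back into the community labeling queue.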

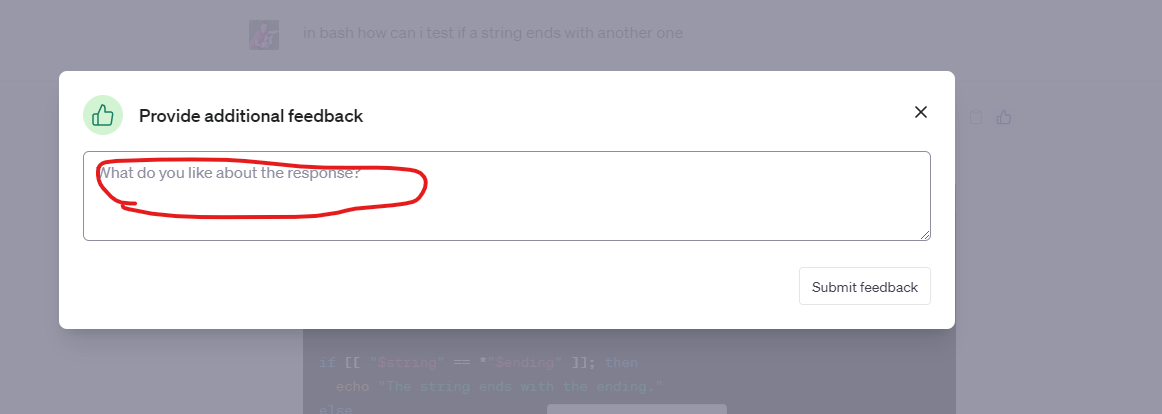

I notice that ChatGPT just lets you type in what your issues are with a response, e.g. it lets me say what was good or bad about the response:

I'm guessing they then feed that into some additional downstream models later.

So being able to just tell it what the problem is might be the most flexible approach in the long run, assuming you then have an NLP pipeline that can make sense of it and take advantage of it.

I guess the idea is that a labels-and-flags taxonomy is always going to be a little imperfect and restrictive, so why not let the user give more feedback as text and assume some other LLM will then make sense of it in a more useful way, since on average it may be higher-quality or more specific feedback.
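A minimal sketch of that free-text approach, assuming a pluggable classifier that turns the user's text into tags. In the idea described above the classifier would be an LLM; the `keyword_classifier` here is only a placeholder heuristic so the sketch runs without a model, and `TextFeedback`, `ingest`, and the `KEYWORDS` mapping are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet

# A classifier maps free-text feedback to a set of tags. An LLM would fill this
# role in practice; the keyword function below is only a runnable stand-in.
Classifier = Callable[[str], FrozenSet[str]]

# Hypothetical keyword-to-tag mapping used by the placeholder classifier.
KEYWORDS: Dict[str, str] = {
    "wrong": "factually_incorrect",
    "incorrect": "factually_incorrect",
    "didn't answer": "fails_task",
    "rude": "not_appropriate",
}


def keyword_classifier(text: str) -> FrozenSet[str]:
    lowered = text.lower()
    return frozenset(tag for kw, tag in KEYWORDS.items() if kw in lowered)


@dataclass(frozen=True)
class TextFeedback:
    message_id: str
    text: str  # raw user text, kept so it can be re-classified by a better model later
    derived_tags: FrozenSet[str]


def ingest(message_id: str, text: str, classify: Classifier = keyword_classifier) -> TextFeedback:
    # Store the raw text alongside whatever tags the current classifier derives.
    return TextFeedback(message_id, text, classify(text))
```

Keeping the raw text next to the derived tags means the taxonomy can change later without losing what users actually said; the text can simply be re-run through a newer classifier.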