Add warning message near the chat window about model hallucinations

A broader audience is now starting to chat with our models. I saw multiple YouTube videos in which people were unsure whether OpenAssistant had an internet connection (search etc.). They simply asked OA, which sometimes claimed to have internet access, and people took that as a potentially "official" reply. In its current state, OA has no tool access and does not use search results to formulate its responses.

IMO, for a general audience we need to add a warning near the chat that raises awareness of hallucinations.

In general, outputs look very convincing, and not everyone is aware of how the system works or how convincingly the model can produce factually wrong outputs, potentially even in an authoritative tone. By design, LLMs are great imitators (that's their training objective). People need to be skeptical about "facts" presented by the model.

The situation will improve somewhat with plugins that can retrieve relevant information (e.g. via web search), but a warning would still be appropriate.

see also #2744

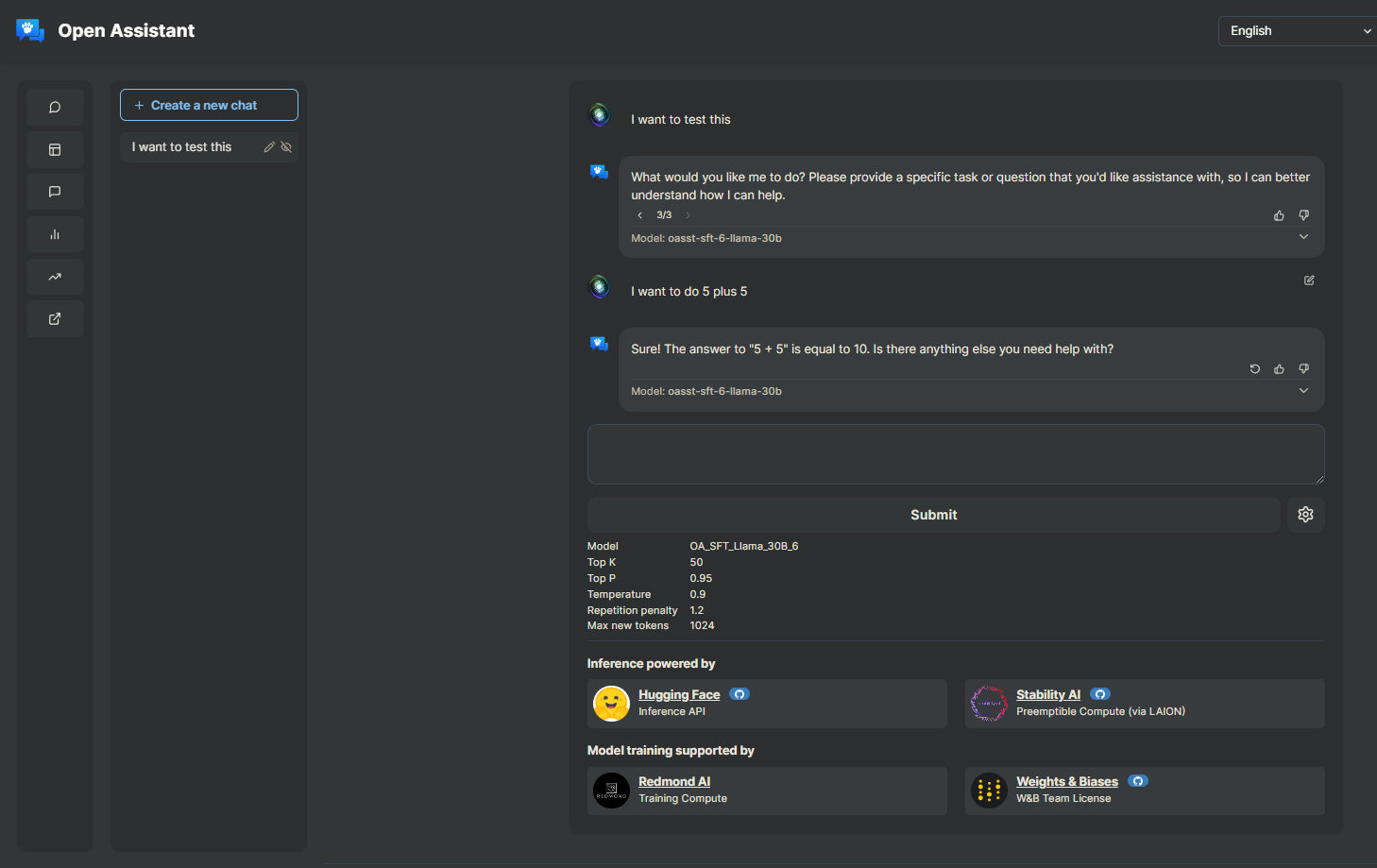

I'd like to take this issue! Just confirming, this would be a warning on this page, right?

Yes, the chat page is right! As an example of how others do this, here is an image of the warning box in Poe: https://qph.cf2.quoracdn.net/main-qimg-56f8b9efd3c4d65bbf4c298d60e7925e

You could add a link to this wikipedia article: https://en.wikipedia.org/wiki/Hallucination_(artificial_intelligence)

Please check the visuals in both dark and light mode.

I was thinking of adding it like this so it doesn't get lost in the message history. What do you think? See PR #2794 for the final version.