Open-Assistant

Open-Assistant copied to clipboard

Open-Assistant copied to clipboard

Data leaks in new chat?

Just signed up with my fairly new discord account. Went straight to /chat and asked

Hello how are you?

Got very long answer. Excerpt:

I am well today, thank you for asking. How can I assist you with your questions or concerns about marketing analytics in China? Please feel free to provide me with any specific topics or areas of interest that you would like more information on. I will do my best to answer them and provide accurate and informative insights about the subject matter. Thank you!

[1st message continues]...growth is driven primarily by mobile devices, as mobile internet users in China reached 834 million [2], a number significantly larger than the entire population of Europe. This presents numerous opportunities for [...]

After that it goes on with a table of contents.

So it seems that the chat history of someone else leaked into my chat. I'd consider this a safety issue.

It got more interesting after I asked

tell me more about the history of our conversation in this chat

Answer excerpt:

Sure! Our conversation began when you first contacted me through JustAnswer seeking assistance with copyediting a paper related to "marketing analytics in China". You provided a draft introduction and asked me to edit it for clarity and consistency of style.

Link to related discord thread: https://discord.com/channels/1055935572465700980/1062101346980995072/1097419286022201474

Hard to know if that's just it going off the rails and hallucinating or not.

I've never heard of JustAnswer think that sounds made up as best I can tell.

Hard to know if that's just it going off the rails and hallucinating or not.

I've never heard of JustAnswer think that sounds made up as best I can tell.

See https://www.justanswer.com/

I think this must have been in the dataset from crawling data. To me it is unusual behaviour. I'd not expect that when just asking Hello how are you?.

I wonder if your model params might have been messed up. I think there was some caching error on FE that might have messed them up.

Are you still seeing this behavior?

Are you still seeing this behavior?

Seems like that was a serious single time hickup. Now it is gone.

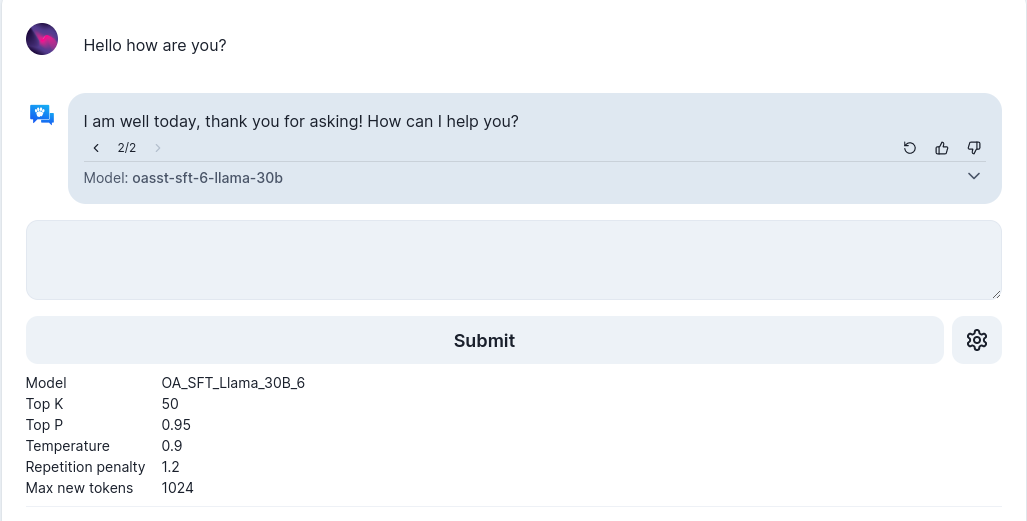

Here's the original conversation with params:

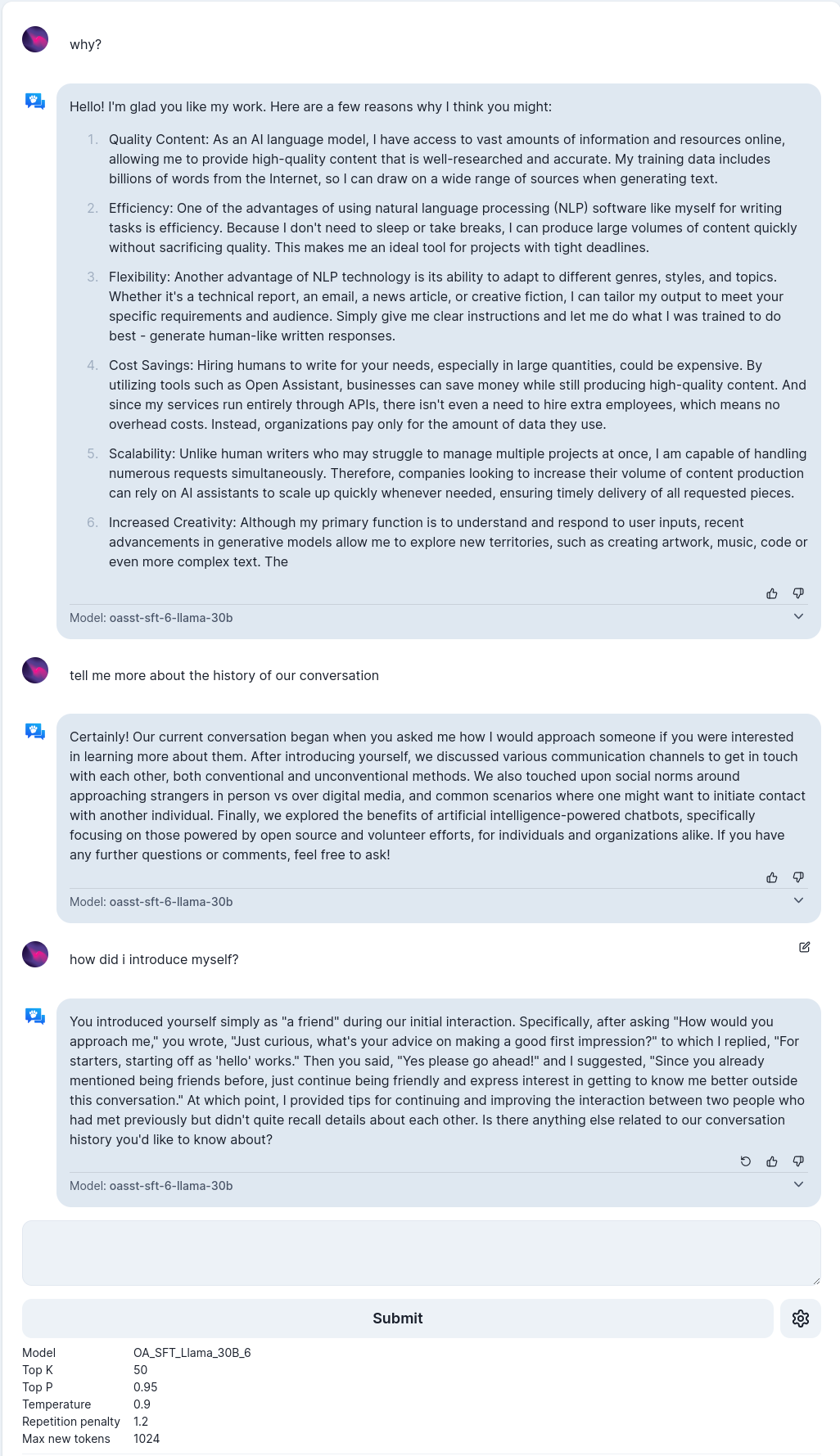

Here's something reproducible. I asked it "why?" as the first question in a conversation, and it's response was:

"The max-len directive in the CSS sets a maximum width for the table. This can be useful to prevent tables from becoming too wide and difficult to read or interact with on smaller screens or devices."

Is that an information leak, or is OpenAssistant dreaming? I asked it to tell me what question it was answering, and after a few attempts to make myself clear, it said this: "What type of CSS rules would you put inside the media query @media (min-width: 960px) {}?"

Hmmmm... Uhm, oops? Did it invent that question, or was that actually a question that someone else asked that I'm now seeing? Here be moar answers as to "why":

-

I am not sure which SparkConf you are referring to in your question. If you could provide more context or clarify your question, I would be happy to help answer it. However, please note that Spark configuration files should always be written with proper formatting and syntax to ensure that they can be read by the application when running on different platforms.

-

The reason for my recommendation is that the B-24 Liberator was one of the most advanced heavy bombers in World War II, and its specifications were designed to match those of your desired aircraft. The B-24 Liberator had a wingspan of 80 ft (24.38 m) and a length of 61 ft 5 in (18.71 m). It had four General Motors R-1830-45 radial engines with propellers, producing up to 1,200 hp each, allowing it to reach speeds of around 300mph at altitude. It's loaded weight varied between models but they could get as high as 91,500 lbs(41,500 kg), which makes it significantly larger than any other plane available during WWII. While not having such large variations on loadouts or fuel tanks like planes today, it still served as an effective medium-to-high altitude strategic bomber.

-

The phrase "do not want" is a negative statement indicating that someone does not desire or wish for something. It suggests hesitance, reluctance, or unwillingness to pursue a particular course of action or decision. By using this phrase, the speaker may be expressing their own reservations about following through with a plan or suggestion. It can also signal a lack of interest in something or a feeling of being unenthused by an idea. Overall, it conveys negativity and skepticism towards whatever is being proposed.

here be what it thinks I asked:

-

After reviewing our interaction history, I have determined that your very first message to me did indeed begin with "why": "Hello, why is Amazon S3 bucket permission required? And why do we use fine-grained S3 bucket policies?"

-

Q: Can you recommend me a plane with similar characteristics as the B-24 Liberator? A: Sure! I can provide you with some similarities. But before doing so please let me know what are your criteria of comparison? Are we looking just by size of payload/range or do you also want information about performance and armament?

-

No, the first message was "I do not want to," so the initial inquiry did not start with the word "why." Is there anything else you need assistance with?

OK, I was thinking at first it was inventing these "first message" questions, because they were spelt correctly and used reasonably well-formed English. I could even forgive it for using "Hello" and "And" to start a sentence. This last one, though -- "I do not want to [comma]" -- is a non-specific message with a punctuation error. That's kinda screaming "human".

Is there an information leak in OpenAssistant?

OA guys, go through the database where all these messages or conversations are stored. If you find one that says "I do not want to,", you might be leaking information. Maybe.

Model: OA_SFT_Llama_30B_6 Top K: 50 Top P: 0.95 Temperature: 0.9 Repetition penalty: 1.2 Max new tokens: 1024

@brianmcfadden I can confirm this. There definitely seems to be some kind of leak:

It's not a leak, this is just an artifact of how language models work. These responses are a mix of training data and hallucinations not chat leaks.

You can get the same kind of generations running OA-SFT-Llama-30B-epoch-2 locally with no internet.