kong

Issue when proxying Socket.IO with a custom URI

Summary

The client socket connection keeps cycling: it disconnects, reconnects, disconnects again, and so on.

Steps To Reproduce

- Websocket server: http://192.168.99.100:9696

- Kong proxy:
  - Server: http://192.168.99.100:9000
  - API:
    - upstream: http://192.168.99.100:9696
    - uris: /api/socket\.*
    - strip uris: yes

- Socket server:

      'use strict'
      const express = require('express'),
        app = express(),
        route = express.Router({strict: true}),
        http = require('http'),
        server = http.createServer(app),
        env = require('./env'),
        io = require('socket.io').listen(server, {origin: '*', path: `/socket.io`});

      app.use('', route)
      server.listen(6969);

      // Socket io
      io.sockets.on('connection', function(socket) {
        console.log('connected')
        socket.on('disconnect', function(token) {
          console.log('disconnect')
        });
      });

- Socket client:

      'use strict'
      const io = require('socket.io-client'),
        env = require('./env')

      const socket = io('http://192.168.99.100:9000', {path: '/api/socket/socket.io'})

      socket.on('connect', () => {
        console.log('connected')
      })

      socket.on('disconnect', () => {
        console.log('disconnected')
      })

Additional Details & Logs

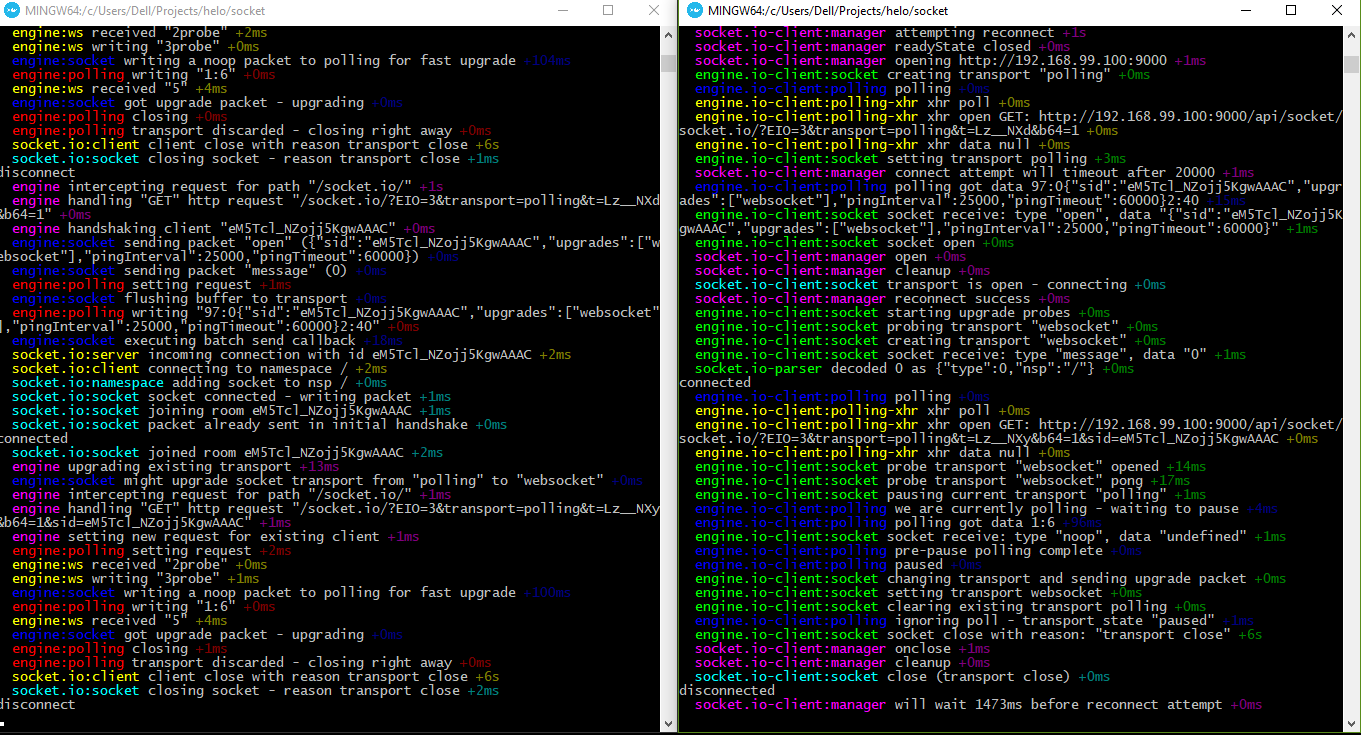

- In the picture, the left terminal is the server and the right is the client. The client log shows:

      engine.io-client:socket socket close with reason: "transport close" +6s
      socket.io-client:manager onclose +1ms
      socket.io-client:manager cleanup +0ms
      socket.io-client:socket close (transport close) +0ms
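To compare how long each connection survives against the proxy's configured timeouts, the disconnect cycle can be timed on the client. The following is a minimal sketch, not part of the original report: `trackConnectionLifetime` is a hypothetical helper, and an `EventEmitter` stands in for the socket.io-client socket so the snippet runs on its own. It assumes only that the socket emits `connect` and `disconnect` (with a reason), as in the client code above.

```javascript
'use strict'
const { EventEmitter } = require('events')

// Hypothetical helper (not part of socket.io): records how long each
// connection lasts between a 'connect' and the following 'disconnect'.
// `now` is injectable so the helper can be exercised without real timers.
function trackConnectionLifetime(socket, now = Date.now) {
  const lifetimes = []
  let connectedAt = null

  socket.on('connect', () => {
    connectedAt = now()
  })

  socket.on('disconnect', (reason) => {
    if (connectedAt === null) return // disconnect without a prior connect
    lifetimes.push({ reason, ms: now() - connectedAt })
    connectedAt = null
  })

  return lifetimes
}

// Stand-in for a socket.io-client socket, driven by a fake clock.
const fakeSocket = new EventEmitter()
let clock = 0
const lifetimes = trackConnectionLifetime(fakeSocket, () => clock)

fakeSocket.emit('connect')
clock = 6000
fakeSocket.emit('disconnect', 'transport close')

console.log(lifetimes)
```

With a real client, passing the `socket` returned by `io(...)` instead of `fakeSocket` should work the same way. If the recorded lifetimes cluster around one fixed value, that value is worth comparing with the timeouts configured on the proxy.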

I tried an Nginx proxy instead of Kong and it works fine: the client socket connection stays open without being closed. Nginx configuration:

    upstream websocket {
        server 192.168.99.100:8083;
    }

    server {
        listen 80;
        server_name localhost;

        access_log /var/log/nginx/websocket.access.log main;

        location / {
            proxy_pass http://websocket;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
        }

        location /sample.html {
            root /usr/share/nginx/html;
            index index.html index.htm;
        }

        error_page 500 502 503 504 /50x.html;

        location = /50x.html {
            root /usr/share/nginx/html;
        }
    }

@anhdd-savvycom Hi,

Thanks for reporting, we'll take a look at this as soon as we can manage.

@thibaultcha Do we have any updates on this issue? I like Kong, but due to this error I'm temporarily switching to pure Nginx for now.

Increasing the timeouts in API config fixed all issues with websockets for us. If they're too low the connections will be terminated.

Do we have any updates on this issue? The websocket proxying feature works in 0.11 CE, but not in 0.12+ CE.

@ziru I'm not up to date with this issue, but I don't think any of the websocket code changed between 0.11 and 0.12. What issue are you seeing exactly? It may be better to open another issue to discuss it. By the way, we are making some updates to the websocket proxying reference that may help clarify its use: https://github.com/Kong/getkong.org/pull/655

We are also improving usability for this feature in 0.13 (by accepting the ws and wss protocols in the Service's protocol attribute).

This is still an issue in my case.

Using:

- image: kong:1.0

- socket.io for the front end and back end

- the server runs on aiohttp

The socket keeps connecting, disconnecting, connecting, and so on. After some tests, the "Read timeout" parameter (in the service) seems to affect it: whenever I increased or decreased this value, the socket went "dead" after "Read timeout" amount of time. I tried setting it to "0" but got a schema validation error:

    [error] 31#0: *149 failed to run balancer_by_lua*: /usr/local/share/lua/5.1/kong/init.lua:618: bad read timeout
    stack traceback:
    [C]: in function 'error'
    /usr/local/openresty/lualib/ngx/balancer.lua:223: in function 'set_timeouts'
    /usr/local/share/lua/5.1/kong/init.lua:618: in function 'balancer'
    balancer_by_lua:2: in function <balancer_by_lua:1> while connecting to upstream, client: 172.18.0.1, server: kong, request: "GET /socket.io/?EIO=3&transport=polling........

Eventually I found this advice:

https://github.com/Kong/kong/issues/4186#issuecomment-453674065

It suggests using the KONG_NGINX_PROXY_PROXY_IGNORE_CLIENT_ABORT=on parameter, which I set in my Docker Compose file, so it looks like:

    kong:
      image: kong:1.0
      environment:
        - KONG_NGINX_PROXY_PROXY_IGNORE_CLIENT_ABORT=on
        - ....
      ...

And this works for me! I hope it saves someone some time. If anybody knows a better way to do this or can explain this behavior, you're welcome to share :)
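As a partial explanation of what that variable does: Kong maps environment variables of the form `KONG_NGINX_<BLOCK>_<DIRECTIVE>` to Nginx directives injected into the corresponding block of its generated configuration, so the setting above becomes `proxy_ignore_client_abort on;` in the proxy server block. The sketch below only illustrates that naming convention. `toNginxDirective` is a hypothetical helper, not Kong's own parser, and it handles only the `HTTP`, `PROXY`, and `ADMIN` prefixes.

```javascript
'use strict'

// Illustration of the naming convention only (an assumption-level sketch):
// KONG_NGINX_<BLOCK>_<DIRECTIVE>=<value>  ->  "<directive> <value>;" in <block>
const BLOCKS = { HTTP: 'http', PROXY: 'proxy server', ADMIN: 'admin server' }

// Hypothetical helper: returns the target block and the directive line,
// or null when the variable does not follow the convention.
function toNginxDirective(envName, value) {
  const match = /^KONG_NGINX_(HTTP|PROXY|ADMIN)_(.+)$/.exec(envName)
  if (!match) return null
  return {
    block: BLOCKS[match[1]],
    directive: `${match[2].toLowerCase()} ${value};`,
  }
}

const injected = toNginxDirective('KONG_NGINX_PROXY_PROXY_IGNORE_CLIENT_ABORT', 'on')
console.log(injected)
```

In Nginx, `proxy_ignore_client_abort` controls whether the connection to the proxied server is closed when the client closes its connection without waiting for a response. Why this helps with the disconnect cycle described here is not established in this thread.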

Hi,

Is there any update on this issue, or a workaround? I'm seeing the same thing: the connection doesn't stay established.

Regards.

I'm also having this issue and would like to contribute a possible clue for anybody stumbling on this. In my case, the reason behind the disconnection is the fact that KONG (or something between KONG and my browser) is stripping away the vital Connection: keep-alive and Keep-Alive: timeout=5 response headers. Socket IO does send a request header of Connection: keep-alive, but KONG seems to ignore it. In a different setup I have with Node JS & Express, these headers are correctly sent to the client.
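One way to check this observation is to capture the response headers once directly from the upstream and once through the proxy, then diff them. The sketch below is only an illustration with made-up sample data: `missingThroughProxy` is a hypothetical helper. It also flags which missing headers are hop-by-hop (per the list in RFC 2616, section 13.5.1). Such headers apply to a single connection and are generally not forwarded by proxies, so their absence alone may not indicate a fault.

```javascript
'use strict'

// Headers commonly treated as hop-by-hop (see RFC 2616, section 13.5.1).
const HOP_BY_HOP = new Set([
  'connection', 'keep-alive', 'proxy-authenticate', 'proxy-authorization',
  'te', 'trailer', 'transfer-encoding', 'upgrade',
])

// Hypothetical helper: lists headers present in the direct response but
// absent from the proxied one, comparing names case-insensitively.
function missingThroughProxy(directHeaders, proxiedHeaders) {
  const proxied = new Set(Object.keys(proxiedHeaders).map((h) => h.toLowerCase()))
  return Object.keys(directHeaders)
    .map((h) => h.toLowerCase())
    .filter((h) => !proxied.has(h))
    .map((h) => ({ header: h, hopByHop: HOP_BY_HOP.has(h) }))
}

// Made-up sample data, for illustration only.
const direct = {
  'Content-Type': 'application/octet-stream',
  Connection: 'keep-alive',
  'Keep-Alive': 'timeout=5',
}
const proxied = { 'Content-Type': 'application/octet-stream' }

const report = missingThroughProxy(direct, proxied)
console.log(report)
```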

There is also an issue reported on GitHub that may be worth tracking: https://github.com/Kong/kong/issues/3008

We're currently investigating this and will update here if we find anything.

Seeing this as well. Is there a formalized fix for this? This issue has been open for four years.

Hi everyone. I got the same error in my Kong API Gateway configuration. Is there any feedback on this issue?

Hi, has this problem been solved?

Is there any workaround for this problem?

Has this issue been fixed?

- The heartbeat interval of socket.io is 25s, see more: https://stackoverflow.com/questions/12815231/controlling-the-heartbeat-timeout-from-the-client-in-socket-io

- If you use Nginx to proxy ws, the default proxy_read_timeout is 60s, see more: https://nginx.org/en/docs/http/websocket.html?_ga=2.219808217.1349535884.1636598551-1257987705.1633943387

That's why Nginx works well with socket.io.

If you want to use Kong to proxy ws (based on the socket.io framework), set service.read_timeout to be greater than the heartbeat interval of socket.io (the value you actually set).
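The rule above can be sketched as a small check plus the Admin API request that would apply it. This is a hedged illustration, not an official recipe. `suggestReadTimeoutMs`, `isReadTimeoutSafe`, and `buildServicePatch` are hypothetical helpers, and the Admin URL `http://localhost:8001`, the service name `socket-service`, and the 5-second margin are all assumptions. It relies on Kong expressing a Service's `read_timeout` in milliseconds and on the Admin API accepting `PATCH /services/{name}`. The request is only built, never sent.

```javascript
'use strict'

// Hypothetical helper: pick a read_timeout (ms) that exceeds the
// Socket.IO heartbeat interval by a safety margin (assumed: 5 s).
function suggestReadTimeoutMs(pingIntervalMs, marginMs = 5000) {
  if (!(pingIntervalMs > 0)) throw new RangeError('pingIntervalMs must be positive')
  return pingIntervalMs + marginMs
}

// The condition stated in the comment above: the proxy must wait longer
// than the interval between heartbeats.
function isReadTimeoutSafe(readTimeoutMs, pingIntervalMs) {
  return readTimeoutMs > pingIntervalMs
}

// Build (but do not send) an Admin API request updating the Service.
// adminUrl and serviceName are placeholders for your own deployment.
function buildServicePatch(adminUrl, serviceName, readTimeoutMs) {
  return {
    method: 'PATCH',
    url: `${adminUrl}/services/${encodeURIComponent(serviceName)}`,
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ read_timeout: readTimeoutMs }),
  }
}

const heartbeatMs = 25000 // the 25 s interval cited above
const request = buildServicePatch(
  'http://localhost:8001',
  'socket-service',
  suggestReadTimeoutMs(heartbeatMs)
)
console.log(request)
```

The resulting object can be passed to any HTTP client. If the heartbeat has been changed on the Socket.IO server (for example through its `pingInterval` option), use that value instead of 25000.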

> Increasing the timeouts in API config fixed all issues with websockets for us. If they're too low the connections will be terminated.

I think this is the solution.

Closing this due to lack of activity. Please re-open if needed.