Issues with some regression models [tbc]

using MLJ, RDatasets, DataFrames;

# Load every model in the given list.
function load_m(model_list)
    for model in model_list
        load(model.name, pkg=model.package_name, verbosity=0)
    end
end

# Load all models ONCE!

drop_pkg = ["NaiveBayes", "ScikitLearn"];

models(m -> m.package_name ∉ drop_pkg) |> load_m

# One-hot encode every column of `d`: one 0/1 indicator column per (column, value) pair.
function one_hot_encode(d::DataFrame)
    encoded = DataFrame()
    for col in names(d), val in unique(d[!, col])
        lab = string(col) * "_" * string(val)
        encoded[!, Symbol(lab)] = ifelse.(d[!, col] .== val, 1, 0)
    end
    return encoded
end

# AZ: one-hot encode every non-numeric column (e.g. strings), then coerce discrete columns to Continuous.
function AZ(X)
    sch = schema(X)
    tn = [Int, Float16, Float32, Float64]   # element types that are left as they are
    vs = Symbol[]                           # names of the columns to one-hot encode
    for (name, type) in zip(sch.names, sch.types)
        if type ∉ tn
            push!(vs, name)
        end
    end
    Xd = DataFrame(X)
    X_ohe = one_hot_encode(Xd[:, vs])
    Xd = hcat(X_ohe, select(Xd, Not(vs)))
    Xd = coerce(Xd, autotype(Xd, :discrete_to_continuous))
    return Xd
end

# Train & score.
# NOTE: if we do target engineering, we need to transform y back (via `invtrans`) before comparing scores.
function train_m(m, X, y, train, test, pr, meas; invtrans=identity)
    t1 = time_ns()
    # Only the bare name is used from here on; `m.package_name` is not consulted when building the model.
    m = m.name
    println(m)
    if m == "XGBoostRegressor"
        mdl = eval(Meta.parse("$(m)(num_round=500)"))
    elseif m == "LGBMRegressor"
        mdl = eval(Meta.parse("$(m)(num_iterations = 1_000, min_data_in_leaf=10)"))
    elseif m == "EvoTreeRegressor"
        mdl = eval(Meta.parse("$(m)(nrounds = 1500)"))
    else
        mdl = eval(Meta.parse("$(m)()"))
    end
    mach = machine(mdl, X, y)
    fit!(mach, rows=train, verbosity=0)
    ŷ = pr(mach, rows=test)
    ŷ = invtrans.(ŷ)
    y = invtrans.(y)
    if meas == rmsl
        s = meas(abs.(ŷ), abs.(y[test]))   # abs.() for rmsl on the Ames data
    else
        s = meas(ŷ, y[test])
    end
    t2 = time_ns()
    # Returns [model name, score, elapsed seconds].
    return [m, round(s, sigdigits=5), round((t2 - t1) / 1.0e9, sigdigits=5)]
end

# Train every matching model on (X, y) and return a table sorted by score (ascending).
function f(X, y, train, test, pr, meas; pr_type = [:deterministic, :probabilistic])
    X = AZ(X)
    dropm = ["ARDRegressor"]
    drop_pkg = ["NaiveBayes", "ScikitLearn"]
    m_match = models(matching(X, y),
                     x -> x.prediction_type ∈ pr_type,
                     x -> x.package_name ∉ drop_pkg,
                     x -> x.name ∉ dropm)
    sc = Array{Any}(undef, size(m_match, 1), 3)
    for (i, m) in enumerate(m_match)
        sc[i, :] .= try
            train_m(m, X, y, train, test, pr, meas)
        catch
            # Any failure is recorded as a sentinel score and time of 10_000; the error itself is discarded.
            m.name, 10_000, 10_000
        end
    end
    p = sortperm(sc[:, 2])   # row order by score
    df = DataFrame(Model = sc[p, 1], SCORE = sc[p, 2], Time = sc[p, 3])
    return df
end

Now apply it to a dataset, e.g. the Boston data:

X, y = @load_boston;

train, test = partition(eachindex(y), .7, rng=333);

df = f(X, y, train, test, predict, rmsp, pr_type = [:deterministic])

The model LinearRegressor doesn't work.

Note: I dropped all models from the packages ["NaiveBayes", "ScikitLearn"].

> The model LinearRegressor doesn't work

Please report the error, stack trace, and ideally a minimum working example.
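One way to get at the error and stack trace: the `catch` in `f` above replaces any failure with a sentinel score, so the exception itself is never shown. Below is a minimal sketch, assuming a hypothetical helper `try_model` (not part of MLJ) and stand-in jobs instead of real models, that keeps the message and backtrace for each failing model:

```julia
# Hypothetical helper (not part of MLJ): run `work()` for one model and, if it
# throws, keep the error message and stack trace instead of discarding them.
function try_model(work, name)
    try
        return (name = name, result = work(), error = nothing)
    catch err
        # sprint + showerror renders the exception together with its backtrace
        msg = sprint(showerror, err, catch_backtrace())
        return (name = name, result = nothing, error = msg)
    end
end

# Stand-in jobs; these closures are placeholders, not real model fits.
ok  = try_model(() -> 1 + 1, "GoodModel")
bad = try_model(() -> error("boom"), "BadModel")

println(ok.result)
println(bad.error)   # error message followed by the stack trace
```

In the script, the stand-in closure would presumably become `() -> train_m(m, X, y, train, test, pr, meas)`, and the stored `error` string could be printed for the models that currently end up with a score of 10_000.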

When I run my code to train all (deterministic regression) models the only two (out of 50) that give errors are:

MLJLinearModels.LinearRegressor() & ScikitLearn.LinearRegressor()

When I run the code separately (restarting Julia):

using MLJ

X, y = @load_boston

train, test = partition(eachindex(y), .7, rng=333);

julia> @load LinearRegressor

ERROR: LoadError: ArgumentError: Ambiguous model name. Use pkg=... .

The model LinearRegressor is provided by these packages:

["MLJLinearModels", "GLM", "ScikitLearn"].

Stacktrace:

[1] info(::String; pkg::Nothing) at /Users/AZevelev/.julia/packages/MLJModels/uSKTW/src/model_search.jl:80

[2] load(::String; pkg::Nothing, kwargs::Base.Iterators.Pairs{Symbol,Any,Tuple{Symbol,Symbol},NamedTuple{(:modl, :verbosity),Tuple{Module,Int64}}}) at /Users/AZevelev/.julia/packages/MLJModels/uSKTW/src/loading.jl:81

[3] @load(::LineNumberNode, ::Module, ::Any, ::Vararg{Any,N} where N) at /Users/AZevelev/.julia/packages/MLJModels/uSKTW/src/loading.jl:125

in expression starting at none:1

It appears that three packages provide LinearRegressor(): ["MLJLinearModels", "GLM", "ScikitLearn"]. The GLM one is probabilistic; the other two are deterministic.
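To illustrate why a bare name is not enough here, a small sketch in plain Julia. The entries are hand-written stand-ins that mirror the `name`, `package_name` and `prediction_type` fields used in the script above; they are not queried from MLJ:

```julia
# Stand-in metadata (hand-written, not queried from MLJ).
entries = [
    (name = "LinearRegressor", package_name = "MLJLinearModels", prediction_type = :deterministic),
    (name = "LinearRegressor", package_name = "GLM",             prediction_type = :probabilistic),
    (name = "LinearRegressor", package_name = "ScikitLearn",     prediction_type = :deterministic),
]

# Keyed by bare name, later entries overwrite earlier ones: only one survives.
by_name = Dict(e.name => e for e in entries)

# Keyed by (name, package), every entry stays addressable.
by_key = Dict((e.name, e.package_name) => e for e in entries)

println(length(by_name))   # 1
println(length(by_key))    # 3
println(by_key[("LinearRegressor", "GLM")].prediction_type)   # probabilistic
```

Anything that looks a model up by `name` alone can only reach one of the three, which is the same ambiguity `@load` complains about.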

#

@load LinearRegressor pkg = MLJLinearModels

mdl = LinearRegressor()

mach = machine(mdl, X, y)

fit!(mach, rows=train, verbosity=0)

ŷ = predict(mach, rows=test)

rmsp(ŷ, y[test])

#

@load LinearRegressor pkg = ScikitLearn

mdl = LinearRegressor()

#mdl = ScikitLearn.LinearRegressor()

mach = machine(mdl, X, y)

fit!(mach, rows=train, verbosity=0)

ŷ = predict(mach, rows=test)

rmsp(ŷ, y[test])

For reasons I don't yet understand, LinearRegressor works when run on its own, but not through my framework that trains all models (it used to work fine there, though...).

I'm gonna have to think more about this.

A few other points:

- this was discussed before: multiple models w/ the same name create problems.

Perhaps: MLJLinearModels.LinearRegressor(), ScikitLearn.LinearRegressor(), GLM.LinearRegressor()

- yesterday @OkonSamuel made ScikitLearn.jl work on mac again. We are lucky to have him onboard.

Wait, now they're working (I think you have to restart Juno a couple of times...). It's no longer working; this may be specific to my setup, so I'll have to work on it some more.

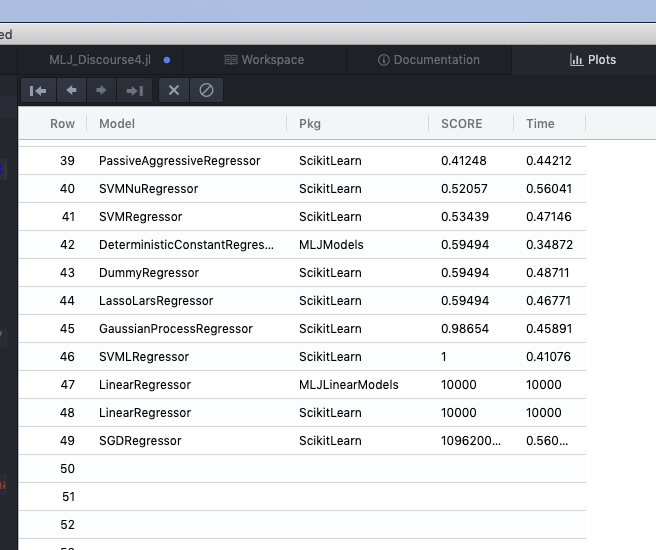

Btw, the exercise above provides a sanity check:

- the top-5 models are all tree ensembles, four of them boosted trees

│ Row │ Model │ Pkg │ SCORE │ Time │

│ │ Any │ Any │ Any │ Any │

├─────┼──────────────────────────────────────┼───────────────────┼───────────┼───────────┤

│ 1 │ EvoTreeRegressor │ EvoTrees │ 0.13985 │ 6.662 │

│ 2 │ GradientBoostingRegressor │ ScikitLearn │ 0.14363 │ 0.65507 │

│ 3 │ XGBoostRegressor │ XGBoost │ 0.14375 │ 1.7388 │

│ 4 │ ExtraTreesRegressor │ ScikitLearn │ 0.15092 │ 0.68245 │

│ 5 │ LGBMRegressor │ LightGBM │ 0.15601 │ 3.2093 │

│ 6 │ RandomForestRegressor │ ScikitLearn │ 0.16037 │ 0.0062503 │

│ 7 │ RandomForestRegressor │ DecisionTree │ 0.16325 │ 0.63957 │

- Huber models give the same score

│ 11 │ HuberRegressor │ MLJLinearModels │ 0.22567 │ 3.2882 │

│ 12 │ HuberRegressor │ ScikitLearn │ 0.22567 │ 0.011471 │

- (Deterministic) Linear models give the same score (GLM is probabilistic & omitted here)

│ 18 │ LinearRegressor │ MLJLinearModels │ 0.25235 │ 0.17905 │

│ 19 │ LinearRegressor │ ScikitLearn │ 0.25235 │ 0.0013115 │

- three Ridge models give the same score

│ 28 │ RidgeRegressor │ MLJLinearModels │ 0.26502 │ 2.423 │

│ 29 │ RidgeRegressor │ MultivariateStats │ 0.26502 │ 0.0025984 │

│ 30 │ RidgeRegressor │ ScikitLearn │ 0.26502 │ 0.0054935 │

- the two Lasso models give the same score, as do the two ElasticNet models:

│ 36 │ LassoRegressor │ MLJLinearModels │ 0.32835 │ 0.78851 │

│ 37 │ LassoRegressor │ ScikitLearn │ 0.32835 │ 0.054286 │

│ 38 │ ElasticNetRegressor │ MLJLinearModels │ 0.32837 │ 1.9358 │

│ 39 │ ElasticNetRegressor │ ScikitLearn │ 0.32837 │ 0.008472 │

- Two constant regressors give the same score:

│ 43 │ DeterministicConstantRegressor │ MLJModels │ 0.59494 │ 0.3227 │

│ 44 │ DummyRegressor │ ScikitLearn │ 0.59494 │ 0.48613 │

- some models have suboptimal default hyperparameters:

│ 45 │ LassoLarsRegressor │ ScikitLearn │ 0.59494 │ 0.42588 │

│ 46 │ PassiveAggressiveRegressor │ ScikitLearn │ 0.6607 │ 0.45705 │

│ 47 │ GaussianProcessRegressor │ ScikitLearn │ 0.98654 │ 0.48546 │

│ 48 │ SVMLRegressor │ ScikitLearn │ 1.0 │ 0.46353 │

│ 49 │ SGDRegressor │ ScikitLearn │ 3.3938e12 │ 0.47502 │
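The "same name, same score" part of this sanity check could also be automated. A rough sketch in plain Julia, using a few rows copied from the table above; the helper `agree` and its tolerance are assumptions for illustration:

```julia
# Rows copied from the results table above: (model, package, score).
rows = [
    ("HuberRegressor",  "MLJLinearModels",   0.22567),
    ("HuberRegressor",  "ScikitLearn",       0.22567),
    ("LinearRegressor", "MLJLinearModels",   0.25235),
    ("LinearRegressor", "ScikitLearn",       0.25235),
    ("RidgeRegressor",  "MLJLinearModels",   0.26502),
    ("RidgeRegressor",  "MultivariateStats", 0.26502),
    ("RidgeRegressor",  "ScikitLearn",       0.26502),
]

# Collect the scores reported for each model name.
scores = Dict{String,Vector{Float64}}()
for (model, pkg, score) in rows
    push!(get!(scores, model, Float64[]), score)
end

# Hypothetical check: all implementations of a name agree up to a relative tolerance.
agree(v; rtol = 1e-4) = all(isapprox(s, first(v); rtol = rtol) for s in v)

for model in sort(collect(keys(scores)))
    println(model, " => ", agree(scores[model]))
end
```

A name whose implementations disagree would be a candidate for a closer look at defaults (e.g. regularisation strength) rather than proof of a bug.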

@tlienart I'm ready to start working on a tutorial.

@azev77 Cool

Nice!

Re multiple models with same name, please add any wishes to the discussion here: https://github.com/alan-turing-institute/MLJModels.jl/issues/242#issuecomment-647755073 . May get to this soon.

@azev77 So can we close this?

@ablaom can you give me a bit more time to get to the bottom of this?

Sure - no worries! Appreciate the investigation.

@azev77 Still unresolved?

@ablaom can we close this issue once we finish creating the tutorial? https://github.com/alan-turing-institute/DataScienceTutorials.jl/issues/47

@azev77 this is unrelated. If you can run the script, please kindly close this issue, as its title is fairly ominous! Thanks :)

@tlienart

- I have a program to automatically train all relevant models on a given dataset

- Previously it worked for all models

- One day a few of the models stopped working in my script, so I opened this issue.

- Those models still work individually, outside my script. Not sure why.

- Once we get to the bottom of this & publish the tutorial, I’d feel more comfortable closing this issue.

You’re right, the title of this issue is ominous/misleading/unfair to MLJ. I learned this trick from our leader (potus)...

Yes, that analysis is fine; however:

- the tutorial will take some time to get integrated

- the present issue is currently not super helpful because it doesn't point to a clear problem that we could open a PR for

So I suggest

- closing this for now

- working on your suggestion in DST (which I'm very grateful for)

- flagging specific issues from there

but it's fine; I'll just change the title here.