PENet_ICRA2021

PENet_ICRA2021 copied to clipboard

PENet_ICRA2021 copied to clipboard

How to back project pixel-wise .png depth image to .bin point cloud?

Hi, kirkzhengYW, I think you can have a look at this work "https://github.com/mileyan/Pseudo_Lidar_V2/blob/master/src/preprocess/generate_lidar_from_depth.py". I hope it will help you.

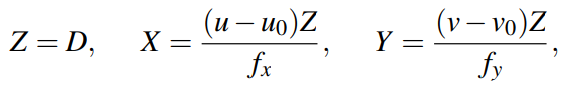

Thanks for your interest! You could calculate the 3D coordinate via:

and transform them back from the camera's coordinate to the LiDaR's coordinate. Be careful of the transformation matrices. The extrinsic matrix and extrinsic matrix are introduced in [Geiger A, Lenz P, Stiller C, et al. Vision meets robotics: The kitti dataset[J]. The International Journal of Robotics Research, 2013, 32(11): 1231-1237.].

And you might also refer to this question.

and transform them back from the camera's coordinate to the LiDaR's coordinate. Be careful of the transformation matrices. The extrinsic matrix and extrinsic matrix are introduced in [Geiger A, Lenz P, Stiller C, et al. Vision meets robotics: The kitti dataset[J]. The International Journal of Robotics Research, 2013, 32(11): 1231-1237.].

And you might also refer to this question.

Hi, Laihu08. Thank you for your attention and reply!I still have a question: is the input .npy depth map or disparity map in rectified cam coordinate system and calibrated back to velodyne coordinate system before back propagation in the provided script?

Thank you for your reply @JUGGHM . And I think according to the equation you provided, u0 and v0 need to be adjusted accordingly due to cropping image size to multiple of 32 as network input (output depth images are the same size ), right?https://github.com/JUGGHM/PENet_ICRA2021/issues/10

That's right. And you could refer to the code in model.py, basic.py and CoordConv.py for calculating coordinates.

Thank you @JUGGHM !

Hi, @KirkZhengYW do you have code to project dense depth map (.png) to Point cloud coordinate (.bin), Thanks in advance!

@Laihu08, I made some modifications due to the work you provide at https://github.com/KirkZhengYW/Pseudo_Lidar_V2/tree/master/src/preprocess. Hope it will help.

Hi, @KirkZhengYW, saw your code, in that https://github.com/KirkZhengYW/Pseudo_Lidar_V2/blob/b2c35951e7a17ea3a6594fac57c95ab350b08c1b/src/preprocess/generate_lidar_from_depth.py#L37 , from where can we get Calib file like you mentioned here ! if you successfully run this modification, can you send .bin files for respective .png files. Thank you very much for your effort!

Thank you for your question @Laihu08! The Calib files are in KITTI raw data http://www.cvlibs.net/datasets/kitti/raw_data.php from where you can see that each sequence has its calibration file including calib_cam_to_cam.txt and calib_velo_to_cam.txt that are needed in the code. Run generate_lidar_from_depth.py for .bin point cloud. If you want to back project predicted depth map, make sure that you have removed invalid pixel in .png depth map where no valid guiding depth value exist like sky.

Hi, @KirkZhengYW Thank you very much for your quick reply, Can you tell me how to remove invalid pixel in .png depth map ? Thanks in advance

Hi, @Laihu08, Annotated depth map can be taken as reference.

ok thank you very much, In https://github.com/KirkZhengYW/Pseudo_Lidar_V2/blob/b2c35951e7a17ea3a6594fac57c95ab350b08c1b/src/preprocess/generate_lidar_from_depth.py#L40 mentioned "kitti_09_30_20_prediction_validpix_02" do you mean annotated depth map here ?

I have one more question, is it possible to estimate depth from 3D object detection dataset like i mentioned in attached figure, in that we have image, calib and pointcloud(.bin) but in depth completion dataset we have front view (sparse depth map) in .png and remaining all are same. I would like to estimate depth and going to use in 3D object detection in same dataset. Please guide me to do this process. Thank you

ok thank you very much, In https://github.com/KirkZhengYW/Pseudo_Lidar_V2/blob/b2c35951e7a17ea3a6594fac57c95ab350b08c1b/src/preprocess/generate_lidar_from_depth.py#L40 mentioned "kitti_09_30_20_prediction_validpix_02" do you mean annotated depth map here ? I have one more question, is it possible to estimate depth from 3D object detection dataset like i mentioned in attached figure, in that we have image, calib and pointcloud(.bin) but in depth completion dataset we have front view (sparse depth map) in .png and remaining all are same. I would like to estimate depth and going to use in 3D object detection in same dataset. Please guide me to do this process. Thank you

Hi! You could refer to this question for combining depth completion and 3D object detection.

Hi, @JUGGHM I couldn't understand from mentioned question. can you explain me how its related to my request ? Thank you

Hi, @JUGGHM I couldn't understand from mentioned question. can you explain me how its related to my request ? Thank you

Oh I think I misunderstood your question. Now I think you could directly project the .bin files into a front-viewed sparse depth map and complete it, what's the difficulty?

yeah, but PENET takes only .png format and i saw your code named bin2png but in that code the input is from Kitti_Raw. i want to do depth completion 3D object detection dataset. can you provide me code to directly taking input from .bin not .png ? Thank you very much for taking my question in to consideration !

ok thank you very much, In https://github.com/KirkZhengYW/Pseudo_Lidar_V2/blob/b2c35951e7a17ea3a6594fac57c95ab350b08c1b/src/preprocess/generate_lidar_from_depth.py#L40 mentioned "kitti_09_30_20_prediction_validpix_02" do you mean annotated depth map here ?

I have one more question, is it possible to estimate depth from 3D object detection dataset like i mentioned in attached figure, in that we have image, calib and pointcloud(.bin) but in depth completion dataset we have front view (sparse depth map) in .png and remaining all are same. I would like to estimate depth and going to use in 3D object detection in same dataset. Please guide me to do this process. Thank you

Hi, @Laihu08 ! The "kitti_09_30_20_prediction_validpix_02" directory contains the depth maps whose invalid depth pixels have been removed. You can also find code in Pseudo_Lidar_V2 work for projecting 3D object detection .bin point cloud to .png front view depth map in generate_depth_map.py.

Hi, @KirkZhengYW Thank you very much for your guide. just now i generated depth map by the code from Pseudo_Lidar_V2 for 3D object detection but it saved in .npy format, is that possible to save in .png ? if so please provide me the code lines to do that. Thanks in advance!

Hi, @Laihu08. I have tried to utilize functions in https://github.com/JUGGHM/PENet_ICRA2021/blob/main/vis_utils.py#L106 to save .png depth map but some other problems come up: ①

’img‘ has no attribute named cpu()

@JUGGHM , would you tell me how to solve it correctly?

I modified img = np.squeeze(img.data.cpu().numpy()) to img = np.squeeze(img) and succeded in saving .png depth map, but:

② the depth maps are in white background.

I haven't solved ② problem yet, I think we can explore the solution together @Laihu08 .

Sure, I would love to solve the problem together ! Let me check with that code. Thank you

Hi, @KirkZhengYW did you use the function from https://github.com/JUGGHM/PENet_ICRA2021/blob/main/vis_utils.py#L106 in Pseudo_Lidar_V2 for generate_depth_map.py ? if so please send me that code, I will look into that! Thank you

Hi, @Laihu08. I have tried to utilize functions in https://github.com/JUGGHM/PENet_ICRA2021/blob/main/vis_utils.py#L106 to save .png depth map but some other problems come up: ①

’img‘ has no attribute named cpu()

@JUGGHM , would you tell me how to solve it correctly? I modified

img = np.squeeze(img.data.cpu().numpy())toimg = np.squeeze(img)and succeded in saving .png depth map, but: ② the depth maps are in white background. I haven't solved ② problem yet, I think we can explore the solution together @Laihu08 .

Hi! You could check whether the img variable is a pytorch tensor first.

@Laihu08, I made some modifications due to the work you provide at https://github.com/KirkZhengYW/Pseudo_Lidar_V2/tree/master/src/preprocess. Hope it will help. Hi, @KirkZhengYW Thank you for providing modified code to me,

- Do you successfully converted the .png to the .bin file by running your modified code?

- I have little doubt about GDC provided in pseudo-Lidar V2, https://github.com/mileyan/Pseudo_Lidar_V2/blob/aaf375b1050c602cb22bbe78113d0c702669e08b/gdc/ptc2depthmap.py#L36 do you know what Split file means? I don't what they referring to! Thanks in advance!

Thanks for your interest! You could calculate the 3D coordinate via:

and transform them back from the camera's coordinate to the LiDaR's coordinate. Be careful of the transformation matrices. The extrinsic matrix and extrinsic matrix are introduced in [Geiger A, Lenz P, Stiller C, et al. Vision meets robotics: The kitti dataset[J]. The International Journal of Robotics Research, 2013, 32(11): 1231-1237.]. And you might also refer to this question.

Hi, @JUGGHM Can you tell from where you got this formula? It will be useful for me to understand Thanks!

Thanks for your interest! You could calculate the 3D coordinate via:

and transform them back from the camera's coordinate to the LiDaR's coordinate. Be careful of the transformation matrices. The extrinsic matrix and extrinsic matrix are introduced in [Geiger A, Lenz P, Stiller C, et al. Vision meets robotics: The kitti dataset[J]. The International Journal of Robotics Research, 2013, 32(11): 1231-1237.]. And you might also refer to this question.

Hi, @JUGGHM Can you tell from where you got this formula? It will be useful for me to understand Thanks!

Hi! It is derived from the camera projection model.

Hi, @JUGGHM can you tell me what is D, u,v, uo,vo,fx and fy means ? Thanks in advance