lmdeploy

lmdeploy copied to clipboard

lmdeploy copied to clipboard

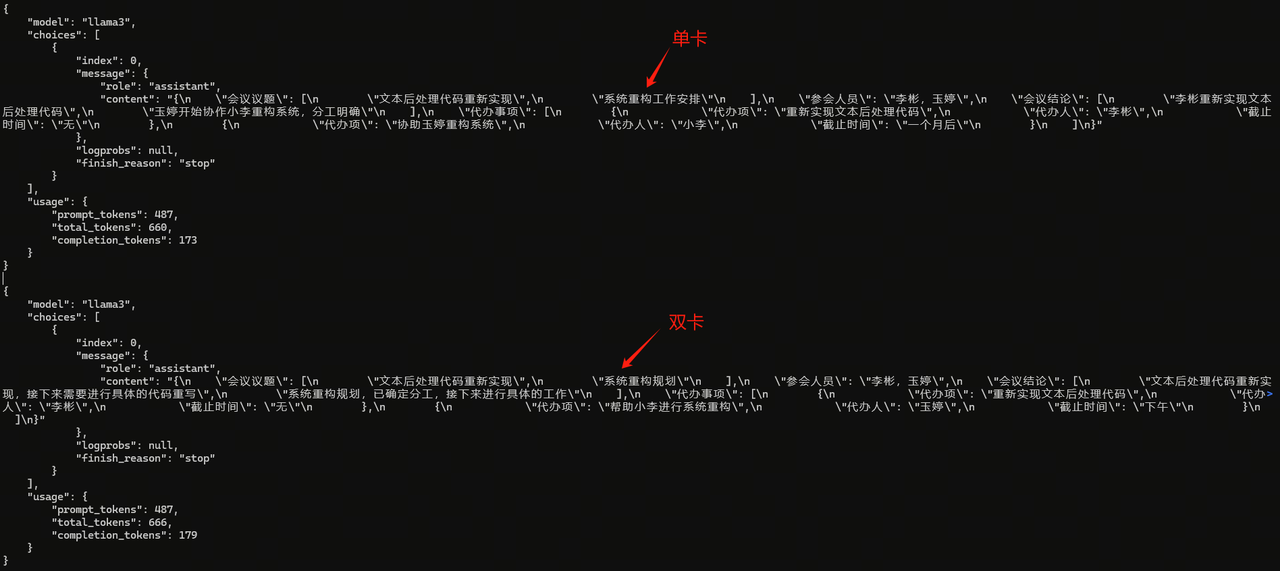

[Bug] Lmdeploy LLM Llama3在4090单卡和双卡上的推理结果不一致

Checklist

- [X] 1. I have searched related issues but cannot get the expected help.

- [X] 2. The bug has not been fixed in the latest version.

- [X] 3. Please note that if the bug-related issue you submitted lacks corresponding environment info and a minimal reproducible demo, it will be challenging for us to reproduce and resolve the issue, reducing the likelihood of receiving feedback.

Describe the bug

docker开启服务,turbomind推理框架,llama3-8b微调模型,未量化,在4090单卡和双卡上的推理结果不一致

Reproduction

-

模型转化 单卡:lmdeploy convert llama3 /path/origin_model --model-format hf --tp 1 --dst-path /path/converted_model 双卡:lmdeploy convert llama3 /path/origin_model --model-format hf --tp 2 --dst-path /path/converted_model

-

服务部署 docker run -d --name lmdeploy_server --gpus all

-v /home/xxx:/root/xxx

-p 9090:9090

--ipc=host

openmmlab/lmdeploy:v0.4.2-post3

lmdeploy serve api_server /root/xxx/converted_model --server-port 9090 -

请求调用 curl -i -X POST

-H "Content-Type:application/json"

-d

'{ "model":"llama3", "messages":[ { "role":"system", "content":"假设你是一个智能座舱的会议纪要助手,能根据用户的电话会议对话抽取出一些纪要。\n你的输入是会议对话文本,期望输出的会议纪要包括:会议议题、参会人员、会议结论、代办事项,其中代办事项包括具体的内容、代办人以及截止时间。如果有多个会议议题和代办事项,请分开描述。每条会议议题、代办事项的内容需精简,字数不超过50。" }, { "role":"user", "content":"会议对话如下所示:\n{"time": "2023.09.12.08:35:30", "user": "李彬", "query": "文本后处理这块代码需要重新实现"}\n{"time": "2023.09.12.08:36:35", "user": "李彬", "query": "这块我来搞吧,你手里还有其他的活吗?"}\n{"time": "2023.09.12.08:36:55", "user": "玉婷", "query": "我在做系统重构,工作量大概一个月"}\n{"time": "2023.09.12.08:37:23", "user": "李彬", "query": "重构工作量挺大的,我让小李帮你一起做"}\n{"time": "2023.09.12.08:37:44", "user": "玉婷", "query": "行,下午我找他沟通一下,我们分一下工"}\n\n请严格按照下面的格式输出会议纪要:\n{\n "会议议题": [\n "xx",\n "xx"\n ],\n "参会人员": "xx,xx",\n "会议结论": [\n "xx",\n "xx"\n ],\n "代办事项": [\n {\n "代办项": "xx",\n "代办人": "xx",\n "截止时间": "xx"\n },\n {\n "代办项": "xx",\n "代办人": "xx",\n "截止时间": "xx"\n }\n ]\n}" } ], "temperature":1, "top_k": 1, "stream":false, "user":"fc33fe12-b346-4c36-bccd-691518c36166" }'

'http://xxx:9090/v1/chat/completions'

Environment

GPU:4090

CUDA Version:12.2

Docker Image:https://hub.docker.com/r/openmmlab/lmdeploy/tags

Docker Tag:v0.4.2-post3

Error traceback

No response

temperature 为 1 本来就是很随机的啊,你改成 0.001 试试。

temperature 为 1 本来就是很随机的啊,你改成 0.001 试试。

temperature 为 1,就是使用默认的softmax,top_k = 1,推理结果应该没有随机性

@lzhangzz @lvhan028 请问这个问题有进展吗?

TP 数量影响 Linear 层在 k 方向上的并发度,会造成累加顺序的不同。浮点加法不满足结合律,不同的累加顺序的结果会有细微的差别。

This issue is marked as stale because it has been marked as invalid or awaiting response for 7 days without any further response. It will be closed in 5 days if the stale label is not removed or if there is no further response.

This issue is closed because it has been stale for 5 days. Please open a new issue if you have similar issues or you have any new updates now.