pyrosm

Add possibility to crop PBF and save to disk

For processing very large PBF files, it is better to let the user first crop the PBF into a smaller chunk and save that to disk (i.e. mimic what can be done with Osmosis). This should also be relatively fast with Cython.
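For reference, the Osmosis workflow being mimicked can be driven from Python. The following is only a sketch: the helper name `osmosis_crop_command` is hypothetical, the coordinates are illustrative placeholders, and it assumes the `osmosis` executable is installed and on the PATH. It only builds the command and does not run it.

```python
import shlex


def osmosis_crop_command(src, dst, left, bottom, right, top):
    """Build the argv for an Osmosis bounding-box crop (does not execute it)."""
    if not (left < right and bottom < top):
        raise ValueError("expected left < right and bottom < top")
    return [
        "osmosis",
        "--read-pbf", f"file={src}",
        "--bounding-box",
        f"left={left}", f"bottom={bottom}", f"right={right}", f"top={top}",
        "--write-pbf", f"file={dst}",
    ]


# Illustrative coordinates only; substitute the real extent of the area of interest.
cmd = osmosis_crop_command("kanto-latest.osm.pbf", "tokyoMain.osm.pbf",
                           139.55, 35.52, 139.92, 35.82)
print(shlex.join(cmd))
```

The resulting list could then be passed to `subprocess.run(cmd, check=True)` to produce the cropped file.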

As a concrete example of this feature (which I already would love to have), the OSM PBF file for my relevant region is all of Kanto (which is 7 prefectures including a ton of remote islands), but I am only processing central Tokyo, which is only about 2% of the land area (although, maybe half the road nodes).

It would be great if I could isolate my region of interest, then export the result to a new PBF like...

thisAreaData = pyrosm.OSM('kanto-latest.osm.pbf', bounding_box=boundingPolygon)

thisAreaData.toPBF('tokyoMain.osm.pbf')

It would be even more awesome if I could convert it into a NetworkX graph, make changes like adding links and attributes, and then save it as a PBF. If I could do that, then I could use pyrosm and the PBF to extract ROIs in my final API instead of just the data augmentation step. Something like:

import networkx as nx

nodes, edges = thisAreaData.get_network(nodes=True, network_type="walking")

thisWalkingGraph = thisAreaData.to_graph(nodes, edges, graph_type="networkx", osmnx_compatible=False, retain_all=True)

thisWalkingGraph = nx.DiGraph(thisWalkingGraph)

# Drop edge attributes that are not needed downstream
edgeAttributesToDelete = ['access', 'name', 'ref', 'service', 'surface', 'tracktype', 'timestamp', 'version', 'tags', 'osm_type', 'key']

for n1, n2, d in thisWalkingGraph.edges(data=True):
    for att in edgeAttributesToDelete:
        d.pop(att, None)

# Drop node attributes that are not needed downstream
nodeAttributesToDelete = ['tags', 'timestamp', 'version', 'changeset']

for n1, d in thisWalkingGraph.nodes(data=True):
    for att in nodeAttributesToDelete:
        d.pop(att, None)

# Add some nodes (e.g., stations and exits) and links (e.g., edges connecting stations to exits, and exits to roads)

writePBF(thisWalkingGraph, 'walkingNetwork-tokyoMain.osm.pbf')

Then, if I wanted to do some analysis around a station, I could use a circle around that station as the boundingPolygon for:

thisStationData = pyrosm.OSM('walkingNetwork-tokyoMain.osm.pbf', bounding_box=boundingPolygon)
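As a rough sketch of how such a circular boundingPolygon could be built with the standard library alone: the helper name `circle_polygon` is hypothetical, the station coordinates are illustrative, and the equirectangular approximation is only reasonable for radii of a few kilometres away from the poles.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, good enough for this approximation


def circle_polygon(lat, lon, radius_m, n_points=32):
    """Approximate a circle around (lat, lon) as a closed ring of (lon, lat) vertices."""
    # Convert the radius to degrees; longitude degrees shrink with cos(latitude).
    dlat = math.degrees(radius_m / EARTH_RADIUS_M)
    dlon = dlat / math.cos(math.radians(lat))
    ring = [
        (lon + dlon * math.cos(2 * math.pi * i / n_points),
         lat + dlat * math.sin(2 * math.pi * i / n_points))
        for i in range(n_points)
    ]
    ring.append(ring[0])  # repeat the first vertex to close the ring
    return ring


# Illustrative coordinates near a central Tokyo station, 1 km radius.
ring = circle_polygon(35.6812, 139.7671, radius_m=1000)
```

Assuming shapely is available, `shapely.geometry.Polygon(ring)` should give a geometry that can be used as the bounding_box argument.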

I haven't yet looked into the PBF protocol, but I don't expect it would be more difficult than creating an XML converter.

@bramson Yes, being able to crop PBF is definitely something that would be extremely useful to have. I have done some testing on it, but some mysterious issues appeared after the data was filtered, and the resulting PBF file quite often came out corrupted and could not be read again. 🤔 I haven't had time to think about this in a while, but hopefully I will figure out a solution (or someone else will 🙂).

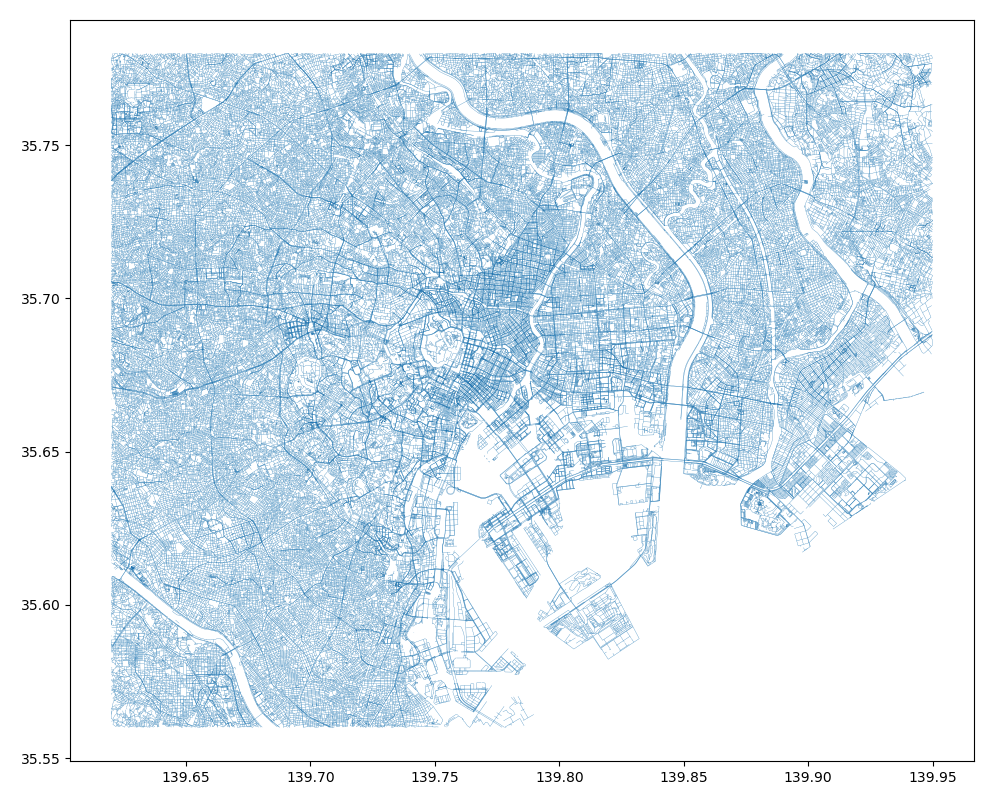

Regarding your issue with the Kanto/Tokyo region, are you aware that you can also download the data for Tokyo directly (check the available datasets section)? I'm not sure whether this covers your area of interest, though. ⬇️

from pyrosm import OSM, get_data

osm = OSM(get_data("tokyo"))

nodes, edges = osm.get_network(nodes=True)

edges.plot()

> It would be even more awesome if I could convert it into a NetworkX graph, make changes like adding links and attributes, and then save it as a PBF. If I could do that, then I could use pyrosm and the PBF to extract ROIs in my final API instead of just the data augmentation step.

This would be cool, but unfortunately the OSM PBF protocol (you can see it here) is not fit for this purpose as such. Hence, it would require defining a custom schema for exporting the graph. That is of course possible, but I'm not sure it would be the best way to implement what you described.

I've been thinking about this a bit, and to me perhaps the best way forward would be to convert the graph data to HDF5 or Apache Arrow and then use something like Vaex to do fast out-of-core filtering of the data. Hence, implementing a converter that exports the graph from pyrosm to HDF5 or Apache Arrow would be a better option than PBF.

Okay, I don't really understand the details of PBF, or anything you said in the last paragraph, but I'll take your word for it that what I imagined is not practical.

Because the unpacked data for my whole area is too big to use for my API... Here's what I'm currently planning to do, and maybe you've got a better idea:

- Break the network down into tiles (e.g. 2x2 km) and save each tile as a pkl.

- There will be a separate lookup GeoDataFrame pkl used to match input lat/lon values to the tile containing it, and return file indices.

- Those indices are used to pull the focal tile and the 8 Moore neighbors, and fuse them together with NetworkX.

- Then I can run a BFS starting from the lat/lon in the focal tile and be sure that it won't exceed the 9-tile area (in the case of walking for 15 minutes...faster movement speeds need to gather more tiles).
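A minimal sketch of the tile lookup and 9-tile gathering described in the list above. It swaps the lookup GeoDataFrame for plain arithmetic on a regular grid, which is a simplification; the function names, the grid origin, the metres-per-degree constant, and the file naming scheme are all assumptions made for illustration.

```python
import math

TILE_SIZE_M = 2000.0        # 2x2 km tiles, as in the plan above
M_PER_DEG_LAT = 111_320.0   # rough metres per degree of latitude (assumption)


def tile_index(lat, lon, origin_lat, origin_lon):
    """Return the (row, col) of the grid tile containing (lat, lon)."""
    # Longitude degrees are scaled by cos(latitude) of the grid origin.
    m_per_deg_lon = M_PER_DEG_LAT * math.cos(math.radians(origin_lat))
    row = math.floor((lat - origin_lat) * M_PER_DEG_LAT / TILE_SIZE_M)
    col = math.floor((lon - origin_lon) * m_per_deg_lon / TILE_SIZE_M)
    return row, col


def moore_block(row, col):
    """Focal tile plus its 8 Moore neighbours (9 tiles in total)."""
    return [(row + dr, col + dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]


def tile_filename(row, col):
    # Hypothetical naming scheme for the per-tile pickles.
    return f"tile_{row}_{col}.pkl"


# Illustrative origin (south-west corner of the grid) and query point.
focal = tile_index(35.6812, 139.7671, origin_lat=35.0, origin_lon=139.0)
files_to_load = [tile_filename(r, c) for r, c in moore_block(*focal)]
```

The nine graphs loaded from `files_to_load` could then be fused, for example with `networkx.compose_all`, before running the BFS.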

In future cases, as the algorithm traverses a path, it can pull tiles as needed when it reaches a node where the tile hasn't yet been pulled. This will dramatically slow down the BFS algorithm, but it will probably still be faster than loading a huge network.
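The on-demand variant could look roughly like the sketch below. It works on plain adjacency dictionaries rather than NetworkX graphs, counts cost in hops rather than walking time, and the `tile_of` and `load_tile` callbacks are hypothetical stand-ins for the tile lookup and the pkl loading. It assumes each tile stores the full neighbour list of its own nodes, including neighbours that lie in other tiles.

```python
from collections import deque


def bfs_with_lazy_tiles(start, max_cost, tile_of, load_tile):
    """Breadth-first search that loads a tile the first time one of its nodes is expanded.

    tile_of(node)   -> tile id of a node                 (hypothetical helper)
    load_tile(tile) -> {node: [neighbour, ...]} for tile (hypothetical helper)
    """
    adjacency = {}        # merged adjacency of all tiles loaded so far
    loaded = set()
    depth = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if depth[node] == max_cost:
            continue      # budget exhausted: do not expand, do not load its tile
        tile = tile_of(node)
        if tile not in loaded:
            for n, nbrs in load_tile(tile).items():
                adjacency.setdefault(n, []).extend(nbrs)
            loaded.add(tile)
        for nbr in adjacency.get(node, []):
            if nbr not in depth:
                depth[nbr] = depth[node] + 1
                queue.append(nbr)
    return depth, loaded


# Toy example: six nodes on a line, two nodes per tile.
toy_tiles = {
    0: {0: [1], 1: [0, 2]},
    1: {2: [1, 3], 3: [2, 4]},
    2: {4: [3, 5], 5: [4]},
}
depth, loaded = bfs_with_lazy_tiles(0, 3, lambda n: n // 2, toy_tiles.__getitem__)
```

In the toy run the third tile is never read, because the search budget runs out before any of its nodes is expanded.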

As you can probably guess, I'm not excited about this workflow.

I probably won't need to do this while adding the station links because that network may fit in memory, but maybe not. I'm currently working on testing that network's size.

As for getting data by name, without a reference to what areas are given which labels, it's impossible to use this reliably, especially for Japan.

For example, osm = OSM(get_data("tokyo")) does pull a part of central Tokyo, not even all of central Tokyo, and not the prefecture of Tokyo (which I would have guessed). There is no way to know what 'tokyo' would retrieve without trying it, and now that I know that it isn't the region I want, I have no idea what name I could use to get, say "23 wards", or all of "Tokyo-to", or the whole Tokyo Area (Tokyo, Saitama, Chiba, and Kanagawa prefectures combined).

Because I already have the admin areas for my region, it's easier and safer to just get my area of interest from that and dissolve the polygons into a single geometry, and use that as a bounding region...which from what I have seen, only your package supports, which is why I'm using it.

That part is so fast that being able to save the resulting OSM data as cropped PBF is not really needed.

Being able to convert a GeoJSON to osm.pbf (or some equivalently fast format) would be a great feature for speed in loading/filtering that kind of data.

BTW, I tried to get the walking network for the Tokyo Area. Extracting the OSM data within my polygon only took 0.76 seconds.

Based on an extraction to dataframe using a different package (esy.osm.pbf) I know this should be around 4GB as a pkl, but I got a MemoryError from nodes, edges = thisAreaData.get_network(nodes=True, network_type="walking"):

  File "C:\WinPython\python-3.6.5.amd64\lib\site-packages\pyrosm\pyrosm.py", line 178, in get_network
    self._read_pbf()
  File "C:\WinPython\python-3.6.5.amd64\lib\site-packages\pyrosm\pyrosm.py", line 98, in _read_pbf
    self._node_coordinates = create_node_coordinates_lookup(self._nodes)
  File "pyrosm\geometry.pyx", line 285, in pyrosm.geometry.create_node_coordinates_lookup
  File "pyrosm\geometry.pyx", line 286, in pyrosm.geometry.create_node_coordinates_lookup
  File "pyrosm\geometry.pyx", line 72, in pyrosm.geometry._create_node_coordinates_lookup

Thus, even for adding and linking stations and exits, I'm going to have to use a windowed approach.

@HTenkanen is this planned for the memory improvements in the next release you mentioned in #87 and #111? Trying to parse a relatively large (but not unreasonable) bounding box that crosses common GeoFabrik boundaries leaves a user with little choice but to parse a much larger file.

For example, I tried finding buildings within a box that covers the south-east quadrant of London and some of the commuter circle. The Greater London PBF didn't have enough data for this, so I was forced to use the one for England. The England PBF is about 1GB, but parsing the buildings used up over 20GB of RAM.

Hi @Alfred-Mountfield!

The cropping will probably not be part of the next release (other memory enhancements will be done though). However, for your problem I would recommend using BBBike's online cropping tool that allows you to select any area in the world. Read more from the docs: https://pyrosm.readthedocs.io/en/latest/basics.html#what-to-do-if-you-cannot-find-the-data-for-your-area-of-interest

Would this solve your issue?

Part of the appeal of Pyrosm for me is how easy it is to integrate it in an automated pipeline (massive kudos there) without requiring a user to download separate input files. That said, it's definitely not necessary at this time, I already require a downloaded file at a different step for now so it's not a massive deal. As a user though it definitely seems like it'd be a really useful feature to have integrated. If it's still not been implemented in a few months when I might have some spare time I could take a stab at it.

Regarding BBBike, I did see that in the docs but on going to the website the schema they supplied under the PBF format looked like it would only contain network data for bike-routing and the like. I'm going to guess I interpreted that incorrectly and am trying to make a clipped file at the moment, I'll report back if it works!

As a sidenote, is using up 20GB of RAM off a 1GB file intended behaviour?

(Edit) Update: using a cropped PBF from BBBike has meant the query time has gone from an hour to just over 1 minute... thank you! It shows how useful being able to crop the PBF before use would be.

@Alfred-Mountfield Yep, definitely the goal is to support easy cropping of PBF files with pyrosm 👍🏻 I did some early testing with that, but there were some peculiarities of the compression that made the first attempt quite unstable, and I'm not totally sure why that happened (I have some guesses but haven't had time to investigate more). But definitely if you find time to look at that (before I do), I'd be more than happy to take a look at your PR! 🙂

Regarding the memory usage: it is expected behavior, because the data in PBF is heavily compressed, and converting it into Shapely geometries and associated attributes simply consumes quite a bit of memory. That said, there is definitely room for improvement in how the parsing logic works, so the upcoming improvements should make it possible to read the data with a lower memory footprint and give better control over which attributes are stored.

> (Edit) Update: using a cropped PBF from BBBike has meant the query time has gone from an hour to just over 1 minute... thank you! It shows how useful being able to crop the PBF before use would be.

Indeed! 👍🏻

Hi all, we (@mnm-matin and myself) tried to extract substation (power) data for the whole African continent with pyrosm, which also led to a memory-related crash (16GB RAM laptop). Extracting the data country by country with pyrosm didn't solve the problem, because some countries in Africa still contained too much data.

Afterwards we used "esy-osmfilter" on each country, which worked for us. The data for Nigeria was there in 2 min. Extracting the data for the whole continent in one go worked as well (roughly 20 min). According to THIS, esy-osmfilter is a Python package that works 4 times faster than the Osmosis filter. Maybe that tool would be suitable for a pyrosm integration?

Having something to accomplish this would be very useful!

> Hi all, we (@mnm-matin and myself) tried to extract substation (power) data for the whole African continent with pyrosm, which also led to a memory-related crash (16GB RAM laptop). Extracting the data country by country with pyrosm didn't solve the problem, because some countries in Africa still contained too much data.
>
> Afterwards we used "esy-osmfilter" on each country, which worked for us. The data for Nigeria was there in 2 min. Extracting the data for the whole continent in one go worked as well (roughly 20 min). According to THIS, esy-osmfilter is a Python package that works 4 times faster than the Osmosis filter. Maybe that tool would be suitable for a pyrosm integration?

@pz-max any chance you can share a little more how you did this? can't quite crack the nut with esy-osmfilter to simply crop the size of a pbf and then use it with pyrosm. Thanks!

@jaguardo, esy-osm does not crop the pbf. It can just deal with large pbf -- I guess. We integrated it here to extract power infrastructure from OSM. The output is a geoJSON containing all the data from OSM.

@mnm-matin redesigned esy-osm to make it a bit easier to use. For instance, you can download any category relatively easily over large areas. We only need to package this together and write some docs. At the moment it's not the priority, but packaging things together can be done relatively quickly.

Is there any Python library that can be used to crop PBF files? Or, better, to split them into multiple smaller ones based on coordinates?