Add version_info and authorization_code params to google_bigquery_data_transfer_config

The gcloud REST API methods to manage BigQuery Data Transfer Configs specify two parameters which are not available in the google_bigquery_data_transfer_config resource: versionInfo and authorizationCode.

This seems like a fairly trivial correction to make; I have therefore made the relevant modifications to magic-modules to be downstreamed to terraform-provider-google.

Fixes https://github.com/hashicorp/terraform-provider-google/issues/11886.

If this PR is for Terraform, I acknowledge that I have:

- [X] Searched through the issue tracker for an open issue that this either resolves or contributes to, commented on it to claim it, and written "fixes {url}" or "part of {url}" in this PR description. If there were no relevant open issues, I opened one and commented that I would like to work on it (not necessary for very small changes).

- [x] Generated Terraform, and ran

make testandmake lintto ensure it passes unit and linter tests. - [x] Ensured that all new fields I added that can be set by a user appear in at least one example (for generated resources) or third_party test (for handwritten resources or update tests).

- [x] Ran relevant acceptance tests (If the acceptance tests do not yet pass or you are unable to run them, please let your reviewer know).

- [x] Read the Release Notes Guide before writing my release note below.

Release Note Template for Downstream PRs (will be copied)

bigquery: added `version_info` and `authorization_code` fields to `google_bigquery_data_transfer_config` resource

Hello! I am a robot who works on Magic Modules PRs.

I've detected that you're a community contributor. @slevenick, a repository maintainer, has been assigned to assist you and help review your changes.

:question: First time contributing? Click here for more details

Your assigned reviewer will help review your code by:

- Ensuring it's backwards compatible, covers common error cases, etc.

- Summarizing the change into a user-facing changelog note.

- Passes tests, either our "VCR" suite, a set of presubmit tests, or with manual test runs.

You can help make sure that review is quick by running local tests and ensuring they're passing in between each push you make to your PR's branch. Also, try to leave a comment with each push you make, as pushes generally don't generate emails.

If your reviewer doesn't get back to you within a week after your most recent change, please feel free to leave a comment on the issue asking them to take a look! In the absence of a dedicated review dashboard most maintainers manage their pending reviews through email, and those will sometimes get lost in their inbox.

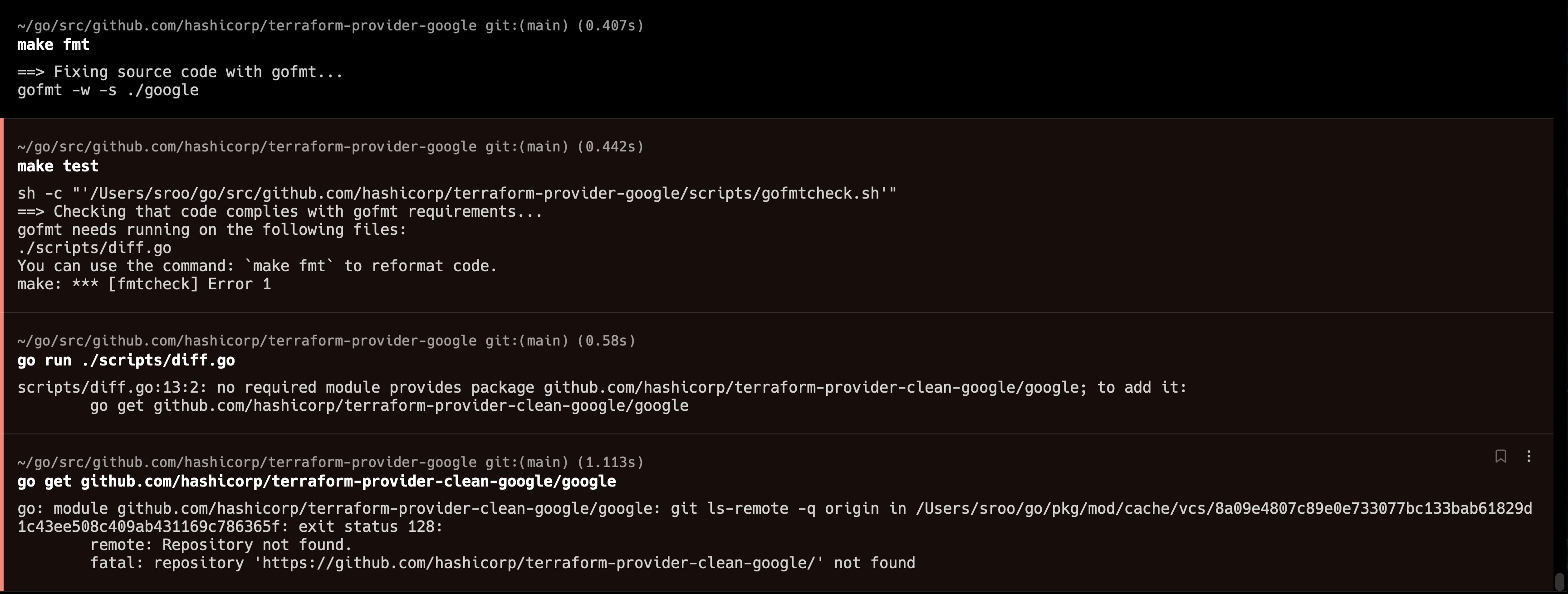

Hey @slevenick! Having a bit of an issue running make test and I'm kind of uncertain how I ought to fix this, since it seems like terraform-provider-clean-google is a private repo and evidently access to it is mandatory:

Hi! I'm the modular magician. Your PR generated some diffs in downstreams - here they are.

Diff report:

Terraform GA: Diff ( 2 files changed, 77 insertions(+)) Terraform Beta: Diff ( 2 files changed, 77 insertions(+)) TF Validator: Diff ( 3 files changed, 23 insertions(+), 3 deletions(-))

See the testing guide here: https://github.com/hashicorp/terraform-provider-google/blob/main/.github/CONTRIBUTING.md#tests

You'll want to only run the tests that are for this resource, as the full suite takes a very long time to run and won't run successfully. You don't need to run the diff script for this, you can remove it if it causes build issues in your local environment

Hi! I'm the modular magician. Your PR generated some diffs in downstreams - here they are.

Diff report:

Terraform GA: Diff ( 2 files changed, 81 insertions(+)) Terraform Beta: Diff ( 2 files changed, 81 insertions(+)) TF Validator: Diff ( 3 files changed, 23 insertions(+), 3 deletions(-))

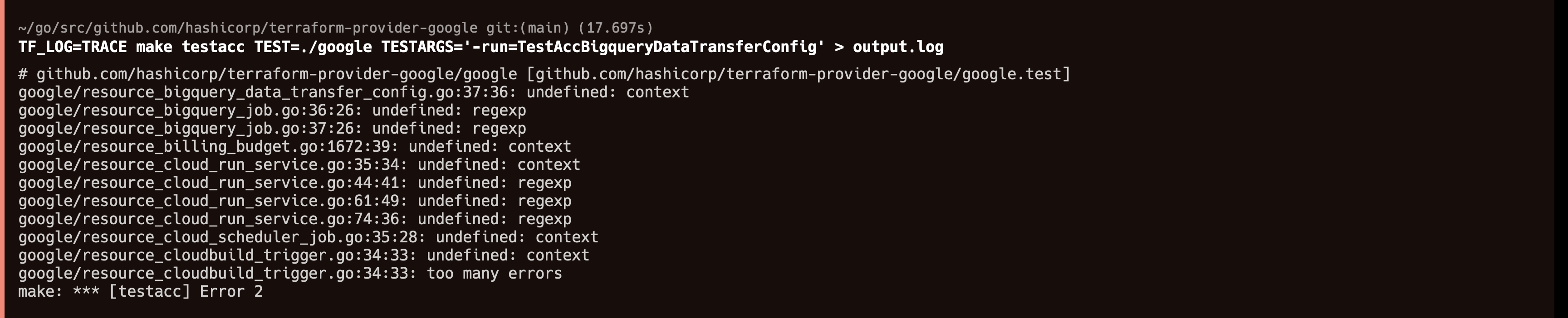

@slevenick Thanks for that! Something I noticed when I got rid of diff.go and tried to run the more limited-scope tests is that the generated Terraform files are missing a bunch of imports:

I started resolving all of these manually, but hundreds of these files are evidently missing import calls for things like context,encoding/json, strings, regexp, and a handful of other stdlib and external dependencies.

Is that normal, or have I set this up wrong for testing? Or am I maybe missing a big mega-import file of some kind?

Tests analytics

Total tests: 2144

Passed tests 1903

Skipped tests: 230

Failed tests: 11

Action taken

Triggering VCR tests in RECORDING mode for the tests that failed during VCR. Click here to see the failed tests

TestAccSqlDatabaseInstance_withPrivateNetwork_withAllocatedIpRange|TestAccComputeInstance_soleTenantNodeAffinities|TestAccComputeGlobalForwardingRule_internalLoadBalancing|TestAccCloudFunctions2Function_fullUpdate|TestAccCloudfunctions2function_cloudfunctions2BasicAuditlogsExample|TestAccCloudfunctions2function_cloudfunctions2BasicGcsExample|TestAccCloudRunService_cloudRunServiceScheduledExample|TestAccCloudRunService_cloudRunServiceStaticOutboundExample|TestAccCGCSnippet_storageObjectLifecycleSettingExample|TestAccPrivatecaCertificateAuthority_privatecaCertificateAuthoritySubordinateExample|TestAccSqlDatabaseInstance_SqlServerAuditConfig

Tests passed during RECORDING mode:

TestAccCloudfunctions2function_cloudfunctions2BasicAuditlogsExample[view]

TestAccCloudfunctions2function_cloudfunctions2BasicGcsExample[view]

Tests failed during RECORDING mode:

TestAccSqlDatabaseInstance_withPrivateNetwork_withAllocatedIpRange[view]

TestAccComputeInstance_soleTenantNodeAffinities[view]

TestAccComputeGlobalForwardingRule_internalLoadBalancing[view]

TestAccCloudFunctions2Function_fullUpdate[view]

TestAccCloudRunService_cloudRunServiceScheduledExample[view]

TestAccCloudRunService_cloudRunServiceStaticOutboundExample[view]

TestAccCGCSnippet_storageObjectLifecycleSettingExample[view]

TestAccPrivatecaCertificateAuthority_privatecaCertificateAuthoritySubordinateExample[view]

TestAccSqlDatabaseInstance_SqlServerAuditConfig[view]

Please fix these to complete your PR View the build log or the debug log for each test

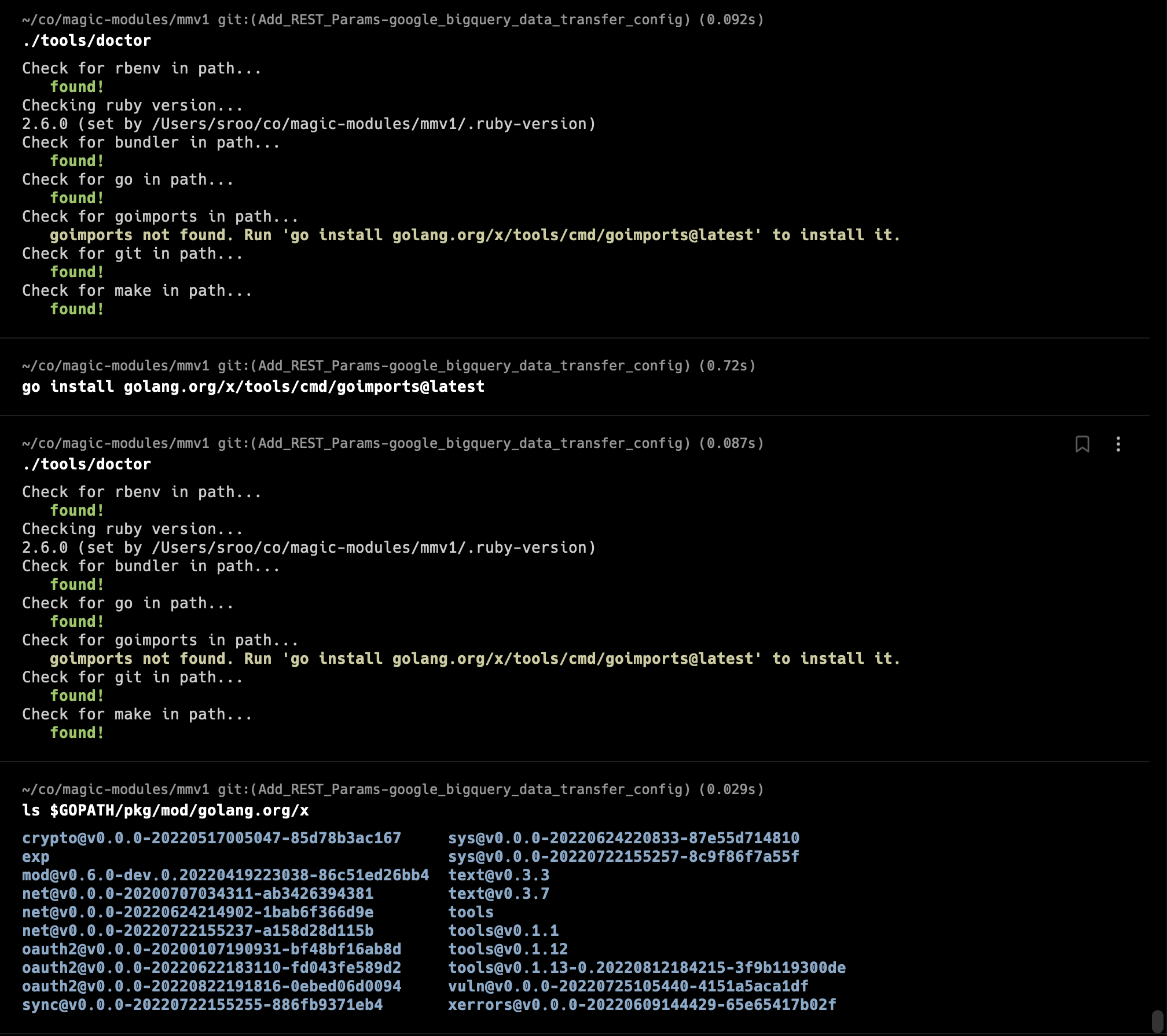

Yikes, what you're saying points to a goimports or gofmt issue. We use goimports to handle correct imports on our resources

Do you have goimports installed and set up? You can check using ./tools/doctor in the magic-modules repo

Ah, yeah, that would be it--though I thought I had already installed goimports (and it turns out I was only half right):

Sorry if I'm missing something really obvious here--I'm not a professional or highly experienced Gopher 😅

Ah, I figured it out--$GOPATH/bin wasn't in my path. Solved!

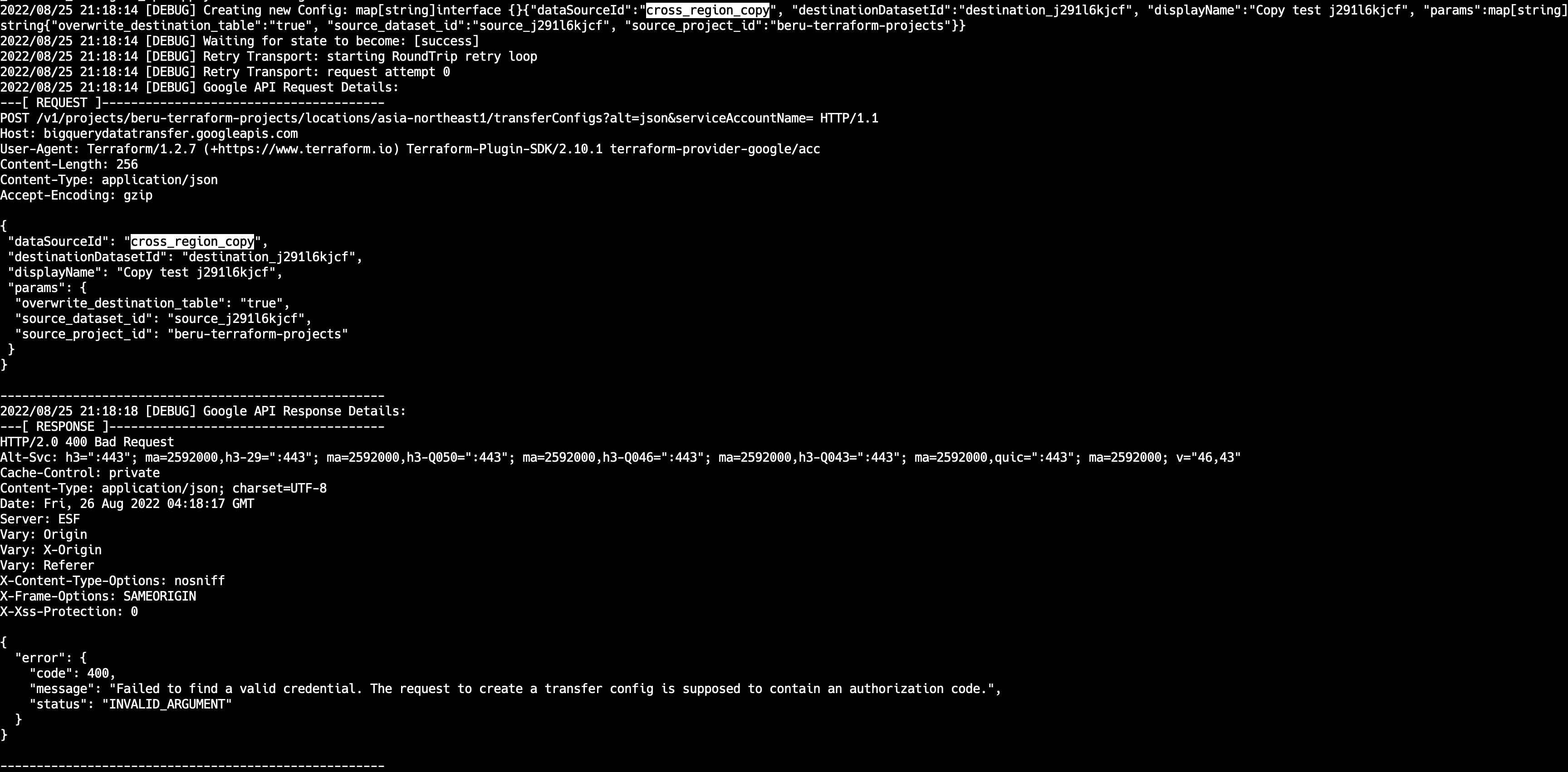

Okay--through some persistence, I have gotten my Acceptance Tests running, but I have now discovered an actual problem with my change! Banner day for me.

Right, so the failure that I see here is actually probably essentially good news, in that it relates to my change (which provides the option for an Authorization Code or Version Info, previously missing):

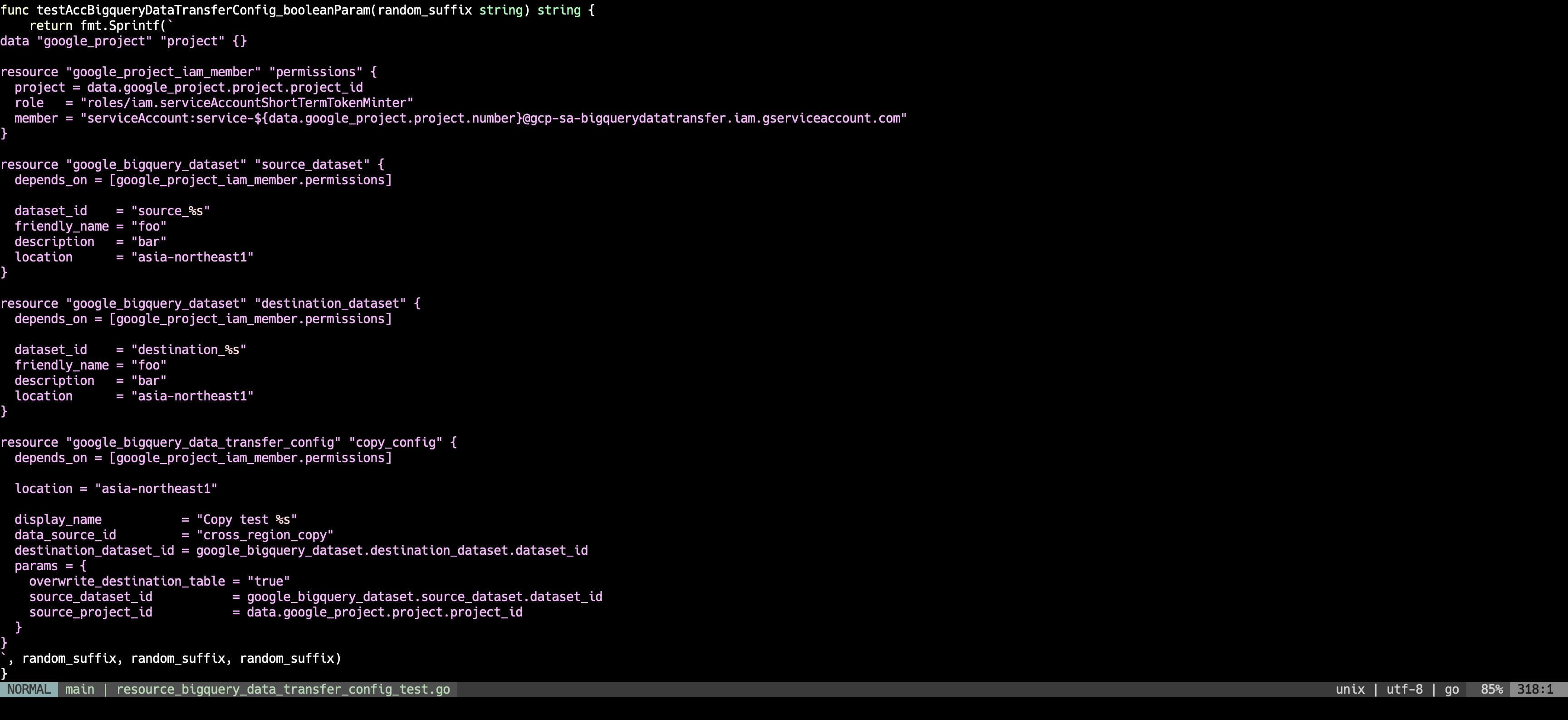

...and here's the terraform in the acc test:

In order to get either of the new fields (authentication_code or version_info) (and so both resolve the errors we're now seeing in acctests and fully test the new fields), we have to query bigquerydatatransfer.googleapis.com (gated on OAuth2 auth in a browser), with relevant scope and client_id as params in the URL...and both of these values require the google_bigquery_data_transfer_config to be created already, in order for us to retrieve them, unless I misunderstand.

To reiterate (because this is all a bit baroque), the flow for either testing or production should be, as I gather:

- Create the

google_bigquery_data_transfer_config - Using the

data_source_idparam, get info about the newly-created transfer config (i.e.,client_idandscope) - Use

client_idandscopeto fetch aversion_idorauthorization_codevia the method supplied on the transferCreate method's REST reference (https://www.gstatic.com/bigquerydatatransfer/oauthz/auth? client_id=clientId&scope=data_source_scopes &redirect_uri=urn:ietf:wg:oauth:2.0:oob&response_type=authorization_code) - ...profit? I'm actually not sure how to use the

version_infoorauthorization_code paramin my newly created fields, because it seems like you need to have one of those values to create a transfer config. But it also seems like you need to create a transfer config first in order to getclient_idandscopeand thereby retrieveversion_infoorauthorization_code.

I've asked the requester how they thought to accomplish this, but honestly looking back at this I'm just sort of amazed that this works at all, as it is. Do you have any thoughts on the best way to resolve this? Because I used to think I was good at Terraform untilI started staring at this resource too hard!

It looks like this will need to involve a separate manual step to retrieve the authorization_code. I think from Terraform we have to expect the user to find this code themselves and add it to their config so we can just send it to the API. We can't go through OAuth in the browser or anything like that, so we have to make the user do that in a separate step

We may be able to use an invalid test code during testing, but they may be validated immediately, which will make this difficult

Ah; I understand what's going on here. I may have mis-scoped this initially; the REST API provides for three mutually-exclusive possibilities for authorizing the transfer:

- a Service Account

- an Authorization Code (if not using a Service Account & the bq data source is a YouTube channel)

- a Version Info (if not using a Service Account & the bq data source is not a YouTube channel)

However: the module is currently written to assume a base_url of projects/{{project}}/locations/{{location}}/transferConfigs?serviceAccountName={{service_account_name}}.

So I'm thinking that, to achieve the same functionality, we would need to account for that in some way--though I'm not really sure what magic-modules supports, but depending on that, I would assume the options would be:

- Conditionalize the

base_urlif api.yml supports e.g. ansible-stylewhen:statements - Refactor the bigQueryDataTransfer resource into multiple separate resources depending on the type of auth used

- Refactor the bigQueryDataTransfer resource into a non-magic-modules resource so that we can more flexibly program the base_url based on the supplied parameters.

Does that track?

So, for these two new fields, the flow should be:

- Create bigQueryDataTransfer resource without authorization?

- Add version_info or authorization_code and re-apply

Yikes, that's some odd API behavior.

Yeah we will likely need to rewrite the URL based on which authentication method is provided. There are ways to do this in magic-modules via pre_create, pre_delete and pre_update functions, so it should be possible

- Using the

data_source_idparam, get info about the newly-created transfer config (i.e.,client_idandscope

I think there is a misunderstanding here: it's true that to get the value for client_id and scope, you need to call dataSources/get and provide the data_source_id as an input. However this id is defined and known for each Data Source supported by the BigQuery Data Transfer Service. E.g. for the Carbon API, it's projects/

This does not depend on a transfer config having been created. However, what it does depend on (at least in the case of the Carbon API) is calling this endpoint first: https://cloud.google.com/bigquery/docs/reference/datatransfer/rest/v1/projects/enrollDataSources.

[1] https://cloud.google.com/carbon-footprint/docs/api