Bug when using seq2seq in FedNLP

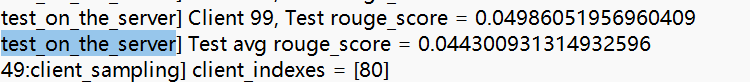

Hello, I'm using FedNLP to run some experiments, but I see no improvement in the ROUGE score between two epochs. At first I suspected this was my own mistake, but I ran the demo and saw the same behavior. Here is the example:

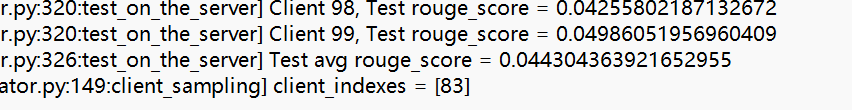

And the next time it runs the test:

Is this caused by a config setting or something else? Could you give me some tips?

@Luoyang144 Normally, you need to check the entire training/test accuracy and loss curves. Sometimes it's normal for the metrics to be the same between two consecutive iterations (epochs, rounds, etc.).
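One way to check the whole curve rather than two neighboring points is sketched below. It is only an illustration: `is_flat` is a hypothetical helper, not part of FedNLP, and the scores would have to be collected from your own evaluation logs.

```python
from typing import Sequence


def is_flat(scores: Sequence[float], tol: float = 1e-6) -> bool:
    """Return True if the gap between the best and worst score is at most `tol`.

    `scores` is assumed to hold one metric value (e.g. ROUGE) per round.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    return max(scores) - min(scores) <= tol
```

If this returns `True` over many rounds, the metric is not moving at all, which is a stronger signal than two identical neighboring values.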

@chaoyanghe But the final result is the same as before, so I suspect the model didn't improve at all. I guess there may be a bug in the learning process?

@zuluzazu Hi Mrigank, please check this bug.

@Luoyang144 This may be because you are using only 1 client per round. A single client per round does not give the server enough useful information to aggregate, so learning gets stuck. In my demo I used 1 client because I did not have enough GPU memory for 5 clients. You need to try at least 6-8 clients per round. If you still think there is a bug after that, feel free to reach out here.
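To illustrate what aggregation does with a single client, here is a minimal sketch of sample-weighted averaging in the style of FedAvg. It is an assumption that the run uses this kind of aggregation; `fedavg` and the weight layout are hypothetical and not taken from the FedNLP code.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: parameter name -> flat list of values.
Weights = Dict[str, List[float]]


def fedavg(updates: List[Tuple[int, Weights]]) -> Weights:
    """Average client weights, weighted by each client's sample count."""
    total = sum(n for n, _ in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    first = updates[0][1]
    return {
        name: [
            sum(n * w[name][i] for n, w in updates) / total
            for i in range(len(first[name]))
        ]
        for name in first
    }
```

With one client in `updates`, the result is simply that client's weights, so each round the server model just follows whichever client was sampled.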

@zuluzazu Hello, I tried setting 6 clients per round, but I get the same bad result. Are there any other config options I need to change?

@zuluzazu Hello, will you be able to look into this problem? It really confuses me.

Hi @Luoyang144, when I trained it, it was converging. I currently have department orientations, so I will try to check this over the weekend.

@Luoyang144 I am fairly certain that the convergence issue is due to hyperparameters. In the meantime, can you please do some hyperparameter tuning, such as decreasing the learning rate and changing the batch size? In my experience, federated settings are very sensitive to hyperparameters. I will also try to check for any bug over the weekend.
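A small grid over these settings could be enumerated as in the sketch below. The key names and values are purely illustrative assumptions, not FedNLP flags or recommended settings; map them to whatever arguments your launch script actually takes.

```python
from itertools import product
from typing import Dict, List, Union

Number = Union[int, float]

# Illustrative values only; the key names are hypothetical, not FedNLP options.
SEARCH_SPACE: Dict[str, List[Number]] = {
    "learning_rate": [5e-5, 1e-5, 5e-6],
    "batch_size": [4, 8],
    "clients_per_round": [6, 8],
}


def build_grid(space: Dict[str, List[Number]]) -> List[Dict[str, Number]]:
    """Return one config dict per combination of the listed values."""
    names = list(space)
    return [dict(zip(names, combo)) for combo in product(*(space[n] for n in names))]


if __name__ == "__main__":
    for config in build_grid(SEARCH_SPACE):
        print(config)  # pass each config to your own training launcher
```

Recording the ROUGE score for each config makes it easier to tell a hyperparameter problem from a bug: if no combination moves the score at all, tuning is unlikely to be the cause.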

@zuluzazu Thank you, I will try to change some parameters.

@zuluzazu Hello, this weekend I tried tuning some hyperparameters, such as lr and client_number, but they all gave bad results, even worse than with the original parameters.

@Luoyang144 Does your ROUGE score improve if you do centralized training? If not, then the issue is with the model and not with the FedNLP code itself. Can you check?

@Luoyang144 Were you able to resolve the issue?