dolfinx

dolfinx copied to clipboard

dolfinx copied to clipboard

Interpolation between different meshes

Note: The post below describes the first proposed implementation. I later developed a second one, so this post is no longer relevant. See https://github.com/FEniCS/dolfinx/pull/1598#issuecomment-915942471

In this branch, I implemented functionalities to interpolate discrete functions between different meshes.

I will now give a brief explanation of the algorithm that I implemented.

The serial algorithm

Consider two discrete functions v, the function to be interpolated [source function], and u, the interpolating function [target function].

The idea behind my implementation is to reuse the existing code that interpolates from a user-provided analytical expression, namely

void interpolate(

Function<T>& u,

const std::function<xt::xarray<T>(const xt::xtensor<double, 2>&)>& f,

const xt::xtensor<double, 2>& x, const xtl::span<const std::int32_t>& cells)

In there, this method computes auto values = f(x) and then uses these values to define the interpolating function u.

I figured that if I can obtain those same values from v, as opposed to from f, then it will all be downhill from there. To this end, I recycle existing code to compute the points at which v needs to be sampled in order to define u [fem::interpolation_coords] with the idea of using v.eval on those points to sample v.

Before I can do that, I need to find out which cell of v's mesh contains each of these points. I use the existing BoundingBoxTree and collision detection functionalities to accomplish this quickly.

The parallel algorithm

When running in parallel, the biggest issue is that, since meshes are partitioned independently, the cell that contains a given point will not, in general, reside on the same process that contains that point.

The memory-optimal way of doing this would be, for each process p, to find the processes owning the cells that contain the points p owns, to later arrange the optimal amount of data exchange.

My current implementation follows a different philosophy.

Initially, each process contains the list of points at which it needs to evaluate vin order to define u.

First of all, I gather all these lists into a big list on every process: now, every process contains the list of all the points needed by all the processes.

I then proceed to find, on every process, which points lie in cells that that process owns. Although these are many points, for large numbers of processes it is likely that most of these points will lie outside the mesh that a single process contains, and those will be very fast checks.

Next, I evaluate v at all those points on every process. This way, every point is evaluated on at least one process. Again, since most points will likely lie outside of a process's local mesh, most of these will be ignored.

Finally, I can combine these values back on all processes, so that each process gets the full list of values matching the full list of points. The portion of interest can then be extracted and passed to the existing interpolate function for local interpolation.

Note

I removed the Work-in-progress tag although some performance optimisation should still be possible.

I forgot to mention, I added a demo case in the demo folder to demonstrate the interpolation, so you can use that for testing -- it doesn't have to stay there, I just didn't know where to put it.

I would strongly suggest that you use: compute_global_tree and compute_collisions_point to reduce the number of points shared with each process. See for instance:

https://github.com/FEniCS/dolfinx/blob/d316ef77c58883f09d0e68486aa1caa24a10f6ec/python/test/unit/geometry/test_bounding_box_tree.py#L431

With such an implementation, you can gather all ranks that might intersect with the points, and create a neighbourhood communicator that only communicates the subset of points that are relevant

Thanks @jorgensd for the feedback! The one you suggest seems to be the other approach I mentioned in the first post, is it not? I have been wondering if the overhead of sending more nodes than necessary outweighs the overhead of sending several messages: in case I wanted to only send the relevant nodes, I'm thinking I would need to orchestrate several independent transfers, having one communicator per process, is this correct?

Imagine you have two sets of meshes with 500 millions vertices in each (and assuming that we have a CG-1 space that would be the same number of dofs). If we want to interpolate these between processes, and we have the unfortunate scenario that there is no process overlap between the partitions, we would need to send these to all processes (but only one or a few actually needs it).

You can use unsymmetric MPI neighborhood communciators to send the subset information back and forth between processes https://github.com/FEniCS/dolfinx/blob/main/cpp/dolfinx/common/MPI.h#L92-L93 (it is used through dolfin-x, and also in my MPC library https://github.com/jorgensd/dolfinx_mpc).

One thing to note is that by using process bounding boxes, it is still not guaranteed that the process has the point in it. However, it is a very easy way to reduce the amount of data that is communicated.

@jorgensd thanks for the input. I gave it a thought and, while it is true that sharing only the necessary points reduces the memory footprint and the network traffic, it tends to impose a sequential procedure because if, say, process 0 is in an MPI call with its neighbours, processes 1 and 2, then all the processes which are neighbours of processes 1 and 2 must wait for the communication involving processes 0, 1 and 2 to finish before they can start their own. Since all processes are connected to each other, it seems like I would need to have the neighbourhoods communicate sequentially.

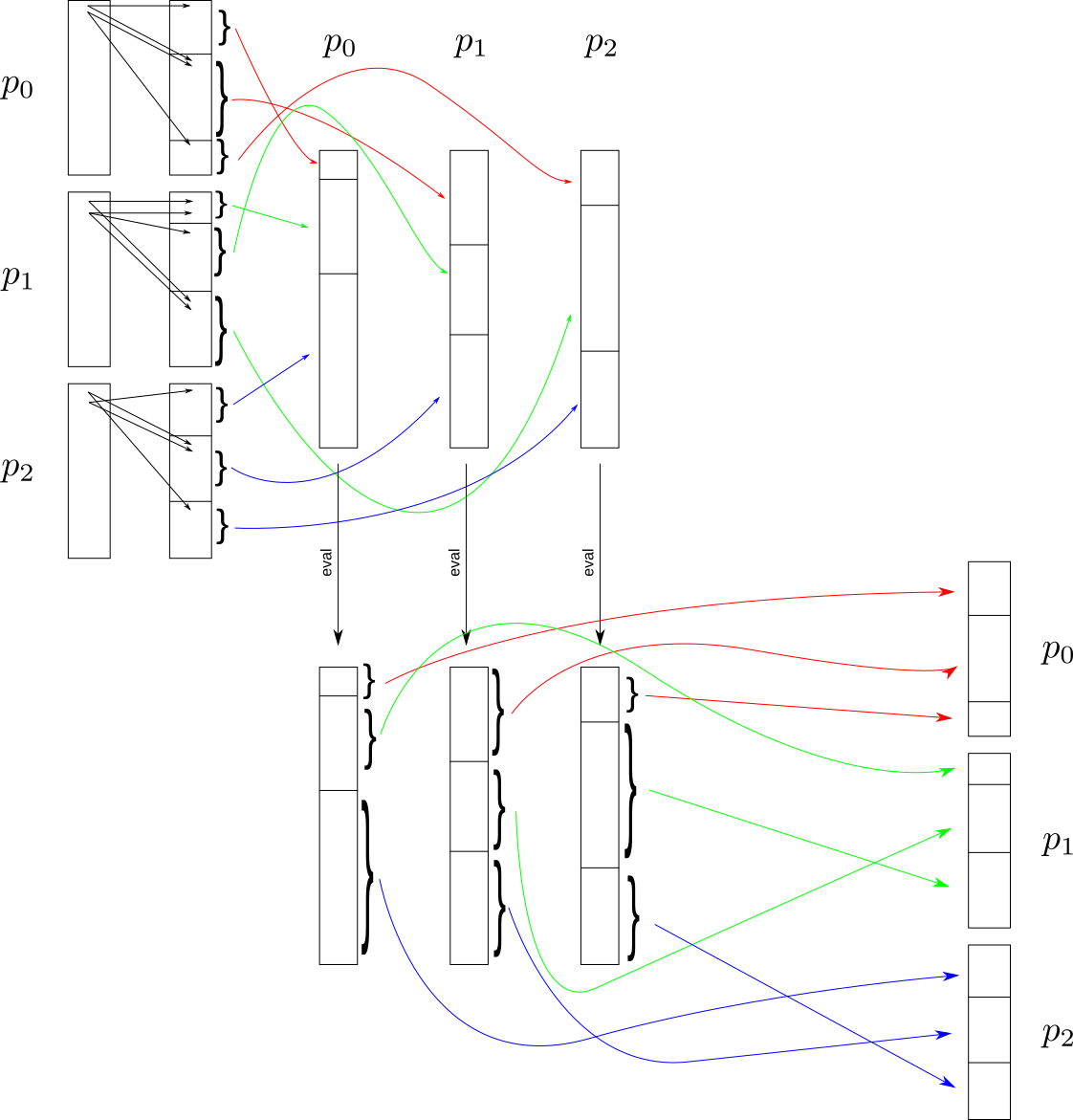

Then I thought: I once took a mini-course on one-sided MPI communications, and that paradigm would totally do the trick here, so I implemented the version I just pushed using one-sided MPI functions and the approach you suggested. The idea is then that, using a global bounding box, processes find what other processes might be able to evaluate a point they need [this is as per your advice], then they assemble, on each process, a list of points that that process might have, each process evaluates, and finally each process fetches back the data it needs.

I made an image to illustrate the idea for the case of three processes.

You can directly compare the two implementations: the old one is at commit 766c09f06 and the new one is the head of the branch.

I did some quick timing tests on a cluster with InfiniBand interconnect, nodes with 24 threads each, with two meshes consisting of 24M tetras each.

n t decrease factor

24 371.889

48 190.165 -0.9676203339015955

96 158.629 -0.26159519798458825

144 109.428 -0.9157417895107853

192 85.247 -0.8680202825388009

240 70.104 -0.8764450956489861

Here the decrease factor is the in

and perfect strong scalability corresponds to

, so it seems to be scaling at least decently.

Edit: I forgot to mention that the above test is for P1 functions.

More timings, just for the fun of it, with a P2 function on the same meshes.

n t decrease factor

24 1007.359714

48 506.031528 -0.9932797623666415

96 426.84532 -0.24551391257479313

144 298.413884 -0.882789410980575

192 223.71631 -1.0014618462533544

240 183.774946 -0.8813469419348123

We would need some tests to make sure this is correct. I.e. Interpolate a known function onto a sub-set (similar to your C++ demo), and check that the interpolated function has the correct values.

There are questions about scalability of this code. When every process communicates with every other process, it becomes a quadratic scaling, which is not good. Obviously, it can be difficult to avoid, but you should have a good idea of the communication pattern from the initial global BBtree collisions, and can then limit the communication to the processes that actually need it, e.g. by using a neighborhood communicator.

We would need some tests to make sure this is correct. I.e. Interpolate a known function onto a sub-set (similar to your C++ demo), and check that the interpolated function has the correct values.

I can prepare a unit test that also validates the interpolated values, if you'd like me to.

There are questions about scalability of this code. When every process communicates with every other process, it becomes a quadratic scaling, which is not good. Obviously, it can be difficult to avoid, but you should have a good idea of the communication pattern from the initial global BBtree collisions, and can then limit the communication to the processes that actually need it, e.g. by using a neighborhood communicator.

I think this already happens. Please be advised that the currently implemented algorithm is the one described at https://github.com/FEniCS/dolfinx/pull/1598#issuecomment-915942471 , according to which a process only communicates with processes that can possibly evaluate its points.

There are questions about scalability of this code. When every process communicates with every other process, it becomes a quadratic scaling, which is not good. Obviously, it can be difficult to avoid, but you should have a good idea of the communication pattern from the initial global BBtree collisions, and can then limit the communication to the processes that actually need it, e.g. by using a neighborhood communicator.

I think this already happens. Please be advised that the currently implemented algorithm is the one described at #1598 (comment) , according to which a process only communicates with processes that can possibly evaluate its points.

I guess that is not strictly true, as you would have a send->recv of a an array with 0 data size for all processes with no incoming points.

I guess that is not strictly true, as you would have a send->recv of a an array with 0 data size for all processes with no incoming points.

The current implementation with one-sided communication has no send and receive, it just copies data onto the target memory space. This can be implementation-dependent, but I would imagine that a copy of size zero is actually a no-op, since no synchronisation with the target process is needed. We can enforce this with an if statement anyway.

The unit test isn't working and it seems to be an issue with MPICH [it works on my machine with OpenMPI]. How do you see temporarily ignoring the unit test until this issue is fixed?

The unit test isn't working and it seems to be an issue with MPICH [it works on my machine with OpenMPI]. How do you see temporarily ignoring the unit test until this issue is fixed?

Of course it wasn't an issue in MPICH, it was my fault [and mine alone!]. I see green light from the unit tests now :D

Closing, see updated version in #2245.