Introduce generated navigational mesh to interpret maps

Initial implementation of a generated navigational mesh as an alternative to markers. The goal is to allow AIs to properly read all maps that are reasonably well made. All queries against the data structures and algorithms are guaranteed to be optimal and annotated. This will remove a large performance hurdle for AIs.

Must haves:

- [x] Is it possible to path from `a` to `b`? (yes / no answer)
- [x] Generate a path from `a` to `b` (a path)
- [ ] Generate a path from `a` to `b`, given a maximum amount of threat (a path)
- [ ] Generate a path from `a` to `b`, while trying to avoid threat (a path)
- [ ] Generate a path from `a` to `b`, while trying to seek threat (be attracted to it, like experimentals)
- [ ] Generate a path from `a` to `b`, that doesn't wall hug (a path)
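To make the first three queries concrete, here is a minimal Python sketch, not the code in this pull request. It assumes the mesh is exposed as a plain adjacency dictionary with a centre point per node. The names `label_components`, `can_path_to`, `find_path`, `threat` and `max_threat` are all hypothetical. The yes / no query compares precomputed component labels, and the path query is a standard A* that optionally skips nodes above a threat limit.

```python
import heapq
from collections import deque


def label_components(neighbors):
    """Flood-fill the graph so every node gets the label of its connected component."""
    labels = {}
    next_label = 0
    for start in neighbors:
        if start in labels:
            continue
        labels[start] = next_label
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for other in neighbors[node]:
                if other not in labels:
                    labels[other] = next_label
                    queue.append(other)
        next_label += 1
    return labels


def can_path_to(labels, a, b):
    """Yes / no answer: a path exists only if both nodes share a component label."""
    return labels[a] == labels[b]


def find_path(neighbors, centers, a, b, threat=None, max_threat=None):
    """A* from a to b. Nodes whose (hypothetical) threat exceeds max_threat are skipped."""

    def dist(p, q):
        (px, py), (qx, qy) = centers[p], centers[q]
        return ((px - qx) ** 2 + (py - qy) ** 2) ** 0.5

    def blocked(node):
        if threat is None or max_threat is None:
            return False
        return threat.get(node, 0) > max_threat

    if blocked(a) or blocked(b):
        return None

    best = {a: 0.0}                      # cheapest known cost to reach each node
    parent = {a: None}                   # back-pointers used to rebuild the path
    open_heap = [(dist(a, b), 0.0, a)]   # entries are (cost + heuristic, cost, node)
    while open_heap:
        _, cost, node = heapq.heappop(open_heap)
        if node == b:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        if cost > best[node]:
            continue                     # stale heap entry
        for other in neighbors[node]:
            if blocked(other):
                continue
            new_cost = cost + dist(node, other)
            if new_cost < best.get(other, float("inf")):
                best[other] = new_cost
                parent[other] = node
                heapq.heappush(open_heap, (new_cost + dist(other, b), new_cost, other))
    return None


# Tiny example graph: A - B - C are connected, D is isolated.
neighbors = {"A": ["B"], "B": ["A", "C"], "C": ["B"], "D": []}
centers = {"A": (0, 0), "B": (1, 0), "C": (2, 0), "D": (5, 5)}
labels = label_components(neighbors)
```

Labelling once up front keeps the yes / no query at a single comparison, which is why it is sketched separately from the path search.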

Should haves, likely future pull requests:

- Square meters of an area

- Number of resources of an area

- A distance query from point a to b, over the graph

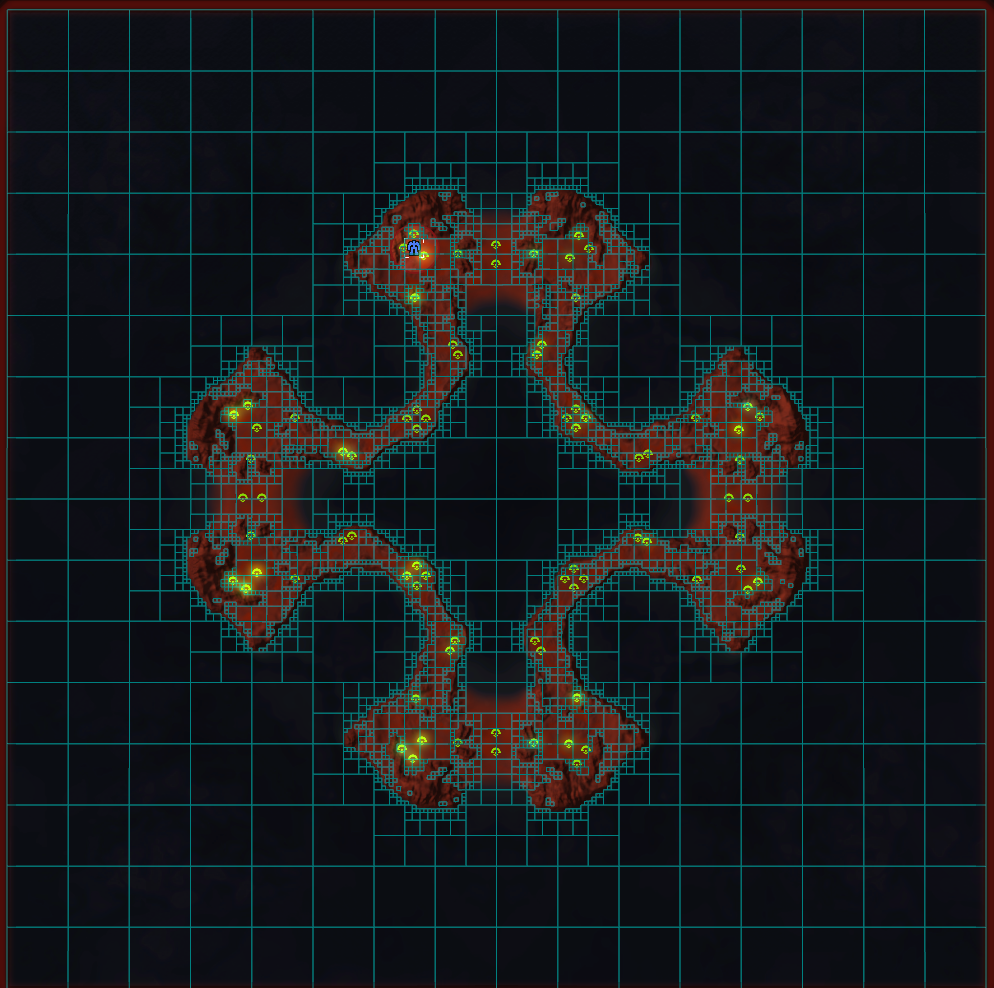

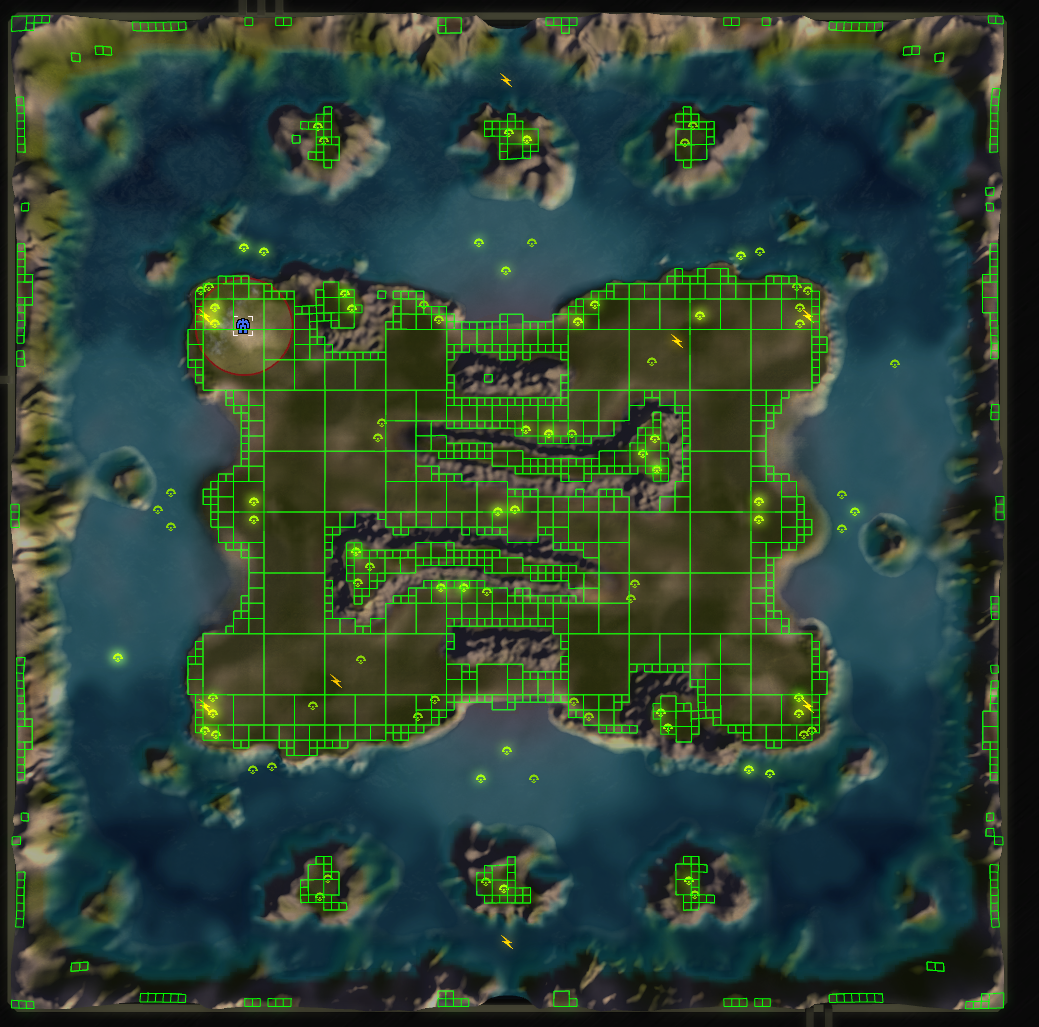

Crag Dunes - 0.085876 seconds

Bermuda Locket - 1.4 seconds

Craftious Max - 0.3 seconds

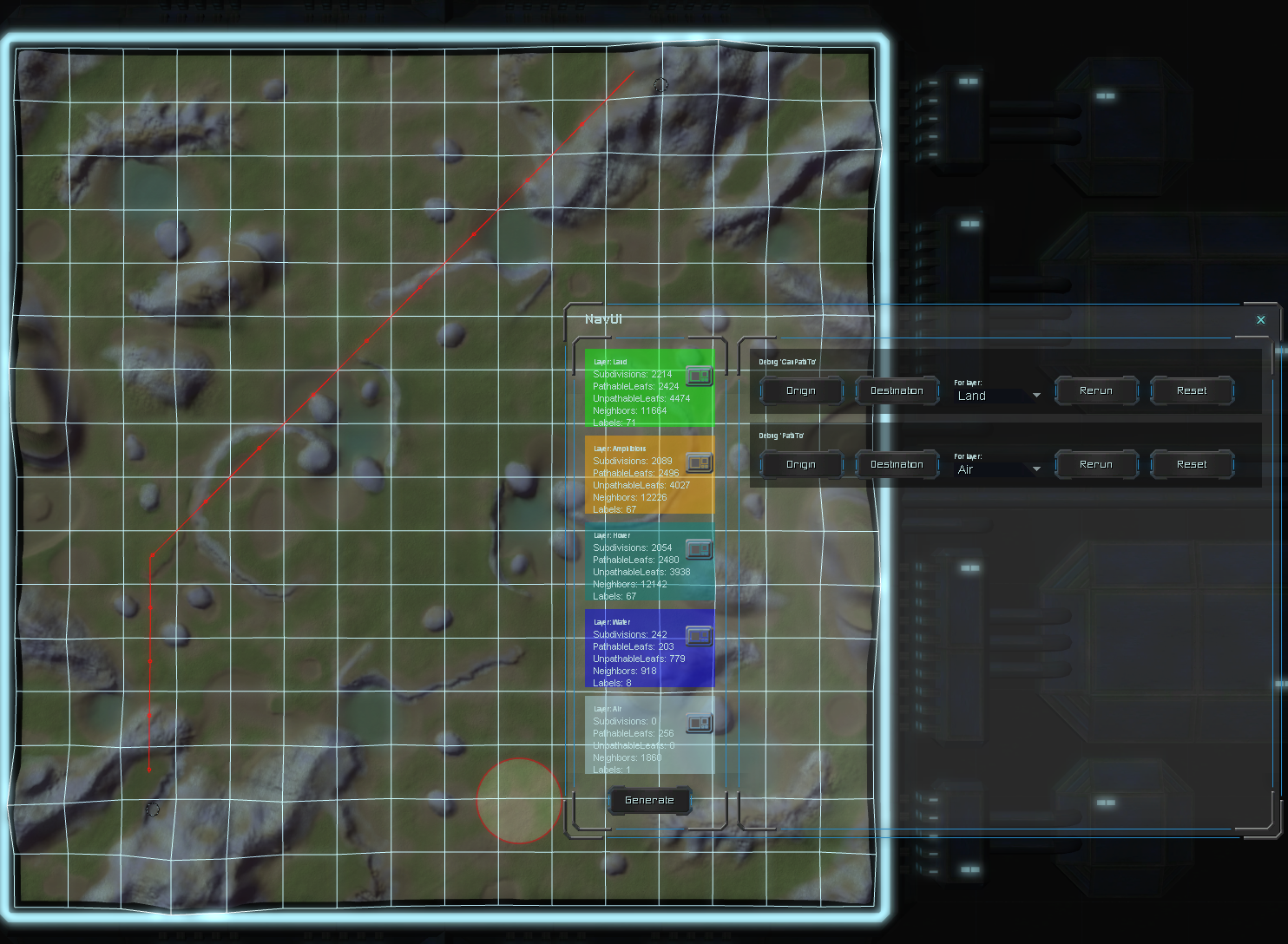

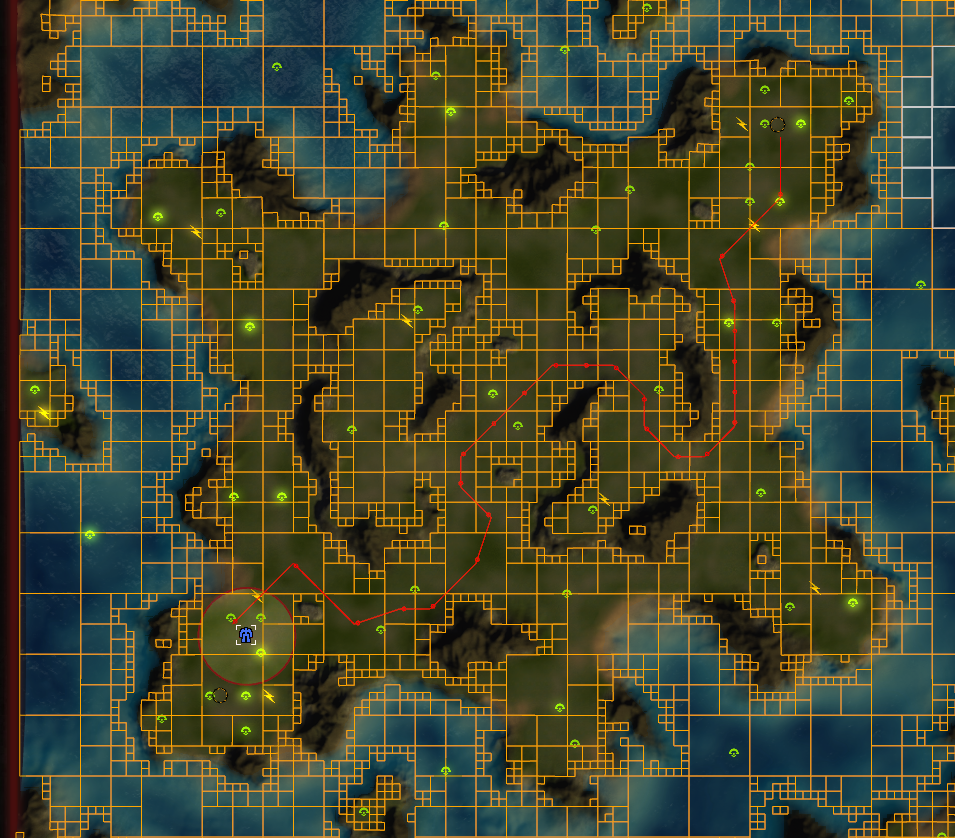

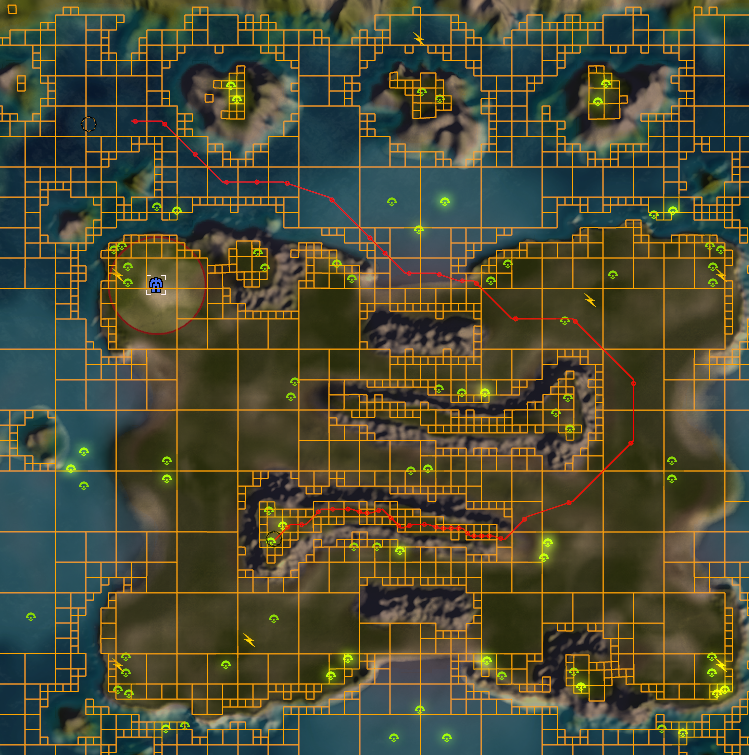

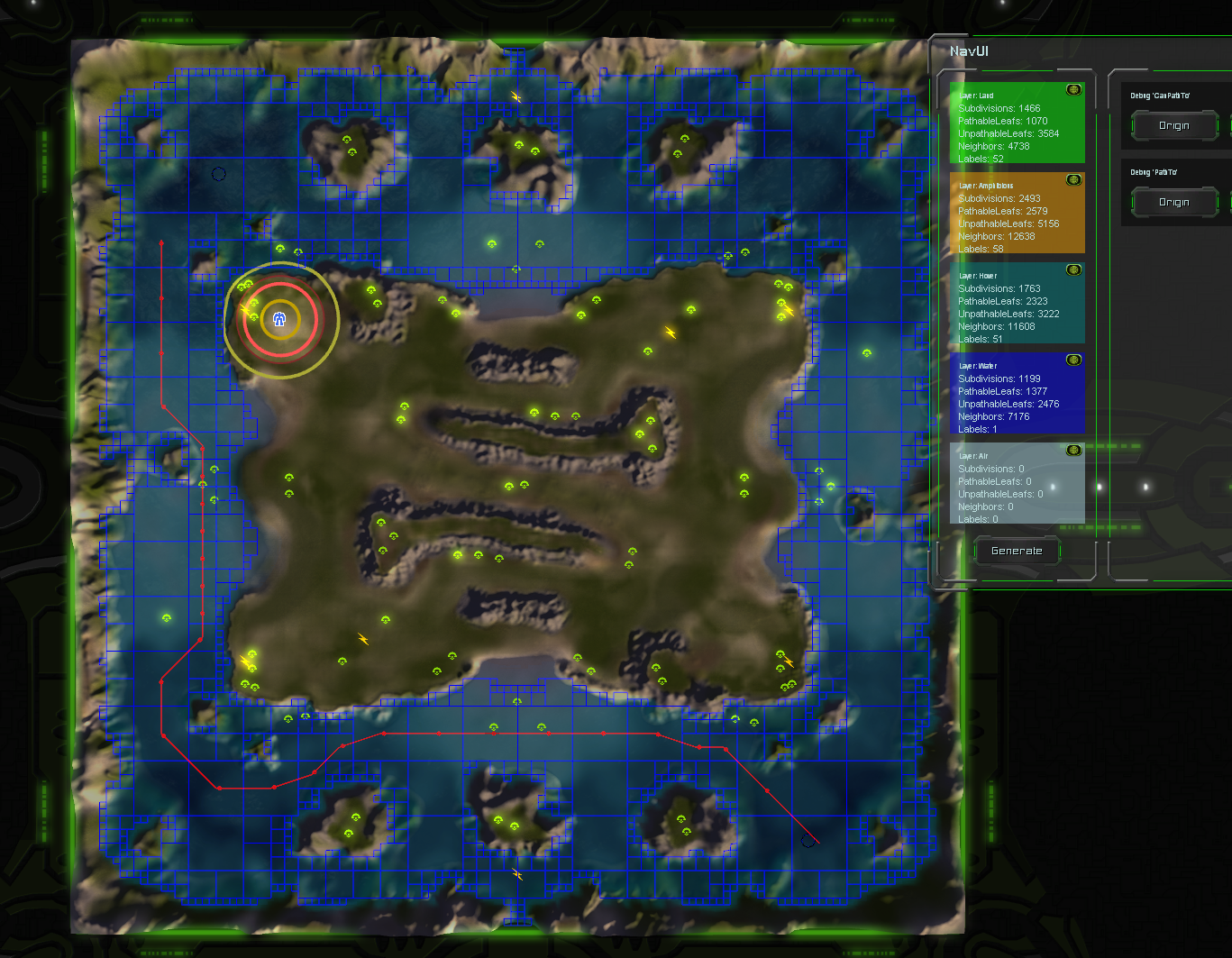

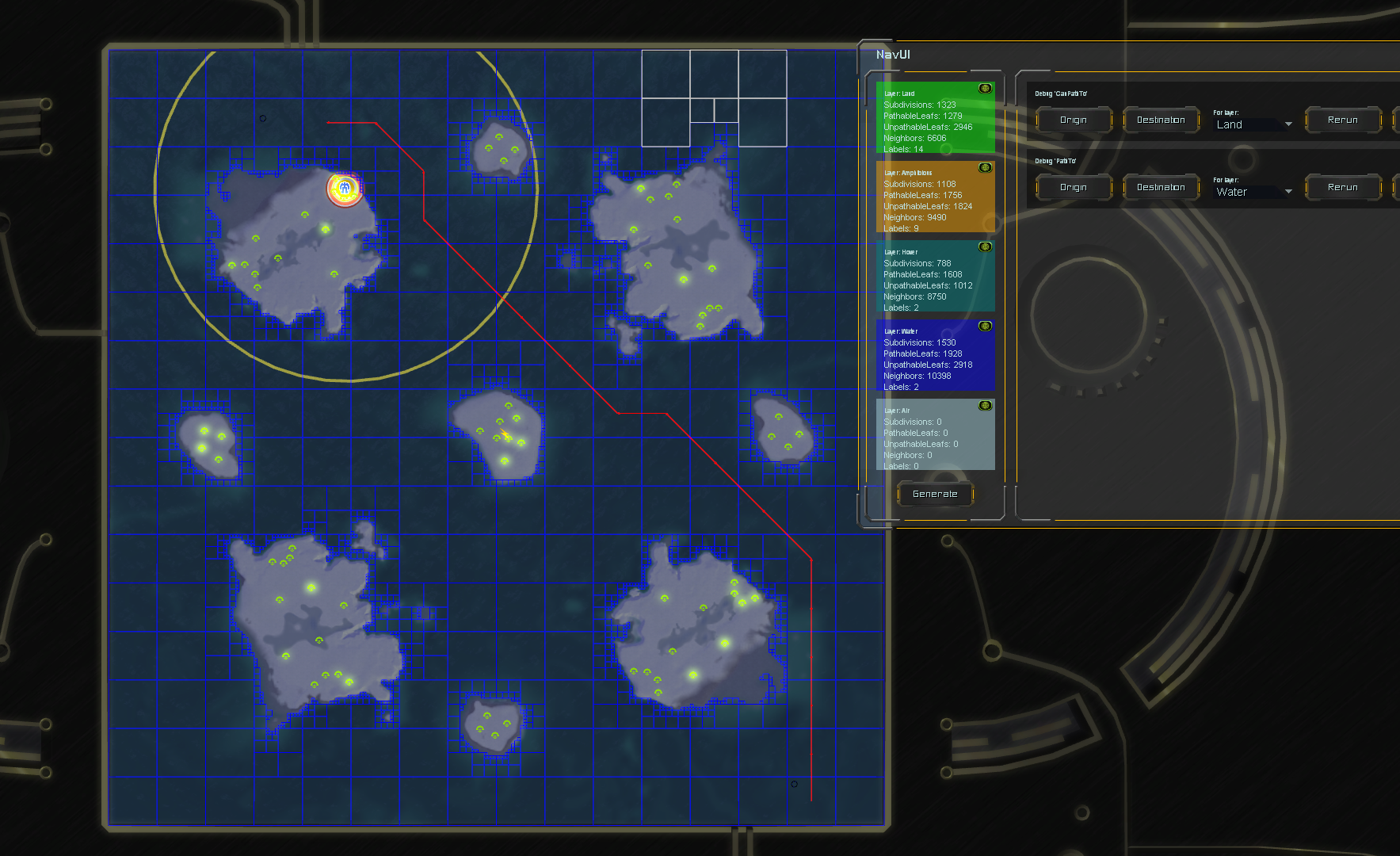

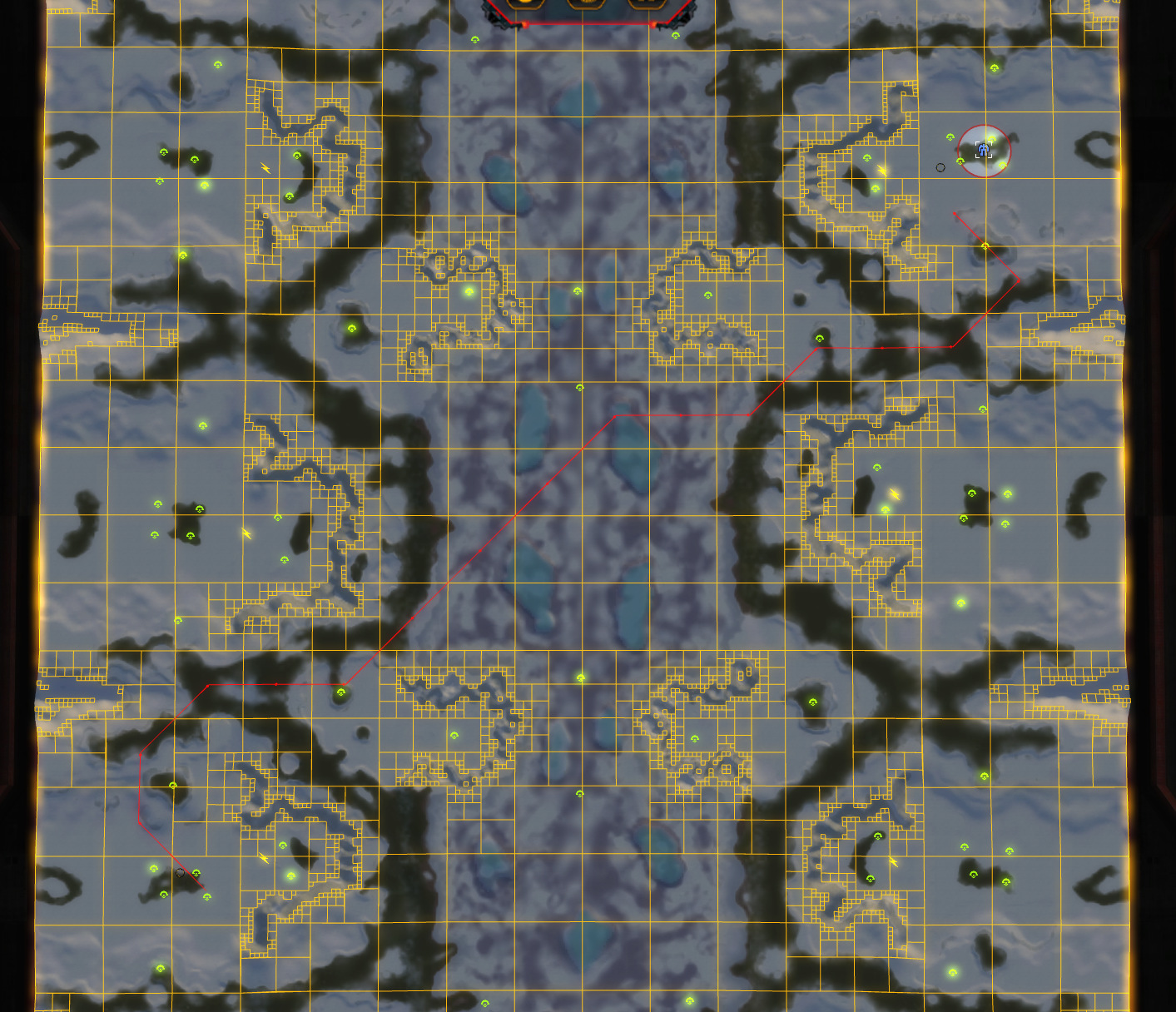

We now have neighbour information stored per leaf of each quad tree. See the image: the white square is the highlighted leaf, and each of its neighbours is drawn with an additional black square inside it. With this, we can start doing path finding!
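As an illustration of what per-leaf neighbour information can look like, here is a brute-force Python sketch. It is an assumption about the idea, not the implementation in this pull request: leaves are modelled as axis-aligned squares, two leaves count as neighbours when they share part of an edge (touching corners do not count), and the `Leaf` class and function names are hypothetical. A real implementation would likely walk the tree instead of comparing every pair.

```python
from dataclasses import dataclass, field


@dataclass
class Leaf:
    x: int                      # left edge of the square
    y: int                      # top edge of the square
    size: int                   # side length
    neighbors: list = field(default_factory=list)  # indices of adjacent leaves


def shares_edge(p, q):
    """True if the squares touch along an edge segment of positive length."""
    overlap_x = min(p.x + p.size, q.x + q.size) - max(p.x, q.x)
    overlap_y = min(p.y + p.size, q.y + q.size) - max(p.y, q.y)
    touch_x = p.x + p.size == q.x or q.x + q.size == p.x
    touch_y = p.y + p.size == q.y or q.y + q.size == p.y
    return (touch_x and overlap_y > 0) or (touch_y and overlap_x > 0)


def link_neighbors(leaves):
    """Store, on every leaf, the indices of the leaves it shares an edge with."""
    for i, p in enumerate(leaves):
        for j in range(i + 1, len(leaves)):
            if shares_edge(p, leaves[j]):
                p.neighbors.append(j)
                leaves[j].neighbors.append(i)


# One large leaf with two small leaves on its right side, plus a detached leaf.
leaves = [Leaf(0, 0, 2), Leaf(2, 0, 1), Leaf(2, 1, 1), Leaf(3, 3, 1)]
link_neighbors(leaves)
```

With the neighbour lists in place, the leaves form a graph that a path finding routine can traverse directly.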

Made a debugging tool for `CanPathTo` to make testing easier. It shows a visual representation of the current state, and the answer you would get in code is visible in the interface.

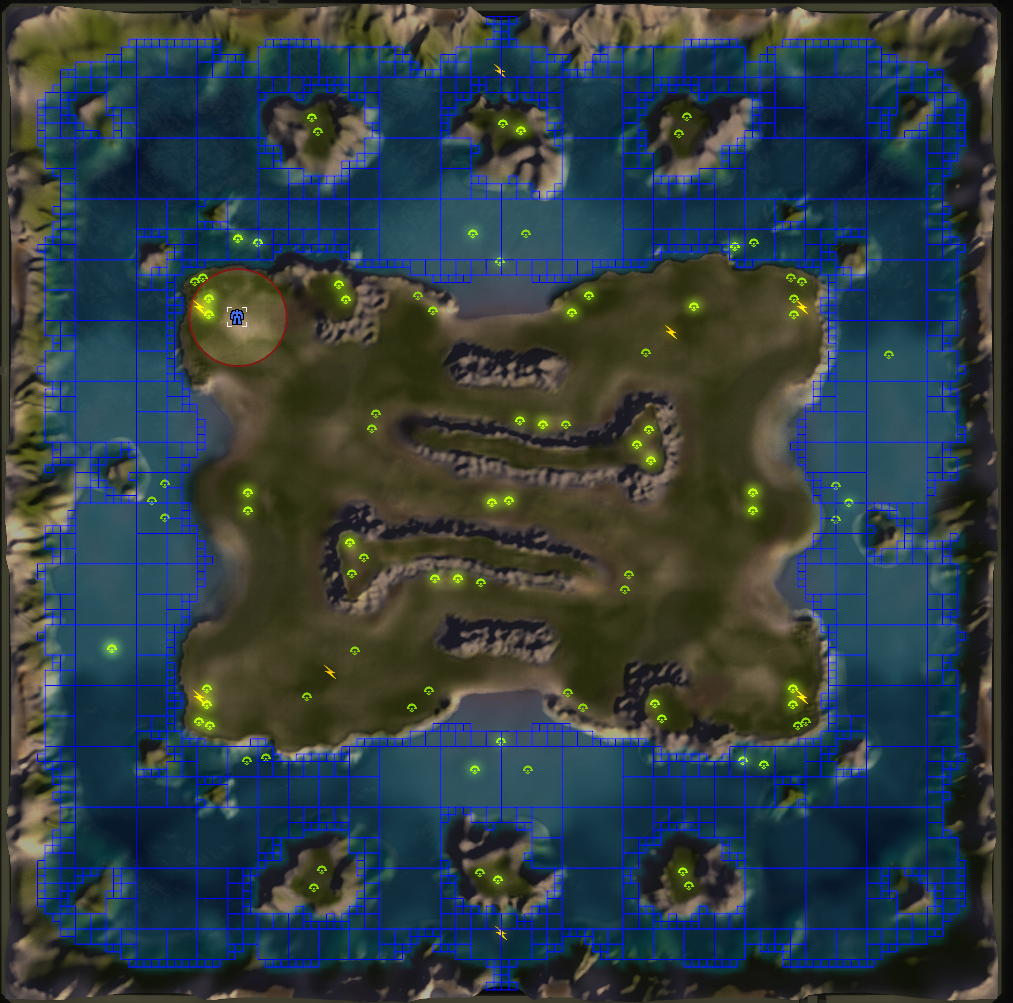

Example of an air grid, along with a path 😄

A few more examples of the current output.