k8s-bigip-ctlr

Virtual Server Default Pool not set by CIS in custom resource mode

Setup Details

- CIS Version: 2.7
- Build: f5networks/k8s-bigip-ctlr:2.7.1
- BIGIP Version: Big IP 15.1.4 Build 0.0.47 Final
- AS3 Version: 3.34.0 Build 4
- Agent Mode: AS3
- Orchestration: OpenShift Container Platform
- Orchestration Version: 4.8.24
- Pool Mode: Nodeport

Description

VirtualServer default pool is not set by CIS deployed in CRD mode

Steps To Reproduce

- Deploy CIS in CRD mode (--custom-resource-mode=true)

- Deploy Virtual Server

Expected Result

I expect "Default Pool" to be selected correctly.

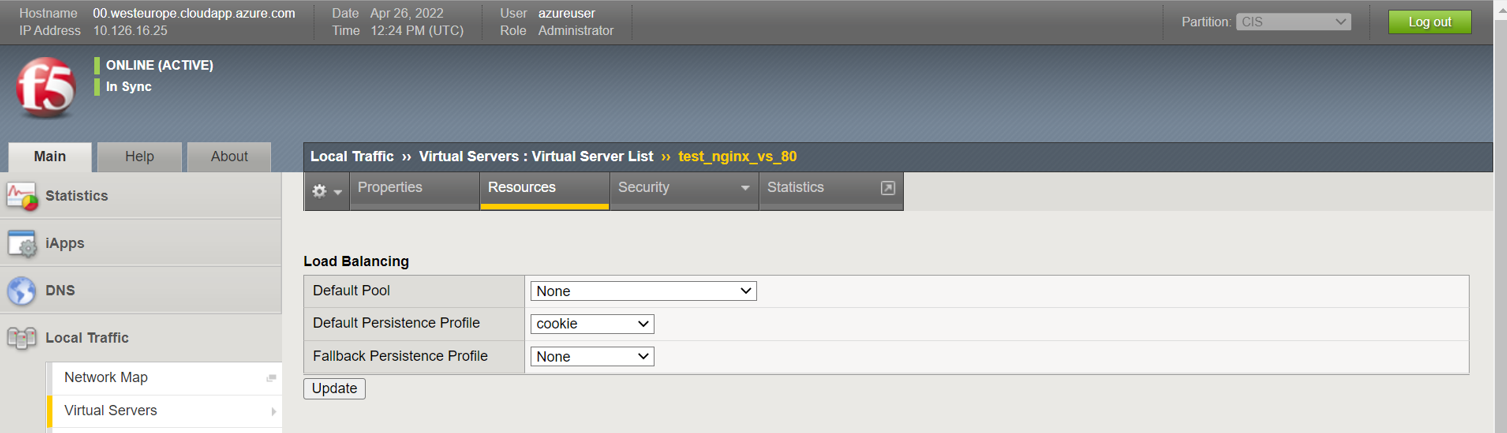

Actual Result

"Default Pool" is set to "None", so the Virtual Server shows Availability: Unknown (Enabled) - The children pool member(s) either don't have service checking enabled, or service check results are not available yet

Diagnostic Information

CIS deployment options:

    --credentials-directory /tmp/creds
    --agent=as3
    --as3-validation=true
    --bigip-partition=CIS
    --bigip-url=https://ltm-azure-cis-test.snam.it
    --insecure=true
    --log-as3-response=true
    --log-level=debug
    --manage-configmaps=false
    --manage-ingress=false
    --manage-routes=false
    --pool-member-type=nodeport
    --share-nodes=true
    --custom-resource-mode=true
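For readers reproducing this setup, the options listed above would typically be passed as container arguments in the CIS Deployment. The following is only a sketch: the flags and image tag are taken from this report, while the Deployment name, namespace, labels, service account, and Secret name are hypothetical placeholders and not part of the original configuration.

```yaml
# Sketch only. Names marked "hypothetical" are assumptions, not from the report.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: k8s-bigip-ctlr              # hypothetical
  namespace: kube-system            # hypothetical
spec:
  replicas: 1
  selector:
    matchLabels:
      app: k8s-bigip-ctlr           # hypothetical label
  template:
    metadata:
      labels:
        app: k8s-bigip-ctlr         # hypothetical label
    spec:
      serviceAccountName: bigip-ctlr    # hypothetical
      containers:
      - name: k8s-bigip-ctlr
        image: f5networks/k8s-bigip-ctlr:2.7.1
        command: ["/app/bin/k8s-bigip-ctlr"]
        args:
        # Flags below are copied from the report.
        - --credentials-directory
        - /tmp/creds
        - --agent=as3
        - --as3-validation=true
        - --bigip-partition=CIS
        - --bigip-url=https://ltm-azure-cis-test.snam.it
        - --insecure=true
        - --log-as3-response=true
        - --log-level=debug
        - --manage-configmaps=false
        - --manage-ingress=false
        - --manage-routes=false
        - --pool-member-type=nodeport
        - --share-nodes=true
        - --custom-resource-mode=true
        volumeMounts:
        # Mounts the BIG-IP credentials where --credentials-directory points.
        - name: bigip-creds
          mountPath: /tmp/creds
          readOnly: true
      volumes:
      - name: bigip-creds
        secret:
          secretName: bigip-login   # hypothetical Secret holding username/password
```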

VIP deployment template:

    apiVersion: cis.f5.com/v1
    kind: VirtualServer
    metadata:
      labels:
        f5cr: "true"
      name: test-nginx
      namespace: f5-test-nginx
    spec:
      pools:
      - monitor:
          interval: 5
          send: "GET /\r\n"
          timeout: 16
          type: http
        path: /
        service: nginx-http
        servicePort: 80
      virtualServerAddress: 10.126.19.73
      virtualServerName: test-nginx-vs

To better explain the problem, this is what I see on the LTM:

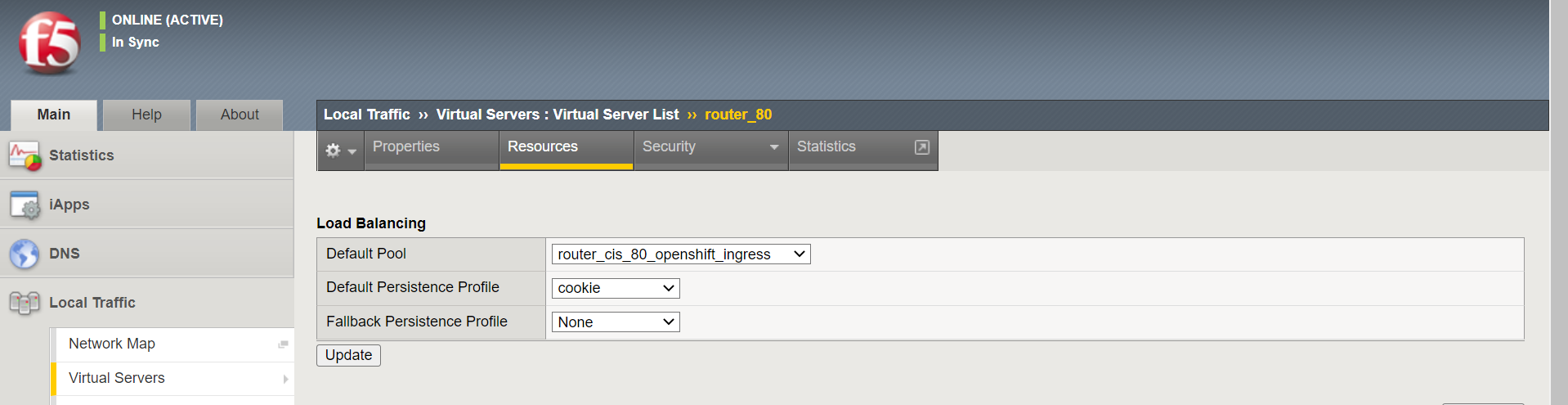

If I manually set the default pool what I get is:

And the status I see is: Availability: Available (Enabled) - The virtual server is available

This configuration does not persist because CIS restores its own configuration after some time. Shouldn't this property be set automatically by CIS? Is there a way to set it in the CIS/CRD Kubernetes configuration?

+1 on the above issue. I observe the same behavior with a very similar configuration.

My VS:

    apiVersion: "cis.f5.com/v1"
    kind: VirtualServer
    metadata:
      name: nginx
      labels:
        f5cr: "true"
    spec:
      virtualServerAddress: "10.138.0.33"
      persistenceProfile: source-address
      pools:
      - path: /
        service: nginx-nodeport
        servicePort: 80
        monitor:
          type: http
          send: "GET /rn"
          recv: ""
          interval: 10
          timeout: 10

My setup details:

- CIS Version: 2.8
- Build: f5networks/k8s-bigip-ctlr:2.8.1
- BIGIP Version: Big IP 15.1.2.1 Build 0.0.10 Point Release 1
- AS3 Version: 3.32.0 Build 4
- Agent Mode: AS3
- Orchestration: Kubernetes v1.20.12
- Pool Mode: Nodeport

This issue might have something in common. In my case configuring TransportServer populates "Default Pool"

+1 on the above issue. I observe the same behavior with a very similar configuration. (...)

Answering myself. As pointed out by @azzauzuaz, the Virtual Server Availability status is "Unknown" because "Default Pool" has no value.

However, in my case traffic is still balanced thanks to the policy that is created when the VirtualServer resource is applied. So even though the virtual server health status is unknown, traffic is still correctly proxied to the Kubernetes service.

It would be nice to be able to set the default pool on the BIG-IP virtual server through the VirtualServer Kubernetes CRD.

+1, I also have this problem. However, everything works for me; it's just a visual bug.

Same issue here. This caused some confusion in our infrastructure. Traffic works fine but looks broken in the F5 Network Map if you look at the overview.

Our setup:

- CIS Build: f5networks/k8s-bigip-ctlr:2.6.1
- BigIP: BIG-IP 15.1.2.1 Build 0.0.10 Point Release 1
- AS3: 3.31.0 Build 6
- Orchestration: Kubernetes v1.21.2
- Pool Mode: ClusterIP

I confirm that the traffic on LTM works correctly, the only problem is the unknown state of the VIP (Virtual Server Availability: Unknown), which results in a "red" state on the GTM that should monitor it. The workaround we found for this problem was moving the application probes from LTM to GTM.

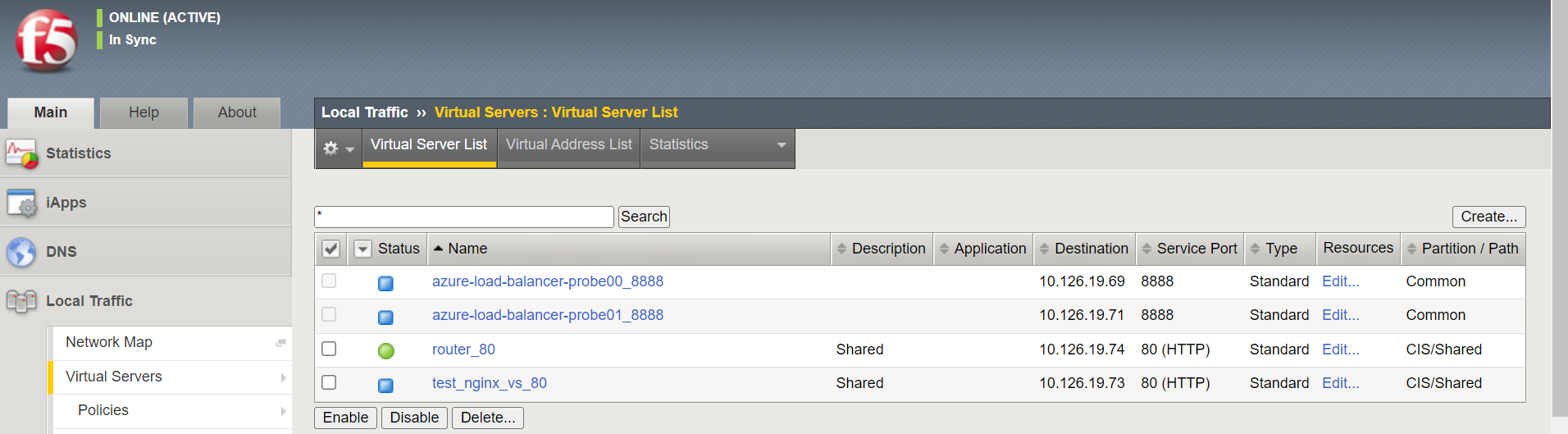

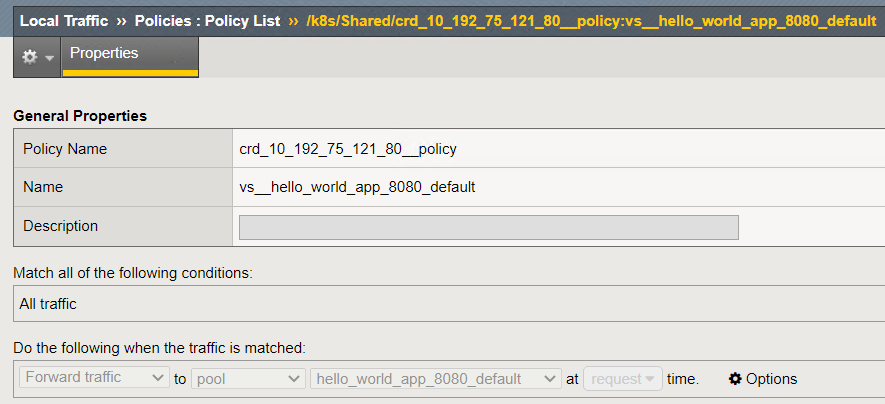

@azzauzuaz going to address your question/issue first

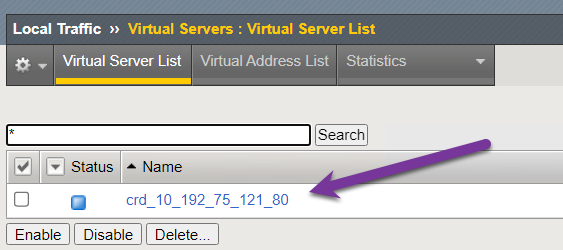

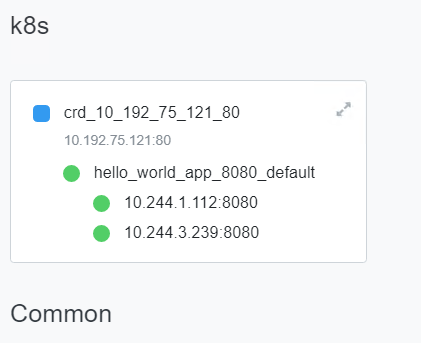

When using a CRD, CIS uses policies, and the pool is associated with the policy rather than with the virtual server. When a policy is used, the virtual server status will always show blue. Example below:

https://github.com/mdditt2000/kubernetes-1-19/tree/master/cis%202.10/k8s/cis/cafe/unsecure

and resource

and policy

@azzauzuaz what you are seeing is correct behavior of BIG-IP. Use a monitor to monitor the backend application from GTM as in my example above

@Creat1v Network map will show as

This is expected behavior when using a VirtualServer CRD or an OCP route. If you use a TransportServer, no policy is created; the TransportServer adds the pool directly to the virtual server.

@cchriso BIG-IP is working correctly. This is not a defect; it is a consequence of the policy used for layer 7 on BIG-IP.

At this time CIS is functioning correctly. Recommend using a TransportServer as an option. No policy is created and therefore the Virtual status will show green.
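As a rough illustration of the TransportServer option, a manifest for the reporter's nginx service might look like the sketch below. The address, service name, and service port are reused from the VirtualServer in this issue; the resource names, `virtualServerPort`, `mode`, `snat`, and the TCP monitor are assumptions, and the field names should be checked against the TransportServer CRD schema for the CIS version in use. Note that a TransportServer works at layer 4, so path-based routing such as `path: /` is not available.

```yaml
# Sketch only: values marked "assumed" or "hypothetical" are not from the issue.
apiVersion: cis.f5.com/v1
kind: TransportServer
metadata:
  labels:
    f5cr: "true"
  name: test-nginx-ts               # hypothetical
  namespace: f5-test-nginx
spec:
  virtualServerAddress: "10.126.19.73"
  virtualServerPort: 80             # assumed listener port
  virtualServerName: test-nginx-ts  # hypothetical
  mode: standard                    # assumed
  snat: auto                        # assumed
  pool:
    service: nginx-http
    servicePort: 80
    monitor:
      type: tcp                     # assumed; layer 4 health check
      interval: 5
      timeout: 16
```

Because no policy is involved, the pool in this sketch would be attached to the virtual server directly, which is what lets BIG-IP derive the virtual server status from the pool.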

This issue can be closed out at this time.

In this case, when all backends are down, the pool has the "Offline" status, but the virtual server is unaware of this and has an "Unknown" status. This is a problem because it is necessary to prevent traffic from being directed to the virtual server in this situation.