Inference based on YourTTS-zeroshot-VC-demo

Hi!

I tried to inference some audios using files that were generated by training vits model on ljspeech dataset.

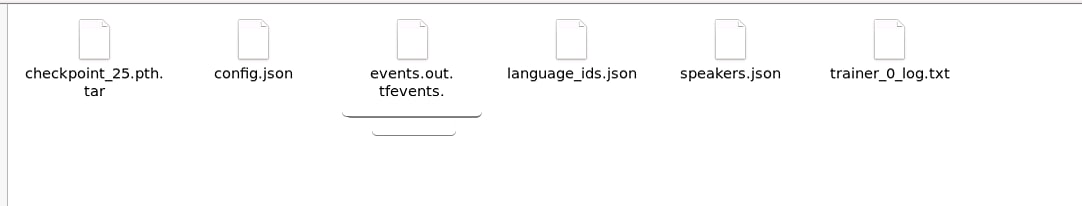

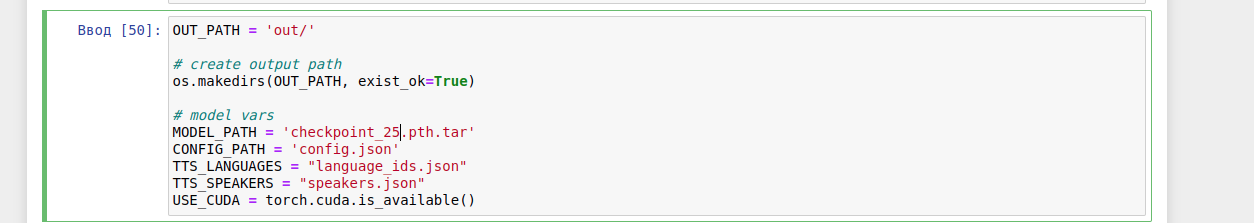

I substituted files in the YourTTS-zeroshot-VC-demo for my own

I substituted files in the YourTTS-zeroshot-VC-demo for my own

However I noticed that your model checkpoint 'best_model.pth.tar' weighs 380 Mb, while my checkpoint_25.pth.tar weighs 1.1Gb, even with mixed_precision = true.

However I noticed that your model checkpoint 'best_model.pth.tar' weighs 380 Mb, while my checkpoint_25.pth.tar weighs 1.1Gb, even with mixed_precision = true.

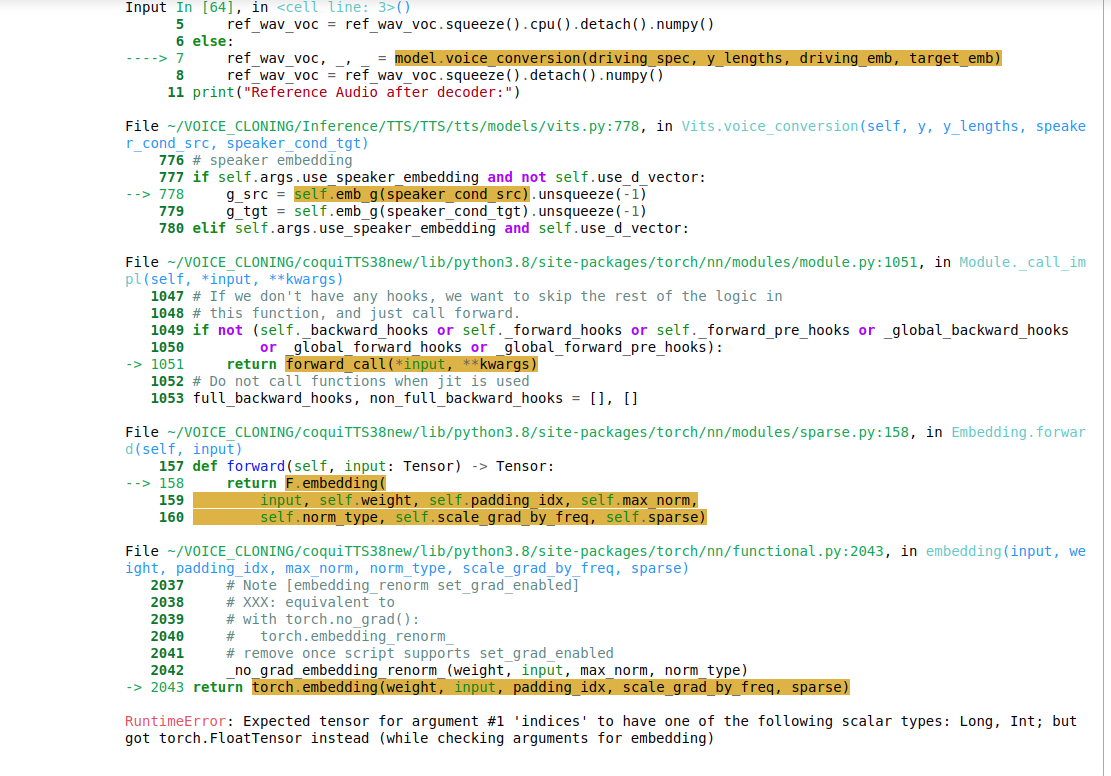

So I upload and prepare audio files and make target_emb, driving_emb and driving_spec, but when I try to convert the voice I get an error: RuntimeError: Expected tensor for argument #1 'indices' to have one of the following scalar types: Long, Int; but got torch.FloatTensor instead (while checking arguments for embedding)

Did anyone else run into such problem? Please, help

Hi, Looks like that use_d_vector is false. So it means that you are not using external speakers embeddings. The demo provided only works for external speaker embeddings training. You need to modify it, instead of providing speaker embeddings (that is a Float Tensor) you must provide a Long tensor with the target speaker id.