website: improve information from search result

Description

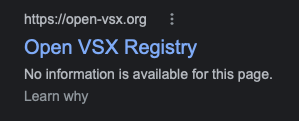

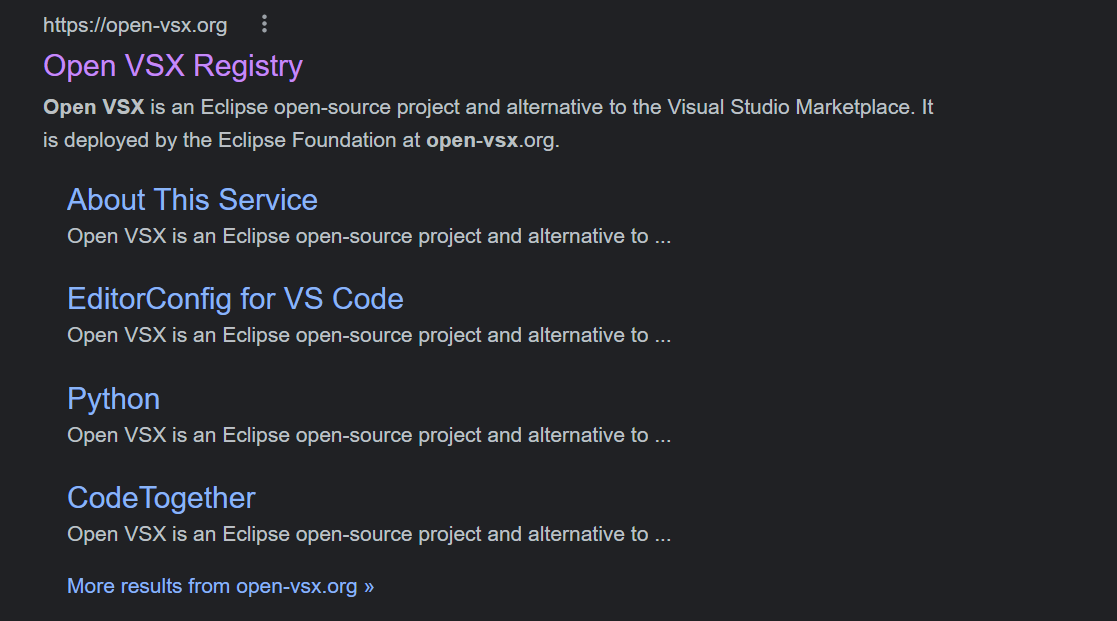

The search result for https://open-vsx.org is not very informative for newcomers. It would be better if it explained what the site is about; at the moment there is no mention of extensions, VS Code, or open source.

Additional Information

Working with @chrisguindon, it looks like the meta tags in index.html are incorrect.

I'll submit a PR to replace

<!-- Google Meta Tags -->

<meta itemprop="name" content="Open VSX Registry">

<meta itemprop="description" content="Open VSX is a vendor-neutral open-source alternative to the Visual Studio Marketplace. It provides a server application that manages VS Code extensions in a database, a web application similar to the VS Code Marketplace, and a command-line tool for publishing extensions similar to vsce.">

<meta itemprop="image" content="https://open-vsx.org/openvsx-preview.png">

with

<meta name="description" content="Open VSX is an Eclipse open-source project and alternative to the Visual Studio Marketplace. It is deployed by the Eclipse Foundation at open-vsx.org.">

<meta name="keywords" content="eclipse,ide,open source,development environment,development,vs code,visual studio code,extension,plugin,plug-in,registry,theia">

I changed the description to fit within 150 chars.
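The length claim is easy to verify. A minimal Python sketch, using the description string from the proposed tag above; the 150-character target is the figure mentioned in this comment, not a limit documented by any search engine:

```python
# Description text copied from the proposed <meta name="description"> tag.
description = (
    "Open VSX is an Eclipse open-source project and alternative to the "
    "Visual Studio Marketplace. It is deployed by the Eclipse Foundation "
    "at open-vsx.org."
)

# Assumption: 150 is the target quoted in this thread, not an official cutoff.
MAX_LENGTH = 150


def fits(text: str, limit: int = MAX_LENGTH) -> bool:
    """Return True when the text is no longer than the limit."""
    return len(text) <= limit


print(len(description), fits(description))
```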

@kineticsquid I wouldn't replace the Google meta tags; I would simply add the new ones that you have in your comment.

@kineticsquid I missed that you said you were going to submit a PR.

I already made one: https://github.com/EclipseFdn/open-vsx.org/pull/1256

Feel free to close it if you were planning to make more changes.

Thanks @chrisguindon, I approved and merged the PR

@kineticsquid any idea when the change goes live? At least on my end the google result still doesn't show information.

@vince-fugnitto It will be in the next deploy after this one that's currently underway: https://github.com/EclipseFdn/open-vsx.org/issues/1293.

I can see the metadata updates after this recent deploy, but the search results remain unchanged. Is there a mechanism to tell the bots to update?

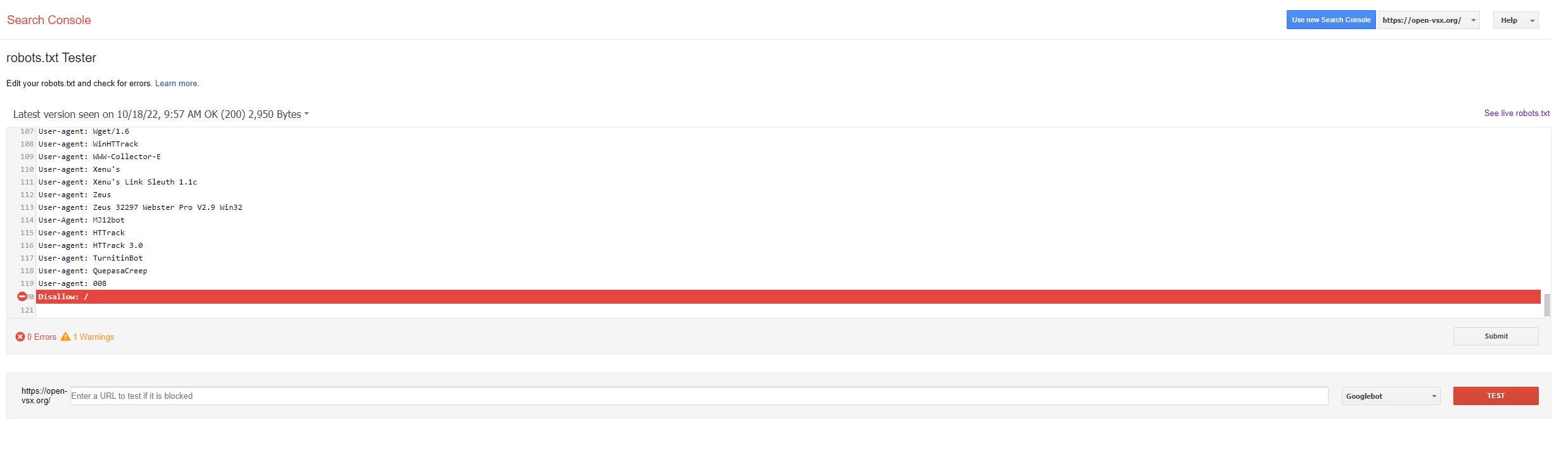

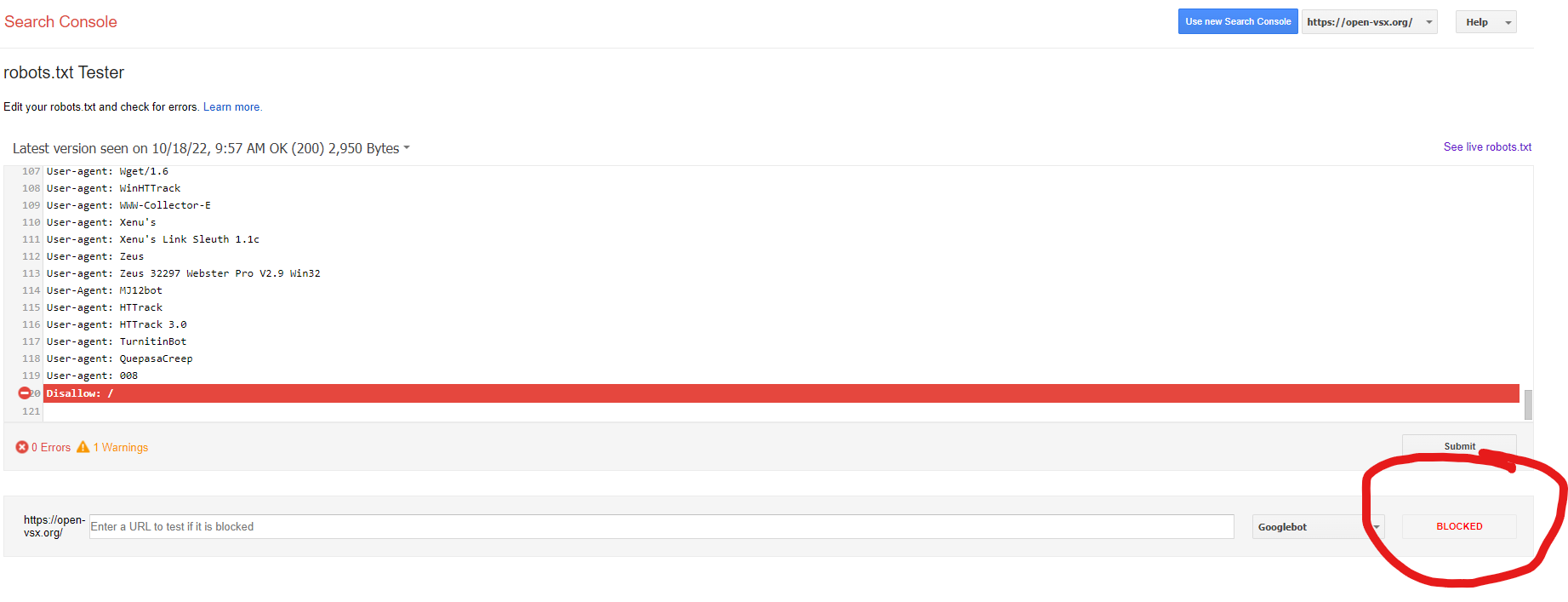

@kineticsquid Looks like there is an issue with the robots.txt file: https://open-vsx.org/robots.txt

Disallow: /

This is stopping the site from being indexed.

@chrisguindon I see that same line at the end of eclipse.org/robots.txt. I tried running that file through the Google analyzer, but I don't know enough or have the right access.

I have access, and it reports that the page is blocked by our robots.txt file. Here's an updated screenshot where the tool says that the page is blocked. This is true for all pages on the site, not just the homepage.

@chrisguindon Right, sorry, my point was that if this line, Disallow: / is blocking pages on open-vsx.org from being crawled, why is the same line in eclipse.org/robots.txt not having the same effect?

I was wondering the same thing and did a bit of research this morning and found the explanation:

The instructions (entries) in robots.txt always consist of two parts. In the first part, you define which robots (user-agents) the following instruction apply for. The second part contains the instruction (disallow or allow). "user-agent: Google-Bot" and the instruction "disallow: /clients/" mean that Google bot is not allowed to search the directory /clients/.
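The two-part structure described above can be tried out with Python's standard-library `urllib.robotparser`. This is only a sketch: it shows how Python's parser reads the quoted example, and other crawlers may interpret a file differently. The host `example.com`, the agent name `OtherBot`, and the helper `allowed` are placeholders introduced for illustration.

```python
from urllib.robotparser import RobotFileParser

# The entry quoted above: first who it applies to, then the instruction.
ROBOTS_TXT = """\
User-agent: Google-Bot
Disallow: /clients/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent: str, path: str) -> bool:
    """Ask the parser whether `agent` may fetch `path` (example.com is a placeholder host)."""
    return parser.can_fetch(agent, "https://example.com" + path)


print(allowed("Google-Bot", "/clients/acme"))  # inside the disallowed directory
print(allowed("Google-Bot", "/about"))         # outside it
print(allowed("OtherBot", "/clients/acme"))    # agent not named in the entry
```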

If we look at https://www.eclipse.org/robots.txt, we quickly notice that it has 2 blocks. The first one tells all user-agents not to crawl the forums and a few other sections such as search and download.php. The second block tells a number of specific user-agents, including QuepasaCreep and TurnitinBot, not to index any part of the site (Disallow: /).

Now, if we look at https://open-vsx.org/robots.txt, we notice that it has only 1 disallow instruction, and it applies to User-agent: * together with the full list of crawlers. They are not separated into 2 blocks of instructions like the ones on eclipse.org. The open-vsx version basically instructs all bots NOT to index open-vsx.org.

@chrisguindon let me preface this with, my knowledge here is thin...

In https://www.eclipse.org/robots.txt, are the two blocks separated by the empty line? If yes, the top block says everyone can scan everything except what's listed in the Disallow: statements. The second block says that the bots listed in it can't scan anything.

In https://open-vsx.org/robots.txt, I see:

User-agent: *

Crawl-delay: 2

User-agent: Alexibot

...

That also looks like two blocks. The top one says everyone can scan everything, with essentially some rate limiting. The second is similar to the second from https://www.eclipse.org/robots.txt. Is that right?

@kineticsquid I don't think the empty line matters for Google. I am saying that based on the testing I did via: https://github.com/EclipseFdn/open-vsx.org/issues/1240#issuecomment-1298723834

That tool is reporting that Google is not indexing the site because of our robots.txt file.

My understanding is that each section in the robots.txt file is made up of 2 parts. The first part designates the WHO (which bot/user-agent is affected), and the second part is the instruction, which is usually a disallow or allow command.

This is my interpretation and it seems to align with what I am seeing from parsing the https://open-vsx.org/robots.txt

I did some testing with a different tool: https://technicalseo.com/tools/robots-txt/

That site reports that the Crawl-delay: 2 rule is ignored by Googlebot. Maybe that's the reason why the robots.txt is not working as we expect. The instruction in the first block is ignored, so all the user-agents end up in the same group.
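The hypothesis above can be modelled in a few lines of Python. This is a simplified sketch, not Google's actual parser: it assumes that only `user-agent`, `allow`, and `disallow` lines are recognised, that every other line (including `Crawl-delay` and blank lines) is skipped, and that consecutive `user-agent` lines share one group. The two sample files are abbreviated illustrations; the `/forums/` path and the function name `group_rules` are assumptions made for the example.

```python
SUPPORTED = {"user-agent", "allow", "disallow"}


def group_rules(robots_txt: str) -> list[tuple[list[str], list[tuple[str, str]]]]:
    """Group rules the way the hypothesis describes (simplified model)."""
    groups = []
    agents, rules = [], []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue  # blank lines do not end a group in this model
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in SUPPORTED:
            continue  # e.g. Crawl-delay is skipped as if it were absent
        if field == "user-agent":
            if rules:
                # A user-agent line that follows rules starts a new group.
                groups.append((agents, rules))
                agents, rules = [], []
            agents.append(value)
        elif agents:
            rules.append((field, value))
    if agents:
        groups.append((agents, rules))
    return groups


# Abbreviated shape of the open-vsx.org file as quoted in this thread.
OPEN_VSX_STYLE = """\
User-agent: *
Crawl-delay: 2

User-agent: Alexibot
Disallow: /
"""

# Abbreviated, illustrative shape of the eclipse.org file (path is an assumption).
ECLIPSE_STYLE = """\
User-agent: *
Disallow: /forums/

User-agent: TurnitinBot
Disallow: /
"""

print(group_rules(OPEN_VSX_STYLE))
print(group_rules(ECLIPSE_STYLE))
```

Under these assumptions the first file collapses into a single group in which `*` shares `Disallow: /` with Alexibot, while the second keeps two separate groups because a real rule sits between the two `User-agent` lines.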

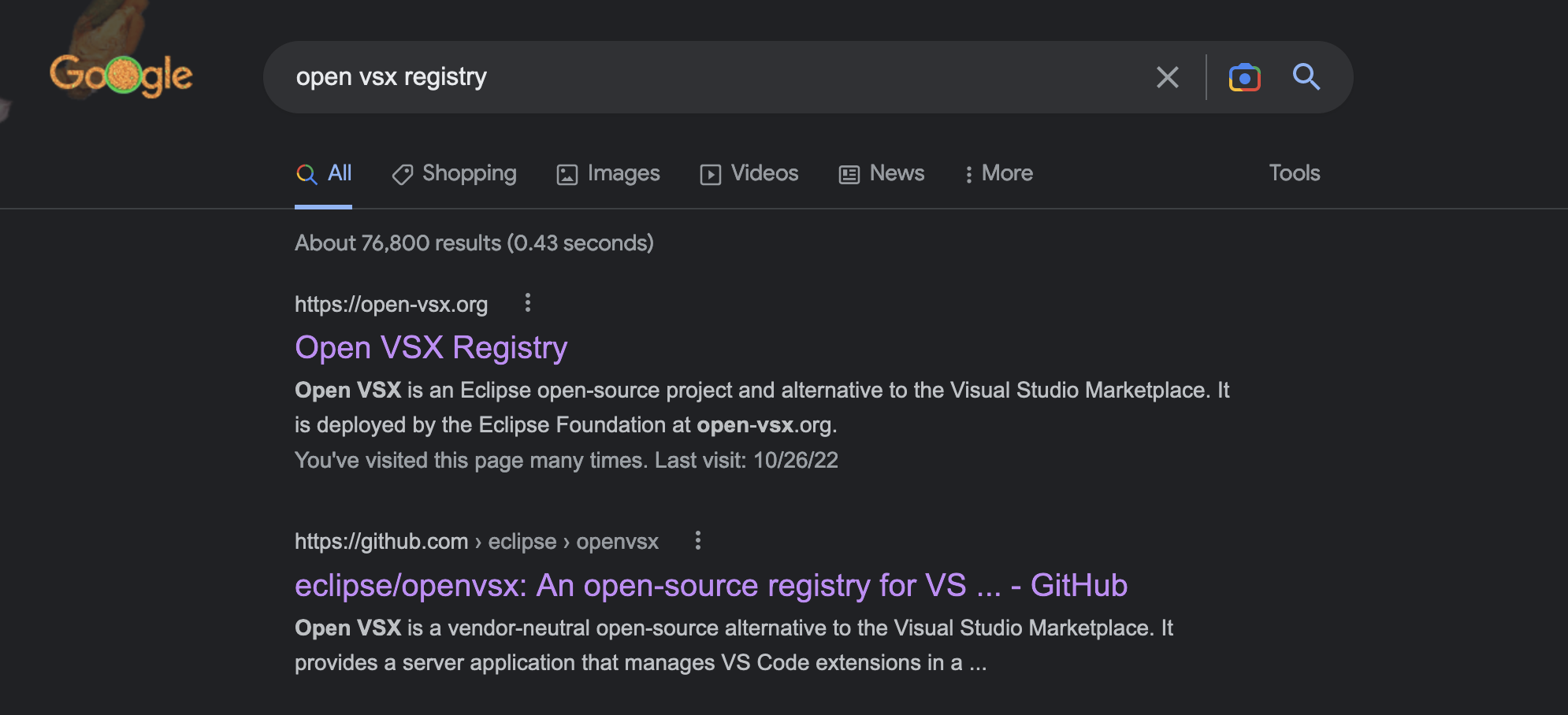

Now with some extensions... However, I would recommend that the application is upgraded to include a different description per page.

As you can see in my screenshot, the same description "Open VSX is an Eclipse open-source project and alternative to ... " is used for each page and that's not ideal.

For those following this thread, the indexing issue was addressed here: https://gitlab.eclipse.org/eclipsefdn/helpdesk/-/issues/2190

@chrisguindon Good observation. You mean, e.g. with this page https://open-vsx.org/extension/ms-toolsai/jupyter, we'd generate different text for the meta tags (presumably based on the plug-in information):

<meta name="description" content="Open VSX is an Eclipse open-source project and alternative to the Visual Studio Marketplace. It is deployed by the Eclipse Foundation at open-vsx.org.">
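One way to picture a per-page description is the Python sketch below. It is not how the Open VSX web UI is implemented: the function `meta_description`, its parameters, and the sample values are hypothetical, and the 150-character target is simply the figure used earlier in this thread.

```python
from html import escape

# Assumption: reuse the 150-character target mentioned earlier in the thread.
MAX_LENGTH = 150


def meta_description(display_name: str, summary: str, limit: int = MAX_LENGTH) -> str:
    """Build a description meta tag from (hypothetical) extension metadata."""
    # Collapse runs of whitespace so the tag stays on one line.
    text = " ".join(f"{display_name}: {summary}".split())
    if len(text) > limit:
        # Cut at a word boundary and mark the truncation.
        text = text[: limit - 3].rsplit(" ", 1)[0] + "..."
    # Escape quotes and angle brackets so the attribute stays well-formed.
    return f'<meta name="description" content="{escape(text, quote=True)}">'


# Hypothetical extension data, for illustration only.
print(meta_description("Example Extension", 'Adds "smart" <tags> to your editor'))
```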

+1

Google is starting to show the extension description (Prisma, Astro).

Server-side rendering of the web UI (https://github.com/eclipse/openvsx/issues/8) can still help pages rank higher in search results because it speeds up page indexing.

This is awesome @amvanbaren. I am sure this is going to help users find the open-vsx website!

@amvanbaren @chrisguindon we good to close this one?

+1

Ditto to @chrisguindon's comments, this is really nice work!