graphql-ruby-fragment_cache

graphql-ruby-fragment_cache copied to clipboard

graphql-ruby-fragment_cache copied to clipboard

Use multi read when possible

Hi there! I've been doing some testing in a pet project to see if we could integrate this great gem into another project.

We've encountered this very issue though, and the performance hit would be pretty bad due to the N+1's.

We're using Redis, so I've tried to fiddle around with the code trying to see if I could get it to use MGET with all the required keys. Tried to use BatchLoader for this as it worked very nicely before in solving this kind of issues, but I'm now stuck doing N+1 MGET calls. :sweat_smile:

# something like this (temporarily deleted previous lines)

def read(keep_in_context = false)

BatchLoader.for(cache_key).batch do |cache_keys, loader|

FragmentCache.cache_store.read_multi(*cache_keys).each do |key, value|

loader.call(key, value)

end

end

end

resulting in:

Started POST "/graphql" for ::1 at 2021-06-03 15:03:59 +0300

Processing by GraphqlController#execute as */*

Parameters: {"query"=>"{\n users {\n id\n name\n createdAt\n updatedAt\n computedUuid\n }\n}", "variables"=>nil, "graphql"=>{"query"=>"{\n users {\n id\n name\n createdAt\n updatedAt\n computedUuid\n }\n}", "variables"=>nil}}

(0.1ms) SELECT sqlite_version(*)

↳ app/controllers/graphql_controller.rb:18:in `execute'

User Load (0.1ms) SELECT "users".* FROM "users"

↳ app/controllers/graphql_controller.rb:18:in `execute'

Redis (0.18ms) [ MGET <BINARY DATA> ]

Redis (0.11ms) [ MGET <BINARY DATA> ]

Redis (0.11ms) [ MGET <BINARY DATA> ]

Redis (0.12ms) [ MGET <BINARY DATA> ]

Redis (0.11ms) [ MGET <BINARY DATA> ]

Redis (0.12ms) [ MGET <BINARY DATA> ]

Redis (0.11ms) [ MGET <BINARY DATA> ]

Redis (0.14ms) [ MGET <BINARY DATA> ]

Redis (0.15ms) [ MGET <BINARY DATA> ]

Redis (0.12ms) [ MGET <BINARY DATA> ]

Completed 200 OK in 26ms (Views: 0.8ms | ActiveRecord: 0.4ms | Redis: 1.3ms | Allocations: 21242)

I'm up for giving a hand in optimizing this, but I think I need some hints toward the right direction.

How do you think this should be handled @DmitryTsepelev?

Hi @alex-damian-negru! I believe we could use lazy resolving here (it's used by the batch loader under the hood too).

This is how library works now:

- There is an extension that wraps field execution with the cache_fragment method.

- This method tries to get cached value or resolves the field. Fragment class remembers the field to cache, while library itself keeps track of fragments.

- There is an instrumentation that waits for GQL to complete execution

- When execution is completed Cacher goes through fragments and persists them

I guess we should do the following:

- Mark fields with

cache_fragmentas lazy - Register a special lazy resolver for such fields

- Lazy resolver should be able to read all keys it got from Redis

The only problem I see is that when someone uses a cache_fragment helper inside the method—we probably won't be able to mark this field as lazy. We need to find a workaround for that (at least, it should keep working as it works now).

Hi @DmitryTsepelev!

I picked this repo from a task on the Cult of Martians, and it's been an interesting code dive for me both into this repo as well as into graphql-ruby.

Can you take a look at my implementation of lazy "batching" (since it kinda makes cache reads get executed lazily at the end, but still all close to each other) in this commit?

I'm currently thinking on how to make a real #read_multi, can you tell me what you think of the following idea:

- I'd like to store all cache keys to be resolved in the context (

query_ctx[:lazy_cache_resolver_keys]) - Once one of the LazyCacheResolvers starts resolving, it resolves all keys in the context

module GraphQL

module FragmentCache

module Schema

using Ext

class LazyCacheResolver

def initialize(query_ctx, object)

@cache_key = object._graphql_cache_key

@lazy_state = query_ctx[:lazy_cache_resolver_keys] ||= {

pending_keys: Set.new,

resolved_keys: {}

}

@lazy_state[:pending_keys] << @cache_key

end

def resolve

resolved_key = @lazy_state[:resolved_keys][@cache_key]

if resolved_key

resolved_key

else

resolved_key_vals = Fragment.read_multi(@lazy_state[:pending_keys].to_a) # a static method

@lazy_state[:pending_keys].clear

resolved_key_vals.each { |key, value| @lazy_state[:resolved_keys][key] = value }

@lazy_state[:resolved_keys][@cache_key]

end

end

end

end

end

end

@DmitryTsepelev can you take a look at a PR with my MVP solution?

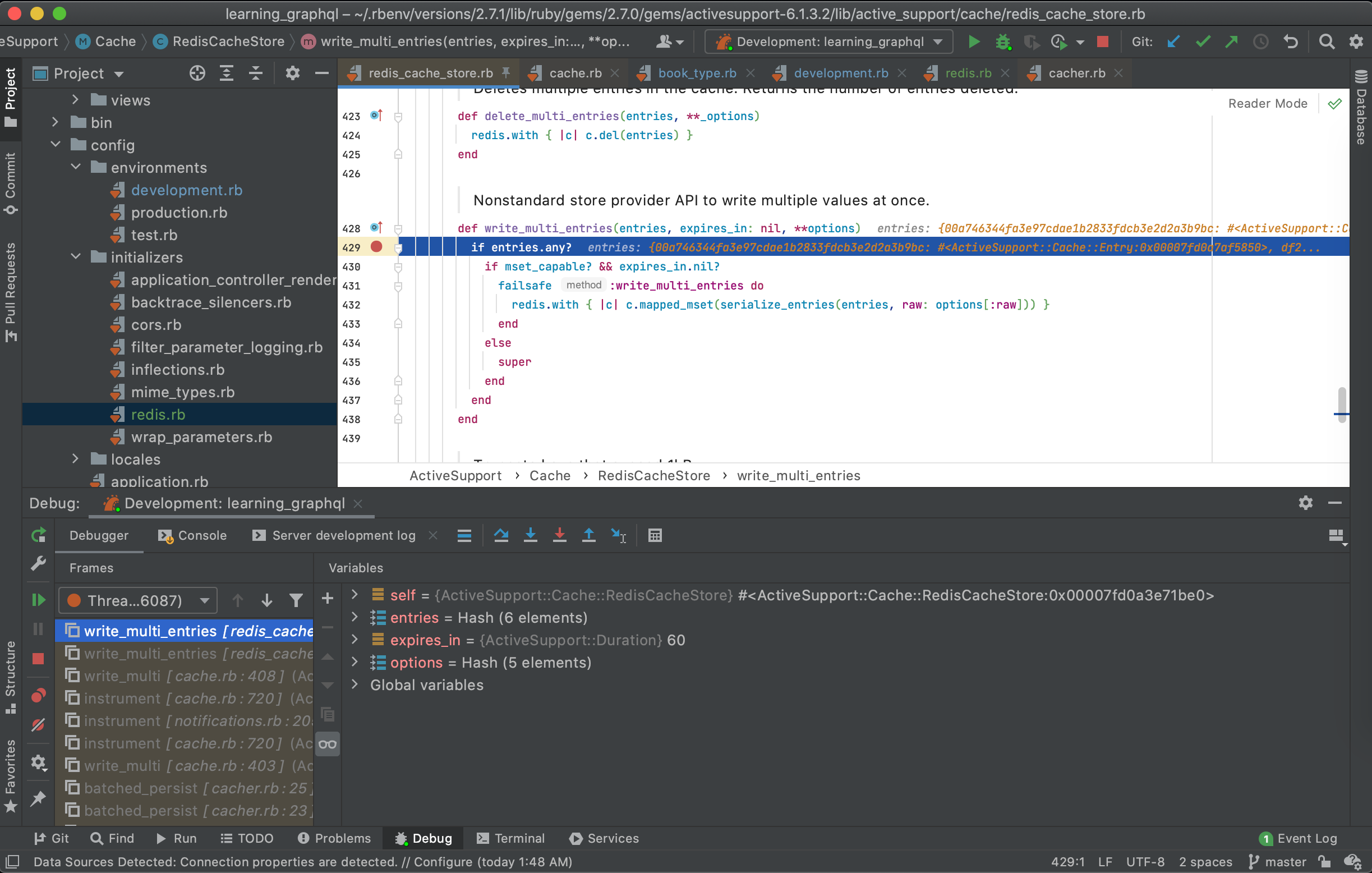

I've debugged all the way into the ActiveSupport RedisCacheStore implementation, and it currently hits the write_multi implementation of Redis.

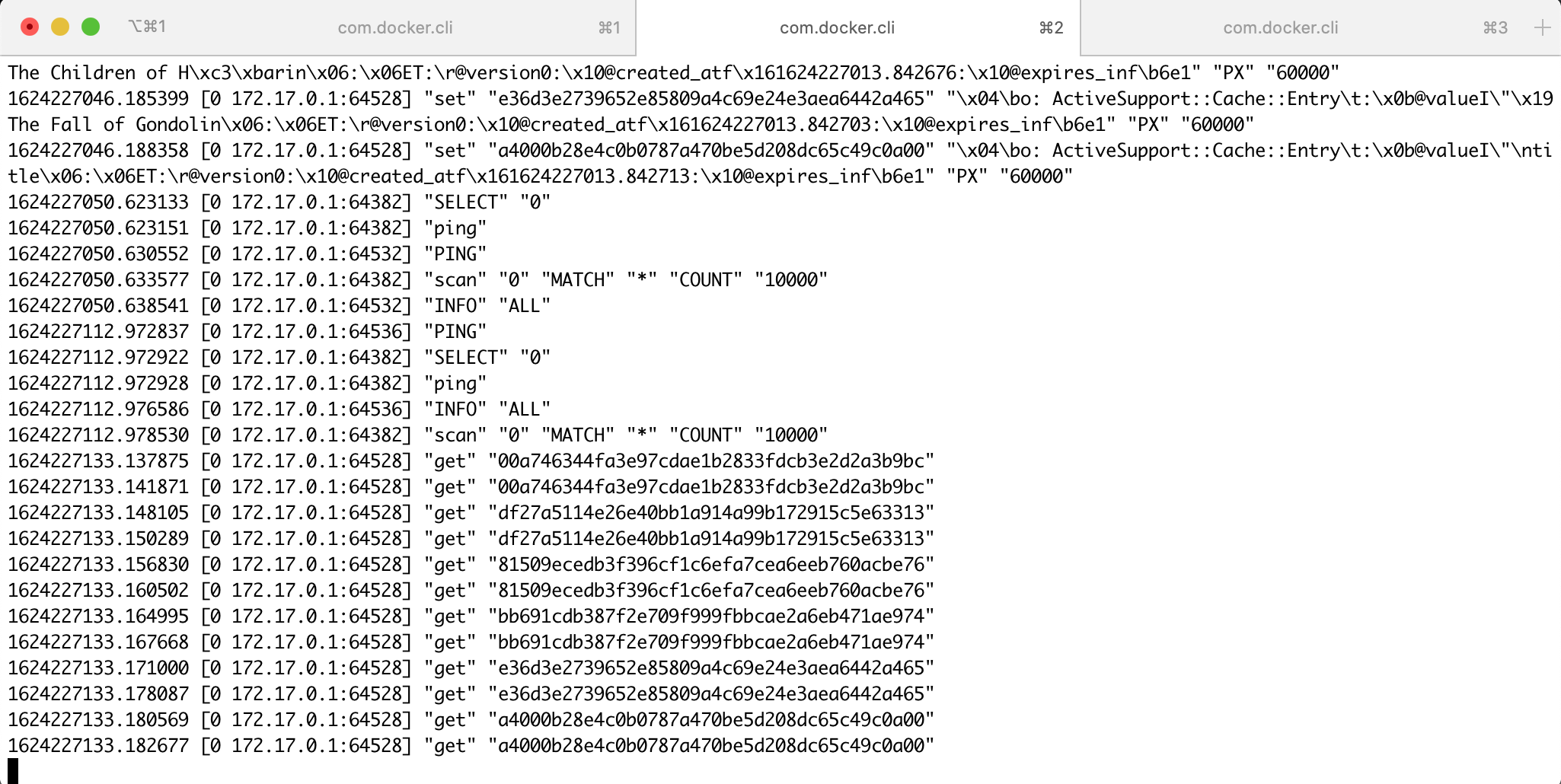

I wonder though why it does multiple GETs instead of MGET:

Docs say that write/read_multi use MSET and MGET, so I'm a little puzzled

Hi @daukadolt! Sorry for the silence, I'm a bit overloaded these days, will do my best to take a look this week 😞