loguru


With multiple threads, when the logger rotates, the logs are split across separate log files according to thread.

When rotation happens, I want the logs from all threads to still go to only one log file. How can I do that?

Hi.

Sorry but it's not clear to me: do you get an unexpected result (like a bug) or do you want to update the rotation behavior (like a feature)?

My configuration:

```python
import os

from loguru import logger

# LOGS_PATH is defined elsewhere in the application
logger.add(
    os.path.join(LOGS_PATH, 'mq.chat.log'),
    filter=lambda record: record["extra"]["name"] == "mq_log",
    enqueue=True,
    level='DEBUG',
    rotation='00:00',
    retention='10 days',
    encoding="utf-8",
    colorize=True,
    format="{time:YYYY-MM-DD HH:mm:ss} | {level} | pid:{process} | threadID:{thread} | {module} | {function} | {line} "
    "| {message} ",
)
```

It's actually multiprocess.

At first, the mq_log logs of all processes are written to the same file. But after rotation, it seems that each process writes its logs to a separate log file.

I see. You're probably facing a problem with multiprocessing causing the same file sink to be added multiple times.

It seems to work well at first, since all the logs are written in the same file. But at the time of rotation, each sink will try to create a new file without overwriting the others, which causes a lot of new files to be created unexpectedly.

This is a common problem when multiprocessing and loguru are combined incorrectly.

Take a look at this part of the documentation: Compatibility with multiprocessing using enqueue argument.

I don't know the details of your application, but you must make sure that logger.add() is only called once, and not one time per process.

I use supervisord to start the processes:

```ini
[program:consumer_1]
command=python -u -m router.mq.chat
stdout_logfile=/dev/fd/1
stdout_logfile_maxbytes=0
stderr_logfile=/dev/fd/2
stderr_logfile_maxbytes=0
autostart=true
autorestart=true

[program:consumer_2]
command=python -u -m router.mq.chat
stdout_logfile=/dev/fd/1
stdout_logfile_maxbytes=0
stderr_logfile=/dev/fd/2
stderr_logfile_maxbytes=0
autostart=true
autorestart=true
```

Initial configuration:

```python
logger.add(
    os.path.join(LOGS_PATH, 'mq.chat.log'),
    filter=lambda record: record["extra"]["name"] == "mq_log",
    enqueue=True,
    level='DEBUG',
    rotation='00:00',
    retention='10 days',
    encoding="utf-8",
    colorize=True,
    format="{time:YYYY-MM-DD HH:mm:ss} | {level} | pid:{process} | threadID:{thread} | {module} | {function} | {line} "
    "| {message} ",
)
```

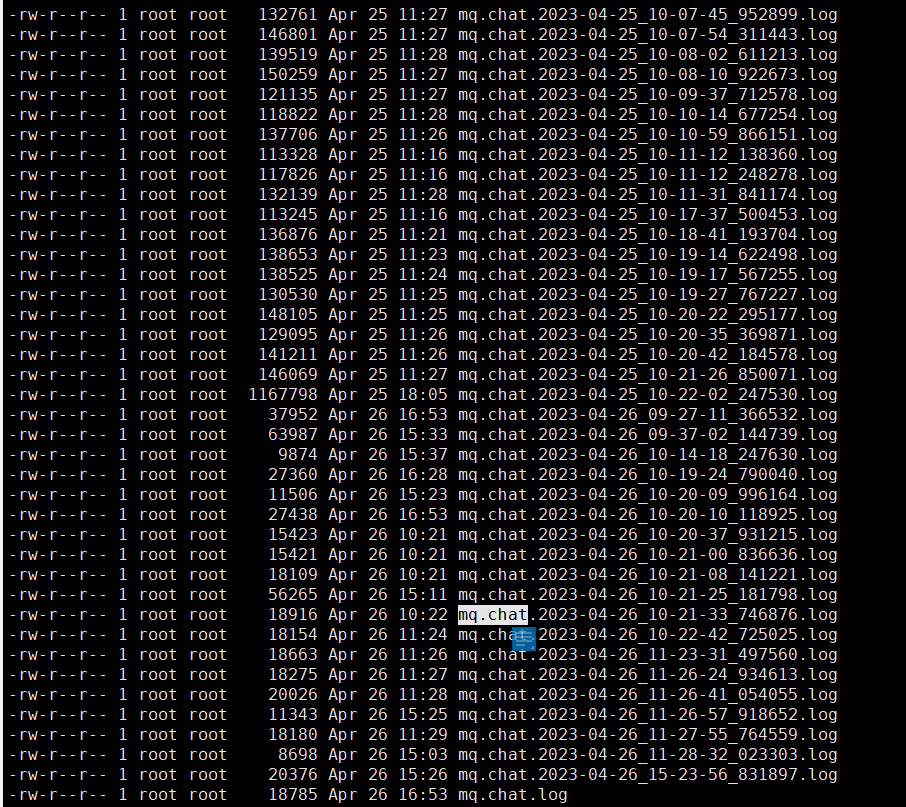

result:

Expectation: after rotation, the logs from all processes are still written to a single file, so there is only one log file per day.

Given that supervisord implements its own log rotation mechanism, I would advise using that rather than configuring rotation in Loguru.

Managing multi-processing is tricky. Loguru is not aware of how supervisord start and stop processes, which makes things even more difficult. For it to work well with Loguru, you need to:

- make sure `logger.add()` with `enqueue=True` is called exactly once by the main process (and not by the children)
- make sure the child processes inherit the `logger` object of the main process when they are started

I'm working on a solution to make it easier, but in the meantime, you have to be very careful that the logging configuration is correct.

But `logger.add()` is called every time I start a process.

According to the docs, it seems supervisord will capture stdout and stderr of child processes. Therefore, maybe you just need to call logger.add(sys.stderr) in each process.


I have encountered the same problem. Is there a solution available?

@ddz-mark Not really. I'll add a new method to ease interoperability with multiprocessing, but it's not yet available. For now, you must make sure logger.add() is only called in your main process and that the logger is properly inherited by your child processes.

I'm also facing the same issue. I have a Django service that runs in a Windows environment, and the logger is configured in a middleware class.

The logs keep spilling into other files. Here is the log file:

But when you check it, it contains different logs.

Also, the file name isn't created with the right date stamp on rotation: my-service_2024-01-13.2024-01-17_09-18-50_302220. It somehow retains the date from when the service was restarted or an update was deployed. Here is the middleware. Am I missing something in the config?

```python
import datetime
import os
import sys
from time import gmtime, strftime

from loguru import logger


# __init__ of the Django middleware class; logDir is defined elsewhere
def __init__(self, get_response):
    # Configure the logger
    logFile = os.path.join(logDir, f"my-service_{datetime.date.today()}.log")

    logger.remove()

    utcOffset = strftime("%z", gmtime())
    utcOffsetFormatted = int(utcOffset[:-2])

    # Tried using this, thinking the "00:00" rotation was the problem
    logger.add(
        logFile,
        rotation=datetime.time(
            hour=0,
            minute=0,
            tzinfo=datetime.timezone(datetime.timedelta(hours=utcOffsetFormatted)),
        ),
    )

    logger.add(
        sys.stderr,
        format="{time:MMMM D, YYYY > HH:mm:ss.SSSZ} | {level} | {message} | {extra}",
        level="DEBUG",
        catch=False,
        backtrace=False,
        enqueue=True,
        diagnose=False,
    )
```

@AndyAbok Please open a new issue detailing your setup and the frameworks you're using. Right now, it's hard for me to give more guidance than what I've already mentioned in this thread.

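When rotation happens, I want the multithreaded logs to still be written to only one log file. What should I do?

I have the same requirement. Have you managed to solve it?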