📒Introduction

Awesome-LLM-Inference: A curated list of 📙Awesome LLM Inference Papers with Codes; see 📖Contents for details. This repo is updated frequently. 👨‍💻 You are welcome to star ⭐️ it or submit a PR!

©️Citations

@misc{Awesome-LLM-Inference@2024,
  title={Awesome-LLM-Inference: A curated list of Awesome LLM Inference Papers with codes},
  url={https://github.com/DefTruth/Awesome-LLM-Inference},
  note={Open-source software available at https://github.com/DefTruth/Awesome-LLM-Inference},
  author={DefTruth, liyucheng09 etc},
  year={2024}
}

📙Awesome LLM Inference Papers with Codes

🎉Download PDFs

Awesome LLM Inference for Beginners.pdf: 500 pages covering FastServe, FlashAttention 1/2, FlexGen, FP8, LLM.int8(), PagedAttention, RoPE, SmoothQuant, WINT8/4, Continuous Batching, ZeroQuant 1/2/FP, AWQ, etc.

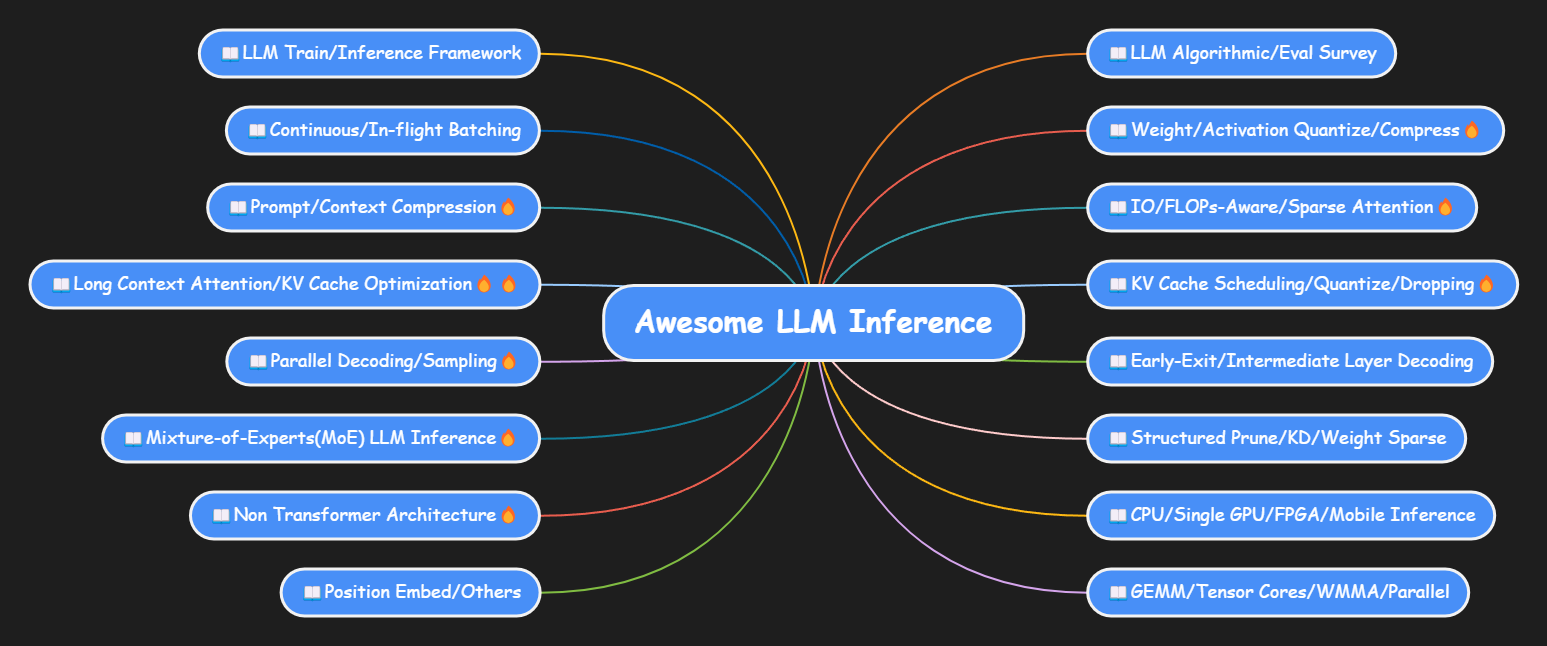

📖Contents

- 📖LLM Algorithmic/Eval Survey

- 📖LLM Train/Inference Framework

- 📖Weight/Activation Quantize/Compress🔥

- 📖Continuous/In-flight Batching

- 📖IO/FLOPs-Aware/Sparse Attention🔥

- 📖KV Cache Scheduling/Quantize/Dropping🔥

- 📖Prompt/Context Compression🔥

- 📖RAG/Long Context Attention/KV Cache Optimization🔥🔥

- 📖Early-Exit/Intermediate Layer Decoding

- 📖Parallel Decoding/Sampling🔥

- 📖Structured Prune/KD/Weight Sparse

- 📖Mixture-of-Experts(MoE) LLM Inference🔥

- 📖CPU/Single GPU/FPGA/Mobile Inference

- 📖Non Transformer Architecture🔥

- 📖GEMM/Tensor Cores/WMMA/Parallel

- 📖Position Embed/Others

📖LLM Algorithmic/Eval Survey (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2023.10 | [Evaluating] Evaluating Large Language Models: A Comprehensive Survey(@tju.edu.cn) | [pdf] | [Awesome-LLMs-Evaluation] | ⭐️ |
| 2023.11 | 🔥[Runtime Performance] Dissecting the Runtime Performance of the Training, Fine-tuning, and Inference of Large Language Models(@hkust-gz.edu.cn) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.11 | [ChatGPT Anniversary] ChatGPT’s One-year Anniversary: Are Open-Source Large Language Models Catching up?(@e.ntu.edu.sg) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | [Algorithmic Survey] The Efficiency Spectrum of Large Language Models: An Algorithmic Survey(@Microsoft) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | [Security and Privacy] A Survey on Large Language Model (LLM) Security and Privacy: The Good, the Bad, and the Ugly(@Drexel University) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | 🔥[LLMCompass] A Hardware Evaluation Framework for Large Language Model Inference(@princeton.edu) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.12 | 🔥[Efficient LLMs] Efficient Large Language Models: A Survey(@Ohio State University etc) | [pdf] | [Efficient-LLMs-Survey] | ⭐️⭐️ |
| 2023.12 | [Serving Survey] Towards Efficient Generative Large Language Model Serving: A Survey from Algorithms to Systems(@Carnegie Mellon University) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.01 | [Understanding LLMs] Understanding LLMs: A Comprehensive Overview from Training to Inference(@Shaanxi Normal University etc) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.02 | [LLM-Viewer] LLM Inference Unveiled: Survey and Roofline Model Insights(@Zhihang Yuan etc) | [pdf] | [LLM-Viewer] | ⭐️⭐️ |

📖LLM Train/Inference Framework (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2020.05 | 🔥[Megatron-LM] Training Multi-Billion Parameter Language Models Using Model Parallelism(@NVIDIA) | [pdf] | [Megatron-LM] | ⭐️⭐️ |
| 2023.03 | [FlexGen] High-Throughput Generative Inference of Large Language Models with a Single GPU(@Stanford University etc) | [pdf] | [FlexGen] | ⭐️ |
| 2023.05 | [SpecInfer] Accelerating Generative Large Language Model Serving with Speculative Inference and Token Tree Verification(@Peking University etc) | [pdf] | [FlexFlow] | ⭐️ |
| 2023.05 | [FastServe] Fast Distributed Inference Serving for Large Language Models(@Peking University etc) | [pdf] | ⚠️ | ⭐️ |
| 2023.09 | 🔥[vLLM] Efficient Memory Management for Large Language Model Serving with PagedAttention(@UC Berkeley etc) | [pdf] | [vllm] | ⭐️⭐️ |
| 2023.09 | [StreamingLLM] EFFICIENT STREAMING LANGUAGE MODELS WITH ATTENTION SINKS(@Meta AI etc) | [pdf] | [streaming-llm] | ⭐️ |
| 2023.09 | [Medusa] Medusa: Simple Framework for Accelerating LLM Generation with Multiple Decoding Heads(@Tianle Cai etc) | [blog] | [Medusa] | ⭐️ |
| 2023.10 | 🔥[TensorRT-LLM] NVIDIA TensorRT LLM(@NVIDIA) | [docs] | [TensorRT-LLM] | ⭐️⭐️ |
| 2023.11 | 🔥[DeepSpeed-FastGen 2x vLLM?] DeepSpeed-FastGen: High-throughput Text Generation for LLMs via MII and DeepSpeed-Inference(@Microsoft) | [pdf] | [deepspeed-fastgen] | ⭐️⭐️ |
| 2023.12 | 🔥[PETALS] Distributed Inference and Fine-tuning of Large Language Models Over The Internet(@HSE University etc) | [pdf] | [petals] | ⭐️⭐️ |
| 2023.10 | [LightSeq] LightSeq: Sequence Level Parallelism for Distributed Training of Long Context Transformers(@UC Berkeley etc) | [pdf] | [LightSeq] | ⭐️ |
| 2023.12 | [PowerInfer] PowerInfer: Fast Large Language Model Serving with a Consumer-grade GPU(@SJTU) | [pdf] | [PowerInfer] | ⭐️ |
| 2024.01 | [inferflow] INFERFLOW: AN EFFICIENT AND HIGHLY CONFIGURABLE INFERENCE ENGINE FOR LARGE LANGUAGE MODELS(@Tencent AI Lab) | [pdf] | [inferflow] | ⭐️ |

📖Continuous/In-flight Batching (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2022.07 | 🔥[Continuous Batching] Orca: A Distributed Serving System for Transformer-Based Generative Models(@Seoul National University etc) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.10 | 🔥[In-flight Batching] NVIDIA TensorRT LLM Batch Manager(@NVIDIA) | [docs] | [TensorRT-LLM] | ⭐️⭐️ |
| 2023.11 | 🔥[DeepSpeed-FastGen 2x vLLM?] DeepSpeed-FastGen: High-throughput Text Generation for LLMs via MII and DeepSpeed-Inference(@Microsoft) | [blog] | [deepspeed-fastgen] | ⭐️⭐️ |
| 2023.11 | [Splitwise] Splitwise: Efficient Generative LLM Inference Using Phase Splitting(@Microsoft etc) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | [SpotServe] SpotServe: Serving Generative Large Language Models on Preemptible Instances(@cmu.edu etc) | [pdf] | [SpotServe] | ⭐️ |
| 2023.10 | [LightSeq] LightSeq: Sequence Level Parallelism for Distributed Training of Long Context Transformers(@UC Berkeley etc) | [pdf] | [LightSeq] | ⭐️ |

📖Weight/Activation Quantize/Compress (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2022.06 | 🔥[ZeroQuant] Efficient and Affordable Post-Training Quantization for Large-Scale Transformers(@Microsoft) | [pdf] | [DeepSpeed] | ⭐️⭐️ |
| 2022.08 | [FP8-Quantization] FP8 Quantization: The Power of the Exponent(@Qualcomm AI Research) | [pdf] | [FP8-quantization] | ⭐️ |
| 2022.08 | [LLM.int8()] 8-bit Matrix Multiplication for Transformers at Scale(@Facebook AI Research etc) | [pdf] | [bitsandbytes] | ⭐️ |
| 2022.10 | 🔥[GPTQ] GPTQ: ACCURATE POST-TRAINING QUANTIZATION FOR GENERATIVE PRE-TRAINED TRANSFORMERS(@IST Austria etc) | [pdf] | [gptq] | ⭐️⭐️ |
| 2022.11 | 🔥[WINT8/4] Who Says Elephants Can’t Run: Bringing Large Scale MoE Models into Cloud Scale Production(@NVIDIA&Microsoft) | [pdf] | [FasterTransformer] | ⭐️⭐️ |
| 2022.11 | 🔥[SmoothQuant] Accurate and Efficient Post-Training Quantization for Large Language Models(@MIT etc) | [pdf] | [smoothquant] | ⭐️⭐️ |
| 2023.03 | [ZeroQuant-V2] Exploring Post-training Quantization in LLMs from Comprehensive Study to Low Rank Compensation(@Microsoft) | [pdf] | [DeepSpeed] | ⭐️ |
| 2023.06 | 🔥[AWQ] AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration(@MIT etc) | [pdf] | [llm-awq] | ⭐️⭐️ |
| 2023.06 | [SpQR] SpQR: A Sparse-Quantized Representation for Near-Lossless LLM Weight Compression(@University of Washington etc) | [pdf] | [SpQR] | ⭐️ |
| 2023.06 | [SqueezeLLM] SQUEEZELLM: DENSE-AND-SPARSE QUANTIZATION(@berkeley.edu) | [pdf] | [SqueezeLLM] | ⭐️ |
| 2023.07 | [ZeroQuant-FP] A Leap Forward in LLMs Post-Training W4A8 Quantization Using Floating-Point Formats(@Microsoft) | [pdf] | [DeepSpeed] | ⭐️ |
| 2023.09 | [KV Cache FP8 + WINT4] Exploration on LLM inference performance optimization(@HPC4AI) | [blog] | ⚠️ | ⭐️ |
| 2023.10 | [FP8-LM] FP8-LM: Training FP8 Large Language Models(@Microsoft etc) | [pdf] | [MS-AMP] | ⭐️ |
| 2023.10 | [LLM-Shearing] SHEARED LLAMA: ACCELERATING LANGUAGE MODEL PRE-TRAINING VIA STRUCTURED PRUNING(@cs.princeton.edu etc) | [pdf] | [LLM-Shearing] | ⭐️ |
| 2023.10 | [LLM-FP4] LLM-FP4: 4-Bit Floating-Point Quantized Transformers(@ust.hk&meta etc) | [pdf] | [LLM-FP4] | ⭐️ |
| 2023.11 | [2-bit LLM] Enabling Fast 2-bit LLM on GPUs: Memory Alignment, Sparse Outlier, and Asynchronous Dequantization(@Shanghai Jiao Tong University etc) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | [SmoothQuant+] SmoothQuant+: Accurate and Efficient 4-bit Post-Training Weight Quantization for LLM(@ZTE Corporation) | [pdf] | [smoothquantplus] | ⭐️ |
| 2023.11 | [OdysseyLLM W4A8] A Speed Odyssey for Deployable Quantization of LLMs(@meituan.com) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | 🔥[SparQ] SPARQ ATTENTION: BANDWIDTH-EFFICIENT LLM INFERENCE(@graphcore.ai) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.12 | [Agile-Quant] Agile-Quant: Activation-Guided Quantization for Faster Inference of LLMs on the Edge(@Northeastern University&Oracle) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | [CBQ] CBQ: Cross-Block Quantization for Large Language Models(@ustc.edu.cn) | [pdf] | ⚠️ | ⭐️ |
| 2023.10 | [QLLM] QLLM: ACCURATE AND EFFICIENT LOW-BITWIDTH QUANTIZATION FOR LARGE LANGUAGE MODELS(@ZIP Lab&SenseTime Research etc) | [pdf] | ⚠️ | ⭐️ |
| 2024.01 | [FP6-LLM] FP6-LLM: Efficiently Serving Large Language Models Through FP6-Centric Algorithm-System Co-Design(@Microsoft etc) | [pdf] | ⚠️ | ⭐️ |

📖IO/FLOPs-Aware/Sparse Attention (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2018.05 | [Online Softmax] Online normalizer calculation for softmax(@NVIDIA) | [pdf] | ⚠️ | ⭐️ |
| 2019.11 | 🔥[MQA] Fast Transformer Decoding: One Write-Head is All You Need(@Google) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2020.10 | [Hash Attention] REFORMER: THE EFFICIENT TRANSFORMER(@Google) | [pdf] | [reformer] | ⭐️⭐️ |
| 2022.05 | 🔥[FlashAttention] Fast and Memory-Efficient Exact Attention with IO-Awareness(@Stanford University etc) | [pdf] | [flash-attention] | ⭐️⭐️ |
| 2022.10 | [Online Softmax] SELF-ATTENTION DOES NOT NEED O(n^2) MEMORY(@Google) | [pdf] | ⚠️ | ⭐️ |
| 2023.05 | [FlashAttention] From Online Softmax to FlashAttention(@cs.washington.edu) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.05 | [FLOP, I/O] Dissecting Batching Effects in GPT Inference(@Lequn Chen) | [blog] | ⚠️ | ⭐️ |
| 2023.05 | 🔥🔥[GQA] GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints(@Google) | [pdf] | [flaxformer] | ⭐️⭐️ |
| 2023.06 | [Sparse FlashAttention] Faster Causal Attention Over Large Sequences Through Sparse Flash Attention(@EPFL etc) | [pdf] | [dynamic-sparse-flash-attention] | ⭐️ |
| 2023.07 | 🔥[FlashAttention-2] Faster Attention with Better Parallelism and Work Partitioning(@Stanford University etc) | [pdf] | [flash-attention] | ⭐️⭐️ |
| 2023.10 | 🔥[Flash-Decoding] Flash-Decoding for long-context inference(@Stanford University etc) | [blog] | [flash-attention] | ⭐️⭐️ |
| 2023.11 | [Flash-Decoding++] FLASHDECODING++: FASTER LARGE LANGUAGE MODEL INFERENCE ON GPUS(@Tsinghua University&Infinigence-AI) | [pdf] | ⚠️ | ⭐️ |
| 2023.01 | [SparseGPT] SparseGPT: Massive Language Models Can be Accurately Pruned in One-Shot(@ISTA etc) | [pdf] | [sparsegpt] | ⭐️ |
| 2023.12 | 🔥[GLA] Gated Linear Attention Transformers with Hardware-Efficient Training(@MIT-IBM Watson AI) | [pdf] | gated_linear_attention | ⭐️⭐️ |
| 2023.12 | [SCCA] SCCA: Shifted Cross Chunk Attention for long contextual semantic expansion(@Beihang University) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | 🔥[FlashLLM] LLM in a flash: Efficient Large Language Model Inference with Limited Memory(@Apple) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.03 | 🔥🔥[CHAI] CHAI: Clustered Head Attention for Efficient LLM Inference(@cs.wisc.edu etc) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.04 | [Flash Tree Attention] DEFT: FLASH TREE-ATTENTION WITH IO-AWARENESS FOR EFFICIENT TREE-SEARCH-BASED LLM INFERENCE(@Westlake University etc) | [pdf] | ⚠️ | ⭐️⭐️ |

📖KV Cache Scheduling/Quantize/Dropping (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2019.11 | 🔥[MQA] Fast Transformer Decoding: One Write-Head is All You Need(@Google) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2022.06 | [LTP] Learned Token Pruning for Transformers(@UC Berkeley etc) | [pdf] | [LTP] | ⭐️ |
| 2023.05 | 🔥🔥[GQA] GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints(@Google) | [pdf] | [flaxformer] | ⭐️⭐️ |
| 2023.05 | [KV Cache Compress] Scissorhands: Exploiting the Persistence of Importance Hypothesis for LLM KV Cache Compression at Test Time(@) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.06 | [H2O] H2O: Heavy-Hitter Oracle for Efficient Generative Inference of Large Language Models(@Rice University etc) | [pdf] | [H2O] | ⭐️ |
| 2023.06 | [QK-Sparse/Dropping Attention] Faster Causal Attention Over Large Sequences Through Sparse Flash Attention(@EPFL etc) | [pdf] | [dynamic-sparse-flash-attention] | ⭐️ |
| 2023.08 | 🔥🔥[Chunked Prefills] SARATHI: Efficient LLM Inference by Piggybacking Decodes with Chunked Prefills(@Microsoft etc) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.09 | 🔥🔥[PagedAttention] Efficient Memory Management for Large Language Model Serving with PagedAttention(@UC Berkeley etc) | [pdf] | [vllm] | ⭐️⭐️ |
| 2023.09 | [KV Cache FP8 + WINT4] Exploration on LLM inference performance optimization(@HPC4AI) | [blog] | ⚠️ | ⭐️ |
| 2023.10 | 🔥[TensorRT-LLM KV Cache FP8] NVIDIA TensorRT LLM(@NVIDIA) | [docs] | [TensorRT-LLM] | ⭐️⭐️ |
| 2023.10 | 🔥[Adaptive KV Cache Compress] MODEL TELLS YOU WHAT TO DISCARD: ADAPTIVE KV CACHE COMPRESSION FOR LLMS(@illinois.edu&Microsoft) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.10 | [CacheGen] CacheGen: Fast Context Loading for Language Model Applications(@Chicago University&Microsoft) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | [KV-Cache Optimizations] Leveraging Speculative Sampling and KV-Cache Optimizations Together for Generative AI using OpenVINO(@Haim Barad etc) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | [KV Cache Compress with LoRA] Compressed Context Memory for Online Language Model Interaction(@SNU & NAVER AI) | [pdf] | [Compressed-Context-Memory] | ⭐️⭐️ |
| 2023.12 | 🔥🔥[RadixAttention] Efficiently Programming Large Language Models using SGLang(@Stanford University etc) | [pdf] | [sglang] | ⭐️⭐️ |
| 2024.01 | 🔥🔥[DistKV-LLM] Infinite-LLM: Efficient LLM Service for Long Context with DistAttention and Distributed KVCache(@Alibaba etc) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.02 | 🔥🔥[Prompt Caching] Efficient Prompt Caching via Embedding Similarity(@UC Berkeley) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.02 | 🔥🔥[Less] Get More with LESS: Synthesizing Recurrence with KV Cache Compression for Efficient LLM Inference(@CMU etc) | [pdf] | ⚠️ | ⭐️ |
| 2024.02 | 🔥🔥[MiKV] No Token Left Behind: Reliable KV Cache Compression via Importance-Aware Mixed Precision Quantization(@KAIST) | [pdf] | ⚠️ | ⭐️ |
| 2024.02 | 🔥🔥[Shared Prefixes] Hydragen: High-Throughput LLM Inference with Shared Prefixes | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.03 | 🔥[QAQ] QAQ: Quality Adaptive Quantization for LLM KV Cache(@smail.nju.edu.cn) | [pdf] | [QAQ-KVCacheQuantization] | ⭐️⭐️ |
| 2024.03 | 🔥🔥[DMC] Dynamic Memory Compression: Retrofitting LLMs for Accelerated Inference(@NVIDIA etc) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.03 | 🔥🔥[Keyformer] Keyformer: KV Cache reduction through key tokens selection for Efficient Generative Inference(@ece.ubc.ca etc) | [pdf] | [Keyformer] | ⭐️⭐️ |
| 2024.03 | [FASTDECODE] FASTDECODE: High-Throughput GPU-Efficient LLM Serving using Heterogeneous(@Tsinghua University) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.03 | [Sparsity-Aware KV Caching] ALISA: Accelerating Large Language Model Inference via Sparsity-Aware KV Caching(@ucf.edu) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.03 | 🔥[GEAR] GEAR: An Efficient KV Cache Compression Recipe for Near-Lossless Generative Inference of LLM(@gatech.edu) | [pdf] | [GEAR] | ⭐️ |
| 2024.04 | [SqueezeAttention] SQUEEZEATTENTION: 2D Management of KV-Cache in LLM Inference via Layer-wise Optimal Budget(@lzu.edu.cn etc) | [pdf] | [SqueezeAttention] | ⭐️⭐️ |
| 2024.04 | [SnapKV] SnapKV: LLM Knows What You are Looking for Before Generation(@UIUC) | [pdf] | [SnapKV] | ⭐️ |

📖Prompt/Context Compression (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2023.04 | 🔥[Selective-Context] Compressing Context to Enhance Inference Efficiency of Large Language Models(@Surrey) | [pdf] | Selective-Context | ⭐️⭐️ |
| 2023.05 | [AutoCompressor] Adapting Language Models to Compress Contexts(@Princeton) | [pdf] | AutoCompressor | ⭐️ |
| 2023.10 | 🔥[LLMLingua] LLMLingua: Compressing Prompts for Accelerated Inference of Large Language Models(@Microsoft) | [pdf] | LLMLingua | ⭐️⭐️ |
| 2023.10 | 🔥🔥[LongLLMLingua] LongLLMLingua: Accelerating and Enhancing LLMs in Long Context Scenarios via Prompt Compression(@Microsoft) | [pdf] | LLMLingua | ⭐️⭐️ |
| 2024.03 | 🔥[LLMLingua-2] LLMLingua-2: Data Distillation for Efficient and Faithful Task-Agnostic Prompt Compression(@Microsoft) | [pdf] | LLMLingua series | ⭐️ |

📖RAG/Long Context Attention/KV Cache Optimization (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2023.05 | 🔥🔥[Blockwise Attention] Blockwise Parallel Transformer for Large Context Models(@UC Berkeley) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.05 | 🔥[Landmark Attention] Random-Access Infinite Context Length for Transformers(@epfl.ch) | [pdf] | landmark-attention | ⭐️⭐️ |
| 2023.07 | 🔥[LightningAttention-1] TRANSNORMERLLM: A FASTER AND BETTER LARGE LANGUAGE MODEL WITH IMPROVED TRANSNORMER(@OpenNLPLab) | [pdf] | TransnormerLLM | ⭐️⭐️ |
| 2023.07 | 🔥[LightningAttention-2] Lightning Attention-2: A Free Lunch for Handling Unlimited Sequence Lengths in Large Language Models(@OpenNLPLab) | [pdf] | lightning-attention | ⭐️⭐️ |
| 2023.10 | 🔥🔥[RingAttention] Ring Attention with Blockwise Transformers for Near-Infinite Context(@UC Berkeley) | [pdf] | [RingAttention] | ⭐️⭐️ |
| 2023.11 | 🔥[HyperAttention] HyperAttention: Long-context Attention in Near-Linear Time(@yale&Google) | [pdf] | hyper-attn | ⭐️⭐️ |
| 2023.11 | [Streaming Attention] One Pass Streaming Algorithm for Super Long Token Attention Approximation in Sublinear Space(@Adobe Research etc) | [pdf] | ⚠️ | ⭐️ |
| 2023.11 | 🔥[Prompt Cache] PROMPT CACHE: MODULAR ATTENTION REUSE FOR LOW-LATENCY INFERENCE(@Yale University etc) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.11 | 🔥🔥[StripedAttention] STRIPED ATTENTION: FASTER RING ATTENTION FOR CAUSAL TRANSFORMERS(@MIT etc) | [pdf] | [striped_attention] | ⭐️⭐️ |
| 2024.01 | 🔥🔥[KVQuant] KVQuant: Towards 10 Million Context Length LLM Inference with KV Cache Quantization(@UC Berkeley) | [pdf] | [KVQuant] | ⭐️⭐️ |
| 2024.02 | 🔥[RelayAttention] RelayAttention for Efficient Large Language Model Serving with Long System Prompts(@sensetime.com etc) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.04 | 🔥🔥[Infini-attention] Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention(@Google) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2024.04 | 🔥🔥[RAGCache] RAGCache: Efficient Knowledge Caching for Retrieval-Augmented Generation(@Peking University&ByteDance Inc) | [pdf] | ⚠️ | ⭐️⭐️ |

📖Early-Exit/Intermediate Layer Decoding (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2020.04 | [DeeBERT] DeeBERT: Dynamic Early Exiting for Accelerating BERT Inference(@uwaterloo.ca) | [pdf] | ⚠️ | ⭐️ |
| 2021.06 | [BERxiT] BERxiT: Early Exiting for BERT with Better Fine-Tuning and Extension to Regression(@uwaterloo.ca) | [pdf] | [berxit] | ⭐️ |
| 2023.10 | 🔥[LITE] Accelerating LLaMA Inference by Enabling Intermediate Layer Decoding via Instruction Tuning with LITE(@Arizona State University) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.12 | 🔥🔥[EE-LLM] EE-LLM: Large-Scale Training and Inference of Early-Exit Large Language Models with 3D Parallelism(@alibaba-inc.com) | [pdf] | [EE-LLM] | ⭐️⭐️ |
| 2023.10 | 🔥[FREE] Fast and Robust Early-Exiting Framework for Autoregressive Language Models with Synchronized Parallel Decoding(@KAIST AI&AWS AI) | [pdf] | [fast_robust_early_exit] | ⭐️⭐️ |

📖Parallel Decoding/Sampling (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2018.11 | 🔥[Parallel Decoding] Blockwise Parallel Decoding for Deep Autoregressive Models(@Berkeley&Google) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.02 | 🔥[Speculative Sampling] Accelerating Large Language Model Decoding with Speculative Sampling(@DeepMind) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.05 | 🔥[Speculative Sampling] Fast Inference from Transformers via Speculative Decoding(@Google Research etc) | [pdf] | [LLMSpeculativeSampling] | ⭐️⭐️ |
| 2023.09 | 🔥[Medusa] Medusa: Simple Framework for Accelerating LLM Generation with Multiple Decoding Heads(@Tianle Cai etc) | [pdf] | [Medusa] | ⭐️⭐️ |
| 2023.10 | [OSD] Online Speculative Decoding(@UC Berkeley etc) | [pdf] | ⚠️ | ⭐️⭐️ |
| 2023.12 | [Cascade Speculative] Cascade Speculative Drafting for Even Faster LLM Inference(@illinois.edu) | [pdf] | ⚠️ | ⭐️ |
| 2024.02 | 🔥[LookaheadDecoding] Break the Sequential Dependency of LLM Inference Using LOOKAHEAD DECODING(@UCSD&Google&UC Berkeley) | [pdf] | [LookaheadDecoding] | ⭐️⭐️ |
| 2024.02 | 🔥🔥[Speculative Decoding] Decoding Speculative Decoding(@cs.wisc.edu) | [pdf] | ⚠️ | ⭐️ |
| 2024.04 | 🔥🔥[TriForce] TriForce: Lossless Acceleration of Long Sequence Generation with Hierarchical Speculative Decoding(@cmu.edu&Meta AI) | [pdf] | [TriForce] | ⭐️⭐️ |
| 2024.04 | 🔥🔥[Hidden Transfer] Parallel Decoding via Hidden Transfer for Lossless Large Language Model Acceleration(@pku.edu.cn etc) | [pdf] | ⚠️ | ⭐️ |

📖Structured Prune/KD/Weight Sparse (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2023.12 | [FLAP] Fluctuation-based Adaptive Structured Pruning for Large Language Models(@Chinese Academy of Sciences etc) | [pdf] | [FLAP] | ⭐️⭐️ |
| 2023.12 | 🔥[LASER] The Truth is in There: Improving Reasoning in Language Models with Layer-Selective Rank Reduction(@mit.edu) | [pdf] | [laser] | ⭐️⭐️ |
| 2023.12 | [PowerInfer] PowerInfer: Fast Large Language Model Serving with a Consumer-grade GPU(@SJTU) | [pdf] | [PowerInfer] | ⭐️ |
| 2024.01 | [Admm Pruning] Fast and Optimal Weight Update for Pruned Large Language Models(@fmph.uniba.sk) | [pdf] | [admm-pruning] | ⭐️ |
| 2024.01 | [FFSplit] FFSplit: Split Feed-Forward Network For Optimizing Accuracy-Efficiency Trade-off in Language Model Inference(@Rice University etc) | [pdf] | ⚠️ | ⭐️ |

📖Mixture-of-Experts(MoE) LLM Inference (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2022.11 | 🔥[WINT8/4] Who Says Elephants Can’t Run: Bringing Large Scale MoE Models into Cloud Scale Production(@NVIDIA&Microsoft) | [pdf] | [FasterTransformer] | ⭐️⭐️ |
| 2023.12 | 🔥[Mixtral Offloading] Fast Inference of Mixture-of-Experts Language Models with Offloading(@Moscow Institute of Physics and Technology etc) | [pdf] | [mixtral-offloading] | ⭐️⭐️ |
| 2024.01 | [MoE-Mamba] MoE-Mamba: Efficient Selective State Space Models with Mixture of Experts(@uw.edu.pl) | [pdf] | ⚠️ | ⭐️ |
| 2024.04 | [MoE Inference] Toward Inference-optimal Mixture-of-Expert Large Language Models(@UC San Diego etc) | [pdf] | ⚠️ | ⭐️ |

📖CPU/Single GPU/FPGA/Mobile Inference (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2023.03 | [FlexGen] High-Throughput Generative Inference of Large Language Models with a Single GPU(@Stanford University etc) | [pdf] | [FlexGen] | ⭐️ |
| 2023.11 | [LLM CPU Inference] Efficient LLM Inference on CPUs(@intel) | [pdf] | [intel-extension-for-transformers] | ⭐️ |
| 2023.12 | [LinguaLinked] LinguaLinked: A Distributed Large Language Model Inference System for Mobile Devices(@University of California Irvine) | [pdf] | ⚠️ | ⭐️ |
| 2023.12 | [OpenVINO] Leveraging Speculative Sampling and KV-Cache Optimizations Together for Generative AI using OpenVINO(@Haim Barad etc) | [pdf] | ⚠️ | ⭐️ |
| 2024.03 | [FlightLLM] FlightLLM: Efficient Large Language Model Inference with a Complete Mapping Flow on FPGAs(@Infinigence-AI) | [pdf] | ⚠️ | ⭐️ |
| 2024.03 | [Transformer-Lite] Transformer-Lite: High-efficiency Deployment of Large Language Models on Mobile Phone GPUs(@OPPO) | [pdf] | ⚠️ | ⭐️ |

📖Non Transformer Architecture (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2023.05 | 🔥🔥[RWKV] RWKV: Reinventing RNNs for the Transformer Era(@Bo Peng etc) | [pdf] | [RWKV-LM] | ⭐️⭐️ |
| 2023.12 | 🔥🔥[Mamba] Mamba: Linear-Time Sequence Modeling with Selective State Spaces(@cs.cmu.edu etc) | [pdf] | [mamba] | ⭐️⭐️ |

📖GEMM/Tensor Cores/WMMA/Parallel (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2018.03 | [Tensor Core] NVIDIA Tensor Core Programmability, Performance & Precision(@KTH Royal etc) | [pdf] | ⚠️ | ⭐️ |
| 2022.06 | [Microbenchmark] Dissecting Tensor Cores via Microbenchmarks: Latency, Throughput and Numeric Behaviors(@tue.nl etc) | [pdf] | [DissectingTensorCores] | ⭐️ |
| 2022.09 | [FP8] FP8 FORMATS FOR DEEP LEARNING(@NVIDIA) | [pdf] | ⚠️ | ⭐️ |
| 2023.08 | [Tensor Cores] Reducing shared memory footprint to leverage high throughput on Tensor Cores and its flexible API extension library(@Tokyo Institute etc) | [pdf] | [wmma_extension] | ⭐️ |
| 2024.02 | [QUICK] QUICK: Quantization-aware Interleaving and Conflict-free Kernel for efficient LLM inference(@SqueezeBits Inc) | [pdf] | [QUICK] | ⭐️⭐️ |
| 2024.02 | [Tensor Parallel] TP-AWARE DEQUANTIZATION(@IBM T.J. Watson Research Center) | [pdf] | ⚠️ | ⭐️ |

📖Position Embed/Others (©️back👆🏻)

| Date | Title | Paper | Code | Recom |
|:---:|:---:|:---:|:---:|:---:|
| 2021.04 | 🔥[RoPE] ROFORMER: ENHANCED TRANSFORMER WITH ROTARY POSITION EMBEDDING(@Zhuiyi Technology Co., Ltd.) | [pdf] | [transformers] | ⭐️ |
| 2022.10 | [ByteTransformer] A High-Performance Transformer Boosted for Variable-Length Inputs(@ByteDance&NVIDIA) | [pdf] | [ByteTransformer] | ⭐️ |

©️License

GNU General Public License v3.0

🎉Contribute

Welcome to star & submit a PR to this repo!
