glommio

Cargo test hangs

Running cargo test hangs with test failures here and there in my environment.

cargo test output

running 360 tests

test channels::spsc_queue::tests::test_try_poll ... ok

test channels::spsc_queue::tests::test_try_push ... ok

test channels::spsc_queue::tests::test_wrap - should panic ... ok

test error::test::composite_error_from_into ... ok

test error::test::channel_wouldblock_err_msg - should panic ... ok

test error::test::channel_closed_err_msg - should panic ... ok

test error::test::enhance_error ... ok

test error::test::enhance_error_converted ... ok

test error::test::extended_file_err_msg - should panic ... ok

test error::test::extended_closed_file_err_msg - should panic ... ok

test error::test::extended_file_err_msg_unwrap - should panic ... ok

test error::test::file_wouldblock_err_msg - should panic ... ok

test error::test::queue_still_active_err_msg - should panic ... ok

test error::test::queue_not_found_err_msg - should panic ... ok

test error::test::rwlock_wouldblock_err_msg - should panic ... ok

test error::test::rwlock_closed_err_msg - should panic ... ok

test error::test::semaphore_closed_err_msg - should panic ... ok

test error::test::semaphore_wouldblock_err_msg - should panic ... ok

test executor::placement::test::cpuset_difference ... ok

test executor::placement::test::cpuset_disjoint ... ok

test executor::placement::test::cpuset_intersection ... ok

test executor::placement::test::cpuset_iterate ... ok

test executor::placement::test::cpuset_subset_and_superset ... ok

test executor::placement::test::cpuset_symmetric_difference ... ok

test executor::placement::pq_tree::test::construct_pq_tree_spread ... ok

test executor::placement::test::cpuset_union ... ok

test executor::placement::pq_tree::test::construct_pq_tree_pack ... ok

test executor::placement::test::custom_placement ... ok

test executor::placement::test::max_packer_numa_in_pkg ... ok

test executor::placement::test::max_packer_with_topology_0 ... ok

test executor::placement::test::max_packer_with_topology_empty ... ok

test executor::placement::test::max_spreader_with_topology_0 ... ok

test executor::placement::test::max_spreader_with_topology_1 ... ok

test executor::placement::test::max_spreader_with_topology_2 ... ok

test executor::placement::test::max_spreader_with_topology_empty ... ok

test channels::spsc_queue::tests::test_threaded ... ok

test executor::placement::test::cpu_set ... ok

test executor::placement::test::placement_fenced_clone ... ok

test executor::placement::test::placement_custom_clone ... ok

test executor::placement::test::max_spreader_this_machine ... ok

test executor::placement::test::max_packer_this_machine ... ok

test executor::placement::test::placement_unbound_clone ... ok

test executor::test::bind_to_cpu_set_range ... ok

test executor::placement::test::placement_max_pack_clone ... ok

test executor::placement::test::placement_max_spread_clone ... ok

test executor::test::create_fail_to_bind ... ok

test channels::local_channel::test::producer_consumer ... ok

test channels::local_channel::test::producer_bounded_has_capacity ... ok

test channels::local_channel::test::producer_receiver_serialized ... ok

test channels::shared_channel::test::close_sender_while_blocked_on_send ... ok

test channels::local_channel::test::producer_bounded_early_error ... ok

test channels::local_channel::test::producer_parallel_consumer ... ok

test channels::local_channel::test::non_stream_receiver ... ok

test channels::local_channel::test::producer_bounded_ping_pong ... ok

test channels::local_channel::test::producer_bounded_previously_blocked_still_errors_out ... ok

test channels::local_channel::test::multiple_task_receivers ... ok

test executor::test::executor_invalid_executor_count ... ok

test channels::shared_channel::test::copy_shared ... ok

test channels::channel_mesh::tests::test_partial_mesh ... ok

test channels::channel_mesh::tests::test_join_more_than_capacity - should panic ... ok

test channels::shared_channel::test::consumer_never_connects ... ok

test channels::channel_mesh::tests::test_join_more_than_once - should panic ... ok

test channels::local_channel::test::producer_early_drop_receiver ... ok

test channels::shared_channel::test::producer_consumer ... ok

test channels::shared_channel::test::non_copy_shared ... ok

test channels::shared_channel::test::consumer_sleeps_before_producer_produces ... ok

test channels::shared_channel::test::shared_drop_cascade_drop_executor ... ok

test channels::shared_channel::test::producer_sleeps_before_consumer_consumes ... ok

test channels::shared_channel::test::producer_never_connects ... ok

test channels::shared_channel::test::pass_function ... ok

test channels::shared_channel::test::send_to_full_channel ... ok

test channels::shared_channel::test::shared_drop_cascade_drop_executor_reverse ... ok

test executor::test::task_optimized_for_throughput ... ok

test executor::test::create_and_bind ... ok

test executor::test::spawn_without_executor - should panic ... ok

test executor::test::create_and_destroy_executor ... ok

test executor::test::scoped_task ... ok

test free_list::free_list_smoke_test ... ok

test executor::test::current_task_queue_matches ... ok

test executor::test::wake_by_ref_refcount_underflow_with_sleep ... ok

test channels::shared_channel::test::shared_drop_gets_called_reversed ... ok

test executor::test::executor_pool_builder_spawn_cancel ... ok

test executor::test::multiple_spawn ... ok

test executor::test::wake_refcount_underflow_with_sleep ... ok

test executor::test::panic_is_not_list - should panic ... ok

test io::buffered_file::test::file_open_nonexistent ... ok

test executor::test::executor_inception - should panic ... ok

test channels::shared_channel::test::producer_stream_consumer ... ok

test channels::sharding::tests::test ... ok

test executor::test::invalid_task_queue ... ok

test executor::test::wake_refcount_underflow_with_join_handle ... ok

test executor::test::wake_by_ref_refcount_underflow_with_join_handle ... ok

test io::buffered_file::test::file_create_close ... ok

test executor::test::executor_pool_builder_return_values ... ok

test executor::test::executor_pool_builder_thread_panic ... ok

test executor::test::executor_pool_builder ... ok

test io::buffered_file_stream::test::lines ... ok

test executor::test::cross_executor_wake_hold_waker ... ok

test io::buffered_file::test::file_open ... ok

test io::buffered_file_stream::test::mix_and_match_apis ... ok

test executor::test::task_with_latency_requirements ... ok

test io::buffered_file_stream::test::read_exact_empty_file ... ok

test io::buffered_file_stream::test::nop_seek_write ... ok

test channels::channel_mesh::tests::test_channel_mesh ... ok

test io::buffered_file::test::write_past_end ... ok

test io::buffered_file::test::random_io ... ok

test io::buffered_file_stream::test::read_line ... ok

test io::buffered_file_stream::test::read_until_empty_file ... ok

test io::buffered_file_stream::test::write_and_flush ... ok

test io::buffered_file_stream::test::read_until_eof ... ok

test io::buffered_file_stream::test::read_exact_part_of_file ... ok

test io::buffered_file_stream::test::seek_end_and_read_exact ... ok

test io::buffered_file_stream::test::read_slice ... ok

test io::buffered_file_stream::test::seek_start_and_read_exact ... ok

test io::buffered_file_stream::test::read_to_end_from_start ... ok

test channels::channel_mesh::tests::test_peer_id_invariance ... ok

test io::buffered_file_stream::test::read_until ... ok

test io::buffered_file_stream::test::seek_and_write ... ok

test io::buffered_file_stream::test::read_to_end_after_seek ... ok

test io::buffered_file_stream::test::split ... ok

test executor::test::test_detach ... ok

test io::buffered_file_stream::test::write_simple ... ok

test io::bulk_io::tests::read_no_coalescing ... ok

test io::bulk_io::tests::nonmonotonic_iovec_merging ... ok

test io::bulk_io::tests::monotonic_iovec_merging ... ok

test io::bulk_io::tests::large_input_passthrough ... ok

test channels::sharding::tests::test_send_to ... ok

test io::bulk_io::tests::read_amplification_limit ... ok

test channels::sharding::tests::test_send ... ok

test io::dma_file::test::file_fallocate_alocatee ... ok

test io::dma_file::test::file_empty_read ... ok

test io::dma_file::test::file_create_close ... ok

test io::dma_file::test::cancellation_doest_crash_futures_not_polled ... ok

test io::dma_file::test::file_fallocate_zero ... ok

test io::dma_file::test::file_invalid_readonly_write ... ok

test io::dma_file::test::file_many_reads ... ok

test io::dma_file::test::cancellation_doest_crash_futures_polled ... ok

test io::dma_file::test::file_many_reads_no_coalescing ... ok

test io::dma_file::test::file_path ... ok

test io::dma_file::test::file_rename ... ok

test io::dma_file::test::file_open ... ok

test io::dma_file::test::file_open_nonexistent ... ok

test io::dma_file::test::file_many_reads_unaligned ... ok

test io::dma_file::test::file_simple_readwrite ... ok

test io::dma_file::test::file_rename_noop ... ok

test io::dma_file::test::is_same_file ... ok

test io::dma_file::test::per_queue_stats ... ok

test io::dma_file_stream::test::flushstate ... ok

test io::dma_file_stream::test::flushed_position_small_buffer ... ok

test io::dma_file_stream::test::flush_and_drop ... ok

test controllers::deadline_queue::test::deadline_queue_successfully_drops_item_when_done ... ok

test io::dma_file_stream::test::poll_reader_repeatedly ... ok

test channels::shared_channel::test::destroy_with_pending_wakers ... ok

test io::dma_file_stream::test::read_exact_empty_file ... ok

test executor::test::ten_yielding_queues ... ok

test controllers::deadline_queue::test::deadline_queue_shares_ok_if_we_process_smoothly ... ok

test controllers::deadline_queue::test::deadline_queue_shares_increase_if_we_dont_process ... ok

test controllers::deadline_queue::test::deadline_queue_behaves_well_with_zero_total_units ... ok

test controllers::deadline_queue::test::deadline_queue_behaves_well_if_we_process_too_much ... ok

test executor::latch::test::ready ... ok

test io::dma_file_stream::test::read_exact_crosses_buffer ... ok

test executor::latch::test::cancel ... ok

test io::dma_file_stream::test::read_exact_early_eof ... ok

test io::dma_file_stream::test::read_get_buffer_aligned_cross_boundaries ... ok

test io::dma_file_stream::test::read_exact_single_buffer ... ok

test io::dma_file_stream::test::read_exact_zero_buffer ... ok

test io::dma_file_stream::test::read_exact_late_start ... ok

test io::read_result::tests::equal_struct_size ... ok

test executor::test::cross_executor_wake_early_drop ... ok

test io::dma_file_stream::test::read_get_buffer_aligned ... ok

test channels::shared_channel::test::shared_drop_gets_called ... ok

test io::dma_file_stream::test::read_exact_unaligned_file_size ... ok

test io::sched::test::file_sched_drop_orphan ... ok

test executor::test::cross_executor_wake_by_ref ... ok

test io::sched::test::file_sched_conflicts ... ok

test executor::test::cross_executor_wake_by_value ... ok

test executor::test::cross_executor_wake_with_join_handle ... ok

test io::sched::test::file_sched_lifetime ... ok

test io::dma_file_stream::test::read_exact_skip ... ok

test io::sched::test::file_opt_out_dedup ... FAILED

test io::dma_file_stream::test::write_all ... FAILED

test io::dma_file_stream::test::sync_and_close ... FAILED

test io::dma_file_stream::test::read_get_buffer_aligned_entire_buffer_at_once ... FAILED

test io::immutable_file::test::fail_on_already_existent - should panic ... ok

test io::sched::test::source_sched_lifetime ... ok

test io::sched::test::source_sched_drop_orphan ... ok

test io::immutable_file::test::seal_and_stream ... FAILED

test io::dma_file_stream::test::write_multibuffer ... FAILED

test iou::registrar::tests::double_register - should panic ... ok

test iou::tests::test_resultify ... ok

test iou::registrar::tests::empty_update_err - should panic ... ok

test iou::registrar::tests::placeholder_update ... ok

test iou::registrar::tests::empty_unregister_err - should panic ... ok

test iou::registrar::tests::offset_out_of_bounds_update - should panic ... ok

test iou::registrar::tests::register_empty_slice - should panic ... ok

test iou::registrar::tests::valid_fd_update ... ok

test iou::registrar::tests::slice_len_out_of_bounds_update - should panic ... ok

test iou::registrar::tests::register_bad_fd - should panic ... ok

test io::dma_file_stream::test::write_with_readable_file ... ok

test io::dma_file_stream::test::write_close_twice ... ok

test io::dma_file_stream::test::read_to_end_of_empty_file ... ok

test io::sched::test::file_hard_link_dedup ... ok

test io::immutable_file::test::fail_reader_on_non_existent - should panic ... ok

test io::immutable_file::test::seal_and_random ... ok

test executor::test::test_no_spin ... ok

test io::immutable_file::test::seal_ready_many ... ok

test io::dma_file_stream::test::write_zero_buffer ... ok

test io::glommio_file::test::drop_closes_the_file ... ok

test io::dma_file_stream::test::write_no_write_behind ... ok

test io::dma_file_stream::test::read_mixed_api ... ok

test io::immutable_file::test::stream_pos ... ok

test io::dma_file_stream::test::read_get_buffer_aligned_past_the_end ... ok

test net::udp_socket::tests::broadcast ... FAILED

test net::tcp_socket::tests::tcp_invalid_timeout ... FAILED

test net::tcp_socket::tests::tcp_stream_nodelay ... FAILED

test net::tcp_socket::tests::tcp_listener_ttl ... FAILED

test net::tcp_socket::tests::tcp_connect_timeout_error ... FAILED

test net::tcp_socket::tests::tcp_read_timeout ... FAILED

test io::test::remove_nonexistent ... ok

test io::dma_file_stream::test::read_to_end_after_skip ... ok

test net::tcp_socket::tests::connect_local_server ... ok

test io::dma_file_stream::test::read_get_buffer_aligned_zero_buffer ... ok

test io::sched::test::file_offset_dedup ... ok

test net::tcp_socket::tests::parallel_accept ... FAILED

test io::sched::test::file_simple_dedup ... ok

test executor::test::test_spin ... FAILED

test io::sched::test::file_soft_link_dedup ... ok

test net::tcp_socket::tests::multibuf_fill ... ok

test io::sched::test::file_simple_dedup_concurrent ... ok

test net::tcp_socket::tests::accepted_tcp_stream_peer_addr ... FAILED

test net::tcp_socket::tests::stream_of_connections ... ok

test net::tcp_socket::tests::connect_and_ping_pong ... ok

test net::udp_socket::tests::zero_sized_send ... FAILED

test net::tcp_socket::tests::multi_executor_accept ... FAILED

test net::udp_socket::tests::multicast_v4 ... FAILED

test io::dma_file_stream::test::read_wronly_file ... ok

test net::tcp_socket::tests::peek ... ok

test net::unix::tests::connect_local_server ... FAILED

test net::udp_socket::tests::peek ... FAILED

test net::unix::tests::pair ... FAILED

test net::udp_socket::tests::recvfrom_blocking ... FAILED

test net::udp_socket::tests::udp_connected_ping_pong ... FAILED

test net::tcp_socket::tests::overconsume ... ok

test net::tcp_socket::tests::repeated_fill_before_consume ... ok

test net::tcp_socket::tests::tcp_connect_timeout ... ok

test io::dma_file_stream::test::read_simple ... ok

test net::udp_socket::tests::peekfrom_non_blocking ... FAILED

test net::udp_socket::tests::udp_connect_peers ... FAILED

test net::udp_socket::tests::set_multicast_loop_v4 ... FAILED

test net::udp_socket::tests::udp_unconnected_recv - should panic ... ok

test net::udp_socket::tests::multicast_v6 ... ok

test net::udp_socket::tests::set_ttl ... ok

test net::tcp_socket::tests::tcp_force_poll ... ok

test sync::rwlock::test::test_get_mut ... ok

test sync::rwlock::test::test_get_mut_close ... ok

test net::udp_socket::tests::udp_connected_recv_filter ... FAILED

test sync::rwlock::test::test_into_inner_close ... ok

test sync::rwlock::test::test_into_inner ... ok

test sync::rwlock::test::test_into_inner_drop ... ok

test net::tcp_socket::tests::tcp_stream_ttl ... ok

test sync::rwlock::test::test_try_read ... FAILED

test sync::gate::tests::test_dropped_task ... FAILED

test sync::rwlock::test::rwlock_reentrant ... FAILED

test sync::gate::tests::test_immediate_close ... FAILED

test net::unix::tests::read_until ... FAILED

test net::udp_socket::tests::recvfrom_non_blocking ... ok

test net::udp_socket::tests::sendto_blocking ... ok

test net::tcp_socket::tests::test_lines ... ok

test net::tcp_socket::tests::test_read_line ... ok

test net::udp_socket::tests::udp_unconnected_send - should panic ... ok

test io::dma_file_stream::test::read_to_end_no_read_ahead ... ok

test sync::semaphore::test::semaphore_overflow ... FAILED

test sync::semaphore::test::permit_raii_works ... FAILED

test sync::semaphore::test::semaphore_ensure_execution_order ... FAILED

test sync::semaphore::test::semaphore_acquisition_for_zero_unit_works ... FAILED

test sync::rwlock::test::test_smoke ... FAILED

test sync::semaphore::test::try_acquire_insufficient_units ... ok

test sync::semaphore::test::try_acquire_permit_insufficient_units ... ok

test net::unix::tests::datagram_pair_ping_pong ... FAILED

test net::unix::tests::datagram_connect_unbounded ... FAILED

test sync::semaphore::test::try_acquire_permit_semaphore_is_closed ... ok

test net::unix::tests::datagram_send_to_recv_from ... FAILED

test sync::rwlock::test::test_frob ... ok

test executor::test::executor_pool_builder_placements ... FAILED

test sync::semaphore::test::try_acquire_permit_sufficient_units ... ok

test sync::semaphore::test::try_acquire_sufficient_units ... ok

test sync::semaphore::test::try_acquire_semaphore_is_closed ... ok

test sync::semaphore::test::try_acquire_static_permit_insufficient_units ... ok

test sync::semaphore::test::broken_semaphore_if_close_happens_first - should panic ... ok

test sync::rwlock::test::test_ping_pong ... FAILED

test sys::sysfs::test::hex_bit_iterator ... ok

test sys::hardware_topology::test::check_topology_check - should panic ... ok

test sync::semaphore::test::explicit_signal_unblocks_many_wakers ... FAILED

test sync::rwlock::test::test_try_write ... FAILED

test sync::semaphore::test::try_acquire_static_permit_sufficient_units ... ok

test net::udp_socket::tests::set_multicast_loop_v6 ... FAILED

test sys::sysfs::test::range_iter ... ok

2021-12-10T09:07:51.801157Z ERROR glommio::test_utils: Started tracing..

test sys::sysfs::test::list_iterator ... ok

test io::dma_file_stream::test::read_to_end_buffer_crossing_read_ahead ... ok

test net::tcp_socket::tests::multi_executor_bind_works ... FAILED

test net::udp_socket::tests::set_multicast_ttl_v4 ... ok

test net::udp_socket::tests::sendto ... FAILED

test sys::uring::tests::allocator_exhaustion ... ok

test net::unix::tests::datagram_send_recv ... FAILED

test net::udp_socket::tests::multi_executor_bind_works ... FAILED

test test_utils::test_tracing_init ... ok

test net::tcp_socket::tests::test_read_until ... ok

test io::dma_file_stream::test::read_to_end_buffer_crossing ... ok

test sys::uring::tests::unterminated_sqe_link_chain - should panic ... ok

test executor::test::executor_pool_builder_shards_limit has been running for over 60 seconds

Tests that failed succeed when run individually, e.g. cargo test net::udp_socket::tests::multi_executor_bind_works or cargo test dma_file. glommio seems to work without any issues when used as a library from my application in the same environment (though I have not stress-tested it yet).

Meta

- OS: archlinux 5.14.16-arch1-1

- CPU: Threadripper 2970WX

- Memory: 64GB

- ulimit -l: 2048

Hello!

Would you be able to run it with a timeout so that the tests eventually fail and produce an output? It would be nice to see what they output to use it as a clue.

Thank you for pointing me in the right direction for debugging.

I added a timeout, and it turned out that many tests were failing due to the "too many open files" error. So I increased the maximum number of open file descriptors from 1024 to 65536 and tried again. This time, most errors disappeared, and I was left with the following issues:

1. sys::hardware_topology::test::topology_this_machine_unique_ids

2. net::udp_socket::tests::multi_executor_bind_works and executor::test::executor_pool_builder_shards_limit hang forever

3. Random test failures

1. topology_this_machine_unique_ids

This test fails with the following error:

thread 'sys::hardware_topology::test::topology_this_machine_unique_ids' panicked at 'assertion failed: `(left == right)`

left: `0`,

right: `1`: core 0 in numa_nodes 1 and 0', glommio/src/sys/hardware_topology.rs:169:21

The error message says the same core id is assigned to CPUs in different NUMA nodes. Indeed, the value of /sys/devices/system/cpu/cpuX/topology/core_id has many duplicates:

❯ for i in (seq 0 47); cat /sys/devices/system/cpu/cpu$i/topology/core_id | tr \n ' '; end

0 1 2 4 5 6 0 1 2 4 5 6 0 1 2 4 5 6 0 1 2 4 5 6 0 1 2 4 5 6 0 1 2 4 5 6 0 1 2 4 5 6 0 1 2 4 5 6

So, is there a way to fix this? The comment in the test says: "Check that we don't have a system where any hardware component has an id that is not unique system-wide." What does this mean? If what we need is a value that uniquely identifies the core, could we use the pair (die_id, core_id) as a virtual core id?
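To make the (die_id, core_id) idea concrete, here is a minimal sketch that assigns one dense id per distinct pair. The function name virtual_core_ids and the generic "group" naming are hypothetical and not part of glommio; the group id stands for whatever scopes the local core id (a die or a NUMA node, for example).

```rust
use std::collections::BTreeMap;

/// Assigns a dense, system-wide id to every distinct (group_id, core_id) pair.
/// `pairs` holds one entry per CPU; the result holds one virtual core id per CPU.
/// Hypothetical helper for illustration only, not glommio's implementation.
fn virtual_core_ids(pairs: &[(usize, usize)]) -> Vec<usize> {
    // Collect the distinct pairs; BTreeMap keeps them sorted, so the
    // numbering is deterministic.
    let mut ids: BTreeMap<(usize, usize), usize> = BTreeMap::new();
    for &pair in pairs {
        ids.entry(pair).or_insert(0);
    }
    // Number the distinct pairs 0, 1, 2, ... in sorted order.
    for (next, id) in ids.values_mut().enumerate() {
        *id = next;
    }
    // Look up the virtual id for each CPU's pair.
    pairs.iter().map(|pair| ids[pair]).collect()
}
```

Two CPUs that share a pair (hyperthreads of one core) get the same virtual id, while equal core_id values under different groups get different ones.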

2. Hanging tests

net::udp_socket::tests::multi_executor_bind_works and executor::test::executor_pool_builder_shards_limit hang forever unless I add a timeout (via the ntest crate). Both succeed when run individually. I haven't debugged further.
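For readers who want a timeout without an extra dependency, the same idea can be sketched with the standard library alone. This is not what was used in this thread (that was the ntest crate); run_with_timeout is a hypothetical helper name.

```rust
use std::sync::mpsc;
use std::thread;
use std::time::Duration;

/// Runs `f` on a helper thread and waits at most `limit` for its result.
/// Returns `None` if the limit elapses or `f` panics. On timeout the helper
/// thread is not cancelled; it keeps running until the process exits.
fn run_with_timeout<T, F>(limit: Duration, f: F) -> Option<T>
where
    T: Send + 'static,
    F: FnOnce() -> T + Send + 'static,
{
    let (tx, rx) = mpsc::channel();
    thread::spawn(move || {
        // After a timeout the receiver is gone, so a failed send is ignored.
        let _ = tx.send(f());
    });
    rx.recv_timeout(limit).ok()
}
```

Inside a test, a None result can be turned into a panic so that a hanging test fails with its output instead of blocking the whole run.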

3. Random test failures

Sorry if this is already a known issue; some tests failed randomly while I ran cargo test multiple times. Here are some outputs left in my shell history:

---- timer::timer_impl::test::timeout_does_not_expire stdout ----

thread 'timer::timer_impl::test::timeout_does_not_expire' panicked at 'assertion failed: now.elapsed().as_millis() < 10', glommio/src/timer/timer_impl.rs:811:13

---- executor::test::task_optimized_for_throughput stdout ----

thread 'executor::test::task_optimized_for_throughput' panicked at 'I was preempted but should not have been', glommio/src/executor/mod.rs:2528:37

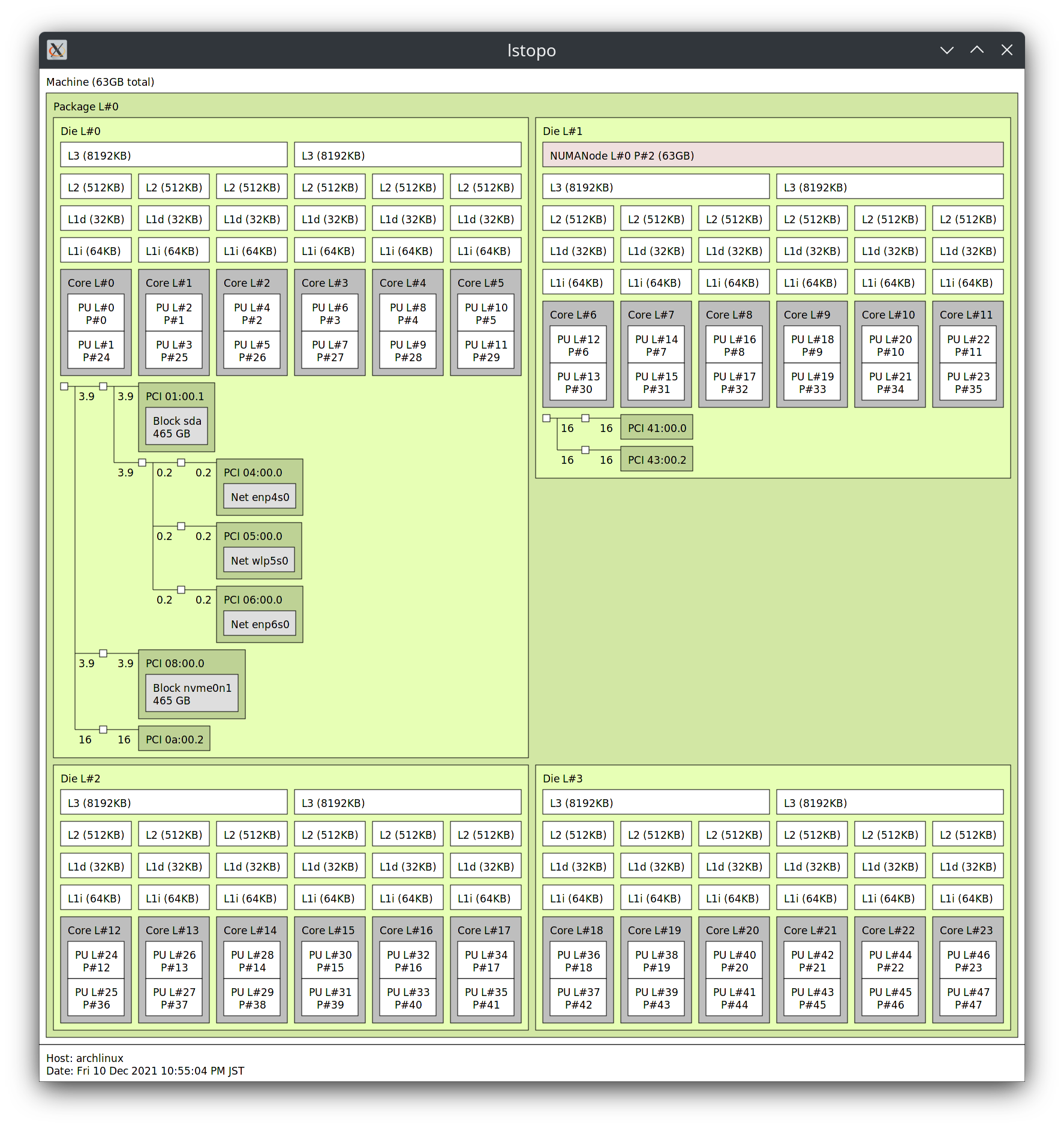

FYI: lstopo

The large number of open files is likely because cargo starts as many test threads as there are cores. And you seem to have plenty =)
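A rough way to see how tight the descriptor budget gets is to divide the soft open-file limit by the number of test threads. The sketch below parses text in the format of /proc/self/limits; both function names are hypothetical, and the per-thread split is only a back-of-the-envelope estimate, since tests do not share descriptors evenly.

```rust
/// Extracts the soft "Max open files" limit from text in the format of
/// /proc/self/limits. Returns `None` if the line is missing or the value
/// is not a number (for example "unlimited").
fn soft_open_file_limit(limits: &str) -> Option<u64> {
    limits
        .lines()
        .find(|line| line.starts_with("Max open files"))
        // Tokens: "Max", "open", "files", <soft>, <hard>, <units>
        .and_then(|line| line.split_whitespace().nth(3))
        .and_then(|soft| soft.parse().ok())
}

/// Rough number of descriptors available to each test thread.
fn fds_per_test_thread(soft_limit: u64, test_threads: u64) -> u64 {
    soft_limit / test_threads.max(1)
}
```

On a live system the input could come from std::fs::read_to_string("/proc/self/limits") and the thread count from std::thread::available_parallelism(). With a limit of 1024 and 48 CPUs this leaves about 21 descriptors per test thread; running cargo test -- --test-threads=N with a smaller N is another way to reduce the pressure.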

Your point 1. should be easy to fix; I will take a look later. Machines this big don't come around that often, so this code ends up not getting tested in that environment.

2. and 3. are likely timing related and will be a bit harder without access to the environment. So I propose we handle 1 first, and then we'll get to them =)

Thanks!

Can you paste the contents of:

/sys/devices/system/cpu/online

/sys/devices/system/node/online

And for each node:

/sys/devices/system/node/nodeX/cpulist

These are the files scanned by get_machine_topology_unsorted to build the topology map.

Here is the output:

❯ cat /sys/devices/system/cpu/online

0-47

❯ cat /sys/devices/system/node/online

0-3

❯ for i in (seq 0 3); cat /sys/devices/system/node/node$i/cpulist; end

0-5,24-29

12-17,36-41

6-11,30-35

18-23,42-47
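The values above use the sysfs range-list format (comma-separated single CPUs and inclusive ranges). A minimal parser for that format might look like the following; parse_cpulist is a hypothetical name and this is not glommio's own parsing code.

```rust
/// Parses a sysfs CPU list such as "0-5,24-29" into individual CPU numbers.
/// Returns `None` if any entry is malformed.
fn parse_cpulist(list: &str) -> Option<Vec<usize>> {
    let mut cpus = Vec::new();
    // trim() drops the trailing newline that sysfs files end with.
    for part in list.trim().split(',').filter(|p| !p.is_empty()) {
        match part.split_once('-') {
            // A range like "24-29" is inclusive on both ends.
            Some((start, end)) => {
                let start = start.parse::<usize>().ok()?;
                let end = end.parse::<usize>().ok()?;
                cpus.extend(start..=end);
            }
            // A single CPU like "7".
            None => cpus.push(part.parse::<usize>().ok()?),
        }
    }
    Some(cpus)
}
```

For node 0 in the output above, "0-5,24-29" expands to CPUs 0 through 5 and 24 through 29, twelve CPUs in total.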

Concerning 3., I have submitted #478 as a possible fix. Given that it's not reproducible in every environment, I imagine this is not a priority for you, so please check it out when you have time.

The files you provided look sane.

About the output you posted that has duplicates: note that this is totally fine. You are scanning the list of CPUs and printing their core_ids, and it is expected that many CPUs will be in a core.

That info is actually in a file that I forgot to ask you for, physical_package_id (sorry, my bad), which is inside /sys/devices/system/cpu/cpuX/topology. A similar loop printing the contents of that file would be useful, but again, duplicates are expected (many CPUs will fall in a package).

What is not expected is for a CPU to be listed in more than one node or in more than one package.
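That invariant (each CPU belongs to exactly one node, and to exactly one package) can be checked mechanically. The sketch below takes the CPU lists of all nodes, or of all packages, and reports any CPU that shows up more than once; cpus_listed_more_than_once is a hypothetical helper, not a glommio function.

```rust
use std::collections::HashMap;

/// Given the CPUs listed under each NUMA node (or each package), returns
/// the CPUs that are listed more than once, sorted ascending.
/// An empty result means every CPU belongs to exactly one group.
fn cpus_listed_more_than_once(groups: &[Vec<usize>]) -> Vec<usize> {
    // Count how many times each CPU number is listed overall.
    let mut seen: HashMap<usize, usize> = HashMap::new();
    for group in groups {
        for &cpu in group {
            *seen.entry(cpu).or_insert(0) += 1;
        }
    }
    let mut repeated: Vec<usize> = seen
        .into_iter()
        .filter(|&(_, count)| count > 1)
        .map(|(cpu, _)| cpu)
        .collect();
    repeated.sort_unstable();
    repeated
}
```

Applied to the four cpulist values posted earlier in this thread, the result is empty, which matches the assessment that those files look sane.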

Another thing that would be very useful is if you could add println!("{:?}", topology) as we enter the check_topology function, so we can see what we end up with.

I suspect some resource exhaustion is behind the other two tests hanging as well. In the placement test in particular, the only blocking operation is starting threads.

physical_package_id

They are all zero :(

❯ for i in (seq 0 47); cat /sys/devices/system/cpu/cpu$i/topology/physical_package_id | tr \n ' '; end

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

println!("{:?}", topology)

This is what we get

[

CpuLocation { cpu: 0, core: 0, package: 0, numa_node: 0 },

CpuLocation { cpu: 24, core: 0, package: 0, numa_node: 0 },

CpuLocation { cpu: 1, core: 1, package: 0, numa_node: 0 },

CpuLocation { cpu: 25, core: 1, package: 0, numa_node: 0 },

CpuLocation { cpu: 2, core: 2, package: 0, numa_node: 0 },

CpuLocation { cpu: 26, core: 2, package: 0, numa_node: 0 },

CpuLocation { cpu: 3, core: 4, package: 0, numa_node: 0 },

CpuLocation { cpu: 27, core: 4, package: 0, numa_node: 0 },

CpuLocation { cpu: 4, core: 5, package: 0, numa_node: 0 },

CpuLocation { cpu: 28, core: 5, package: 0, numa_node: 0 },

CpuLocation { cpu: 5, core: 6, package: 0, numa_node: 0 },

CpuLocation { cpu: 29, core: 6, package: 0, numa_node: 0 },

CpuLocation { cpu: 12, core: 0, package: 0, numa_node: 1 },

CpuLocation { cpu: 36, core: 0, package: 0, numa_node: 1 },

CpuLocation { cpu: 13, core: 1, package: 0, numa_node: 1 },

CpuLocation { cpu: 37, core: 1, package: 0, numa_node: 1 },

CpuLocation { cpu: 14, core: 2, package: 0, numa_node: 1 },

CpuLocation { cpu: 38, core: 2, package: 0, numa_node: 1 },

CpuLocation { cpu: 15, core: 4, package: 0, numa_node: 1 },

CpuLocation { cpu: 39, core: 4, package: 0, numa_node: 1 },

CpuLocation { cpu: 16, core: 5, package: 0, numa_node: 1 },

CpuLocation { cpu: 40, core: 5, package: 0, numa_node: 1 },

CpuLocation { cpu: 17, core: 6, package: 0, numa_node: 1 },

CpuLocation { cpu: 41, core: 6, package: 0, numa_node: 1 },

CpuLocation { cpu: 6, core: 0, package: 0, numa_node: 2 },

CpuLocation { cpu: 30, core: 0, package: 0, numa_node: 2 },

CpuLocation { cpu: 7, core: 1, package: 0, numa_node: 2 },

CpuLocation { cpu: 31, core: 1, package: 0, numa_node: 2 },

CpuLocation { cpu: 8, core: 2, package: 0, numa_node: 2 },

CpuLocation { cpu: 32, core: 2, package: 0, numa_node: 2 },

CpuLocation { cpu: 9, core: 4, package: 0, numa_node: 2 },

CpuLocation { cpu: 33, core: 4, package: 0, numa_node: 2 },

CpuLocation { cpu: 10, core: 5, package: 0, numa_node: 2 },

CpuLocation { cpu: 34, core: 5, package: 0, numa_node: 2 },

CpuLocation { cpu: 11, core: 6, package: 0, numa_node: 2 },

CpuLocation { cpu: 35, core: 6, package: 0, numa_node: 2 },

CpuLocation { cpu: 18, core: 0, package: 0, numa_node: 3 },

CpuLocation { cpu: 42, core: 0, package: 0, numa_node: 3 },

CpuLocation { cpu: 19, core: 1, package: 0, numa_node: 3 },

CpuLocation { cpu: 43, core: 1, package: 0, numa_node: 3 },

CpuLocation { cpu: 20, core: 2, package: 0, numa_node: 3 },

CpuLocation { cpu: 44, core: 2, package: 0, numa_node: 3 },

CpuLocation { cpu: 21, core: 4, package: 0, numa_node: 3 },

CpuLocation { cpu: 45, core: 4, package: 0, numa_node: 3 },

CpuLocation { cpu: 22, core: 5, package: 0, numa_node: 3 },

CpuLocation { cpu: 46, core: 5, package: 0, numa_node: 3 },

CpuLocation { cpu: 23, core: 6, package: 0, numa_node: 3 },

CpuLocation { cpu: 47, core: 6, package: 0, numa_node: 3 }

]

Thanks.

This makes it pretty obvious what is going on. The code assumes that the core IDs exposed by the system are global, but they are not.

You will see that core only goes up to 6, so indeed you have core 0 in many numa nodes.
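The condition that trips the assertion can be reproduced on a few entries of the dump. In this sketch the CpuLocation struct only mirrors the fields visible in the debug output (the real glommio type may differ), and core_in_two_numa_nodes is a hypothetical helper.

```rust
use std::collections::BTreeMap;

/// Mirrors the fields shown in the debug output; an assumption, not
/// glommio's actual definition.
#[allow(dead_code)]
#[derive(Debug, Clone, Copy)]
struct CpuLocation {
    cpu: usize,
    core: usize,
    package: usize,
    numa_node: usize,
}

/// Returns (core, first_node, other_node) for the first core id that is
/// seen under two different NUMA nodes, or `None` if core ids are unique
/// across nodes.
fn core_in_two_numa_nodes(topology: &[CpuLocation]) -> Option<(usize, usize, usize)> {
    // Remember the first NUMA node each core id was seen in.
    let mut node_of_core: BTreeMap<usize, usize> = BTreeMap::new();
    for loc in topology {
        let first_node = *node_of_core.entry(loc.core).or_insert(loc.numa_node);
        if first_node != loc.numa_node {
            return Some((loc.core, first_node, loc.numa_node));
        }
    }
    None
}
```

Feeding it the entries for CPUs 0, 24 and 12 from the dump reports core 0 in NUMA nodes 0 and 1, the same kind of conflict named in the panic message.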

The fix should be to make the core IDs global by combining them with the NUMA node id. I am a bit busy today with personal matters, but I can work on a fix later today. Alternatively, if you're willing to contribute that, that would be awesome!

The proper fix should first try to understand if there is any global core ID buried somewhere in sysfs that we can use, and if not, make core_id = num_numa_nodes * nr_numa_node + core_id
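One way to sketch the arithmetic fallback is shown below. Note one deliberate difference from the formula as written: if the multiplier is read as the number of NUMA nodes (4 on this machine) while local core ids go up to 6, then node 0 / core 4 and node 1 / core 0 would both map to 4. The sketch therefore assumes a stride of "largest local core id + 1" instead. Both function names are hypothetical, and this is not necessarily the fix that glommio adopted.

```rust
/// Combines a NUMA node id and a node-local core id into one system-wide id.
/// `stride` must be larger than every local core id; otherwise two different
/// (node, core) pairs could map to the same value.
fn global_core_id(numa_node: usize, core: usize, stride: usize) -> usize {
    assert!(core < stride, "stride must exceed the largest local core id");
    numa_node * stride + core
}

/// Smallest safe stride for a set of local core ids: the largest id plus one
/// (1 if the set is empty).
fn stride_for(local_core_ids: &[usize]) -> usize {
    local_core_ids.iter().copied().max().map_or(1, |max| max + 1)
}
```

With the local ids seen in this thread (0, 1, 2, 4, 5, 6) the stride is 7, so the four NUMA nodes map to 24 distinct global core ids.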

I would also love to understand why the physical_package_id is zero in this system =(

Yeah, I am happy to contribute, hopefully finishing by this weekend.

why the physical_package_id is zero

I am by no means an expert on Linux, but looking at the documentation, physical_package_id is described as follows:

physical package id of cpuX. Typically corresponds to a physical socket number,

but the actual value is architecture and platform dependent. Values: integer

So in a single-socket system (which I assume is true for most systems, including Threadripper), it seems natural that physical_package_id is identical for all CPUs.

Chiming in here; net::udp_socket::tests::multi_executor_bind_works seems to hang every time for me (when running the whole test suite, the whole test suite with 1 thread, and just the single test). I have seen a handful of others hang as well, but I don't have the names handy.

Linux 5.10.0-1052-oem #54-Ubuntu SMP Tue Nov 23 09:06:13 UTC 2021 x86_64

dhmo% cat /sys/devices/system/cpu/online

0-15

(davidblewett)Thu Feb 3, 16:33 | /home/davidblewett/go/src/github.com/DataDog/busly

dhmo% cat /sys/devices/system/node/online

0

(davidblewett)Thu Feb 3, 16:34 | /home/davidblewett/go/src/github.com/DataDog/busly

dhmo% cat /sys/devices/system/node/node0/cpulist

0-15

dhmo% ulimit -l

65536

Let me know if there's more info you want, or if you want me to do more troubleshooting.