neat-python


`full_nodirect` initialization is a bit odd, especially for CTRNNs

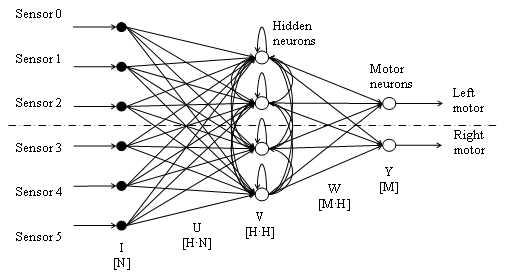

I just wanted to suggest an alternative (and probably more suitable) initialization for recurrent networks. In evolutionary robotics experiments, people usually work with CTRNNs that start with at least a few hidden neurons that are fully connected to each other (as shown in the picture). The current implementation, however, only adds self-connections, which I found a bit odd. Anyway, is there a way to start with such an architecture in the current version?
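To make the request concrete, here is a rough sketch of the connection list such an initialization might produce. It is not neat-python code: `full_recurrent_hidden_connections` is a hypothetical standalone helper, and the key layout in the example (negative input keys, output keys starting at 0, hidden keys after the outputs) is an assumption modeled on neat-python's usual numbering. Every input feeds every hidden neuron, every ordered pair of hidden neurons is linked (self-connections included), and every hidden neuron feeds every output.

```python
from itertools import product
from typing import List, Sequence, Tuple

Connection = Tuple[int, int]  # (source key, target key)


def full_recurrent_hidden_connections(
    input_keys: Sequence[int],
    hidden_keys: Sequence[int],
    output_keys: Sequence[int],
    direct: bool = False,
) -> List[Connection]:
    """Hypothetical helper: (source, target) pairs for a CTRNN-style start
    topology whose hidden neurons are fully connected among themselves."""
    connections: List[Connection] = []
    # Every input drives every hidden neuron.
    connections.extend(product(input_keys, hidden_keys))
    # Recurrent core: all ordered hidden pairs, self-connections included.
    connections.extend(product(hidden_keys, hidden_keys))
    # Every hidden neuron drives every output neuron.
    connections.extend(product(hidden_keys, output_keys))
    # Direct input->output links if requested, or if there are no hidden neurons.
    if direct or not hidden_keys:
        connections.extend(product(input_keys, output_keys))
    return connections


if __name__ == "__main__":
    # 2 inputs, 3 hidden neurons, 1 output: 2*3 + 3*3 + 3*1 = 18 connections.
    conns = full_recurrent_hidden_connections([-1, -2], [1, 2, 3], [0])
    print(len(conns))  # 18
```

Compared with self-connections only, the hidden layer here starts as a fully recurrent block (3 × 3 = 9 links in the example instead of 3). Plugging this into neat-python would require a custom genome class that uses such a list when creating the initial connections; that part is not shown.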