bdit_data-sources

bdit_data-sources copied to clipboard

bdit_data-sources copied to clipboard

Performance improvements for WYS and Miovision pipelines: reduce dead tuples

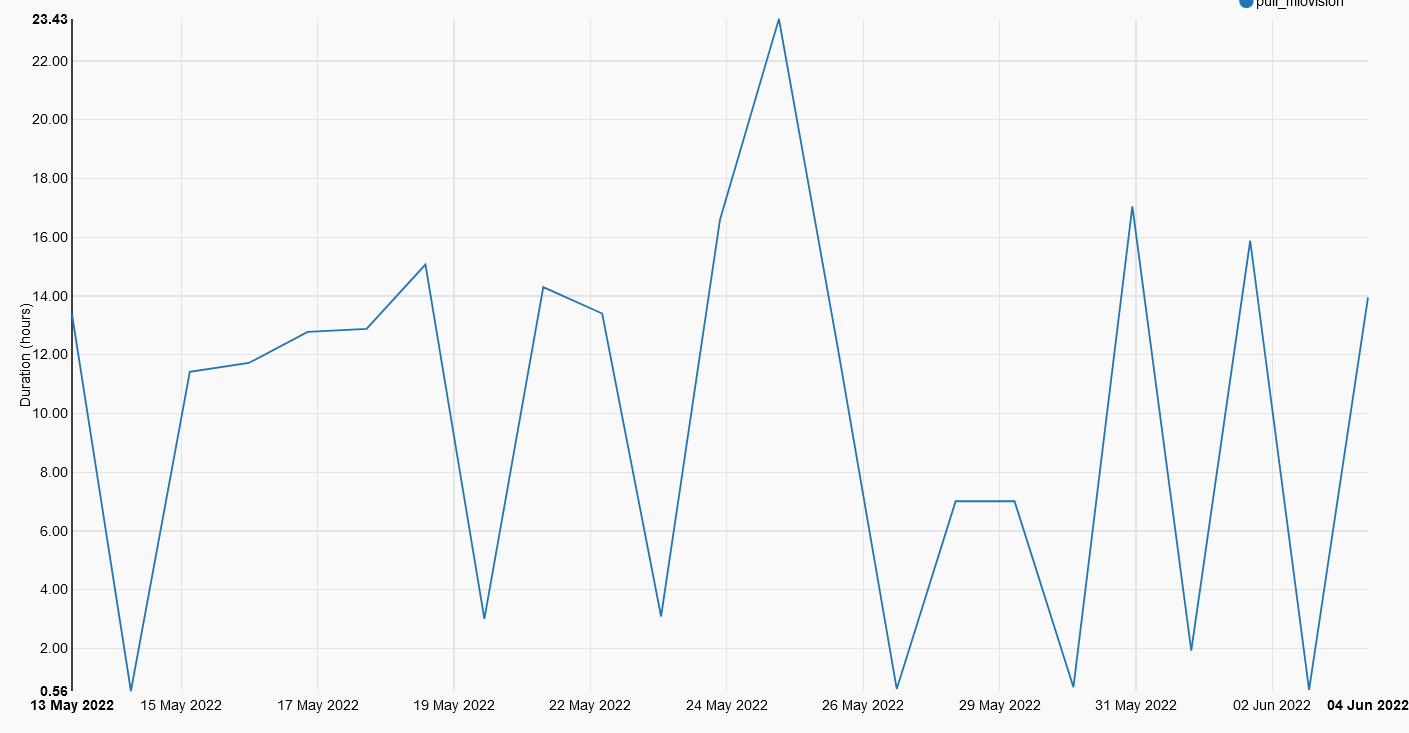

So this might be why miovision 15min aggregation is taking a real long time.

select * from pg_stat_all_tables ORDER BY n_dead_tup DESC LIMIT 10

In the top 4 are "wys"."raw_data_2022" "miovision_api"."volumes_2022" "miovision_api"."volumes_15min_mvt"

After I did VACUUM ANALYZE to reduce the number of dead tuples to 0 the function ran in record time, and then went back up again.

(granted this is a little inconsistent.... I don't remember all the times I ran vacuum)

but that time difference is all in the 15_min aggregation

(granted this is a little inconsistent.... I don't remember all the times I ran vacuum)

but that time difference is all in the 15_min aggregation

[2022-06-05 03:32:59,171] {bash_operator.py:157} INFO - 05 Jun 2022 03:32:59 INFO Updated gapsize table and found gaps exceeding allowable size

[2022-06-05 16:56:58,457] {bash_operator.py:157} INFO - 05 Jun 2022 16:56:58 INFO Aggregated to 15 minute movement bins

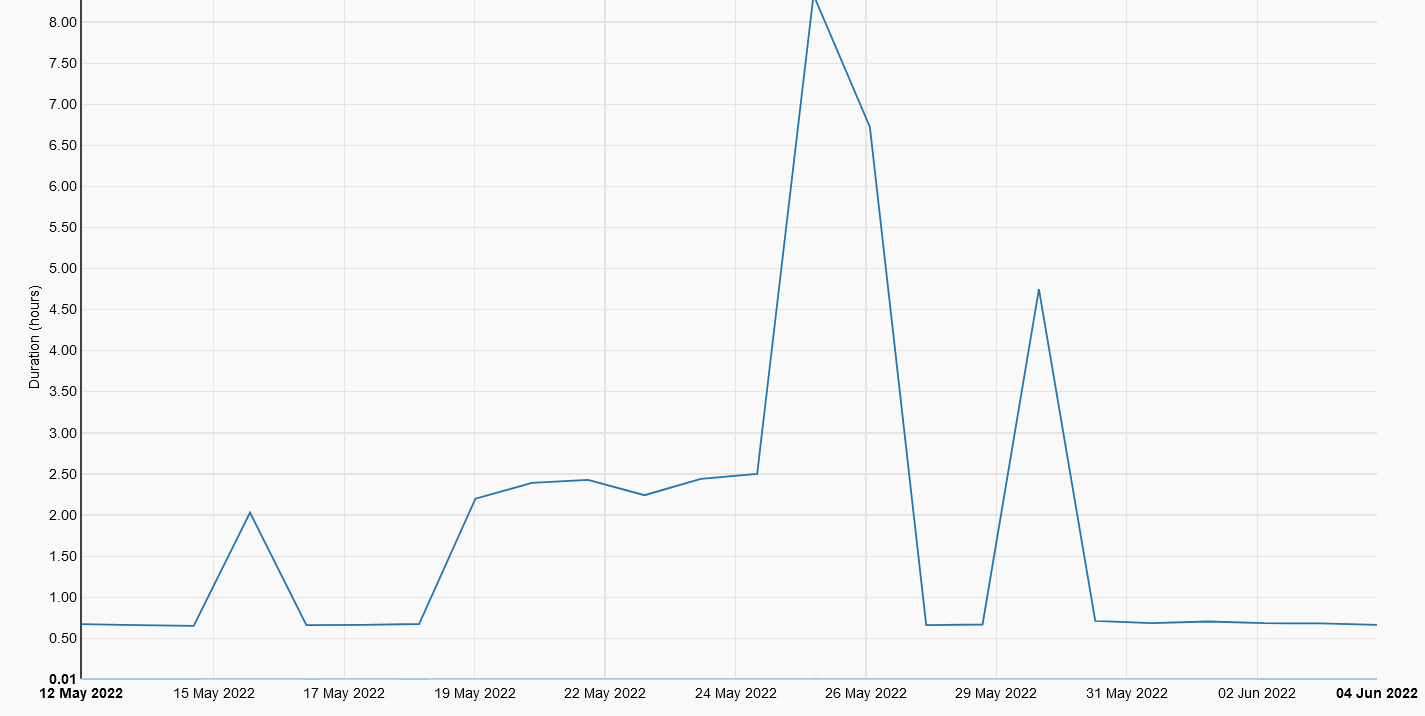

For pull_wys performance has been a bit more consistent and I think is more constrained by the API

This is discussed at length in this blog though I find their solution supremely unsatisfying, since we need both these unique constraints and to do an update (sometimes) instead of nothing on conflict. Also as part of the 15min_mvt function, but we do need to do the UPDATE on the volumes table.

Some possible tuning tips with the caveat that we can't modify these at a database level (but we can change the table params) https://www.cybertec-postgresql.com/en/tuning-autovacuum-postgresql/

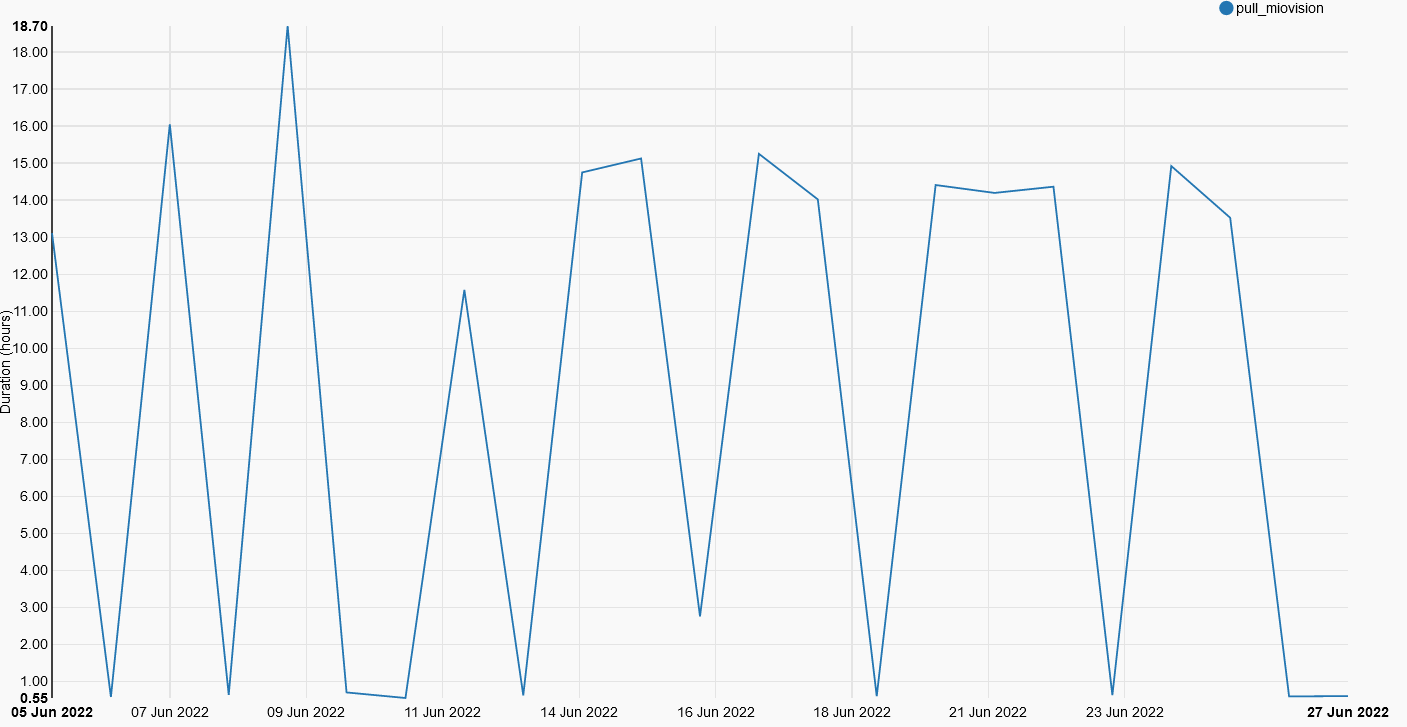

Running just a VACUUM ANALYZE miovision_api.volumes_2022 after today's pipeline finished (after 13 hours), will see what the performance boost is tomorrow

Aggregation ran in 2 minutes

[2022-06-07 03:32:13,506] {bash_operator.py:157} INFO - 07 Jun 2022 03:32:13 INFO Updated gapsize table and found gaps exceeding allowable size

[2022-06-07 03:33:53,654] {bash_operator.py:157} INFO - 07 Jun 2022 03:33:53 INFO Aggregated to 15 minute movement bins

And now there are 702k dead tuples in the volumes_2022 table.

We can see how often the VACUUM runs for Miovision

Let's see if this works

ALTER TABLE miovision_api.volumes_2018 SET (autovacuum_enabled = TRUE);

ALTER TABLE miovision_api.volumes_2019 SET (autovacuum_enabled = TRUE);

ALTER TABLE miovision_api.volumes_2020 SET (autovacuum_enabled = TRUE);

ALTER TABLE miovision_api.volumes_2021 SET (autovacuum_enabled = TRUE);

ALTER TABLE miovision_api.volumes_2022 SET (autovacuum_enabled = TRUE);

ALTER TABLE miovision_api.volumes_2018 SET ( autovacuum_vacuum_cost_limit = 400, autovacuum_vacuum_cost_delay =1);

ALTER TABLE miovision_api.volumes_2019 SET ( autovacuum_vacuum_cost_limit = 400, autovacuum_vacuum_cost_delay =1);

ALTER TABLE miovision_api.volumes_2020 SET ( autovacuum_vacuum_cost_limit = 400, autovacuum_vacuum_cost_delay =1);

ALTER TABLE miovision_api.volumes_2021 SET ( autovacuum_vacuum_cost_limit = 400, autovacuum_vacuum_cost_delay =1);

ALTER TABLE miovision_api.volumes_2022 SET ( autovacuum_vacuum_cost_limit = 400, autovacuum_vacuum_cost_delay =1);

The miovision DAG has been running faster & more consistently since we completed the re-partitioning #735 (task durations). I propose that after closing #781 we can mark this issue as closed too.

Removing Miovision tag from this issue as discussed with @radumas