InceptionResnetV2 comments

Hi guys,

First, thanks very much for the package! I found it very easy to use and am very grateful that you put in the effort to make it.

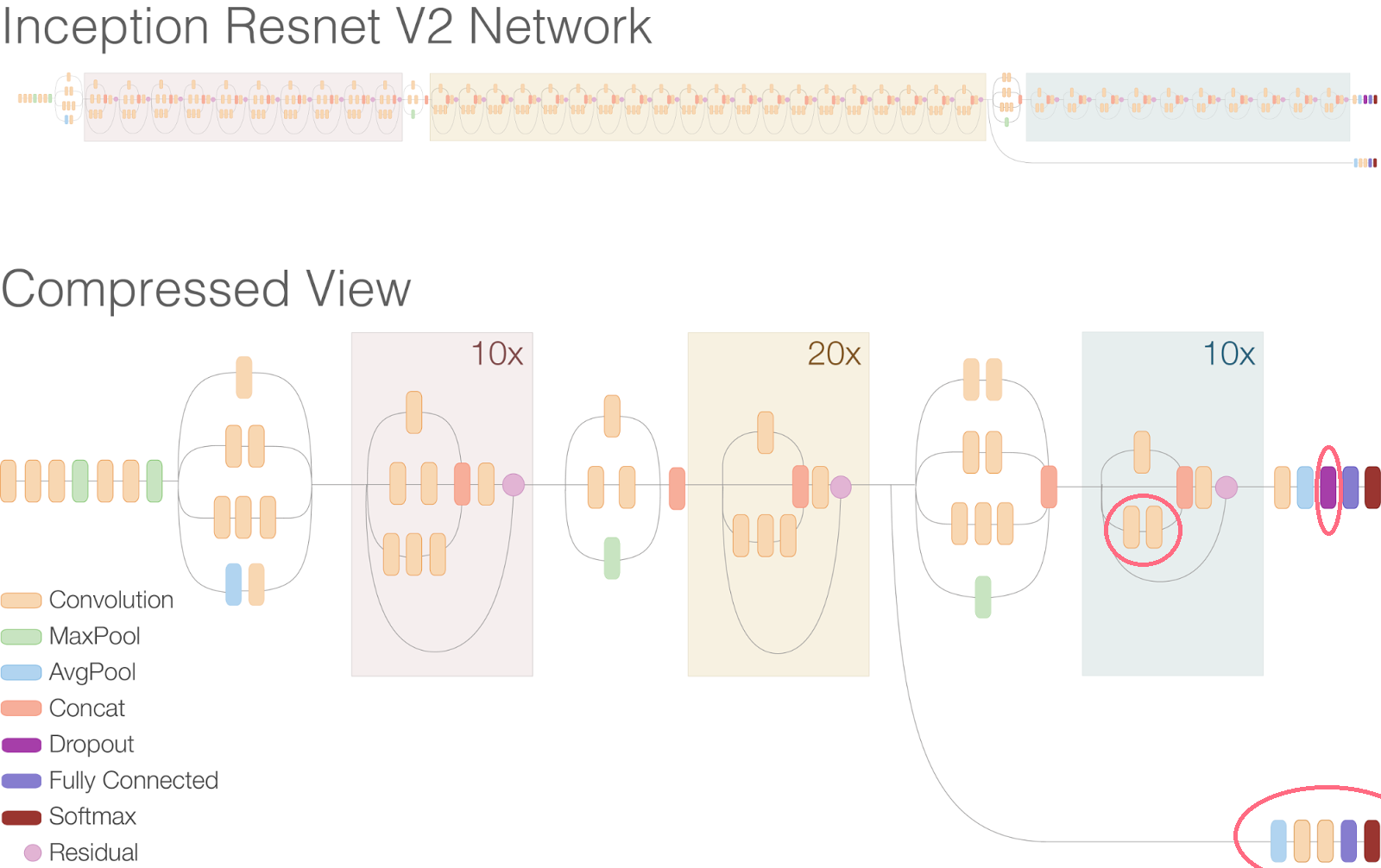

I was looking into the implementation of InceptionResnetv2 and comparing it to Google's description. I see 3 areas where I can't quite match up the code to the online description, and I wondered what you thought about them. The 3 issues are annotated on this image:

1. Branch (1) in block 8 has three 2D convolutions rather than two. I suspect this was on purpose, given the kernel sizes of the last two convolutions [(1,3) then (3,1)], but I wanted to check.

2. I cannot find the dropout layer at the end of the network, between avgpool_1a and last_linear.

3. I cannot see the extra branch that comes out of the middle of the network (between repeat/sequential/Block17 and mixed_7a in your implementation). I guess this was also on purpose. Is it intended for the user to implement?

Thanks for your help!

EDIT: I see that 1) has a direct analogue in the TensorFlow repo. 3) is, I think, related to the "create_aux_logits" check in the TF repo.
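For point 1, here is a small arithmetic sketch of why a (1,3) convolution followed by a (3,1) convolution is a plausible stand-in for a single 3x3 convolution: the pair covers the same 3x3 receptive field with fewer weights. The channel counts (192, 224, 256) are assumptions for illustration and should be checked against `inceptionresnetv2.py`. `conv_weights` and `stacked_receptive_field` are hypothetical helpers, not part of either code base.

```python
def conv_weights(c_in, c_out, kernel):
    """Number of weights in a 2D convolution without bias."""
    kh, kw = kernel
    return c_in * c_out * kh * kw


def stacked_receptive_field(kernels):
    """Receptive field (height, width) of stride-1 convolutions applied in sequence."""
    rf_h, rf_w = 1, 1
    for kh, kw in kernels:
        rf_h += kh - 1
        rf_w += kw - 1
    return rf_h, rf_w


# Assumed channel counts, for illustration only.
factorized = conv_weights(192, 224, (1, 3)) + conv_weights(224, 256, (3, 1))
full = conv_weights(192, 256, (3, 3))

print(stacked_receptive_field([(1, 3), (3, 1)]))  # (3, 3)
print(factorized, full)  # 301056 442368
```

Under these assumed sizes the factorized pair needs roughly two thirds of the weights of a full 3x3 convolution, which would be consistent with the extra convolution being deliberate.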

Hi @andrewgrahambrown,

Thanks for submitting this issue :)

1. Sorry, I did not understand this point. Could you please point out the relevant lines in this repo and in the TensorFlow repo? Thanks.

2. It seems that I forgot the dropout at the end.

3. You are right, I did not port it. I never tried to fine-tune this network btw, so I don't know whether this branch is useful or not.

1. The issue is that lines 214/215 in inceptionresnetv2.py do not match the description of InceptionResnetV2 in Google's blog post. However, on further inspection, lines 214/215 do match lines 92-95 of inception_resnet_v2.py in the TF implementation, and they also match Fig. 13 in the related publication. So I suspect that it is Google's blog post that has a small error, not the code.

2. Happy to help there then!

3. I'm also not sure. I would guess it makes only a small difference, if only because it is optional in the TF implementation.

2. Feel free to submit a pull request ;)

3. Yeah, this branch could be helpful for fine-tuning as well. However, I am not sure I can find the time to add it. Why do you need it?
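For anyone who wants to experiment with point 3, here is a rough PyTorch sketch of an auxiliary head, loosely modelled on my reading of the `create_aux_logits` path in the TF code: average pool, 1x1 convolution, a convolution that collapses the remaining spatial extent, then a linear layer. The class name `AuxLogits`, the 1088 input channels, and the 17x17 input size are assumptions and are not taken from this repo. Since the branch was not ported, it would start from random weights.

```python
import torch
import torch.nn as nn


class AuxLogits(nn.Module):
    """Hypothetical auxiliary classifier fed from the middle of the network."""

    def __init__(self, in_channels=1088, num_classes=1000):
        super().__init__()
        # 17x17 -> 5x5 with no padding (assumes a 17x17 input feature map).
        self.pool = nn.AvgPool2d(kernel_size=5, stride=3)
        self.conv_1b = nn.Sequential(
            nn.Conv2d(in_channels, 128, kernel_size=1, bias=False),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
        )
        # 5x5 kernel collapses the 5x5 map to 1x1.
        self.conv_2a = nn.Sequential(
            nn.Conv2d(128, 768, kernel_size=5, bias=False),
            nn.BatchNorm2d(768),
            nn.ReLU(inplace=True),
        )
        self.logits = nn.Linear(768, num_classes)

    def forward(self, x):
        x = self.pool(x)
        x = self.conv_1b(x)
        x = self.conv_2a(x)
        x = torch.flatten(x, 1)
        return self.logits(x)


aux = AuxLogits().eval()
with torch.no_grad():
    aux_out = aux(torch.randn(2, 1088, 17, 17))
print(aux_out.shape)
```

During training, the usual approach would be to add a down-weighted loss on these auxiliary logits to the main loss; at inference the head can simply be ignored.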

Make a fork with the dropout layer

Is there a fork with the dropout layer?
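In case it helps, here is a minimal sketch of what such a change could look like: a dropout layer placed between the final average pool and the last linear layer. This is not code from the repo or from any existing fork. `ClassifierHead` is a hypothetical stand-in for the end of the network, the 1536 input features and 8x8 feature map are assumptions, and `drop_p=0.2` assumes the TF default keep probability of 0.8.

```python
import torch
import torch.nn as nn


class ClassifierHead(nn.Module):
    """Hypothetical head: average pool -> dropout -> linear."""

    def __init__(self, in_features=1536, num_classes=1000, drop_p=0.2):
        super().__init__()
        # Adaptive pooling is used here as a stand-in for the fixed-size pool.
        self.avgpool_1a = nn.AdaptiveAvgPool2d(1)
        self.dropout = nn.Dropout(p=drop_p)
        self.last_linear = nn.Linear(in_features, num_classes)

    def forward(self, features):
        x = self.avgpool_1a(features)
        x = torch.flatten(x, 1)
        x = self.dropout(x)  # active in train mode, identity in eval mode
        return self.last_linear(x)


head = ClassifierHead().eval()
features = torch.randn(2, 1536, 8, 8)  # assumed shape of the last feature map
with torch.no_grad():
    logits = head(features)
print(logits.shape)
```

Because `nn.Dropout` has no parameters, adding it should not affect loading the pretrained weights, and in eval mode the outputs stay the same as without it. It only matters when fine-tuning in train mode.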