plugin_thold_host_failed not updating (Thold v1.5, Cacti 1.2.16)

Hello everyone,

I have come across an issue on one of my instances. The plugin_thold_host_failed table has around 8k entries in it, even though only about 100 devices are down.

I ran a lab test and found that when dead host notification is disabled, this table does not update at all.

I found this routine:

```php
// Lets find hosts that were down, but are now back up
if (read_config_option('remote_storage_method') == 1) {
    $failed = db_fetch_assoc_prepared('SELECT *
        FROM plugin_thold_host_failed
        WHERE poller_id = ?',
        array($config['poller_id']));
} else {
    $failed = db_fetch_assoc('SELECT *
        FROM plugin_thold_host_failed');
}
```

That should update the table, but it does not when dead host notification is turned off.
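To make the expected behaviour concrete, here is a minimal, self-contained PHP model. It is not thold's actual code. It selects the tracked rows, filtering by poller when `remote_storage_method` is 1 as in the excerpt, and treats any row whose device is up again as recovered and removed. The function `model_recovery_pass()`, the array shapes, and the use of 3 as Cacti's `HOST_UP` status value are all assumptions for illustration.

```php
<?php
// Hypothetical model, not thold's real code. Rows mimic plugin_thold_host_failed
// (id, poller_id, host_id); $hostStatus maps host_id => status, where 3 is
// assumed to match Cacti's HOST_UP constant.

/**
 * One recovery pass: returns [rows left in the table, host ids treated as recovered].
 * With $remoteStorageMethod == 1 only rows for $pollerId are examined, mirroring
 * the "WHERE poller_id = ?" branch quoted above.
 */
function model_recovery_pass(array $failed, array $hostStatus, int $remoteStorageMethod, int $pollerId, int $hostUp = 3): array
{
    $remaining = [];
    $recovered = [];

    foreach ($failed as $row) {
        $inScope = ($remoteStorageMethod != 1) || ($row['poller_id'] == $pollerId);
        $isUp    = ($hostStatus[$row['host_id']] ?? null) === $hostUp;

        if ($inScope && $isUp) {
            $recovered[] = $row['host_id']; // stands in for the recovery notice plus row removal
        } else {
            $remaining[] = $row;            // row stays in the table
        }
    }

    return [$remaining, $recovered];
}

// Host 8 is back up, host 9 is still down, host 13 is up but tracked under poller 2.
$failed = [
    ['id' => 1, 'poller_id' => 1, 'host_id' => 8],
    ['id' => 2, 'poller_id' => 1, 'host_id' => 9],
    ['id' => 3, 'poller_id' => 2, 'host_id' => 13],
];
$status = [8 => 3, 9 => 1, 13 => 3];

[$remaining, $recovered] = model_recovery_pass($failed, $status, 1, 1);
echo implode(',', $recovered), PHP_EOL; // 8
```

In this model, the third row shows why the poller_id values matter. With remote storage enabled, a row recorded under a different poller id than the one running the pass is never examined. It stays in the table even though the device is up.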

Another case I found: even when notification is on, the entry is not always removed. This results in emails and command executions for devices that were down some time ago.

We tested with both the daemon and the standard PHP process and got the same outcome.

I also notice that in the table, every device ID shows as being on poller 1, even though the devices are not on poller 1. I am not sure whether poller_id = 1 on the thold side is because thold runs on the main poller.

A bit more output:

In this example I fail a batch of test devices and then restore them:

```
2021-01-29 17:36:11 - SPINE: Poller[Main Poller] PID[17783] Device[1.1.1.181] Hostname[1.1.1.181] NOTICE: HOST EVENT: Device Returned from DOWN State
2021-01-29 17:36:11 - SPINE: Poller[Main Poller] PID[17783] Device[1.1.1.174] Hostname[1.1.1.174] NOTICE: HOST EVENT: Device Returned from DOWN State
2021-01-29 17:36:11 - SPINE: Poller[Main Poller] PID[17783] Device[1.1.1.182] Hostname[1.1.1.182] NOTICE: HOST EVENT: Device Returned from DOWN State
2021-01-29 17:36:11 - SPINE: Poller[Main Poller] PID[17783] Device[1.1.1.175] Hostname[1.1.1.175] NOTICE: HOST EVENT: Device Returned from DOWN State
2021-01-29 17:36:10 - SPINE: Poller[Main Poller] PID[17783] Device[1.1.1.185] Hostname[1.1.1.185] NOTICE: HOST EVENT: Device Returned from DOWN State
2021-01-29 17:36:09 - SPINE: Poller[Main Poller] PID[17783] Device[1.1.1.168] Hostname[1.1.1.168] NOTICE: HOST EVENT: Device Returned from DOWN State
2021-01-29 17:36:09 - SPINE: Poller[Main Poller] PID[17783] Device[1.1.1.12] Hostname[1.1.1.12] NOTICE: HOST EVENT: Device Returned from DOWN State
2021-01-29 17:36:09 - SPINE: Poller[Main Poller] PID[17783] Device[1.1.1.187] Hostname[1.1.1.187] NOTICE: HOST EVENT: Device Returned from DOWN State
2021-01-29 17:36:08 - SPINE: Poller[Main Poller] PID[17783] Device[1.1.1.178] Hostname[1.1.1.178] NOTICE: HOST EVENT: Device Returned from DOWN State
2021-01-29 17:36:08 - SPINE: Poller[Main Poller] PID[17783] Device[1.1.1.165] Hostname[1.1.1.165] NOTICE: HOST EVENT: Device Returned from DOWN State
```

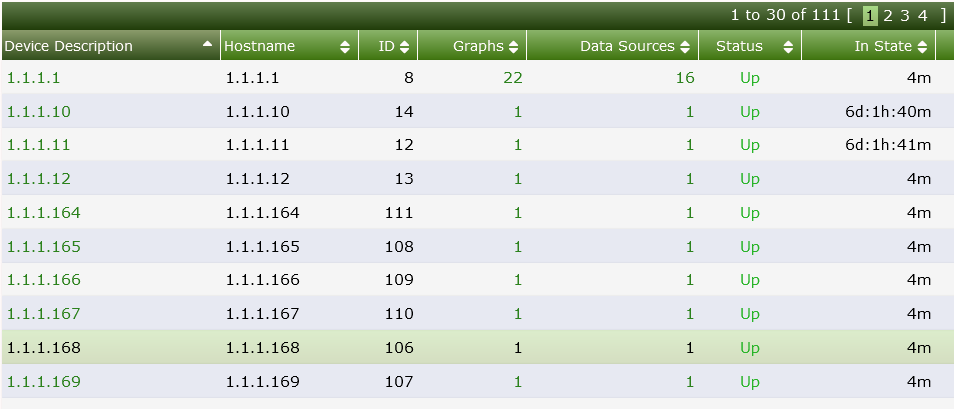

The devices show up in the console.

While the devices were down, dead device notification was set to off.

The table entries remain:

```
MariaDB [cacti]> select * from plugin_thold_host_failed;
+----+-----------+---------+
| id | poller_id | host_id |
+----+-----------+---------+
|  1 |         1 |       8 |
|  2 |         1 |       9 |
|  3 |         1 |      13 |
|  4 |         1 |      86 |
|  5 |         1 |      87 |
|  6 |         1 |      88 |
|  7 |         1 |      89 |
|  8 |         1 |      90 |
|  9 |         1 |      91 |
| 10 |         1 |      92 |
| 11 |         1 |      93 |
| 12 |         1 |      94 |
| 13 |         1 |      95 |
| 14 |         1 |      96 |
| 15 |         1 |      97 |
| 16 |         1 |      98 |
| 17 |         1 |      99 |
| 18 |         1 |     101 |
| 19 |         1 |     102 |
| 20 |         1 |     104 |
| 21 |         1 |     105 |
| 22 |         1 |     106 |
| 23 |         1 |     107 |
| 24 |         1 |     108 |
| 25 |         1 |     109 |
| 26 |         1 |     110 |
| 27 |         1 |     111 |
+----+-----------+---------+
27 rows in set (0.000 sec)
```

Once you enable dead device notification, a flood of emails is triggered, even though those devices have since come back up:

```
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.164] Hostname[1.1.1.164] can not send a Device recovering email for '1.1.1.164' since the 'Alert Email' setting is not set for Device!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.164] Hostname[1.1.1.164] is recovering!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.167] Hostname[1.1.1.167] can not send a Device recovering email for '1.1.1.167' since the 'Alert Email' setting is not set for Device!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.167] Hostname[1.1.1.167] is recovering!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.166] Hostname[1.1.1.166] can not send a Device recovering email for '1.1.1.166' since the 'Alert Email' setting is not set for Device!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.166] Hostname[1.1.1.166] is recovering!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.165] Hostname[1.1.1.165] can not send a Device recovering email for '1.1.1.165' since the 'Alert Email' setting is not set for Device!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.165] Hostname[1.1.1.165] is recovering!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.169] Hostname[1.1.1.169] can not send a Device recovering email for '1.1.1.169' since the 'Alert Email' setting is not set for Device!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.169] Hostname[1.1.1.169] is recovering!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.168] Hostname[1.1.1.168] can not send a Device recovering email for '1.1.1.168' since the 'Alert Email' setting is not set for Device!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.168] Hostname[1.1.1.168] is recovering!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.170] Hostname[1.1.1.170] can not send a Device recovering email for '1.1.1.170' since the 'Alert Email' setting is not set for Device!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.170] Hostname[1.1.1.170] is recovering!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.171] Hostname[1.1.1.171] can not send a Device recovering email for '1.1.1.171' since the 'Alert Email' setting is not set for Device!
2021-01-29 17:41:14 - THOLD WARNING: Device[1.1.1.171] Hostname[1.1.1.171] is recovering!
```

OK, I did a deeper dive.

In polling.php there is this statement:

```php
function thold_update_host_status() {
    global $config;

    // Return if we aren't set to notify
    $deadnotify = (read_config_option('alert_deadnotify') == 'on');

    if (!$deadnotify) {
        return 0;
    }

    // ... rest of the function ...
}
```

This makes sense when dead host notification is set to off. The problem is that if you turn it off while you have down hosts, or if you add devices through automation (where an email shows the device in a restored state), this table will not be cleared, because thold_update_host_status() returns before it ever reaches the cleanup.
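The effect of that early return can be illustrated with a small, self-contained PHP model. It is not thold's code. The function `model_update_host_status()`, the array shapes, and the assumption that status 3 is Cacti's `HOST_UP` value are illustrative only. The model shows rows for recovered devices surviving while notification is off, and then all being processed in a single pass once it is switched on.

```php
<?php
// Hypothetical model of the control flow described above (not thold's real code):
// the cleanup only runs when alert_deadnotify is 'on'. Status 3 is assumed to be
// Cacti's HOST_UP value.
function model_update_host_status(string $deadnotify, array &$failed, array $hostStatus, int $hostUp = 3): int
{
    if ($deadnotify !== 'on') {
        return 0; // early return: tracked rows are never examined or removed
    }

    $before = count($failed);
    // Keep only rows whose device is not up; every recovered device leaves at once.
    $failed = array_values(array_filter($failed, function (array $row) use ($hostStatus, $hostUp) {
        return ($hostStatus[$row['host_id']] ?? null) !== $hostUp;
    }));

    return $before - count($failed);
}

$status = [8 => 3, 9 => 3];                 // both devices have since recovered
$table  = [['host_id' => 8], ['host_id' => 9]];

model_update_host_status('', $table, $status);              // notification off
$afterOff = count($table);
echo $afterOff, PHP_EOL;                                     // 2: stale rows remain

$removed = model_update_host_status('on', $table, $status); // notification turned on
echo $removed, PHP_EOL;                                      // 2: all processed in one pass
```

The second call corresponds to the flood described earlier. Every row left behind while notification was off is handled at once.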

Another thing: with the new execute-a-command option introduced in 1.5, thold still attempts to send an email, and there is no way to turn that off.

So if this table has a lot of these entries and you turn on dead host notification, thold will still try to send out an email, even if you only expect it to send a command to a ticketing system or something else.

I think that when dead host notification is disabled, any existing entries should be truncated/removed. Based on my tests, thold only seems to use this table to track what is down, and only when dead host notification is enabled.
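A minimal sketch of that proposal, again as a self-contained PHP model rather than a patch against thold. The function name `model_update_host_status_proposed()`, the array shapes, and status 3 standing for Cacti's `HOST_UP` are assumptions. When notification is off, the tracking rows are discarded instead of the function returning early, so re-enabling notification does not replay stale recoveries.

```php
<?php
// Hypothetical sketch of the proposal above, not an official thold change.
// When dead host notification is off, discard the tracking rows instead of
// returning early. Status 3 is assumed to be Cacti's HOST_UP value.
function model_update_host_status_proposed(string $deadnotify, array &$failed, array $hostStatus, int $hostUp = 3): array
{
    if ($deadnotify !== 'on') {
        $failed = [];   // stands in for truncating plugin_thold_host_failed
        return [];      // nothing is notified
    }

    $recovered = [];
    $failed = array_values(array_filter($failed, function (array $row) use ($hostStatus, $hostUp, &$recovered) {
        if (($hostStatus[$row['host_id']] ?? null) === $hostUp) {
            $recovered[] = $row['host_id']; // a recovery notification would be sent here
            return false;                   // and the row removed
        }
        return true;
    }));

    return $recovered;
}

$status = [8 => 3, 9 => 3];
$table  = [['host_id' => 8], ['host_id' => 9]];

model_update_host_status_proposed('', $table, $status);           // notification off: rows discarded
$sent = model_update_host_status_proposed('on', $table, $status); // re-enabled later
echo count($sent), PHP_EOL; // 0: no stale recovery notifications
```

A gentler variant would remove only rows whose device is already up and keep rows for devices that are still down. Whether clearing everything is safe depends on how thold uses these rows for devices that are still down when notification is turned back on.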

Hey guys, just wanted to bump this in case it got lost in the mix.

I was hoping @browniebraun could take a look at this.

Hey @browniebraun, do you have any thoughts? Would I be able to safely truncate this table?

I have done so in my test and it seems to be OK, but I am not sure whether it is messing something up in the background.