Add a kernel that stores objects twice: with Intervals and Gmpq

Summary of Changes

Add a kernel and a numbertype that stores interval approximations for Gmqs.

TODO:

- [ ] Go through comments 'AF: '

- [x] Remove initialization of the infinite vertex in T3

- [x] Bench

Release Management.

- Affected package(s):

Kernel_23 - License and copyright ownership: GF

Ah, yes, that's a good idea, I did think about it when I noticed how much time a filtered rational kernel spends converting the same rational to an interval again and again.

TODO: Once all tests pass, add specialization of the construction wrapper to use approx input when possible (to avoid non needed conversion to interval).

TODO: Once all tests pass, add specialization of the construction wrapper to use approx input when possible (to avoid non needed conversion to interval).

After the exact construction we create the approximation. However, we have "constructions" such as Construct_source_2(Segment_2) where we can just take the approximation of the point instead of regenerating it again from the exact result. Question: Isn't it enough to distinguish between result types that are copies and result types that are references?

@sloriot @afabri

2490190 and feae9aa need reviewing.

Former is to get CGAL::Iso_rectangle_2<FRK>::Rep::first_type and CGAL::Getter to play nice together.

Latter is because const CGAL::Getter<Args>::first_type&... gives a const& and then it doesn't match the specific overloads for result_of, for example for Construct_vertex_2 which by default has result type const Point_2&, but if matched with (Iso_rectangle_2, int), it becomes simply Point_2. So, result_of<CV2(const Iso_rectangle_2&, int)> won't see Point_2butconst Point_2&and then you haveconst&` of temporaries.

As we do constructions always with the exact time, we get lots of mallocs and reallocs of Gmpq. @mglisse I saw this newsgroup entry and I am wondering if you still have your trivial allocator.

As we do constructions always with the exact time, we get lots of mallocs and reallocs of

Gmpq.

Did you try the latest version of GMP? Did you try mpq_class (or boost) instead? It might reduce a bit the overhead (no miracle though).

@mglisse I saw this newsgroup entry and I am wondering if you still have your trivial allocator.

What "trivial allocator" are you talking about? The pool mentioned in the linked message sounds similar to the old branch cgal-public-dev/STL_Extension-recycle_Handle_for-glisse .

One point about static filters (I don't know if this is the right place to discuss it, but it is relevant to this branch). The way they are engineered is, we store for each number a bound on the absolute value of the number and a bound epsilon on the absolute error. Then we propagate this and get a bound on the final error. One important aspect is how we initialize the error. We can assume that the input doubles are exact, which leads to a small epsilon, but can only be used if fit_in_double. Or we can assume that the input double has an error smaller than 1 ulp (.5 for some types) and get a larger epsilon (fails more often), but be able to use the filters in more cases. In particular, here, if we do constructions with mpq_t and create an interval from that, most intervals should have length 1ulp and thus be good candidates for the relaxed static filters.

Looking at cgal-public-dev/STL_Extension-recycle_Handle_for-glisse I am wondering if we do not speak about different things here. I thought one would have to use a pool on a lower level, namely Custom Allocation. Your goal is to avoid deleting reps, right?

Looking at cgal-public-dev/STL_Extension-recycle_Handle_for-glisse I am wondering if we do not speak about different things here. I thought one would have to use a pool on a lower level, namely Custom Allocation.

I read the mailing list discussion you linked to in the previous message, and I don't see any mention of an allocator there, so I am confused... You can pass your own allocation function to GMP, I probably tried it at some point but I don't remember it.

Your goal is to avoid deleting reps, right?

That branch never destructs any Gmpq, they get reused, which limits allocations a lot.

The Filtered_rational_kernel is used as Exact_predicates_exact_constructions_kernel when CGAL_USE_FILTERED_RATIONAL_KERNEL is defined.

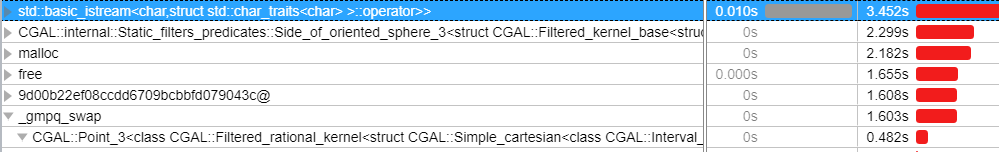

I compared the performance of three programs

- Polygon_mesh_processing/examples/Polygon_mesh_processin/corefinement_mesh_union_with_evpm.cpp takes 6.9 sec instead of 5.1 sec

- Boolean_set_operations/benchmark/Boolean_set_operations/polygon_set_2_join.cpp takes 17 sec instead of 7 sec.

- A 3D Delaunay triangulation with Epeck takes 18 sec instead of 11 sec

The time for 3D Delaunay is surprising. There is no construction involved, this version of Epeck should do pretty much the same computations as the lazy Epeck. The memory is arranged slightly less efficiently (intervals and Gmpq interspersed), but I wouldn't expect it to have such a large effect. Or is the extra time spent in the initial conversion from double to Gmpq to construct the points, where the lazy kernel only constructs a node with the double, while the actual Delaunay computation time remains the same? Did you also compare with Epeck with -DCGAL_DONT_USE_LAZY_KERNEL?

Everything becomes slighlty slower. For example the fit_in_double inside the static filter runs on an interval and has to determine again and again that inf == sup , the hilbert sort compares intervals, std::nth, and even the assignment of a point when std::swapped while doing the std::random_shuffle.

We probably should focus on examples where we really make constructions.

the fit_in_double inside the static filter runs on an interval and has to determine again and again that inf == sup

How does the lazy kernel avoid doing the same thing? fit_in_double(Lazy_exact_nt) calls fit_in_double(Interval_nt).

the hilbert sort compares intervals, std::nth,

What does the lazy kernel do differently there?

and even the assignment of a point when std::swapped while doing the std::random_shuffle.

Ok, you assign 3 Handle_for and 3 Interval_nt (1 per coordinate) instead of 1 Handle (for the whole point), although you could use Cartesian instead of Simple_cartesian if that's what you want. On the other hand, I thought that the goal of this kernel would be thread-safety, so even using Gmpq (as opposed to mpq_class or mpq_rational) is strange, but those would be way more costly to copy.

NB: swap doesn't seem to give very good code for Gmpq or lazy point, we may want to do something about it at some point.

I looked again at the 3D Delaunay with the Lazy_kernel vs the Filtered_rational_kernel. The former does not construct a single Gmpq. The latter constructs them and then in the static filter when calling p .x() every time makes a pair of an interval and a copy of a Gmpq (though just a handle).

@sloriot and @MaelRL could you please have a look at Coercion_traits etc.

calling

p .x()every time makes a pair of an interval and a copy of aGmpq

Ah, I hadn't noticed yet that you used a pair<approx_point,exact_point> as point, and not an array of 3 pair<interval,Gmpq>.

@sloriot could you have a look at this line in travis.

I looked again at the 3D Delaunay with the

Lazy_kernelvs theFiltered_rational_kernel. The former does not construct a singleGmpq. The latter constructs them and then in the static filter when callingp .x()every time makes a pair of an interval and a copy of aGmpq(though just a handle).

What about having a function `fit_in_double_x(const Point

calling

p .x()every time makes a pair of an interval and a copy of aGmpqAh, I hadn't noticed yet that you used a pair<approx_point,exact_point> as point, and not an array of 3 pair<interval,Gmpq>.

@lrineau why don't we use get_approx(p) in the static filter of Orientation_3 as we do in Equal_2.h? Wouldn't that also be better for Epeck?

@lrineau why don't we use

get_approx(p)in the static filter ofOrientation_3as we do inEqual_2.h? Wouldn't that also be better forEpeck?

In fact it turns out that the Get_approx was a way to avoid constructing a Lazy_exacxt_nt when p.x() gets called., but it was superseded by the usage of Static_filtered_predicate. However we still had lot's of Get_approx because only one commit that had added them was reverted. In this PR we remove them from all the static filters.

Here are the latest timings with each time two runs: Delaunay_3 |V| = 1000000 |C| = 6748016

Epick 8.353 sec 8.28 sec

Epeck - Lazy_exact_nt<Gmpq> 11.295 sec 11.176 sec

Epeck - Lazy_exact_nt<boost::multiprecision::numberboost::multiprecision::backends::gmp_rational,1 > 11.201 sec 11.824 sec

Epeck - Filtered_rational<Interval_nt<0>,Gmpq> 13.48 sec 13.872 sec

Epeck - Filtered_rational<Interval_nt<0>,boost::multiprecision::numberboost::multiprecision::backends::gmp_rational,1 > 14.306 sec 14.408 sec

Filtered_rational_kernel creates in any case a GMP rational number, which explains the runtime difference, which is essentially the malloc and free. We could make the generation of the rationals lazy, but that is probably not worth it, Can we gain sonething on the swaps?

dt.insert(std::make_move_iterator(points.begin()), std::make_move_iterator(points.end())); may remove a few allocations.

I tried your kernel with simple_2.cpp, and mpq_swap takes very little time (at most 2%), so I am not sure what is going on in your test.

My points now only store a default constructed rational. And the rational is constructed from the interval (with inf==sup) if needed. @mglisse do you have any idea how to avoid the mpq_init, that to even initialize on an is needed base.

I though the point of this kernel was to avoid mutable lazy stuff that is thread-unsafe? If you have a bool to indicate if the exact part is initialized, this is manually reimplementing optional<exact_part>, which can handle not initializing just fine. If you want to save the space of the bool, I guess you could replace the exact object with some suitably aligned buffer, memset(0) it when you don't want it, test if the _mp_d of _mp_den is 0 (this part is not exactly casher), use placement new to construct an exact object when needed, explicitly copy/destruct in constructors/destructors.

Looking at the assignment operator of gmp_rational

gmp_rational& operator = (const gmp_rational& o)

{

if(m_data[0]._mp_den._mp_d == 0)

mpq_init(m_data);

mpq_set(m_data, o.m_data);

return *this;

}

why is that multi threading safe? As mpq_init changes m_data[0]._mp_den._mp_d to nonzero, can't that pose a problem @mglisse ?

why is that multi threading safe? As

mpq_initchangesm_data[0]._mp_den._mp_dto nonzero, can't that pose a problem @mglisse ?

You are saying that x=y modifies x, which is true, but not really an issue. There are several levels of safety. Concurrent data-structures want to allow to threads to modify the structure at the same time. Simple thread-safety lets several threads read a variable at the same time, but if you are modifying a variable, reading it at the same time in another thread is unsafe. The issue with lazy* is that reading is actually a mutating operation.

Stupid me. By the way do you have any idea when m_data[0]._mp_den._mp_d equals zero? Reading the code I had the impression that it is always initialized.

Stupid me. By the way do you have any idea when

m_data[0]._mp_den._mp_dequals zero? Reading the code I had the impression that it is always initialized.

After a move constructor maybe?

Seeing the DO NOT PUSH TO MASTER, in last commit (https://github.com/CGAL/cgal/pull/4495/commits/8e89bfcb858383522decbfc2861b78180d0103b1), I have converted the PR to a draft.