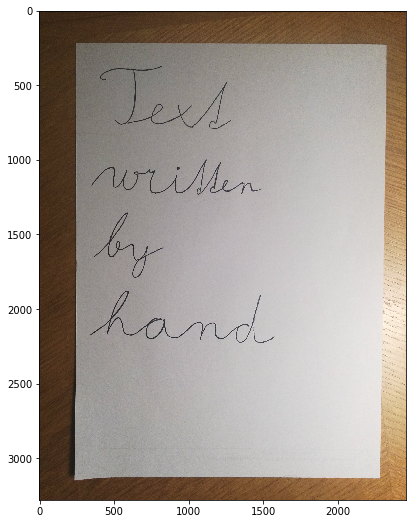

handwriting-ocr

handwriting-ocr copied to clipboard

handwriting-ocr copied to clipboard

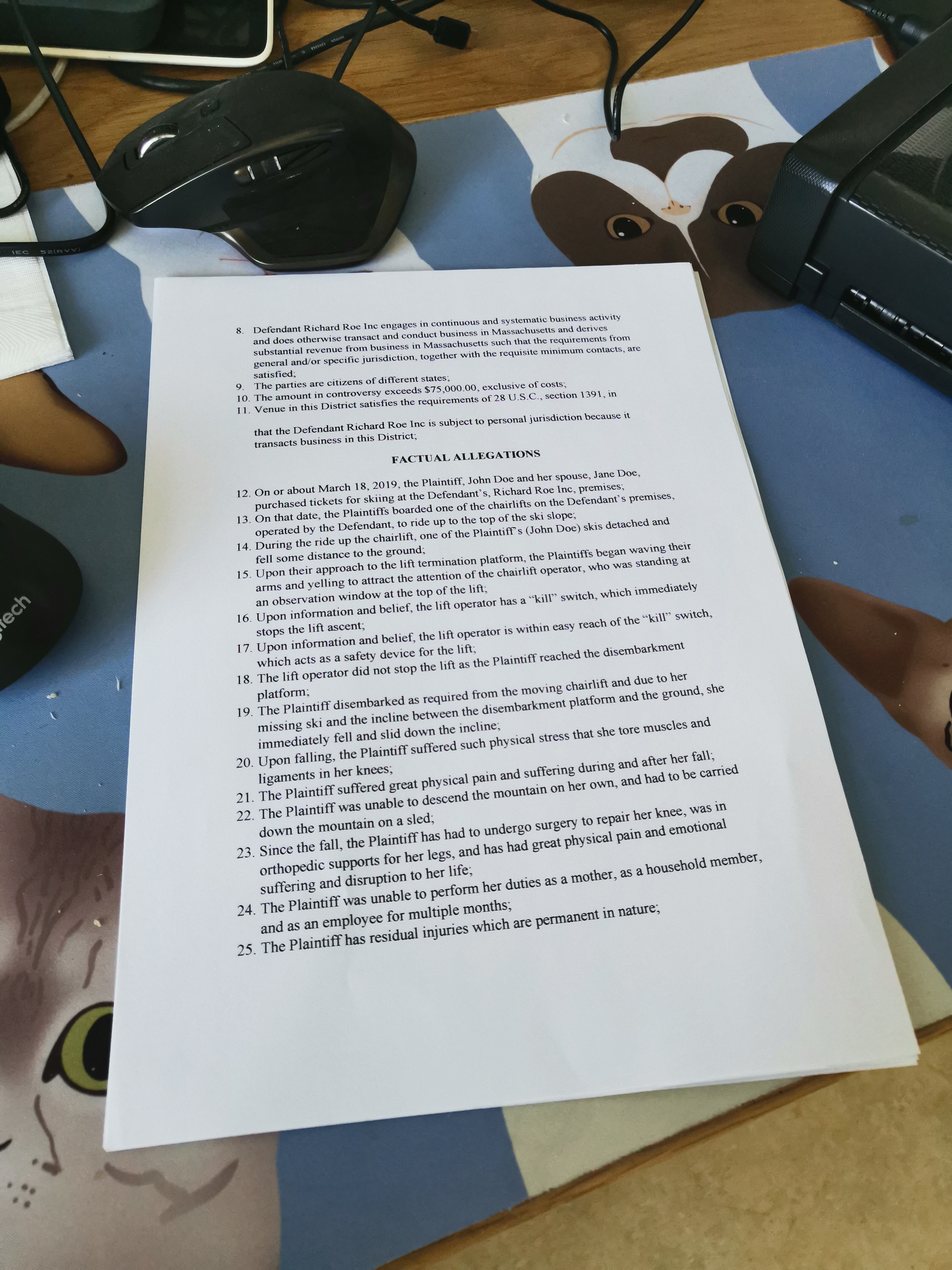

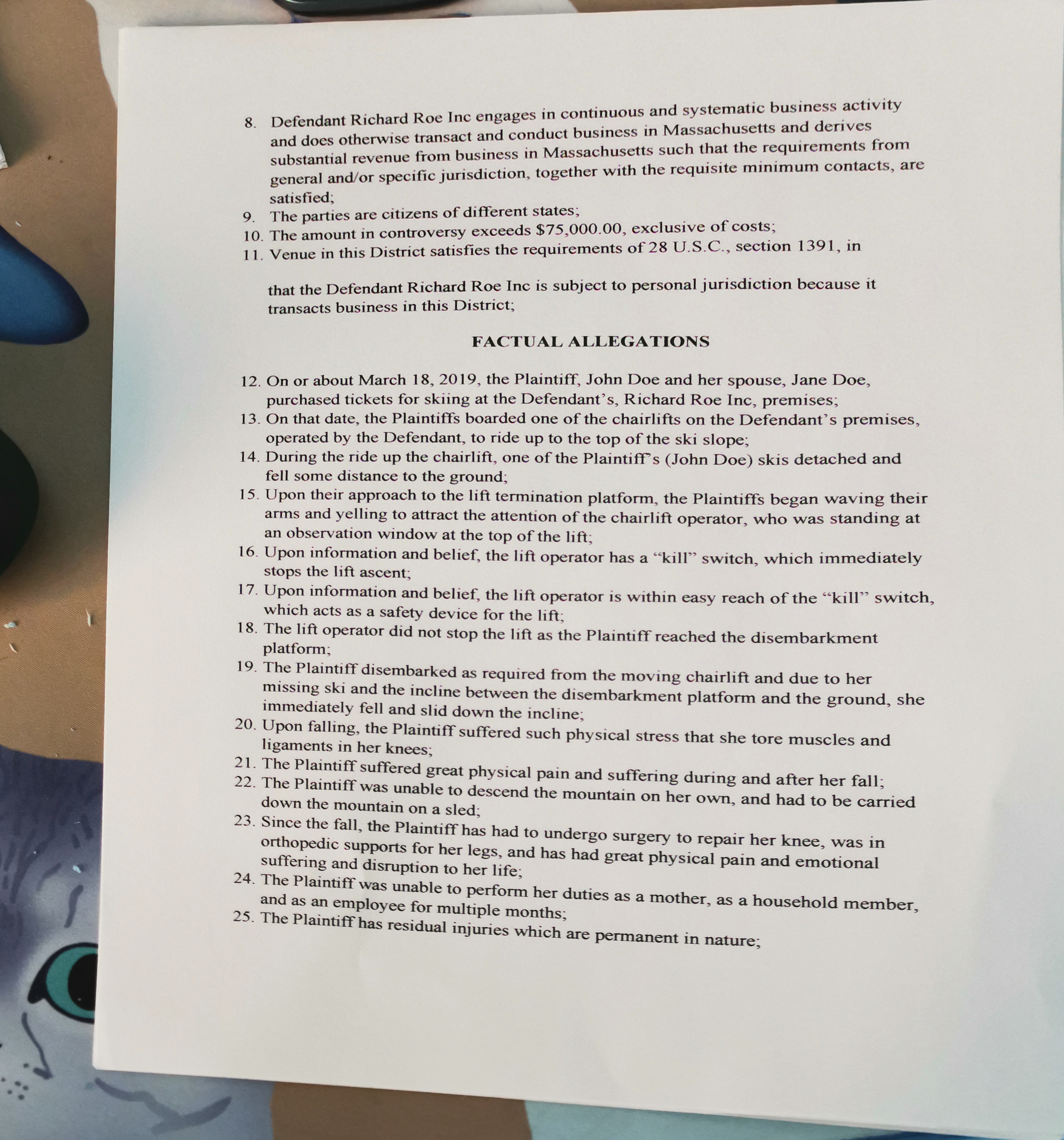

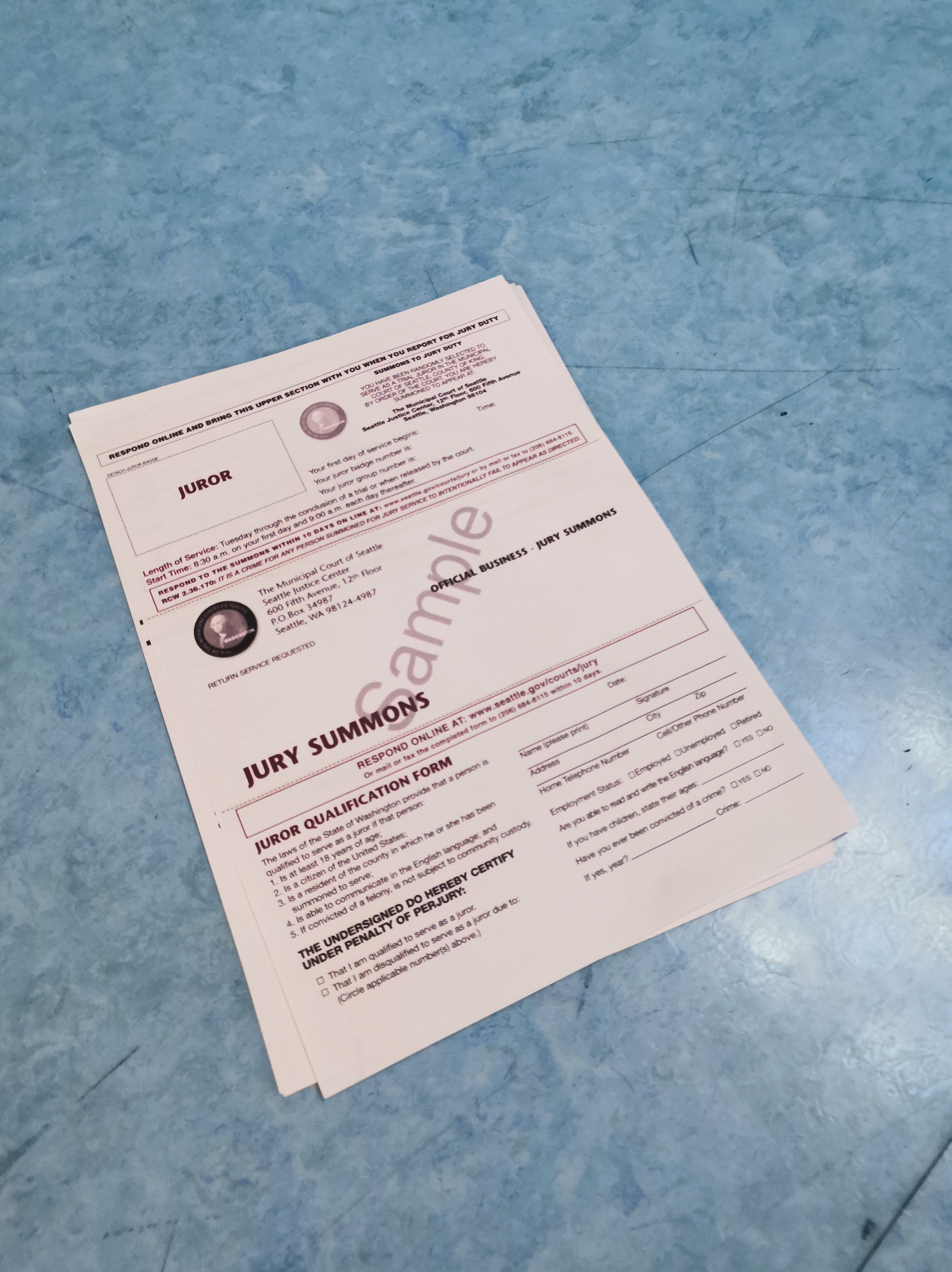

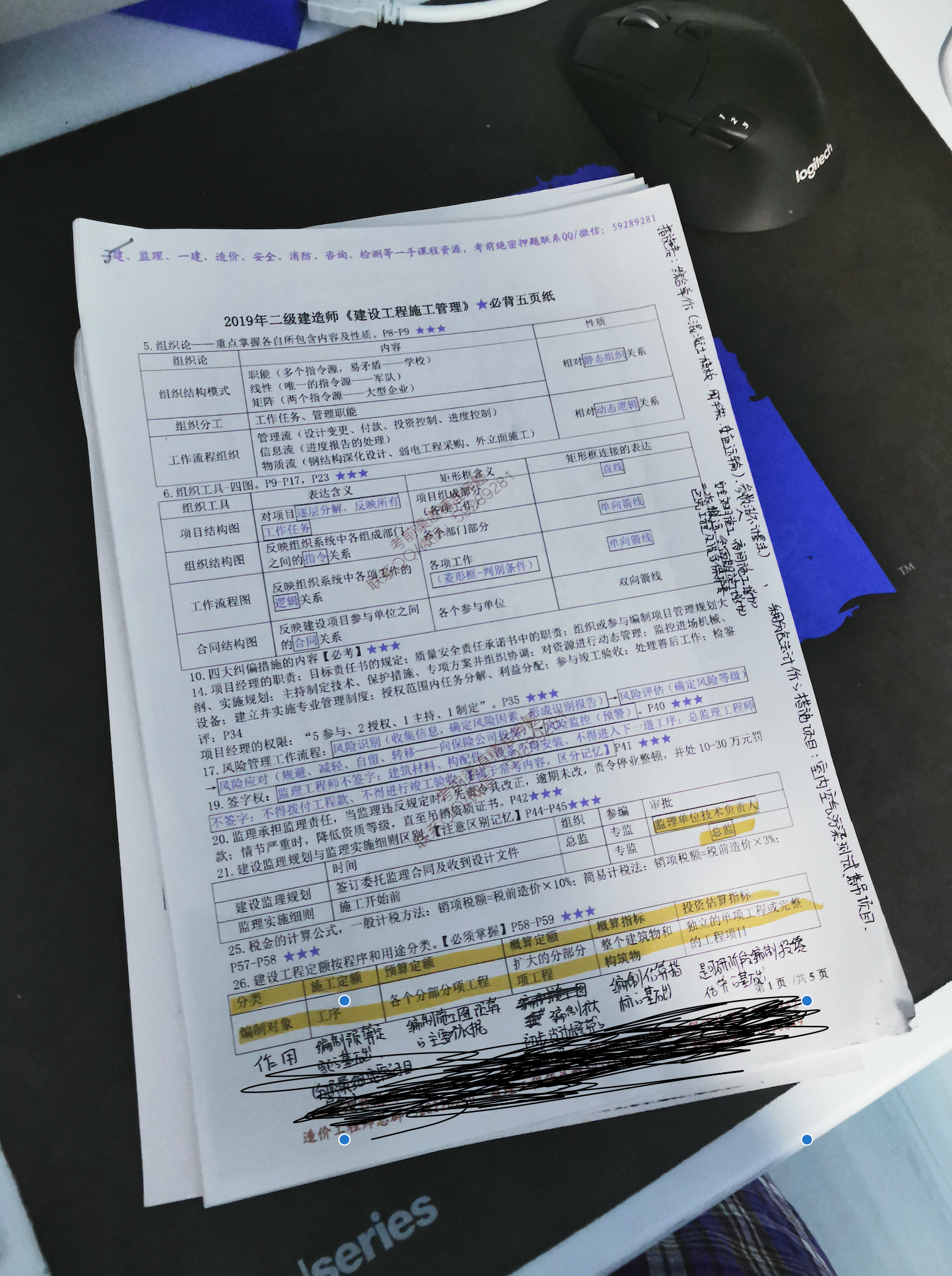

page detection doesn't work correctly in some case

i've got these result

opencv 4.1.0 python 3.6.7

this is image from example https://github.com/Breta01/handwriting-ocr/blob/master/notebooks/page_detection.ipynb

SMALL_HEIGHT = 800

def processImageAlt(imagepath, ext):

image = cv2.cvtColor(cv2.imread(imagepath), cv2.COLOR_BGR2RGB)

edges_image = edges_det(image, 200, 250)

edges_image = cv2.morphologyEx(edges_image, cv2.MORPH_CLOSE, np.ones((5, 11)))

page_contour = find_page_contours(edges_image, resize(image))

print("PAGE CONTOUR:")

print(page_contour)

implt(cv2.drawContours(resize(image), [page_contour], -1, (0, 255, 0), 3))

page_contour = page_contour.dot(ratio(image))

newImage = persp_transform(image, page_contour)

implt(newImage, t='Result')

filename_output = generate() + ext

cv2.imwrite('./static/' + filename_output, newImage)

def edges_det(img, min_val, max_val):

""" Preprocessing (gray, thresh, filter, border) + Canny edge detection """

img = cv2.cvtColor(resize(img), cv2.COLOR_BGR2GRAY)

# Applying blur and threshold

img = cv2.bilateralFilter(img, 9, 75, 75)

img = cv2.adaptiveThreshold(img, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 115, 4)

implt(img, 'gray', 'Adaptive Threshold')

# Median blur replace center pixel by median of pixels under kelner

# => removes thin details

img = cv2.medianBlur(img, 11)

# Add black border - detection of border touching pages

# Contour can't touch side of image

img = cv2.copyMakeBorder(img, 5, 5, 5, 5, cv2.BORDER_CONSTANT, value=[0, 0, 0])

implt(img, 'gray', 'Median Blur + Border')

return cv2.Canny(img, min_val, max_val)

def four_corners_sort(pts):

""" Sort corners: top-left, bot-left, bot-right, top-right"""

diff = np.diff(pts, axis=1)

summ = pts.sum(axis=1)

return np.array([pts[np.argmin(summ)],

pts[np.argmax(diff)],

pts[np.argmax(summ)],

pts[np.argmin(diff)]])

def contour_offset(cnt, offset):

""" Offset contour because of 5px border """

cnt += offset

cnt[cnt < 0] = 0

return cnt

def find_page_contours(edges, img):

""" Finding corner points of page contour """

# Getting contours

contours, hierarchy = cv2.findContours(edges, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

# Finding biggest rectangle otherwise return original corners

height = edges.shape[0]

width = edges.shape[1]

MIN_COUNTOUR_AREA = height * width * 0.5

MAX_COUNTOUR_AREA = (width - 10) * (height - 10)

max_area = MIN_COUNTOUR_AREA

page_contour = np.array([[0, 0],

[0, height-5],

[width-5, height-5],

[width-5, 0]])

for cnt in contours:

perimeter = cv2.arcLength(cnt, True)

approx = cv2.approxPolyDP(cnt, 0.03 * perimeter, True)

# Page has 4 corners and it is convex

if (len(approx) == 4 and

cv2.isContourConvex(approx) and

max_area < cv2.contourArea(approx) < MAX_COUNTOUR_AREA):

max_area = cv2.contourArea(approx)

page_contour = approx[:, 0]

# Sort corners and offset them

page_contour = four_corners_sort(page_contour)

return contour_offset(page_contour, (-5, -5))

def persp_transform(img, s_points):

""" Transform perspective from start points to target points """

# Euclidean distance - calculate maximum height and width

height = max(np.linalg.norm(s_points[0] - s_points[1]),

np.linalg.norm(s_points[2] - s_points[3]))

width = max(np.linalg.norm(s_points[1] - s_points[2]),

np.linalg.norm(s_points[3] - s_points[0]))

# Create target points

t_points = np.array([[0, 0],

[0, height],

[width, height],

[width, 0]], np.float32)

# getPerspectiveTransform() needs float32

if s_points.dtype != np.float32:

s_points = s_points.astype(np.float32)

M = cv2.getPerspectiveTransform(s_points, t_points)

return cv2.warpPerspective(img, M, (int(width), int(height)))

def resize(img, height=SMALL_HEIGHT, allways=False):

"""Resize image to given height."""

if (img.shape[0] > height or allways):

rat = height / img.shape[0]

return cv2.resize(img, (int(rat * img.shape[1]), height))

return img

def ratio(img, height=SMALL_HEIGHT):

"""Getting scale ratio."""

return img.shape[0] / height

def implt(img, cmp=None, t=''):

"""Show image using plt."""

plt.imshow(img, cmap=cmp)

plt.title(t)

plt.show()

i've change this line from

im2, contours, hierarchy = cv2.findContours(edges, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

into to make it work with 4x

contours, hierarchy = cv2.findContours(edges, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

Yes, I am aware it is not perfect.

The small inaccuracy is due to the fact that the lines are sometimes not completely straight and the images is scaled before processing. The reason behind the wrong detection is probably bad contrast between background and the paper edges.

The other image is not being recognized probably because the paper is too small try changing this line:

MIN_COUNTOUR_AREA = height * width * 0.5

To something like:

MIN_COUNTOUR_AREA = height * width * 0.2

This basically means that the smallest detected paper should cover at least 20% of the input image.

Feel free to experiment with the process and different parameters. Let me know if you find something which works better.

i've tried reduce MIN_COUNTOUR_AREA

what if we use object detective to get object bounding then work in that bounding. will this work better?

Yes, if you train it on some good dataset, I guess it will work better. Especially in this situations when there is more complex background. I haven't tried it by myself yet.