Max Balandat

This looks very related to what's happening in #1287. Could you try the workaround proposed [there](https://github.com/pytorch/botorch/issues/1287#issuecomment-1177703776) to make sure that this is indeed the same issue?

I can repro this issue and am looking into it further. I'm a little hamstrung by frequently running into this error, which seems to be a known issue with mprof :( https://github.com/pythonprofilers/memory_profiler/issues/163 ...

The fact that Sait's example shows significant growth means that there must be some tensors / graph components of significant size that are not being garbage collected...
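One way to narrow down which objects survive garbage collection is to compare counts of live objects before and after a few iterations. The following is only a minimal sketch using the standard-library `gc` module, not the approach used in this thread: `Blob`, `leaky_step`, `clean_step`, and `growth_by_type` are hypothetical names made up for illustration, and `Blob` merely stands in for a large tensor or graph component.

```python
import gc
from collections import Counter


def live_object_counts():
    """Return a Counter of live, gc-tracked objects keyed by type name."""
    gc.collect()  # drop collectable cycles first so only survivors are counted
    return Counter(type(obj).__name__ for obj in gc.get_objects())


def growth_by_type(step, n_iters=5):
    """Call `step` n_iters times and report the types that gained live objects.

    The snapshot bookkeeping itself can show up as small noise (for example
    one extra Counter), so look for growth that scales with n_iters.
    """
    step()  # warm-up call so one-time allocations are not reported as growth
    before = live_object_counts()
    for _ in range(n_iters):
        step()
    after = live_object_counts()
    return {
        name: after[name] - before[name]
        for name in after
        if after[name] > before[name]
    }


class Blob:
    """Hypothetical stand-in for a large tensor or graph node."""

    def __init__(self):
        self.payload = [0.0] * 1000


_leaked = []  # module-level reference that keeps every Blob alive


def leaky_step():
    # Simulates a leak: each call stores a new object that is never released.
    _leaked.append(Blob())


def clean_step():
    # The temporary Blob is freed as soon as the call returns.
    Blob()


if __name__ == "__main__":
    print("leaky:", growth_by_type(leaky_step))
    print("clean:", growth_by_type(clean_step))
```

In a real repro, `step` would be one iteration of the fitting or optimization loop, and a type whose count grows in proportion to `n_iters` would be the first candidate to inspect.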

hmm `with gpytorch.settings.fast_computations(False, False, False)` doesn't fix the issue for me....

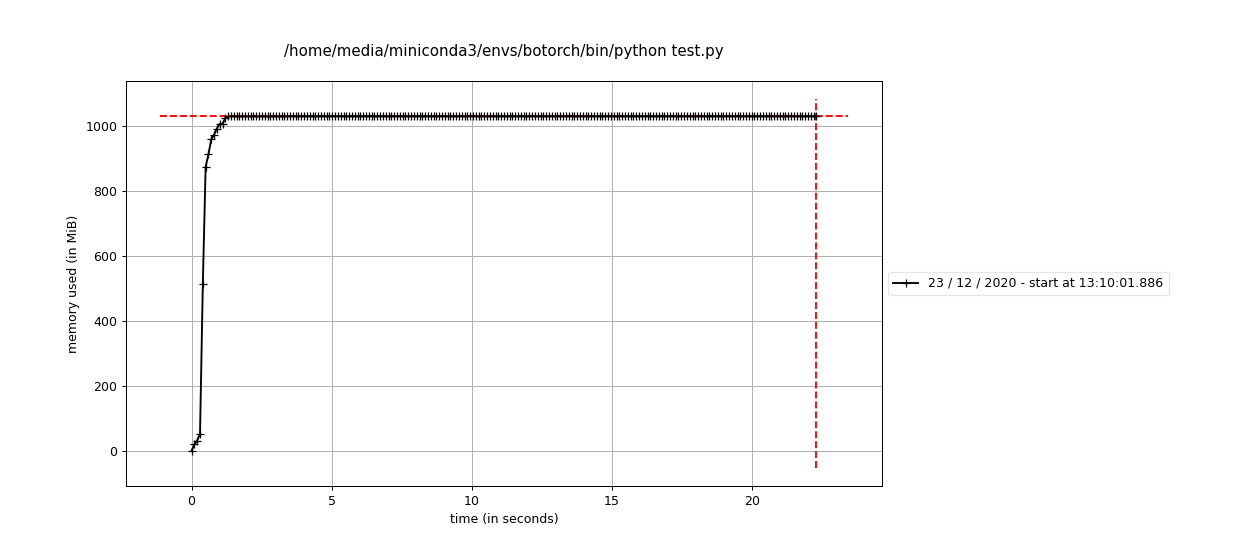

OK, just tested this on an old Linux machine, no memory leak there: Here is the exact same code run on MacOS X 11.1 with the same config (python...

Hmm, turns out that running this with `python -m mprof run` instead of `mprof` to circumvent the issues mentioned earlier is what caused this to be so slow. I...

@mshvartsman Any updates on this? On my end it's still reproducible on OSX but works fine on Ubuntu.

Fair enough. This one seems nasty enough that I'm not very tempted to go real deep here. I'll leave the issue open for tracking though.

We don't currently have concrete plans for this. The way `HeteroskedasticSingleTaskGP` models the variance internally makes it quite hard to do this "properly" - essentially conditioning on observations would require...

Thanks for flagging this. I believe this could be an indexing bug on the gpytorch side of things related to lazy kernel evaluation. To check this, can you try wrapping your...