Set up a way to do some (manual?) perf/stress tests on the linter rules

Agreed we needed this soon to get an idea of possible perf impact of adding a bunch of new rules.

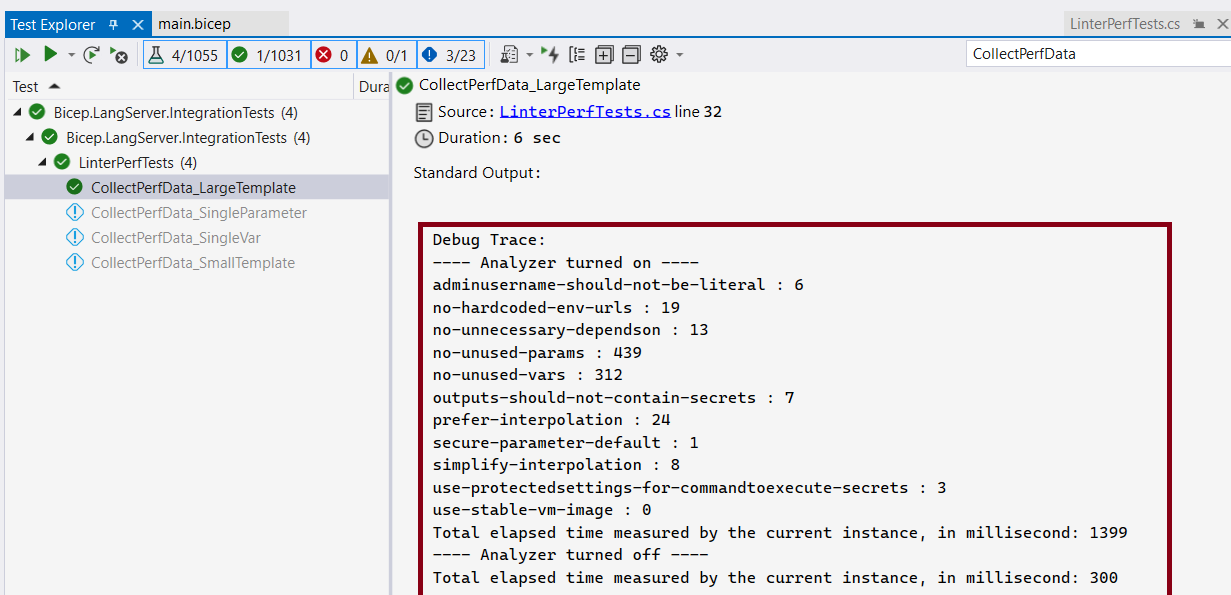

Below are the steps to manually measure the impact of linters on file open. The integration test I used to gather the data measures how long it takes to get diagnostics on file open, for a large and a small bicep file, with and without linters.

- Add stopwatch measurements in LinterRuleBase.cs https://github.com/Azure/bicep/blob/7bcea89b64aa8721b985473fbbc8d741b641f3c5/src/Bicep.Core/Analyzers/Linter/LinterRuleBase.cs#L87-L96

- Add stopwatch measurements in SemanticModel.cs https://github.com/Azure/bicep/blob/7bcea89b64aa8721b985473fbbc8d741b641f3c5/src/Bicep.Core/Semantics/SemanticModel.cs#L185-L197

- Add the below integration test https://github.com/Azure/bicep/blob/7bcea89b64aa8721b985473fbbc8d741b641f3c5/src/Bicep.LangServer.IntegrationTests/LinterPerfTests.cs#L31-L81

- Replace the contents of the main.bicep used in the above test with the attached large.txt, then with small.txt, to get perf numbers for a large and a relatively small bicep file

- Running the integration test should log the numbers to standard output. Note: the screenshot is from the Visual Studio Test Explorer window

- Results of running the test on 1/12: LinterPerf.docx
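The measurement pattern in the steps above (time the same operation for a large and a small file, with and without linters) can be sketched roughly as follows. This is a language-agnostic illustration in Python, not the C# code linked above: `get_diagnostics` is a hypothetical stand-in for "open the file and wait for diagnostics", and the "TODO" check is a made-up rule used only to give the linter path some work to do.

```python
import time
from statistics import median


def time_call(fn, *args, repeats=5):
    """Return the median wall-clock time in milliseconds over `repeats` runs."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append((time.perf_counter() - start) * 1000.0)
    return median(samples)


def get_diagnostics(source, linters_enabled):
    """Hypothetical stand-in for opening a file and collecting diagnostics."""
    diagnostics = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        # Made-up "rule": only runs when linters are enabled.
        if linters_enabled and "TODO" in line:
            diagnostics.append((line_no, "todo-comment"))
    return diagnostics


def measure(files):
    """Time every (file, linters on/off) combination.

    `files` maps a label such as "small" or "large" to source text.
    Returns a dict keyed by (label, linters_enabled) with times in ms.
    """
    results = {}
    for name, source in files.items():
        for enabled in (False, True):
            results[(name, enabled)] = time_call(get_diagnostics, source, enabled)
    return results


def format_report(results):
    """Render one line per measurement, suitable for logging to stdout."""
    lines = []
    for (name, enabled), ms in sorted(results.items()):
        label = "linters on" if enabled else "linters off"
        lines.append(f"{name:<6} {label:<12} {ms:8.3f} ms")
    return "\n".join(lines)


if __name__ == "__main__":
    files = {
        "small": "param a string\n" * 10,
        "large": "param a string // TODO\n" * 10000,
    }
    print(format_report(measure(files)))
```

Taking the median of several runs is a choice made for this sketch to dampen warm-up and scheduling noise; the steps above do not say how many runs were used.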

@ucheNkadiCode, @StephenWeatherford, as discussed offline, I have documented the steps I used to get the perf numbers on file open with linters enabled and disabled. Could you please take a look and let me know if we need anything else for this issue?

Thanks!

I thought you were going to check in the tests, then make it so that they output the calculated times but always pass. We want to do this at least every release, so it shouldn't require so many manual steps.
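One way to read "outputs the calculated times but always passes" is a checked-in test that logs timings and makes no threshold assertions, so timing noise on a build machine cannot fail the run. A minimal sketch in Python's `unittest`, offered as an illustration rather than the actual Bicep test: `open_file_and_get_diagnostics` is a hypothetical placeholder for the real language-server call.

```python
import time
import unittest


def open_file_and_get_diagnostics(source, linters_enabled):
    """Hypothetical placeholder for opening a file and waiting for diagnostics."""
    return [
        line for line in source.splitlines()
        if linters_enabled and "TODO" in line
    ]


class LinterPerfReport(unittest.TestCase):
    def test_report_timings(self):
        source = "param a string // TODO\n" * 5000
        for enabled in (False, True):
            start = time.perf_counter()
            open_file_and_get_diagnostics(source, enabled)
            elapsed_ms = (time.perf_counter() - start) * 1000.0
            # Report only: there is deliberately no assertion on elapsed_ms,
            # so the test fails only if the measured call itself raises.
            print(f"linters_enabled={enabled}: {elapsed_ms:.3f} ms")
```

Saved as a test module, this can be run with `python -m unittest`, and the timings appear in the test output.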

@StephenWeatherford - do we need to do more for this issue, or can we close it?

FWIW, since this issue was created we have gained the ability to benchmark code, so the cost to implement this should be lower (see https://github.com/Azure/bicep/blob/main/src/Bicep.Tools.Benchmark).