bicep

Create a CDN to pull bicep assets to avoid github api limits

Bicep version: Latest

Describe the bug: We run Bicep deployments from a k8s operator we built. This can trigger many times an hour for multiple environments and runs in containers. We are often seeing failures to get the latest tag name from github in our Bicep pipeline. I saw that there is a TTL built-in since V4 but since we run in containers that are only active for the duration of the deployment we cannot take advantage of the TTL nor of caching.

To Reproduce: Run 61 Bicep deployments within an hour using run-once containers.

Additional context: We are going to look into using a cached version of Bicep to turn the templates into arm json and supplying the az cli with the json instead. However this means maintaining a custom image with Bicep and az-cli instead of using the az-cli container image.

The github API is very restrictive for anonymous requests. I think either the Bicep team should arrange with GitHub to lift this limit specifically for Bicep releases, or a different CDN without such a strict limit should be used. This is only going to become a bigger problem over time with more users picking up Bicep. For example: Org x with a couple hundred developers uses a single outgoing IP from their internal network. The org loves Bicep and wants to standardize on it. They might even hit the API limit with only day-to-day development work. I don't think this is an unlikely scenario.

For context, the default GitHub throttling is 60 anonymous requests per hour per IP address. This is why 61 reproduces the problem. I agree that we should set up a CDN to avoid this issue or increase the limits.

What version of az cli are you using? (az --version). I believe @shenglol added some backoff strategies to the download algorithm to avoid these throttling issues. CDN is still the right solution, but curious if this can somehow be avoided in the meantime.
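As an illustration of what a backoff strategy generally looks like, here is a minimal sketch of retrying with exponential delays. It is not the actual az cli download code; the function name `retry_with_backoff` and its parameters are hypothetical.

```python
import time


def retry_with_backoff(operation, attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call operation(); on failure wait base_delay * 2**attempt and retry.

    Hypothetical helper for illustration only. The last failure is re-raised
    so the caller still sees the original error (for example an HTTP 403).
    """
    for attempt in range(attempts):
        try:
            return operation()
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

Note that every retry is itself a request, so inside a single throttling window backoff only helps if the wait outlasts the window.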

@alex-frankel we are using run-once containers, so whichever is the latest Bicep version at the time of running. This also means the backoff/caching does not work for us since there's no persistence.

Ah got it. Do the "run once" containers have the same identifying info when it comes to the GitHub throttle? IOW, why doesn't creating a new container reset the limit?

The throttling is per IP address. If the IP address doesn't change or if you have a bunch of things NAT'd together, they will appear to be coming from the same IP and the limit won't reset.
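To make the per-IP behaviour concrete, here is a toy simulation of a fixed-window limiter keyed by IP address. It is only a sketch: GitHub's real implementation is not described in this thread, and the class name, the example IP and the 10-second spacing between containers are all assumptions.

```python
from collections import defaultdict

LIMIT_PER_HOUR = 60    # anonymous limit cited in this thread
WINDOW_SECONDS = 3600


class FixedWindowLimiter:
    """Toy per-IP limiter; an illustration, not GitHub's implementation."""

    def __init__(self, limit=LIMIT_PER_HOUR, window=WINDOW_SECONDS):
        self.limit = limit
        self.window = window
        self._counts = defaultdict(int)  # (ip, window index) -> requests seen

    def allow(self, ip, now):
        key = (ip, int(now // self.window))
        if self._counts[key] >= self.limit:
            return False
        self._counts[key] += 1
        return True


def simulate(container_count, shared_ip="203.0.113.10"):
    """Each run-once container makes one request through the same NAT'd IP."""
    limiter = FixedWindowLimiter()
    return [limiter.allow(shared_ip, now=i * 10) for i in range(container_count)]


results = simulate(61)
print(results.count(True), results.count(False))  # prints "60 1"
```

A fresh container does not change the key the limiter counts against, so the 61st request within the hour is rejected no matter which container sends it.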

Until a CDN is set up, you could use my docker image miqm/bicep-cli. Unlike azure-cli it's based on debian rather than alpine, but it has the latest bicep cli pre-downloaded, so you shouldn't hit the limit because the bicep compiler will already be there. AFAIR @shenglol implemented the latest-version check in such a way that if the check fails but the binary is present, there will be no error.

We run in kubernetes so we have one outgoing ip for all the containers, that's why the limit is not reset. We run our deployments from a gitops repo so any change to the repo (for example a dev version application, unrelated to Bicep) triggers a new Bicep deployment. This is quite spammy because we can't accurately determine if a deployment is necessary due to what-if limitations. The more azure deployments we do from our cluster the more we will hit this api limit. Currently about twice a day I would say.

I just reported a duplicate of this issue. The solution I would like is for bicep to be fully baked into the azure CLI, or shipped as its own cli tool available via a package manager. What we need is for it to be installed into the container used for building, so that we wouldn't be reaching out to github at all thereafter.

I would like to essentially just do this in my container:

RUN apt update && apt install azure-cli azure-bicep -y

And now I would have az and bicep available to run, which would do no further pulling from pip or github.

You can always run az bicep install after installing the cli. I do this in my bicep-cli image.

Although it would be best if cli docker image came with bicep preinstalled.

az bicep install is what is failing for me :) It reaches out to github when called and errors with a throttling issue. I can re-run it until it randomly works and then we're good for a while but every time that image is rebuilt I have to basically keep retrying until it randomly works. It adds a ton of instability to the build.

I see there are some new instructions on how to do a stand-alone install of bicep now:

https://docs.microsoft.com/en-us/azure/azure-resource-manager/bicep/install

I was able to utilize these instructions to successfully install bicep and I haven't hit any CDN limits yet.

We are seeing similar rate limiting issues, when using the Azure CLI commands az bicep ....

Our use case is a pipeline running on shared infrastructure (including shared public IPs) that uses the azure-cli image, where we assume a version of Bicep is available. This also means that we don't take explicit care of installing the bicep tooling.

We have filed an issue on the azure-cli issue tracker for this as well, refer to https://github.com/Azure/azure-cli/issues/21338.

As the linked issue indicates, we would like az bicep ... to be less prone to errors such as rate limits, and that it should simply continue on with the command using whatever version may be available within the CLI.
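The requested behaviour could be sketched roughly as follows. This is not how az cli is implemented; `resolve_bicep_version`, the injected `fetch_latest` callable and the version string are hypothetical and only illustrate falling back to an installed version when the lookup is throttled.

```python
def resolve_bicep_version(fetch_latest, installed_version):
    """Return (version, source), preferring the latest release.

    If the lookup fails (for example with a 403 from rate limiting) and a
    version is already installed, carry on with the installed one.
    """
    try:
        return fetch_latest(), "latest"
    except Exception as exc:
        if installed_version is None:
            raise RuntimeError(
                "version lookup failed and no Bicep binary is installed"
            ) from exc
        return installed_version, "installed"


def throttled_lookup():
    # Stand-in for a version check that hits the rate limit.
    raise ConnectionError("403 Client Error: rate limit exceeded")


print(resolve_bicep_version(throttled_lookup, "v0.0.0-example"))
```

The lookup failure only becomes fatal when there is nothing installed to fall back to.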

@Agger1995 - as an interim solution you can use the https://hub.docker.com/r/miqm/bicep-cli image (although, since it's hosted on dockerhub, it might be affected by docker hub limits). Another solution would be to pre-install bicep in the azure-cli image. Then only the latest-version check would be subject to rate limits (unless we could set an az cli config option to skip the version check, which would be handy for docker images).

We've started hitting the rate limits a lot more often over the last week or so:

We run our deployments via azure devops agents self hosted behind a firewall (Single outbound ip). I install azure-cli and bicep into the docker image each night which helped, but we're still hitting the limit just by running az deployment group create enough times in deployments.

Is there a progress update on this by any chance? Or thoughts on being able to disable az cli doing a lookup on every command? The docker image is rebuilt every night so I'm not worried that we'd fall behind on versions

Or as another possible option (Not sure whether it's more of an azcli question though), is there a way we could pass in our own authentication to the github lookup? The default rate limit appears to be 60 requests per ip per hour, but if we could authenticate (even with just a standard user account) we'd be able to make 5,000 requests per hour: https://docs.github.com/en/rest/overview/resources-in-the-rest-api#rate-limiting
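For reference, an authenticated lookup against the GitHub REST API could look roughly like the sketch below. This is not an az cli feature: the `build_release_request` helper, the `GITHUB_TOKEN` environment variable name and the choice of the releases/latest endpoint for Azure/bicep are assumptions for illustration.

```python
import os
import urllib.request

# Assumed endpoint: the GitHub REST API "latest release" route for Azure/bicep.
LATEST_RELEASE_URL = "https://api.github.com/repos/Azure/bicep/releases/latest"


def build_release_request(token=None):
    """Build the lookup request, adding credentials only when a token is given."""
    headers = {
        "Accept": "application/vnd.github+json",
        "User-Agent": "bicep-version-check-example",
    }
    if token:
        # Authenticated requests count against the per-user limit
        # instead of the shared per-IP anonymous limit.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(LATEST_RELEASE_URL, headers=headers)


# GITHUB_TOKEN is a hypothetical variable name chosen for this example.
request = build_release_request(os.environ.get("GITHUB_TOKEN"))
```

Sending it with `urllib.request.urlopen(request)` would then use the higher authenticated limit whenever a token is present.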

@AKTheKnight install bicep locally in your image, then in your pipelines run bicep build (not az bicep build; call the bicep binary directly), and pass the generated json rather than the bicep file to az deployment group create. With this flow you should have only 1 call to github, while creating the container.
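A rough sketch of that flow as a pipeline step, assuming the bicep binary and az are both on PATH in the image. The helper names, the file name `main.bicep` and the resource group `my-rg` are placeholders, not anything from this thread.

```python
import subprocess
from pathlib import Path


def build_commands(bicep_file, resource_group):
    """Return the two commands: compile locally, then deploy the ARM json."""
    template = Path(bicep_file).with_suffix(".json")
    build = ["bicep", "build", str(bicep_file), "--outfile", str(template)]
    deploy = [
        "az", "deployment", "group", "create",
        "--resource-group", resource_group,
        "--template-file", str(template),
    ]
    return build, deploy


def build_and_deploy(bicep_file, resource_group):
    """Run both steps, stopping at the first one that fails."""
    for command in build_commands(bicep_file, resource_group):
        subprocess.run(command, check=True)


# Example with placeholder names: build_and_deploy("main.bicep", "my-rg")
```

Because az only ever receives a .json template here, the deployment step has no reason to look up or download Bicep.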

Thanks @rouke-broersma, I'll work on doing this for now and hopefully in the future we'll be able to go back to just using the az cli

Status update from our side. The CDN is up and running. The remaining work is to update the PS and CLI to use the new URI to download Bicep. @davidcho23 is working on this part.

Btw we only need to update Azure CLI since Azure PS requires Bicep CLI to be manually installed by users.

Good pt!

@shenglol Are we going to run into throttling hitting the GitHub APIs to check for release versions?

E.g. here: https://github.com/Azure/azure-cli/blob/215960c2a6f1da41f5e60df041b2a75c52ff2ed0/src/azure-cli/azure/cli/command_modules/resource/_bicep.py#L147 and here: https://github.com/Azure/azure-cli/blob/215960c2a6f1da41f5e60df041b2a75c52ff2ed0/src/azure-cli/azure/cli/command_modules/resource/_bicep.py#L156

Do we have these URLs behind a CDN, or any plans to put them behind one? Of course, we'd need shorter TTLs as the response will change when we cut a new release.

Any update on this for us? We have started hitting this error as well.

We are gradually moving from ARM to Bicep for all our projects, and run more validation and deployment pipelines for them. This has resulted in more 403 errors in our pipelines as well. When can we expect the CDN to be used to prevent these errors?

We hit an issue with the first CDN rollout and had to revert. We have a new design finalized that will fix the problem and @davidcho23 is working on it. We're shooting to switch back to the CDN in a month or so unless we discover more issues.

Any updates?

Having this problem also.

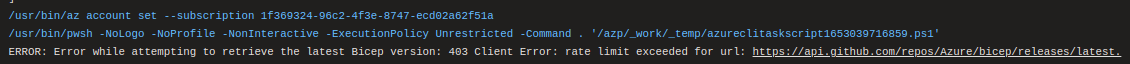

ERROR: Error while attempting to retrieve the latest Bicep version: 403 Client Error: rate limit exceeded for url

We're still working on this one as a high priority, and expect to have it rolled out by end of October.

So happy October is ending today, and I just hit this issue :)

Hit this issue again.

@georgekosmidis We don't know if it is Oct 2022 or Oct NEVER

Hit this issue just now as well.

Same here