Increase in "Microsoft.AspNetCore.Connections.ConnectionAbortedException" exceptions due to RpcWorkerChannel disposal

Investigative information

- Timestamp: increase observed since 4/27

- Function App version: increases seen in 3.6.1 and 4.3.1

Full stack of the exception:

System.AggregateException : One or more errors occurred. (The request stream was aborted.) ---> The request stream was aborted. ---> The HTTP/2 connection faulted.

at System.Threading.Tasks.Task`1.GetResultCore(Boolean waitCompletionNotification)

at System.Threading.Tasks.Task`1.get_Result()

at async Microsoft.Azure.WebJobs.Script.Grpc.FunctionRpcService.<>c__DisplayClass4_0.<EventStream>b__1(??) at /src/azure-functions-host/src/WebJobs.Script.Grpc/Server/FunctionRpcService.cs : 46

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()

at async Microsoft.Azure.WebJobs.Script.Grpc.FunctionRpcService.EventStream(IAsyncStreamReader`1 requestStream,IServerStreamWriter`1 responseStream,ServerCallContext context) at /src/azure-functions-host/src/WebJobs.Script.Grpc/Server/FunctionRpcService.cs : 93 ---> (Inner Exception #0) System.IO.IOException : The request stream was aborted. ---> Microsoft.AspNetCore.Connections.ConnectionAbortedException : The HTTP/2 connection faulted. End of inner exception

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()

at System.IO.Pipelines.PipeCompletion.ThrowLatchedException()

at System.IO.Pipelines.Pipe.GetReadResult(ReadResult& result)

at System.IO.Pipelines.Pipe.GetReadAsyncResult()

at System.IO.Pipelines.Pipe.DefaultPipeReader.GetResult(Int16 token)

at async Microsoft.AspNetCore.Server.Kestrel.Core.Internal.Http2.Http2MessageBody.ReadAsync(CancellationToken cancellationToken)

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()

at async Grpc.AspNetCore.Server.Internal.PipeExtensions.ReadStreamMessageAsync[T](PipeReader input,HttpContextServerCallContext serverCallContext,Func`2 deserializer,CancellationToken cancellationToken)

at System.Runtime.ExceptionServices.ExceptionDispatchInfo.Throw()

at System.Threading.Tasks.ValueTask`1.get_Result()

at async Grpc.AspNetCore.Server.Internal.HttpContextStreamReader`1.<MoveNext>g__MoveNextAsync|10_0[TRequest](??)<---

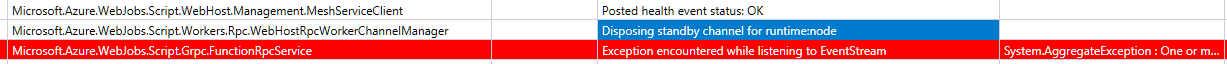

From the internal release dashboard, we can see that the exception rate jumped from 149 exceptions in 13 apps for 3.5.2 to 3596 exceptions in 597 apps for 3.6.1. The same trend is observed for 4.3.1 vs previous V4. Screenshot of relevant dashboard piece:

Spot-checking the affected apps shows a clear pattern: the exception is preceded by a language worker channel disposal. More specifically, images that are brought up with several language workers need to dispose of the unused ones after specialization (e.g. a Python app gets specialized on a container that had both Node and Python workers running, so the Node worker gets killed).

Questions for further investigation:

1. The language worker disposal after specialization is not new behaviour, but the exceptions have increased significantly. What changes have been made in the disposal path to exacerbate this?
2. Can we make these exceptions less verbose/sensitive to disposal?

Tagging @fabiocav / @liliankasem for triage

We think the work we're doing for graceful shutdown will help address this.

- https://github.com/Azure/azure-functions-host/issues/2308

@brettsam made some changes to the disposal path and will validate if this was the cause for increased exceptions.

In addition to the work being done for graceful shutdown, we'll also look into handling this exception and making sure the noise is reduced.
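As a rough illustration of what "handling this exception" could look like, here is a minimal sketch, not the host's actual implementation. It walks an exception tree, including `AggregateException` children, and reports whether a given exception type appears anywhere in it. `DisposalNoiseFilter`, `ContainsType`, `ShouldDowngrade`, and the `channelDisposing` flag are all hypothetical names; the type is matched by full name only so that the sketch compiles without an ASP.NET Core reference.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration only; not part of azure-functions-host.
public static class DisposalNoiseFilter
{
    // Full type name taken from the stack trace in this issue.
    public const string AbortedTypeName =
        "Microsoft.AspNetCore.Connections.ConnectionAbortedException";

    // Returns true if any exception in the tree rooted at 'ex' has the given full type name.
    public static bool ContainsType(Exception ex, string typeFullName)
    {
        var pending = new Stack<Exception>();
        pending.Push(ex);

        while (pending.Count > 0)
        {
            Exception current = pending.Pop();
            if (current == null)
            {
                continue;
            }

            if (current.GetType().FullName == typeFullName)
            {
                return true;
            }

            if (current is AggregateException aggregate)
            {
                // An AggregateException can carry several children; visit all of them.
                foreach (Exception inner in aggregate.InnerExceptions)
                {
                    pending.Push(inner);
                }
            }
            else if (current.InnerException != null)
            {
                pending.Push(current.InnerException);
            }
        }

        return false;
    }

    // Assumption: the caller knows whether the worker channel is being disposed.
    // Only in that case is an aborted connection treated as expected noise.
    public static bool ShouldDowngrade(Exception ex, bool channelDisposing)
    {
        return channelDisposing && ContainsType(ex, AbortedTypeName);
    }
}
```

A caller in the event-stream error path could use `ShouldDowngrade` to pick a lower log level when the abort coincides with a deliberate disposal, and keep the existing error-level logging otherwise, so that genuine connection faults remain visible.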

Closing stale issue.