Cycle-CenterNet

CycleCenternet based on MMDetection

Cycle-CenterNet

Table structure parsing (TSP), Wired Table in the Wild (WTW).

An unofficial implementation of the Cycle-CenterNet architecture, built on a fork of MMDetection.

Paper: Parsing Table Structures in the Wild. Long, R., Wang, W., Xue, N., Gao, F., Yang, Z., Wang, Y., & Xia, G. S.

Contact: [email protected]. Any questions or discussions are welcome!

Abstract

This paper tackles the problem of table structure parsing (TSP) from images in the wild. In contrast to existing studies that mainly focus on parsing well-aligned tabular images with simple layouts from scanned PDF documents, we aim to establish a practical table structure parsing system for real-world scenarios where tabular input images are taken or scanned with severe deformation, bending or occlusions. For designing such a system, we propose an approach named Cycle-CenterNet on the top of CenterNet with a novel cycle-pairing module to simultaneously detect and group tabular cells into structured tables. In the cycle-pairing module, a new pairing loss function is proposed for the network training. Alongside with our Cycle-CenterNet, we also present a large-scale dataset, named Wired Table in the Wild (WTW), which includes well-annotated structure parsing of multiple style tables in several scenes like photo, scanning files, web pages, etc. In experiments, we demonstrate that our Cycle-CenterNet consistently achieves the best accuracy of table structure parsing on the new WTW dataset by 24.6% absolute improvement evaluated by the TEDS metric. A more comprehensive experimental analysis also validates the advantages of our proposed methods for the TSP task.

Models

Link to download model CenterNet(ResNet backbone). Config

Link to download model CenterNet(DLA backbone). Config

Link to download model Cycle-CenterNet(DLA backbone) trained on bounding boxes. Config

Link to download model Cycle-CenterNet(DLA backbone) trained on bounding quadrangles. Config
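The last two checkpoints differ in their regression target: axis-aligned bounding boxes versus four-point quadrangles. As a minimal sketch of how the two relate, assuming a quadrangle is given as four (x, y) corner points, the tightest axis-aligned box around a quadrangle could be computed as follows. The function name and the point layout are illustrative assumptions, not this repository's API or annotation format.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def quad_to_bbox(quad: Sequence[Point]) -> Tuple[float, float, float, float]:
    """Return the tightest axis-aligned box (x_min, y_min, x_max, y_max) around a quadrangle.

    Hypothetical helper: the four-corner (x, y) layout is an assumption,
    not the format used by this repository's annotations.
    """
    if len(quad) != 4:
        raise ValueError("a quadrangle needs exactly four corner points")
    xs = [x for x, _ in quad]
    ys = [y for _, y in quad]
    return min(xs), min(ys), max(xs), max(ys)


# A table cell photographed at an angle, given as four corner points.
skewed_cell = [(10.0, 12.0), (110.0, 20.0), (105.0, 60.0), (8.0, 50.0)]
print(quad_to_bbox(skewed_cell))
```

For a deformed or perspective-distorted cell, the enclosing box also covers area outside the cell, so the quadrangle is the tighter description of the two.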

Installation

conda create --name openmmlab python=3.8 -y

conda activate openmmlab

pip install torch==1.8.1+cu101 torchvision==0.9.1+cu101 torchaudio==0.8.1 -f https://download.pytorch.org/whl/torch_stable.html

pip install mmcv-full==1.6.2 -f https://download.openmmlab.com/mmcv/dist/cu101/torch1.8/index.html

pip install -e .

conda install -n openmmlab ipykernel --update-deps --force-reinstall
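After running the commands above, it can help to confirm that the environment actually holds the pinned versions. The following is a minimal sketch using only the standard library (`importlib.metadata`, available from Python 3.8). The `EXPECTED` table and the `check_pins` helper are illustrative names, and the assumption that each package reports exactly the pinned version string (including the `+cu101` suffix) may not hold for every build.

```python
from importlib import metadata
from typing import Callable, Dict

# Pins copied from the pip commands above (distribution name -> expected version).
EXPECTED = {
    "torch": "1.8.1+cu101",
    "torchvision": "0.9.1+cu101",
    "torchaudio": "0.8.1",
    "mmcv-full": "1.6.2",
}


def check_pins(expected: Dict[str, str],
               get_version: Callable[[str], str] = metadata.version) -> Dict[str, str]:
    """Return {package: problem description} for every pin that is not satisfied."""
    problems = {}
    for name, wanted in expected.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            problems[name] = "not installed"
            continue
        if found != wanted:
            problems[name] = f"found {found}, expected {wanted}"
    return problems


if __name__ == "__main__":
    # Prints one line per mismatch; prints nothing when every pin matches.
    for package, problem in check_pins(EXPECTED).items():
        print(f"{package}: {problem}")
```

Run it inside the activated `openmmlab` environment; an empty output means all four pins match.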

MMDetection README


Introduction

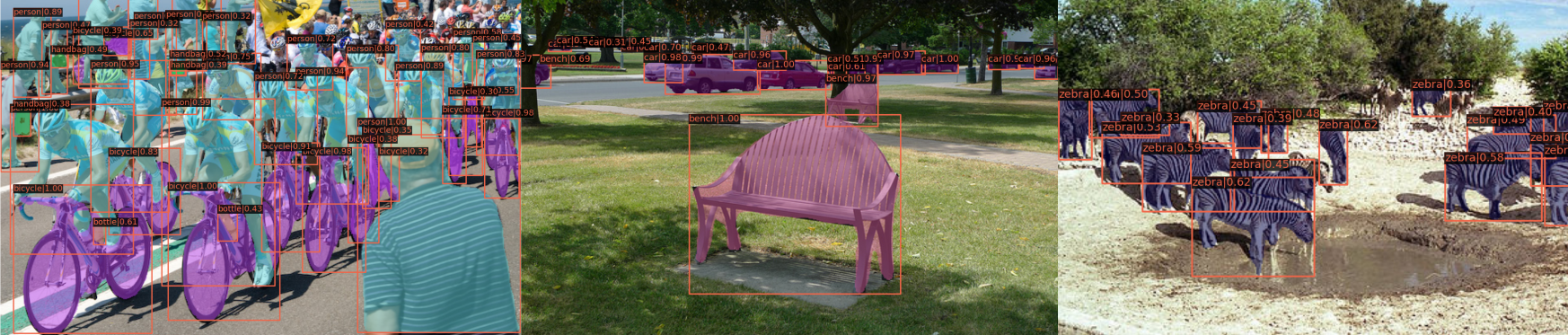

MMDetection is an open source object detection toolbox based on PyTorch. It is a part of the OpenMMLab project.

The master branch works with PyTorch 1.5+.

Major features

- Modular Design: We decompose the detection framework into different components, and one can easily construct a customized object detection framework by combining different modules.

- Support of multiple frameworks out of the box: The toolbox directly supports popular and contemporary detection frameworks, e.g. Faster RCNN, Mask RCNN, RetinaNet, etc.

- High efficiency: All basic bbox and mask operations run on GPUs. The training speed is faster than or comparable to other codebases, including Detectron2, maskrcnn-benchmark and SimpleDet.

- State of the art: The toolbox stems from the codebase developed by the MMDet team, who won the COCO Detection Challenge in 2018, and we keep pushing it forward.
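The modular design described above shows up in how MMDetection 2.x configs are written: a detector is a nested Python dict whose `type` keys name registered components. The sketch below is abridged from memory of the upstream CenterNet ResNet-18 config, so the values are illustrative, and the configs shipped with this fork (for example for the DLA backbone) may differ. The `with_component` helper is a hypothetical convenience, not part of MMDetection.

```python
import copy

# Abridged detector config in MMDetection 2.x style; values are illustrative.
model = dict(
    type='CenterNet',
    backbone=dict(type='ResNet', depth=18, norm_cfg=dict(type='BN')),
    neck=dict(
        type='CTResNetNeck',
        in_channel=512,
        num_deconv_filters=(256, 128, 64),
        num_deconv_kernels=(4, 4, 4),
        use_dcn=True),
    bbox_head=dict(
        type='CenterNetHead',
        num_classes=80,
        in_channel=64,
        feat_channel=64),
)


def with_component(cfg, name, component):
    """Return a deep copy of cfg with one named component replaced (hypothetical helper)."""
    new_cfg = copy.deepcopy(cfg)
    new_cfg[name] = component
    return new_cfg


# Swap only the backbone. ResNet-34 keeps a 512-channel final stage,
# so the neck's in_channel=512 still matches and the rest is untouched.
deeper = with_component(
    model, 'backbone', dict(type='ResNet', depth=34, norm_cfg=dict(type='BN')))
print(deeper['backbone'])
```

Because the helper copies the config before editing it, the original `model` dict stays unchanged and both variants can be kept side by side.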

Apart from MMDetection, we also released mmcv, a library for computer vision research on which this toolbox heavily depends.

What's New

💎 Stable version

2.25.2 was released on 15/9/2022:

- Fix bugs in Dynamic Head and Swin Transformer.

- Refine docstring and documentation.

Please refer to changelog.md for details and release history.

For compatibility changes between different versions of MMDetection, please refer to compatibility.md.

🌟 Preview of 3.x version

A brand new version, MMDetection v3.0.0rc0, was released on 31/8/2022:

- Unifies interfaces of all components based on MMEngine.

- Faster training and testing speed with complete support of mixed precision training.

- Refactored and more flexible architecture.

- Provides stronger baselines and a general semi-supervised object detection framework. See tutorial of semi-supervised detection.

- Allows any kind of single-stage model as an RPN in a two-stage model. See tutorial.

Find more new features in 3.x branch. Issues and PRs are welcome!

Installation

Please refer to Installation for installation instructions.

Getting Started

Please see get_started.md for the basic usage of MMDetection. We provide a Colab tutorial and an instance segmentation Colab tutorial, as well as other tutorials for:

- with existing dataset

- with new dataset

- with existing dataset and new model

- learn about configs

- customize datasets

- customize data pipelines

- customize models

- customize runtime settings

- customize losses

- finetuning models

- export a model to ONNX

- export ONNX to TRT

- weight initialization

- how to xxx

Overview of Benchmark and Model Zoo

Results and models are available in the model zoo.

Supported methods are listed by task: Object Detection, Instance Segmentation, Panoptic Segmentation, and Other. Supported components are listed by type: Backbones, Necks, Loss, and Common.

Some other methods are also supported in projects using MMDetection.

FAQ

Please refer to FAQ for frequently asked questions.

Contributing

We appreciate all contributions to improve MMDetection. Ongoing projects can be found in our GitHub Projects, and community users are welcome to participate in them. Please refer to CONTRIBUTING.md for the contributing guideline.

Acknowledgement

MMDetection is an open source project contributed to by researchers and engineers from various colleges and companies. We appreciate all the contributors who implement their methods or add new features, as well as users who give valuable feedback. We hope that the toolbox and benchmark can serve the growing research community by providing a flexible toolkit to reimplement existing methods and develop new detectors.

Citation

If you use this toolbox or benchmark in your research, please cite this project.

@article{mmdetection,

title = {{MMDetection}: Open MMLab Detection Toolbox and Benchmark},

author = {Chen, Kai and Wang, Jiaqi and Pang, Jiangmiao and Cao, Yuhang and

Xiong, Yu and Li, Xiaoxiao and Sun, Shuyang and Feng, Wansen and

Liu, Ziwei and Xu, Jiarui and Zhang, Zheng and Cheng, Dazhi and

Zhu, Chenchen and Cheng, Tianheng and Zhao, Qijie and Li, Buyu and

Lu, Xin and Zhu, Rui and Wu, Yue and Dai, Jifeng and Wang, Jingdong

and Shi, Jianping and Ouyang, Wanli and Loy, Chen Change and Lin, Dahua},

journal= {arXiv preprint arXiv:1906.07155},

year={2019}

}

License

This project is released under the Apache 2.0 license.

Projects in OpenMMLab

- MMEngine: OpenMMLab foundational library for training deep learning models.

- MMCV: OpenMMLab foundational library for computer vision.

- MIM: MIM installs OpenMMLab packages.

- MMClassification: OpenMMLab image classification toolbox and benchmark.

- MMDetection: OpenMMLab detection toolbox and benchmark.

- MMDetection3D: OpenMMLab's next-generation platform for general 3D object detection.

- MMRotate: OpenMMLab rotated object detection toolbox and benchmark.

- MMSegmentation: OpenMMLab semantic segmentation toolbox and benchmark.

- MMOCR: OpenMMLab text detection, recognition, and understanding toolbox.

- MMPose: OpenMMLab pose estimation toolbox and benchmark.

- MMHuman3D: OpenMMLab 3D human parametric model toolbox and benchmark.

- MMSelfSup: OpenMMLab self-supervised learning toolbox and benchmark.

- MMRazor: OpenMMLab model compression toolbox and benchmark.

- MMFewShot: OpenMMLab fewshot learning toolbox and benchmark.

- MMAction2: OpenMMLab's next-generation action understanding toolbox and benchmark.

- MMTracking: OpenMMLab video perception toolbox and benchmark.

- MMFlow: OpenMMLab optical flow toolbox and benchmark.

- MMEditing: OpenMMLab image and video editing toolbox.

- MMGeneration: OpenMMLab image and video generative models toolbox.

- MMDeploy: OpenMMLab model deployment framework.