Approximetal

> Sorry about the delay @Approximetal. That's a lot of data so I don't think finetuning is necessary. Since you have a big dataset my guess is that the large...

Sorry, I made a mistake. I meant that when I comment out the padding in the inference generation step, the noise disappears. BTW, do I only need to adjust `fc_channels` and `conditioning_channels`?...

As far as I know, the postnet is added to improve the quality of the mel output. Does CBHG play the same role? What about removing this module?

> > My patch doesn't fully fix this. > > @jaacoppi I have tried applying the patch and it seems to work. Could you give more details on why you think...

> It seems to be fine but the discriminator loss is a little bit small. > I'm not sure this is the problem with the amount of training data. >...

> > the loss is almost not decreasing > > Which loss value do you mean? The discriminator loss of PWG keeps the same value around 0.5. [The figure](https://user-images.githubusercontent.com/25227045/87218850-25c7ac00-c389-11ea-85f2-d2454cbd68d3.png) is...
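
For context on the 0.5 value: assuming the least-squares GAN objective described in the Parallel WaveGAN paper, a discriminator that outputs 0.5 for every sample, real or generated, gives a loss of exactly 0.5. That is the value you would expect when the discriminator cannot tell the two apart. A minimal sketch of that arithmetic follows; the function name is hypothetical and not taken from any repository.

```python
def lsgan_discriminator_loss(real_scores, fake_scores):
    """Least-squares GAN discriminator loss (assumed objective):
    mean((D(x) - 1)^2) over real samples + mean(D(G(z))^2) over fake samples."""
    real_term = sum((s - 1.0) ** 2 for s in real_scores) / len(real_scores)
    fake_term = sum(s ** 2 for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


# Discriminator that scores everything 0.5 (cannot separate real from fake).
undecided = lsgan_discriminator_loss([0.5] * 4, [0.5] * 4)

# Discriminator that separates perfectly: real -> 1, fake -> 0.
confident = lsgan_discriminator_loss([1.0] * 4, [0.0] * 4)

print(undecided, confident)
```

Under this assumption, a loss that sits near 0.5 does not by itself say whether the generator is good or the discriminator has stopped learning, so it is worth checking the generated audio as well.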

@kan-bayashi I've tried several times with different learning rates and `lambda_adv` values, but the model always starts overfitting after 1M iterations. Any idea how to avoid this? Thanks.

> I think in the case of PWG 1M iterations are enough. > Why don’t you try adaptation using good single speaker model? In my experiments, it works well with...

> > I'm now training single speaker models > > Did you use pretrained model? > 70 utterances are not enough to train from scratch. > I think it is...

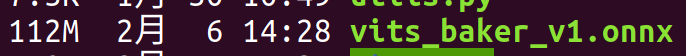

> I have exported it to ONNX, and there seem to be no obstacles to doing this:
>
> ```
> onnxexp glance -m vits_baker_v1.onnx vits!?
> Namespace(model='vits_baker_v1.onnx', subparser_name='glance', verbose=False, version=False)...
> ```