Operator CRD is missing metadata for pod templates

My installation makes use of pod template annotations to enable things like monitoring, for example:

```yaml
apiVersion: clickhouse.altinity.com/v1
kind: ClickHouseInstallation
metadata:
  name: test
  namespace: chop
spec:
  templates:
    podTemplates:
      - name: foo
        metadata:
          baz: qux
```

In practice, the operator applies this metadata when creating the StatefulSets, and it works perfectly.
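To show where that metadata ends up, here is a minimal Python sketch that copies a `podTemplates` entry's `metadata` into a StatefulSet's `spec.template.metadata`, which is the pod template every replica is created from. This is not the operator's actual Go code. The helper `statefulset_from_pod_template`, the StatefulSet name `example-sts`, and the monitoring annotation are hypothetical and chosen only for illustration.

```python
import copy


def statefulset_from_pod_template(sts_name, pod_template):
    """Hypothetical helper: wrap a CHI podTemplates entry in a StatefulSet.

    A StatefulSet's spec.template is a PodTemplateSpec, so labels and
    annotations placed in spec.template.metadata end up on every Pod the
    StatefulSet creates. (Trimmed: a real StatefulSet also needs a
    selector, serviceName, and so on.)
    """
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": sts_name},
        "spec": {
            "template": {
                # Deep-copy so later edits to the CHI object do not leak in.
                "metadata": copy.deepcopy(pod_template.get("metadata", {})),
                "spec": copy.deepcopy(pod_template.get("spec", {})),
            }
        },
    }


# Illustrative podTemplates entry; the annotation is a hypothetical monitoring hint.
pod_template = {
    "name": "foo",
    "metadata": {"annotations": {"prometheus.io/scrape": "true"}},
}
sts = statefulset_from_pod_template("example-sts", pod_template)
print(sts["spec"]["template"]["metadata"])
# {'annotations': {'prometheus.io/scrape': 'true'}}
```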

We recently switched our deployment to Kubernetes Server-Side Apply (SSA), which validates manifests against the CRD schema, and now the installations fail. It looks like the pod template `metadata` field was mistakenly left out of the CRD, and this trivial change resolves it:

```diff
--- clickhouse-operator-install-crd.yaml.orig 2021-08-13 10:26:30.000000000 -0400
+++ clickhouse-operator-install-crd.yaml 2021-08-13 10:35:33.000000000 -0400
@@ -765,6 +765,9 @@
                       type: integer
                       minimum: 0
                       maximum: 65535
+                  metadata:
+                    type: object
+                    nullable: true
                   spec:
                     # TODO specify PodSpec
                     type: object
@@ -1600,6 +1603,9 @@
                       type: integer
                       minimum: 0
                       maximum: 65535
+                  metadata:
+                    type: object
+                    nullable: true
                   spec:
                     # TODO specify PodSpec
                     type: object
```
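To see why a schema-aware apply rejects the original manifest, here is a simplified Python model of schema checking. It walks an object and reports every key the schema does not declare under `properties`. The schemas are trimmed, hypothetical excerpts of the pod template entry: only `name` and `spec` before the patch, plus the patch's `metadata` afterward. The real CRD has more fields, and this model is not how the API server or Terraform actually implement validation.

```python
def undeclared_fields(obj, schema, path=""):
    """Return dotted paths of keys in obj that schema does not declare.

    Model assumptions (not Kubernetes' real algorithm): an "object" schema
    with "properties" accepts only those keys; an "object" schema with no
    "properties" (like the CRD's bare `type: object` entries) accepts any keys.
    """
    problems = []
    if isinstance(obj, dict) and schema.get("type") == "object":
        props = schema.get("properties")
        if props is None:
            return problems  # free-form object: anything goes
        for key, value in obj.items():
            child = f"{path}.{key}" if path else key
            if key in props:
                problems.extend(undeclared_fields(value, props[key], child))
            else:
                problems.append(child)
    elif isinstance(obj, list) and schema.get("type") == "array":
        item_schema = schema.get("items", {})
        for i, item in enumerate(obj):
            problems.extend(undeclared_fields(item, item_schema, f"{path}[{i}]"))
    return problems


# Hypothetical, trimmed pod template schema before the patch.
POD_TEMPLATE_BEFORE = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "spec": {"type": "object"},  # "TODO specify PodSpec" in the CRD
    },
}
# Same schema after the patch adds `metadata: {type: object, nullable: true}`.
POD_TEMPLATE_AFTER = {
    "type": "object",
    "properties": {
        **POD_TEMPLATE_BEFORE["properties"],
        "metadata": {"type": "object", "nullable": True},
    },
}

template = {"name": "foo", "metadata": {"baz": "qux"}}  # from the manifest above
print(undeclared_fields(template, POD_TEMPLATE_BEFORE))  # ['metadata']
print(undeclared_fields(template, POD_TEMPLATE_AFTER))   # []
```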

@Slach, please take a look.

@ericisco, which clickhouse-operator version do you use? @alex-zaitsev, I tried the following manifest:

```yaml
apiVersion: clickhouse.altinity.com/v1
kind: ClickHouseInstallation
metadata:
  name: issue-756
  namespace: test
spec:
  defaults:
    templates:
      podTemplate: foo
  templates:
    podTemplates:
      - name: foo
        metadata:
          labels:
            baz: qux
        spec:
          containers:
            - name: clickhouse
              image: yandex/clickhouse-server:21.8
```

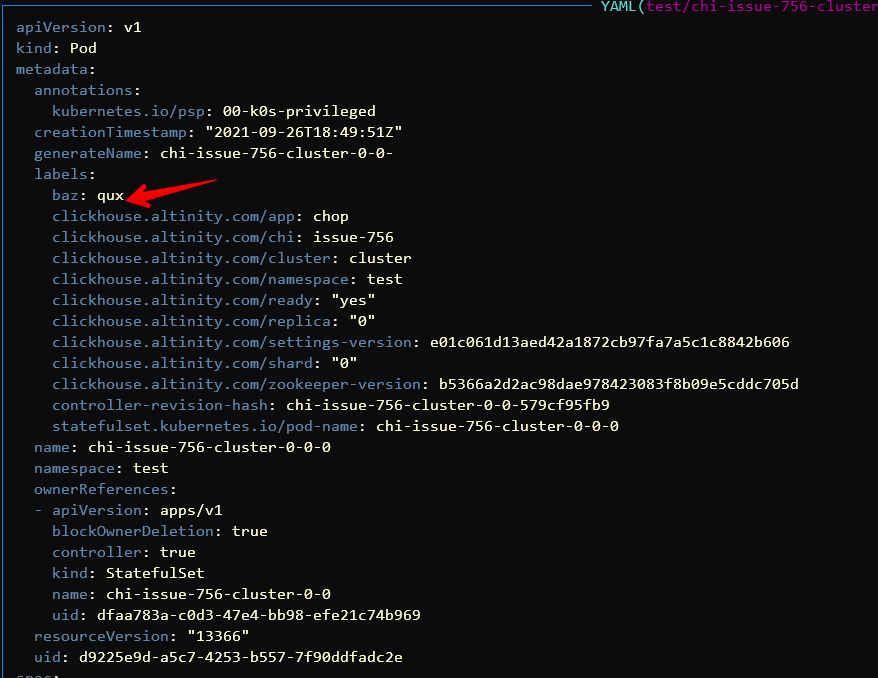

In 0.15.1 and 0.16.0, everything works as expected: the labels are present on the created Pod.

@ericisco, is this issue still relevant for you?

If so, could you share your ClickHouseInstallation manifest?

Hi,

I was testing this using version 0.15.0.

Did you apply that manifest using SSA?

As far as the operator is concerned, transferring the metadata to the pods works. The issue is that when the ClickHouseInstallation manifest is applied via Kubernetes Server-Side Apply, it fails validation and is not applied at all, because the CRD does not include the `metadata` field (see the diff in the original description).

The problem surfaced for me when I tried to use a Terraform `kubernetes_manifest` resource, which requires SSA. I would expect other (non-Terraform) SSA methods to have the same problem, but admittedly I did not try any other approaches.

I hope that clarifies the issue.

One workaround is to set `force-conflicts` to true. From the `kubectl apply` help:

`--force-conflicts=false`: If true, server-side apply will force the changes against conflicts.

But in my humble opinion, this is not safe, as it defeats the purpose of server-side validation.
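The `--force-conflicts` help text refers to server-side apply's field-ownership conflicts. A conflict arises when an apply would change a field owned by a different field manager, and forcing overwrites the value and makes the applier that field's sole owner. The toy Python model below illustrates only that ownership rule for flat objects. The function `server_side_apply` and the manager names `kubectl` and `terraform` are hypothetical. The model ignores schema validation, nested fields, and field removal.

```python
class Conflict(Exception):
    """Raised when an apply would change a field owned by another manager."""


def server_side_apply(live, owners, manager, patch, force=False):
    """Toy model of SSA field ownership for a flat object (mutates in place).

    live:    current field values, e.g. {"replicas": 3}
    owners:  field name -> set of field managers that own it
    manager: the field manager performing this apply
    patch:   the fields (and values) this manager wants to set
    """
    conflicts = sorted(
        f for f, v in patch.items()
        if f in live and live[f] != v and owners.get(f, set()) - {manager}
    )
    if conflicts and not force:
        raise Conflict(f"{manager} conflicts with other managers on {conflicts}")
    for f, v in patch.items():
        if f in conflicts:
            owners[f] = {manager}  # forced: overwrite and become sole owner
        else:
            owners.setdefault(f, set()).add(manager)  # no conflict: (co-)own
        live[f] = v
    return live, owners


live, owners = {"replicas": 3}, {"replicas": {"kubectl"}}
try:
    server_side_apply(live, owners, "terraform", {"replicas": 5})
except Conflict as err:
    print(err)  # terraform conflicts with other managers on ['replicas']
server_side_apply(live, owners, "terraform", {"replicas": 5}, force=True)
print(live, owners)  # {'replicas': 5} {'replicas': {'terraform'}}
```

In this model, a second manager that applies the same value does not conflict. Both managers then share ownership of the field.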

@ericisco, could you test it with the new 0.16.1 version? We have fixed a few minor manifest issues.