AutoQuant

AutoQuant copied to clipboard

AutoQuant copied to clipboard

R package for automation of machine learning, forecasting, model evaluation, and model interpretation

RemixAutoML website and reference manual

Table of Contents

- Background

- Highlights

- Installation

- RemixAutoML Blogs

Documentation + Code Examples

- Feature Engineering

- Supervised Learning

- Model Scoring

- Model Evaluation

- Panel Data Forecasting

- Intermittent Demand Forecasting

- Funnel Forecasting

- Time Series Forecasting

- Recommender Systems

- Database Management

- Utilities EDA and Misc

Background

Expand to view content

Automated Machine Learning - In my view, AutoML should consist of functions to help make professional model development and operationalization more efficient. The functions in this package are there to help no matter which part of the ML lifecycle you are working on. The functions in this package have been tested across a variety of industries and have consistently outperformed competing methods. RemixAutoML has enabled me to deliver exceptionally high quality solutions in minimal time and as a result I can focus more time on the other aspects of projects that are critical for generating a high ROI and high adoption rate.

Package Details

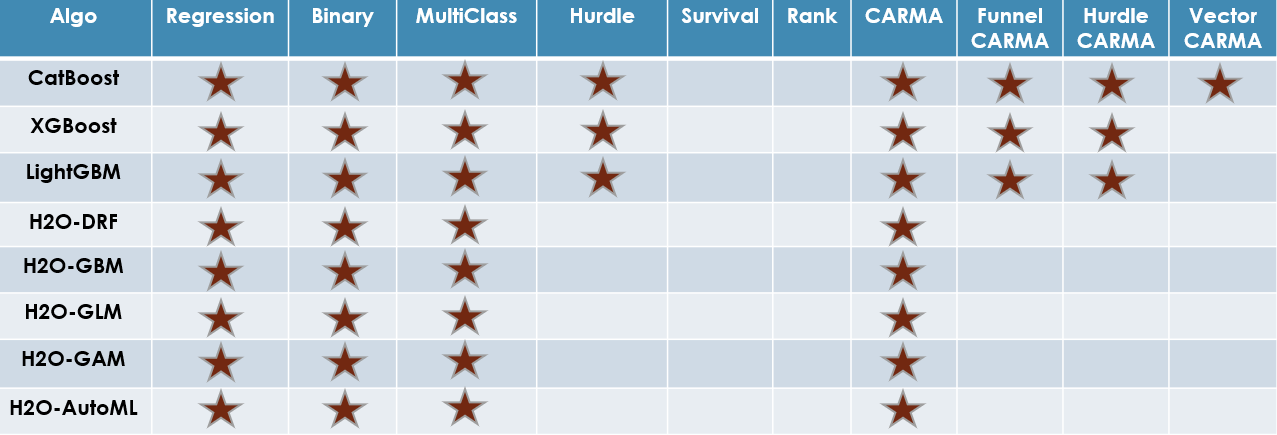

Supervised Learning - Currently, I'm utilizing CatBoost, LightGBM, XGBoost, and H2O for all of the automated Machine Learning related functions. GPU's can be utilized with CatBoost, LightGBM, and XGBoost, while those and the H2O models can all utilize 100% of CPU. Multi-armed bandit grid tuning is available for CatBoost, LightGBM, and XGBoost models, which utilize the concept of randomized probability matching, which is detailed in the R pacakge "bandit". My choice of included ML algorithms in the package is based on previous success when compared against other algorithms on real world use cases, the additional utilities these packages offer aside from accurate predictions, their ability to work on big data, and the fact that they're available in both R and Python which makes managing multiple languages a little more seamless in a professional setting.

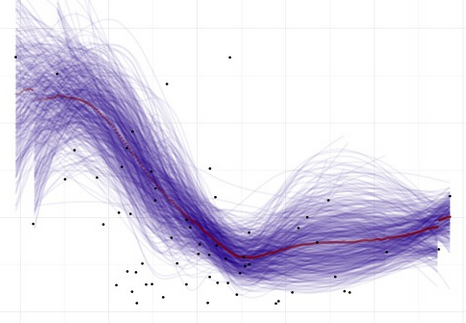

Forecasting - Automated functions for single series, panel data, vector autoregression, intermittent demand, and funnel panel data. The panel data models utilize the machine learning algos from above and the feature engineering functions below. They are extremely feature rich and the combination of all possible feature settings is huge. The models for individual series are fully optimized versions from the R package "forecast". I utilize the multi-armed bandit grid tuning algo used in the supervised learning models and apply it to the SARIMA and NNETAR models from the forecast package. I also measure performance on hold out data (and training data, or a blend of the two).

Feature Engineering - Some of the feature engineering functions can only be found in this package. I believe feature engineering is your best bet for improving model performance. I have functions that cover all feature types except image data. There are feature engineering functions for numeric data, categorical data, text data, and date data. They are all designed to generate features for training and scoring pipelines and they run extremely fast with low memory utilization. The package takes advantage of data.table for all feature engineering and data wrangling related functions which means I only have to go to big data tools if absolutely necessary.

Documentation - Each exported function in the package has a help file and can be viewed in your RStudio session, e.g.

?RemixAutoML::ModelDataPrep. Many of them come with examples coded up in the help files (at the bottom) that you can run to get a feel for how to set the parameters. There's also a listing of exported functions by category with code examples at the bottom of this readme. You can also jump into the R folder here to dig into the source code.

Overall process: Typically, I go to the warehouse to get all of my base features and then I run through all the relevant feature engineering functions in this package. Personally, I set up templates for features engineering, model training optimization, and model scoring (including feature engineering for scoring). I collect all relevant metdata in a list that is shared across templates and as a result, I never have to touch the model scoring template, which makes operationalize and maintenace a breeze. I can simply list out the columns of interest, which feature engineering functions I want to utilize, and then I simply kick off some command line scripts and everything else is automatically managed.

Highlights

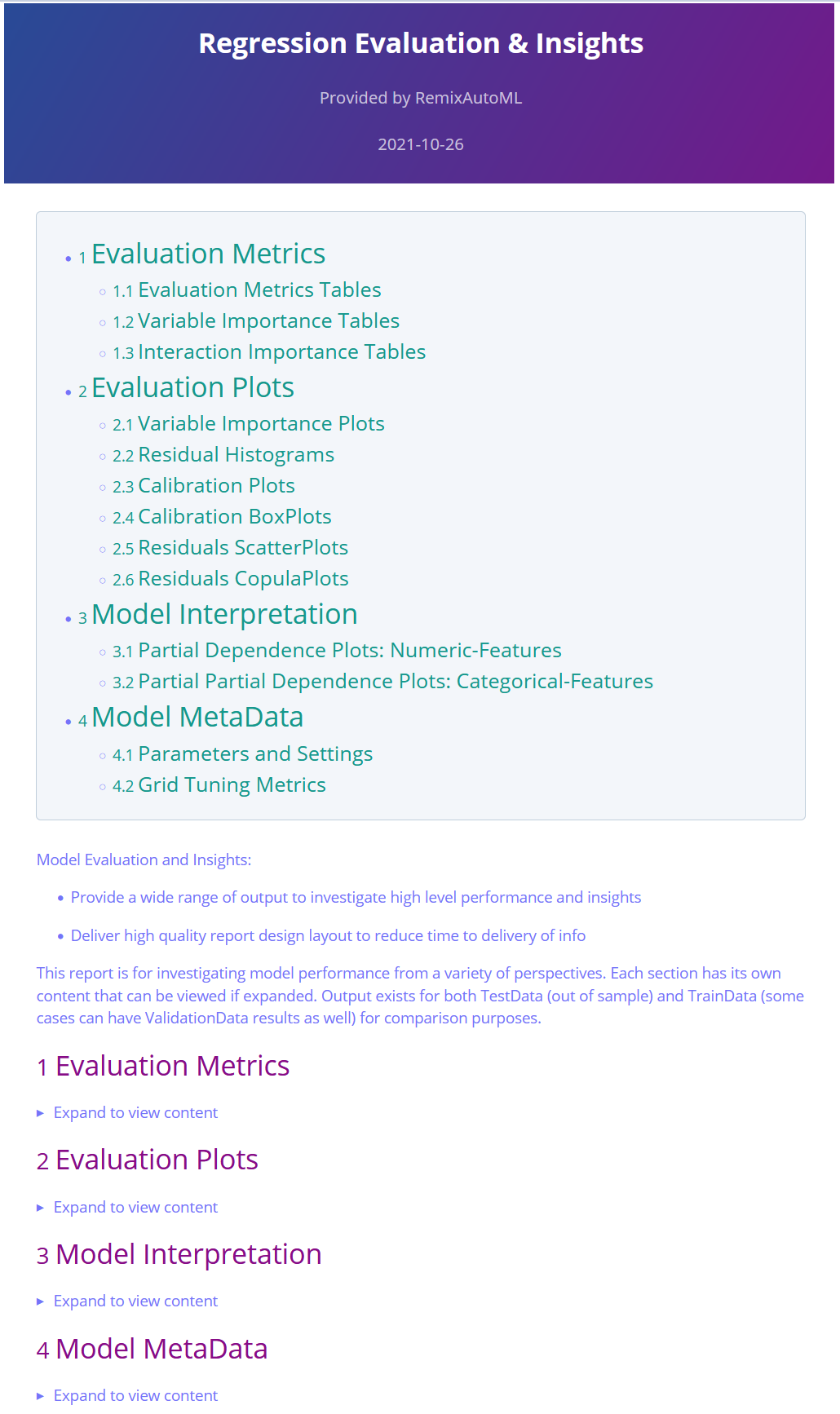

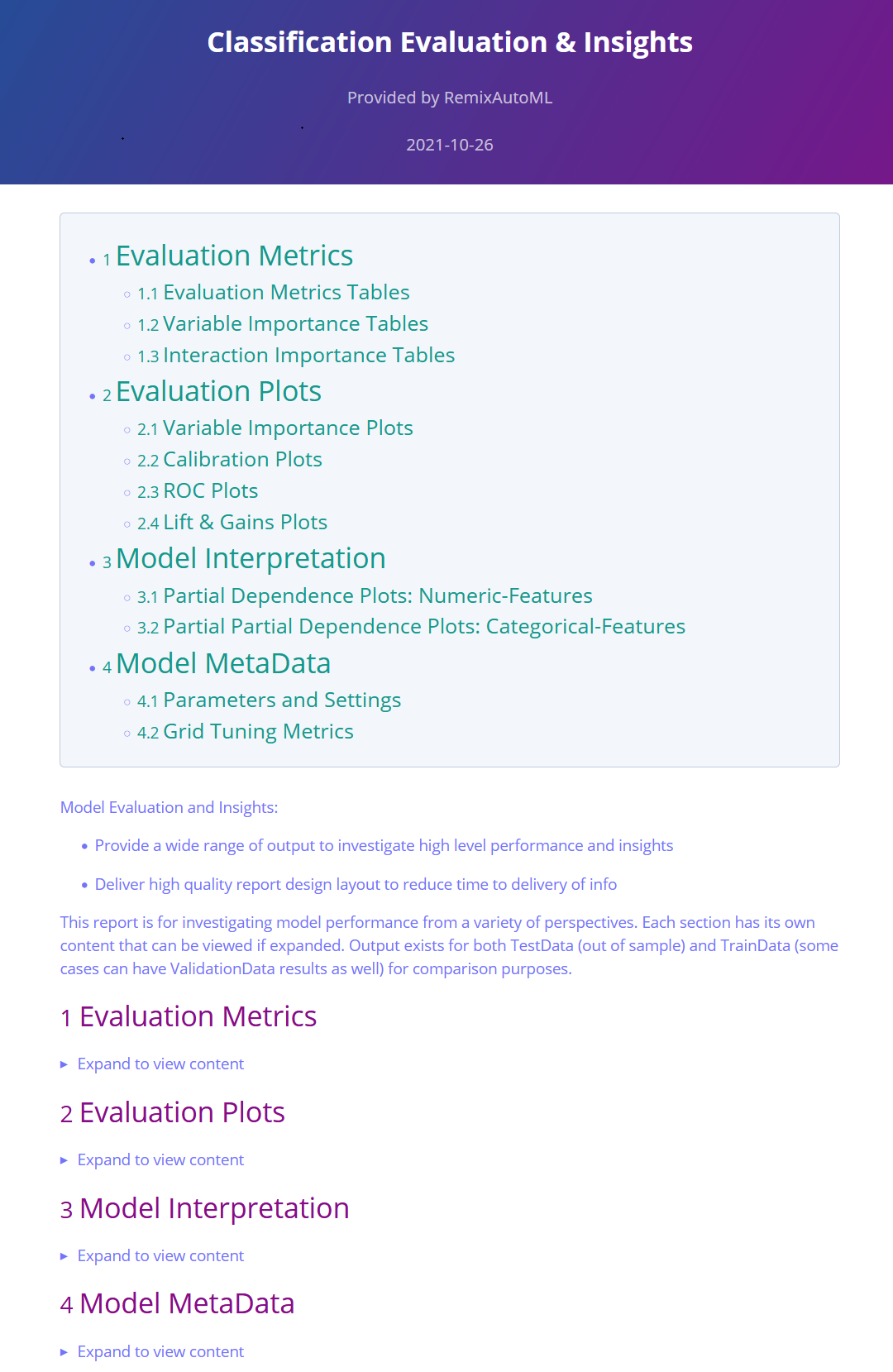

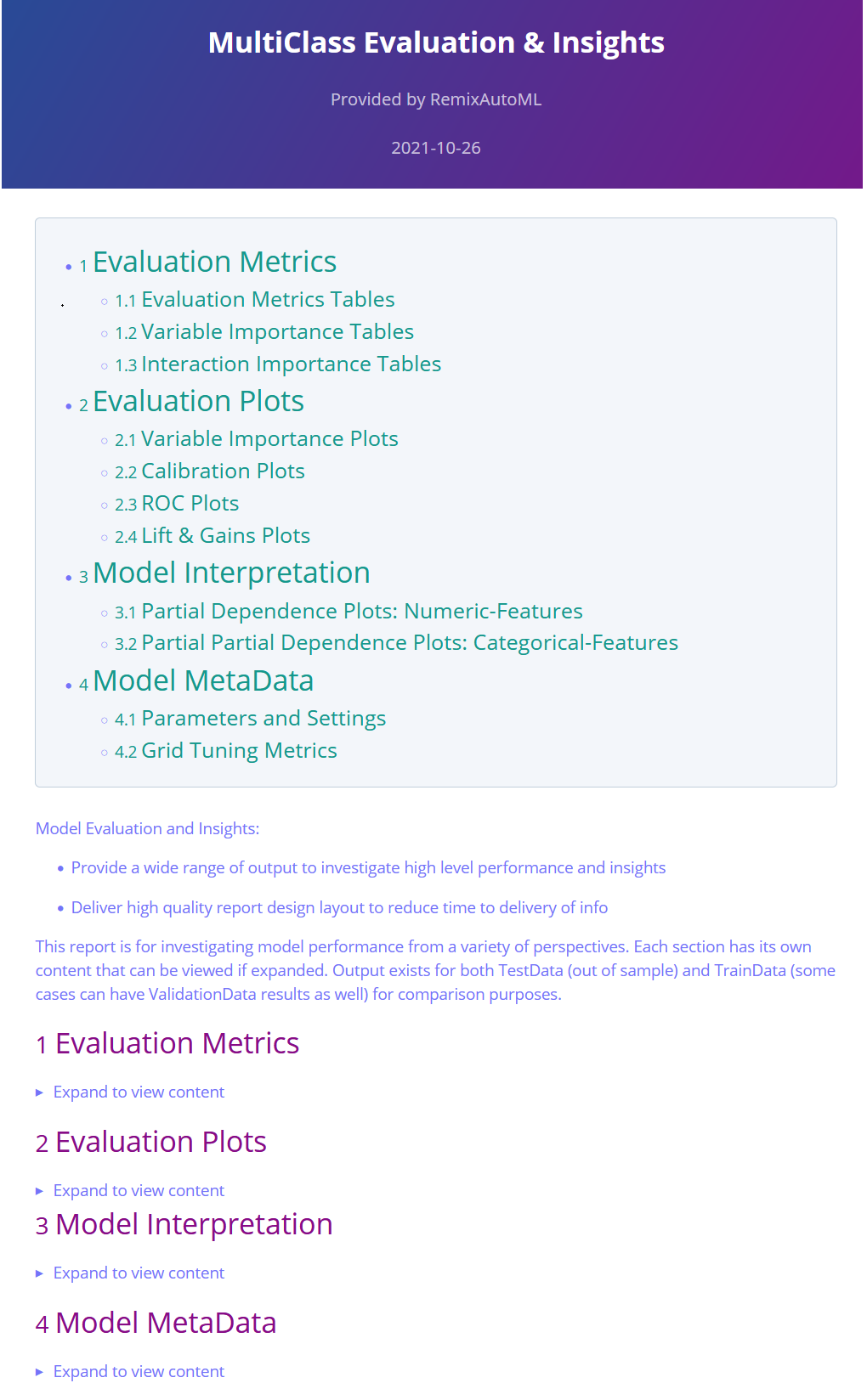

Model Insights Reports

Automatically generate reports with relevant evaluation metrics, tables, plots, and metadata

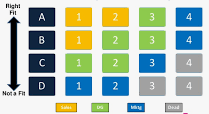

Machine Learning and Panel Forecasting Models

AutoML functions by algorithm type and use case type

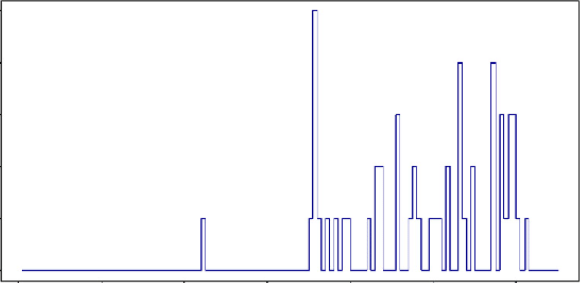

Feature Engineering

Feature engineering functions for a variety of data types and data sets

Installation

The Description File is designed to require only the minimum number of packages to install RemixAutoML. However, in order to utilize most of the functions in the package, you'll have to install additional libraries. I set it up this way on purpose. You don't need to install every single possible dependency if you are only interested in using a few of the functions. For example, if you only want to use CatBoost then install the catboost package and forget about the h2o, xgboost, and lightgbm packages. This is one of the primary benefits of not hosting an R package on cran, as they require dependencies to be part of the Imports section on the Description File, which subsequently requires users to have all dependencies installed in order to install the package.

The minimal set of packages that need to be installed are below. The full list can be found by expanding the section (Expand to view content).

- arules

- bit64

- data.table

- doParallel

- foreach

- lubridate

- timeDate

# Core pacakges

if(!("data.table" %in% rownames(installed.packages()))) install.packages("data.table"); print("data.table")

if(!("collapse" %in% rownames(install.packages()))) install.packages("collapse"); print("collapse")

if(!("arules" %in% rownames(installed.packages()))) install.packages("arules"); print("arules")

if(!("bit64" %in% rownames(installed.packages()))) install.packages("bit64"); print("bit64")

if(!("devtools" %in% rownames(installed.packages()))) install.packages("devtools"); print("devtools")

if(!("doParallel" %in% rownames(installed.packages()))) install.packages("doParallel"); print("doParallel")

if(!("foreach" %in% rownames(installed.packages()))) install.packages("foreach"); print("foreach")

if(!("lubridate" %in% rownames(installed.packages()))) install.packages("lubridate"); print("lubridate")

if(!("timeDate" %in% rownames(installed.packages()))) install.packages("timeDate"); print("timeDate")

# RemixAutoML

devtools::install_github('AdrianAntico/RemixAutoML', upgrade = FALSE, dependencies = FALSE, force = TRUE)

Expand to view content

Install ALL R package dependencies for all functions:

XGBoost and LightGBM can be used with GPU. However, their installation is much more involved than CatBoost, which comes with GPU capabilities simply by installing their package. The installation instructions for them below is for the CPU version only. Refer to each's home page for instructions for installing for GPU.

# Install Dependencies----

if(!("devtools" %in% rownames(installed.packages()))) install.packages("devtools"); print("devtools")

# Core pacakges

if(!("data.table" %in% rownames(installed.packages()))) install.packages("data.table"); print("data.table")

if(!("collapse" %in% rownames(install.packages()))) install.packages("collapse"); print("collapse")

if(!("arules" %in% rownames(installed.packages()))) install.packages("arules"); print("arules")

if(!("bit64" %in% rownames(installed.packages()))) install.packages("bit64"); print("bit64")

if(!("devtools" %in% rownames(installed.packages()))) install.packages("devtools"); print("devtools")

if(!("doParallel" %in% rownames(installed.packages()))) install.packages("doParallel"); print("doParallel")

if(!("foreach" %in% rownames(installed.packages()))) install.packages("foreach"); print("foreach")

if(!("lubridate" %in% rownames(installed.packages()))) install.packages("lubridate"); print("lubridate")

if(!("timeDate" %in% rownames(installed.packages()))) install.packages("timeDate"); print("timeDate")

# Additional dependencies for specific use cases

if(!("combinat" %in% rownames(install.packages()))) install.packages("combinat"); print("combinat")

if(!("DBI" %in% rownames(install.packages()))) install.packages("DBI"); print("DBI")

if(!("e1071" %in% rownames(installed.packages()))) install.packages("e1071"); print("e1071")

if(!("fBasics" %in% rownames(installed.packages()))) install.packages("fBasics"); print("fBasics")

if(!("forecast" %in% rownames(installed.packages()))) install.packages("forecast"); print("forecast")

if(!("fpp" %in% rownames(installed.packages()))) install.packages("fpp"); print("fpp")

if(!("ggplot2" %in% rownames(installed.packages()))) install.packages("ggplot2"); print("ggplot2")

if(!("gridExtra" %in% rownames(installed.packages()))) install.packages("gridExtra"); print("gridExtra")

if(!("itertools" %in% rownames(installed.packages()))) install.packages("itertools"); print("itertools")

if(!("MLmetrics" %in% rownames(installed.packages()))) install.packages("MLmetrics"); print("MLmetrics")

if(!("nortest" %in% rownames(installed.packages()))) install.packages("nortest"); print("nortest")

if(!("pROC" %in% rownames(installed.packages()))) install.packages("pROC"); print("pROC")

if(!("RColorBrewer" %in% rownames(installed.packages()))) install.packages("RColorBrewer"); print("RColorBrewer")

if(!("recommenderlab" %in% rownames(installed.packages()))) install.packages("recommenderlab"); print("recommenderlab")

if(!("RPostgres" %in% rownames(install.packages()))) install.packages("RPostgres"); print("RPostgres")

if(!("Rfast" %in% rownames(installed.packages()))) install.packages("Rfast"); print("Rfast")

if(!("scatterplot3d" %in% rownames(installed.packages()))) install.packages("scatterplot3d"); print("scatterplot3d")

if(!("stringr" %in% rownames(installed.packages()))) install.packages("stringr"); print("stringr")

if(!("tsoutliers" %in% rownames(installed.packages()))) install.packages("tsoutliers"); print("tsoutliers")

if(!("xgboost" %in% rownames(installed.packages()))) install.packages("xgboost"); print("xgboost")

if(!("lightgbm" %in% rownames(installed.packages()))) install.packages("lightgbm"); print("lightgbm")

if(!("regmedint" %in% rownames(installed.packages()))) install.packages("regmedint"); print("regmedint")

if(!("lme4" %in% rownames(installed.packages()))) install.packages("regmedint"); print("regmedint")

# If using R 4.20 with rtools42. Uninstall rstan and StanHeaders first (if they are installed). Put rtools42 in the C:/ drive; C:/rtools42

if(!("StanHeaders" %in% rownames(installed.packages()))) install.packages("StanHeaders", repos = c("https://mc-stan.org/r-packages/", getOption("repos")))

if(!("rstan" %in% rownames(installed.packages()))) install.packages("rstan", repos = c("https://mc-stan.org/r-packages/", getOption("repos")))

if(!("brms" %in% rownames(installed.packages()))) install.packages("brms"); print("brms")

for(pkg in c("RCurl","jsonlite")) if (! (pkg %in% rownames(installed.packages()))) { install.packages(pkg) }

install.packages("h2o", type = "source", repos = (c("http://h2o-release.s3.amazonaws.com/h2o/latest_stable_R")))

devtools::install_github('catboost/catboost', subdir = 'catboost/R-package')

devtools::install_github('AdrianAntico/prettydoc', upgrade = FALSE, dependencies = FALSE, force = TRUE)

# And lastly, RemixAutoML

devtools::install_github('AdrianAntico/RemixAutoML', upgrade = FALSE, dependencies = FALSE, force = TRUE)

Installation Troubleshooting

The most common issue some users are having when trying to install RemixAutoML is the installation of the catboost package dependency. Since catboost is not on CRAN it can only be installed through GitHub. To install catboost without error (and consequently install RemixAutoML without error), try running this line of code first, then restart your R session, then re-run the 2-step installation process above. (Reference):

If you're still having trouble submit an issue and I'll work with you to get it installed.

# Method for on premise servers

options(devtools.install.args = c("--no-multiarch", "--no-test-load"))

install.packages("https://github.com/catboost/catboost/releases/download/v0.26.1/catboost-R-Windows-0.26.1.tgz", repos = NULL, type = "source", INSTALL_opts = c("--no-multiarch", "--no-test-load"))

# Method for azure machine learning Designer pipelines

## catboost

install.packages("https://github.com/catboost/catboost/releases/download/v0.26.1/catboost-R-Windows-0.26.1.tgz", repos = NULL, type = "source", INSTALL_opts = c("--no-multiarch", "--no-test-load"))

## RemixAutoML

install.packages("https://github.com/AdrianAntico/RemixAutoML/archive/refs/tags/V0.5.9.tar.gz", repos = NULL, type = "source", INSTALL_opts = c("--no-multiarch", "--no-test-load"))

If you're having still having trouble installing see if the issue below helps out:

RemixAutoML Blogs

Expand to view content

Sales Funnel Forecasting with ML using RemixAutoML

The Most Feature Rich ML Forecasting Methods Available

AutoML Frameworks in R & Python

AI for Small to Medium Size Businesses: A Management Take On The Challenges...

Why Machine Learning is more Practical than Econometrics in the Real World

Build Thousands of Automated Demand Forecasts in 15 Minutes Using AutoCatBoostCARMA in R

Automate Your KPI Forecasts With Only 1 Line of R Code Using AutoTS

Feature Engineering

Expand to view content

AutoLagRollStats() and AutoLagRollStatsScoring()

Code Example

# Create fake Panel Data----

Count <- 1L

for(Level in LETTERS) {

datatemp <- RemixAutoML::FakeDataGenerator(

Correlation = 0.75,

N = 25000L,

ID = 0L,

ZIP = 0L,

FactorCount = 0L,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)

datatemp[, Factor1 := eval(Level)]

if(Count == 1L) {

data <- data.table::copy(datatemp)

} else {

data <- data.table::rbindlist(list(data, data.table::copy(datatemp)))

}

Count <- Count + 1L

}

# Add scoring records

data <- RemixAutoML::AutoLagRollStats(

# Data

data = data,

DateColumn = "DateTime",

Targets = "Adrian",

HierarchyGroups = NULL,

IndependentGroups = c("Factor1"),

TimeUnitAgg = "days",

TimeGroups = c("days", "weeks", "months", "quarters"),

TimeBetween = NULL,

TimeUnit = "days",

# Services

RollOnLag1 = TRUE,

Type = "Lag",

SimpleImpute = TRUE,

# Calculated Columns

Lags = list("days" = c(seq(1,5,1)), "weeks" = c(seq(1,3,1)), "months" = c(seq(1,2,1)), "quarters" = c(seq(1,2,1))),

MA_RollWindows = list("days" = c(seq(1,5,1)), "weeks" = c(seq(1,3,1)), "months" = c(seq(1,2,1)), "quarters" = c(seq(1,2,1))),

SD_RollWindows = NULL,

Skew_RollWindows = NULL,

Kurt_RollWindows = NULL,

Quantile_RollWindows = NULL,

Quantiles_Selected = NULL,

Debug = FALSE)

Code Example

# Create fake Panel Data----

Count <- 1L

for(Level in LETTERS) {

datatemp <- RemixAutoML::FakeDataGenerator(

Correlation = 0.75,

N = 25000L,

ID = 0L,

ZIP = 0L,

FactorCount = 0L,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)

datatemp[, Factor1 := eval(Level)]

if(Count == 1L) {

data <- data.table::copy(datatemp)

} else {

data <- data.table::rbindlist(list(data, data.table::copy(datatemp)))

}

Count <- Count + 1L

}

# Create ID columns to know which records to score

data[, ID := .N:1L, by = "Factor1"]

data.table::set(data, i = which(data[["ID"]] == 2L), j = "ID", value = 1L)

# Score records

data <- RemixAutoML::AutoLagRollStatsScoring(

# Data

data = data,

RowNumsID = "ID",

RowNumsKeep = 1,

DateColumn = "DateTime",

Targets = "Adrian",

HierarchyGroups = c("Store","Dept"),

IndependentGroups = NULL,

# Services

TimeBetween = NULL,

TimeGroups = c("days", "weeks", "months"),

TimeUnit = "day",

TimeUnitAgg = "day",

RollOnLag1 = TRUE,

Type = "Lag",

SimpleImpute = TRUE,

# Calculated Columns

Lags = list("days" = c(seq(1,5,1)), "weeks" = c(seq(1,3,1)), "months" = c(seq(1,2,1))),

MA_RollWindows = list("days" = c(seq(1,5,1)), "weeks" = c(seq(1,3,1)), "months" = c(seq(1,2,1))),

SD_RollWindows = list("days" = c(seq(1,5,1)), "weeks" = c(seq(1,3,1)), "months" = c(seq(1,2,1))),

Skew_RollWindows = list("days" = c(seq(1,5,1)), "weeks" = c(seq(1,3,1)), "months" = c(seq(1,2,1))),

Kurt_RollWindows = list("days" = c(seq(1,5,1)), "weeks" = c(seq(1,3,1)), "months" = c(seq(1,2,1))),

Quantile_RollWindows = list("days" = c(seq(1,5,1)), "weeks" = c(seq(1,3,1)), "months" = c(seq(1,2,1))),

Quantiles_Selected = c("q5","q10","q95"),

Debug = FALSE)

Function Description

AutoLagRollStats() builds lags and rolling statistics by grouping variables and their interactions along with multiple different time aggregations if selected. Rolling stats include mean, sd, skewness, kurtosis, and the 5th - 95th percentiles. This function was inspired by the distributed lag modeling framework but I wanted to use it for time series analysis as well and really generalize it as much as possible. The beauty of this function is inspired by analyzing whether a baseball player will get a basehit or more in his next at bat. One easy way to get a better idea of the likelihood is to look at his batting average and his career batting average. However, players go into hot streaks and slumps. How do we account for that? Well, in comes the functions here. You look at the batting average over the last N to N+x at bats, for various N and x. I keep going though - I want the same windows for calculating the players standard deviation, skewness, kurtosis, and various quantiles over those time windows. I also want to look at all those measure but by using weekly data - as in, over the last N weeks, pull in those stats too.

AutoLagRollStatsScoring() builds the above features for a partial set of records in a data set. The function is extremely useful as it can compute these feature vectors at a significantly faster rate than the non scoring version which comes in handy for scoring ML models. If you can find a way to make it faster, let me know.

AutoLagRollMode()

Code Example

# NO GROUPING CASE: Create fake Panel Data----

Count <- 1L

for(Level in LETTERS) {

datatemp <- RemixAutoML::FakeDataGenerator(

Correlation = 0.75,

N = 25000L,

ID = 0L,

ZIP = 0L,

FactorCount = 2L,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)

datatemp[, Factor1 := eval(Level)]

if(Count == 1L) {

data <- data.table::copy(datatemp)

} else {

data <- data.table::rbindlist(

list(data, data.table::copy(datatemp)))

}

Count <- Count + 1L

}

# NO GROUPING CASE: Create rolling modes for categorical features

data <- RemixAutoML::AutoLagRollMode(

data,

Lags = seq(1,5,1),

ModePeriods = seq(2,5,1),

Targets = c("Factor_1"),

GroupingVars = NULL,

SortDateName = "DateTime",

WindowingLag = 1,

Type = "Lag",

SimpleImpute = TRUE)

# GROUPING CASE: Create fake Panel Data----

Count <- 1L

for(Level in LETTERS) {

datatemp <- RemixAutoML::FakeDataGenerator(

Correlation = 0.75,

N = 25000L,

ID = 0L,

ZIP = 0L,

FactorCount = 2L,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)

datatemp[, Factor1 := eval(Level)]

if(Count == 1L) {

data <- data.table::copy(datatemp)

} else {

data <- data.table::rbindlist(

list(data, data.table::copy(datatemp)))

}

Count <- Count + 1L

}

# GROUPING CASE: Create rolling modes for categorical features

data <- RemixAutoML::AutoLagRollMode(

data,

Lags = seq(1,5,1),

ModePeriods = seq(2,5,1),

Targets = c("Factor_1"),

GroupingVars = "Factor_2",

SortDateName = "DateTime",

WindowingLag = 1,

Type = "Lag",

SimpleImpute = TRUE)

Function Description

AutoLagRollMode() Generate lags and rolling modes for categorical variables

AutoDiffLagN()

Code Example

##############################

# Current minus lag1

##############################

# Create fake data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.70,

N = 50000,

ID = 2L,

FactorCount = 3L,

AddDate = TRUE,

ZIP = 0L,

TimeSeries = FALSE,

ChainLadderData = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Store Cols to diff

Cols <- names(data)[which(unlist(data[, lapply(.SD, is.numeric)]))]

# Clean data before running AutoDiffLagN

data <- RemixAutoML::ModelDataPrep(

data = data,

Impute = FALSE,

CharToFactor = FALSE,

FactorToChar = TRUE)

# Run function

data <- RemixAutoML::AutoDiffLagN(

data,

DateVariable = "DateTime",

GroupVariables = c("Factor_2"),

DiffVariables = Cols,

DiffDateVariables = "DateTime",

DiffGroupVariables = "Factor_1",

NLag1 = 0,

NLag2 = 1,

Sort = TRUE,

RemoveNA = TRUE)

##############################

# lag1 minus lag3

##############################

# Create fake data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.70,

N = 50000,

ID = 2L,

FactorCount = 3L,

AddDate = TRUE,

ZIP = 0L,

TimeSeries = FALSE,

ChainLadderData = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Store Cols to diff

Cols <- names(data)[which(unlist(data[, lapply(.SD, is.numeric)]))]

# Clean data before running AutoDiffLagN

data <- RemixAutoML::ModelDataPrep(

data = data,

Impute = FALSE,

CharToFactor = FALSE,

FactorToChar = TRUE)

# Run function

data <- RemixAutoML::AutoDiffLagN(

data,

DateVariable = "DateTime",

GroupVariables = c("Factor_2"),

DiffVariables = Cols,

DiffDateVariables = "DateTime",

DiffGroupVariables = "Factor_1",

NLag1 = 1,

NLag2 = 3,

Sort = TRUE,

RemoveNA = TRUE)

Function Description

AutoDiffLagN() Generate differences for numeric columns, date columns, and categorical columns, by groups. You can specify NLag1 and NLag2 to generate the diffs based on any two time periods.

AutoInteraction()

Code Example

#########################################

# Feature Engineering for Model Training

#########################################

# Create fake data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.70,

N = 50000,

ID = 2L,

FactorCount = 2L,

AddDate = TRUE,

ZIP = 0L,

TimeSeries = FALSE,

ChainLadderData = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Print number of columns

print(ncol(data))

# Store names of numeric and integer cols

Cols <-names(data)[c(which(unlist(lapply(data, is.numeric))),

which(unlist(lapply(data, is.integer))))]

# Model Training Feature Engineering

system.time(data <- RemixAutoML::AutoInteraction(

data = data,

NumericVars = Cols,

InteractionDepth = 4,

Center = TRUE,

Scale = TRUE,

SkipCols = NULL,

Scoring = FALSE,

File = getwd()))

# user system elapsed

# 0.32 0.22 0.53

# Print number of columns

print(ncol(data))

# 16

########################################

# Feature Engineering for Model Scoring

########################################

# Create fake data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.70,

N = 50000,

ID = 2L,

FactorCount = 2L,

AddDate = TRUE,

ZIP = 0L,

TimeSeries = FALSE,

ChainLadderData = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Print number of columns

print(ncol(data))

# 16

# Reduce to single row to mock a scoring scenario

data <- data[1L]

# Model Scoring Feature Engineering

system.time(data <- RemixAutoML::AutoInteraction(

data = data,

NumericVars = names(data)[

c(which(unlist(lapply(data, is.numeric))),

which(unlist(lapply(data, is.integer))))],

InteractionDepth = 4,

Center = TRUE,

Scale = TRUE,

SkipCols = NULL,

Scoring = TRUE,

File = file.path(getwd(), "Standardize.Rdata")))

# user system elapsed

# 0.19 0.00 0.19

# Print number of columns

print(ncol(data))

# 1095

Function Description

AutoInteraction() will build out any number of interactions you want for numeric variables. You supply a character vector of numeric or integer column names, along with the names of any numeric columns you want to skip (including the interaction column names) and the interactions will be automatically created for you. For example, if you want a 4th degree interaction from 10 numeric columns, you will have 10 C 2, 10 C 3, and 10 C 4 columns created. Now, let's say you build all those features and decide you don't want all 10 features to be included. Remove the feature name from the NumericVars character vector. Now, let's say you modeled all of the interaction features and want to remove the ones will the lowest scores on the variable importance list. Grab the names and run the interaction function again except this time supply those poor performing interaction column names to the SkipCols argument and they will be ignored. Now, if you want to interact any categorical variable with a numeric variable, you'll have to dummify the categorical variable first and then include the level specific dummy variable column names to the NumericVars character vector argument. If you set Center and Scale to TRUE then the interaction multiplication won't create huge numbers.

AutoWord2VecModeler() and AutoWord2VecScoring()

Code Example

# Create fake data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.70,

N = 1000L,

ID = 2L,

FactorCount = 2L,

AddDate = TRUE,

AddComment = TRUE,

ZIP = 2L,

TimeSeries = FALSE,

ChainLadderData = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Create Model and Vectors

data <- RemixAutoML::AutoWord2VecModeler(

data,

BuildType = "individual",

stringCol = c("Comment"),

KeepStringCol = FALSE,

ModelID = "Model_1",

model_path = getwd(),

vects = 10,

MinWords = 1,

WindowSize = 1,

Epochs = 25,

SaveModel = "standard",

Threads = max(1,parallel::detectCores()-2),

MaxMemory = "28G")

# Remove data

rm(data)

# Create fake data for mock scoring

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.70,

N = 1000L,

ID = 2L,

FactorCount = 2L,

AddDate = TRUE,

AddComment = TRUE,

ZIP = 2L,

TimeSeries = FALSE,

ChainLadderData = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Create vectors for scoring

data <- RemixAutoML::AutoWord2VecScoring(

data,

BuildType = "individual",

ModelObject = NULL,

ModelID = "Model_1",

model_path = getwd(),

stringCol = "Comment",

KeepStringCol = FALSE,

H2OStartUp = TRUE,

H2OShutdown = TRUE,

Threads = max(1L, parallel::detectCores() - 2L),

MaxMemory = "28G")

Function Description

AutoWord2VecModeler() generates a specified number of vectors (word2vec) for each column of text data in your data set that you specify and it will save the models if you specify for re-creating them later in a model scoring process. You can choose to build individual models for each column or one model for all your columns. If you need to run several models for groups of text variables you can run the function several times.

AutoWord2VecScoring() this is for generating word2vec vectors for model scoring situations. The function will load the model, create the transformations, and merge them onto the source data.table just like the training version does.

CategoricalEncoding()

Code Example

# Create fake data with 10 categorical

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000000,

ID = 2L,

ZIP = 0,

FactorCount = 10L,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

# Take your pick

Meth <- c('m_estimator',

'credibility',

'woe',

'target_encoding',

'poly_encode',

'backward_difference',

'helmert')

# Pass to function

MethNum <- 1

# Mock test data with same factor levels

test <- data.table::copy(data)

# Run in Train Mode

data <- RemixAutoML::CategoricalEncoding(

data = data,

ML_Type = "classification",

GroupVariables = paste0("Factor_", 1:10),

TargetVariable = "Adrian",

Method = Meth[MethNum],

SavePath = getwd(),

Scoring = FALSE,

ReturnFactorLevelList = FALSE,

SupplyFactorLevelList = NULL,

KeepOriginalFactors = FALSE)

# View results

print(data)

# Run in Score Mode by pulling in the csv's

test <- RemixAutoML::CategoricalEncoding(

data = data,

ML_Type = "classification",

GroupVariables = paste0("Factor_", 1:10),

TargetVariable = "Adrian",

Method = Meth[MethNum],

SavePath = getwd(),

Scoring = TRUE,

ImputeValueScoring = 222,

ReturnFactorLevelList = FALSE,

SupplyFactorLevelList = NULL,

KeepOriginalFactors = FALSE)

}

Function Description

CategoricalEncoding() enables you to convert your categorical variables into numeric variables in seven different ways. You can choose from m_estimator, credibility (a.k.a. James Stein), weight of evidence, target encoding, poly encoding, backward difference encoding, and helmert encoding. You can run the function for training data and for scoring situations (on demand or batch). For scoring, you can choose to supply an imputation value for new levels that may show up or you can manage them somewhere else in the pipeline. For scoring, you have two options: during the training run you can save the metadata to file by supplying a path to SavePath or you can have the metadata returned by setting ReturnFactorLevelList to TRUE and in scoring your can either have the files pulled from file using the SavePath argument and the function will take care of the rest or you can supply the ReturnFactorLevelList to the SupplyFactorLevelList argument and the function will take care of the rest.

H2OAutoencoder() and H2OAutoencoderScoring()

Code Example

############################

# Training

############################

# Create simulated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.70,

N = 1000L,

ID = 2L,

FactorCount = 2L,

AddDate = TRUE,

AddComment = FALSE,

ZIP = 2L,

TimeSeries = FALSE,

ChainLadderData = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run algo

Output <- RemixAutoML::H2OAutoencoder(

# Select the service

AnomalyDetection = TRUE,

DimensionReduction = TRUE,

# Data related args

data = data,

ValidationData = NULL,

Features = names(data)[2L:(ncol(data)-1L)],

per_feature = FALSE,

RemoveFeatures = TRUE,

ModelID = "TestModel",

model_path = getwd(),

# H2O Environment

NThreads = max(1L, parallel::detectCores()-2L),

MaxMem = "28G",

H2OStart = TRUE,

H2OShutdown = TRUE,

# H2O ML Args

LayerStructure = NULL,

ReturnLayer = 4L,

Activation = "Tanh",

Epochs = 5L,

L2 = 0.10,

ElasticAveraging = TRUE,

ElasticAveragingMovingRate = 0.90,

ElasticAveragingRegularization = 0.001)

# Inspect output

data <- Output$Data

Model <- Output$Model

# If ValidationData is not null

ValidationData <- Output$ValidationData

############################

# Scoring

############################

# Create simulated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.70,

N = 1000L,

ID = 2L,

FactorCount = 2L,

AddDate = TRUE,

AddComment = FALSE,

ZIP = 2L,

TimeSeries = FALSE,

ChainLadderData = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run algo

data <- RemixAutoML::H2OAutoencoderScoring(

# Select the service

AnomalyDetection = TRUE,

DimensionReduction = TRUE,

# Data related args

data = data,

Features = names(data)[2L:ncol(data)],

RemoveFeatures = TRUE,

ModelObject = NULL,

ModelID = "TestModel",

model_path = getwd(),

# H2O args

NThreads = max(1L, parallel::detectCores()-2L),

MaxMem = "28G",

H2OStart = TRUE,

H2OShutdown = TRUE,

ReturnLayer = 4L,

per_feature = FALSE)

Function Description

H2OAutoencoder() Use for dimension reduction and anomaly detection

H2OAutoencoderScoring() Use for dimension reduction and anomaly detection scoring

H2OIsolationForest() and H2OIsolationForestScoring()

Code Example

# Create simulated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.70,

N = 50000,

ID = 2L,

FactorCount = 2L,

AddDate = TRUE,

ZIP = 0L,

TimeSeries = FALSE,

ChainLadderData = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run algo

data <- RemixAutoML::H2OIsolationForest(

data,

Features = names(data)[2L:ncol(data)],

IDcols = c("Adrian", "IDcol_1", "IDcol_2"),

ModelID = "Adrian",

SavePath = getwd(),

Threshold = 0.95,

MaxMem = "28G",

NThreads = -1,

NTrees = 100,

SampleRate = (sqrt(5)-1)/2,

MaxDepth = 8,

MinRows = 1,

ColSampleRate = 1,

ColSampleRatePerLevel = 1,

ColSampleRatePerTree = 1,

CategoricalEncoding = c("AUTO"),

Debug = TRUE)

# Remove output from data and then score

data[, eval(names(data)[17:ncol(data)]) := NULL]

# Run algo

Outliers <- RemixAutoML::H2OIsolationForestScoring(

data,

Features = names(data)[2:ncol(data)],

IDcols = c("Adrian", "IDcol_1", "IDcol_2"),

H2OStart = TRUE,

H2OShutdown = TRUE,

ModelID = "TestModel",

SavePath = getwd(),

Threshold = 0.95,

MaxMem = "28G",

NThreads = -1,

Debug = FALSE)

Function Description

H2OIsolationForecast() Anomaly detection and feature engineering using H2O Isolation Forest. A model is built, your training data is scored, and the model is saved to file for later use in scoring environments with H2OIsolationForestScoring()

H2OIsolationForecastScoring() Scoring function

AutoClustering() and AutoClusteringScoring()

Code Example

#########################

# Training Setup

#########################

# Create fake data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

data <- RemixAutoML::AutoClustering(

data,

FeatureColumns = names(data)[2:(ncol(data)-1)],

ModelID = "TestModel",

SavePath = getwd(),

NThreads = 8,

MaxMemory = "28G",

MaxClusters = 50,

ClusterMetric = "totss",

RunDimReduction = TRUE,

ShrinkRate = (sqrt(5) - 1) / 2,

Epochs = 5L,

L2_Reg = 0.10,

ElasticAveraging = TRUE,

ElasticAveragingMovingRate = 0.90,

ElasticAveragingRegularization = 0.001)

#########################

# Scoring Setup

#########################

Sys.sleep(10)

# Create fake data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

data <- RemixAutoML::AutoClusteringScoring(

data,

FeatureColumns = names(data)[2:(ncol(data)-1)],

ModelID = "TestModel",

SavePath = getwd(),

NThreads = 8,

MaxMemory = "28G",

DimReduction = TRUE)

Function Description

AutoClustering() Generates a single column and merges it onto your data. You can have an autoencoder ran to reduce the dimension size before running the KMeans grid tuning operation. If you provide a directory path, the models will be saved and can be used later in scoring enviroments. I find that I utilize clustering more often for feature engineering that unsupervised learning which is why I put the code example and description here. The function utilizes H2O under the hood with their KMeans algo for the clustering and their deep learning algo for the dimensionality reduction.

CreateCalendarVariables()

Code Example

# Create fake data with a Date column----

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.75,

N = 25000L,

ID = 2L,

ZIP = 0L,

FactorCount = 4L,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)

for(i in seq_len(20L)) {

print(i)

data <- data.table::rbindlist(list(data, RemixAutoML::FakeDataGenerator(

Correlation = 0.75,

N = 25000L,

ID = 2L,

ZIP = 0L,

FactorCount = 4L,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)))

}

# Create calendar variables - automatically excludes the second, minute, and hour selections since

# it is not timestamp data

runtime <- system.time(

data <- RemixAutoML::CreateCalendarVariables(

data = data,

DateCols = "DateTime",

AsFactor = FALSE,

TimeUnits = c("second", "minute", "hour", "wday", "mday", "yday", "week", "isoweek", "wom", "month", "quarter", "year")))

head(data)

print(runtime)

Function Description

CreateCalendarVariables() This functions creates numerical columns based on the date columns you supply such as second, minute, hour, week day, day of month, day of year, week, isoweek, wom, month, quarter, and year.

CreateHolidayVariable()

Code Example

# Create fake data with a Date----

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.75,

N = 25000L,

ID = 2L,

ZIP = 0L,

FactorCount = 4L,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)

for(i in seq_len(20L)) {

print(i)

data <- data.table::rbindlist(list(data, RemixAutoML::FakeDataGenerator(

Correlation = 0.75,

N = 25000L,

ID = 2L,

ZIP = 0L,

FactorCount = 4L,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)))

}

# Run function and time it

runtime <- system.time(

data <- CreateHolidayVariables(

data,

DateCols = "DateTime",

LookbackDays = 7,

HolidayGroups = c("USPublicHolidays","EasterGroup","ChristmasGroup","OtherEcclesticalFeasts"),

Holidays = NULL

Print = FALSE))

head(data)

print(runtime)

Function Description

CreateHolidayVariable()

This function counts up the number of specified holidays between the current record time stamp and the previous record time stamp, by group as well if specified.

DummifyDT()

Code Example

# Create fake data with 10 categorical columns

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 25000,

ID = 2L,

ZIP = 0,

FactorCount = 10L,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Create dummy variables

data <- DummifyDT(

data = data,

cols = c("Factor_1",

"Factor_2",

"Factor_3",

"Factor_4",

"Factor_5",

"Factor_6",

"Factor_8",

"Factor_9",

"Factor_10"),

TopN = c(rep(3,9)),

KeepFactorCols = TRUE,

OneHot = FALSE,

SaveFactorLevels = TRUE,

SavePath = getwd(),

ImportFactorLevels = FALSE,

FactorLevelsList = NULL,

ClustScore = FALSE,

ReturnFactorLevels = FALSE)

# Create Fake Data for Scoring Replication

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 25000,

ID = 2L,

ZIP = 0,

FactorCount = 10L,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Scoring Version (imports csv's to generate matching levels and ordering)

data <- RemixAutoML::DummifyDT(

data = data,

cols = c("Factor_1",

"Factor_2",

"Factor_3",

"Factor_4",

"Factor_5",

"Factor_6",

"Factor_8",

"Factor_9",

"Factor_10"),

TopN = c(rep(3,9)),

KeepFactorCols = TRUE,

OneHot = FALSE,

SaveFactorLevels = TRUE,

SavePath = getwd(),

ImportFactorLevels = TRUE,

FactorLevelsList = NULL,

ClustScore = FALSE,

ReturnFactorLevels = FALSE)

Function Description

DummifyDT() This function is used in the AutoXGBoost__() suite of modeling functions to manage categorical variables in your training, validation, and test sets. This function rapidly dichotomizes categorical columns in a data.table (N+1 columns for N levels using one hot encoding or N columns for N levels otherwise). Several other arguments exist for outputting and saving factor levels. This is useful in model training, validating, and scoring processes.

AutoDataPartition()

Code Example

# Create fake data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run data partitioning function

dataSets <- RemixAutoML::AutoDataPartition(

data,

NumDataSets = 3L,

Ratios = c(0.70,0.20,0.10),

PartitionType = "random",

StratifyColumnNames = NULL,

TimeColumnName = NULL)

# Collect data

TrainData <- dataSets$TrainData

ValidationData <- dataSets$ValidationData

TestData <- dataSets$TestData

Function Description

AutoDataPartition() is designed to achieve a few things that standard data partitioning processes or functions don't handle. First, you can choose to build any number of partitioned data sets beyond the standard train, validate, and test data sets. Second, you can choose between random sampling to split your data or you can choose a time-based partitioning. Third, for the random partitioning, you can specify a stratification columns in your data to stratify by in order to ensure a proper split amongst your categorical features (E.g. think MultiClass targets). Lastly, it's 100% data.table so it will run fast and with low memory overhead.

ModelDataPrep()

Code Example

# Create fake data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.75,

N = 250000L,

ID = 2L,

ZIP = 0L,

FactorCount = 6L,

AddDate = TRUE,

AddComment = FALSE,

TimeSeries = FALSE,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)

# Check column types

str(data)

# RUn function

data <- RemixAutoML::ModelDataPrep(

data,

Impute = TRUE,

CharToFactor = FALSE,

FactorToChar = TRUE,

IntToNumeric = TRUE,

LogicalToBinary = FALSE,

DateToChar = FALSE,

RemoveDates = TRUE,

MissFactor = "0",

MissNum = -1,

IgnoreCols = c("Factor_1"))

# Check column types

str(data)

Function Description

ModelDataPrep() This function will loop through every column in your data and apply a variety of functions based on argument settings. For all columns not ignored, these tasks include:

- Character type to Factor type converstion

- Factor type to Character type conversion

- Constant value imputation for numeric and categorical columns

- Integer type to Numeric type conversion

- Date type to Character type conversion

- Remove date columns

- Ignore specified columns

AutoTransformationCreate() and AutoTransformationScore()

Function Description

AutoTransformationCreate() is a function for automatically identifying the optimal transformations for numeric features and transforming them once identified. This function will loop through your selected transformation options (YeoJohnson, BoxCox, Asinh, Log, LogPlus1, Sqrt, along with Asin and Logit for proportion data) and find the one that produces the best fit to a normal distribution. It then generates the transformation and collects the metadata information for use in the AutoTransformationScore() function, either by returning the objects or saving them to file.

AutoTransformationScore() is a the compliment function to AutoTransformationCreate(). Automatically apply or inverse the transformations you identified in AutoTransformationCreate() to other data sets. This is useful for applying transformations to your validation and test data sets for modeling, which is done automatically for you if you specify.

AutoHierarchicalFourier()

Function Description

AutoHierarchicalFourier() turns time series data into fourier series. This function can generate any number of fourier pairs the user wants (if they can actually build) and you can run it with grouped time series data. In the grouping case, fourier pairs can be created for each categorical variable along with the full interactions between specified categoricals. The process is parallelized as well to run as fast as possible.

Supervised Learning

Expand to view content

Regression

click to expand

The Auto_Regression() models handle a multitude of tasks. In order:Regression Description

AutoTransformationCreate() functionAutoDataPartition() function, if you didn't supply those directly to the functionAutoXGBoostRegression()) and save the factor levels for scoring in a way that guarentees consistency across training, validation, and test data sets, utilizing the DummifyDT() functionEvalPlot() functionParDepPlots() function

CatBoost Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 10000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoCatBoostRegression(

# GPU or CPU and the number of available GPUs

TrainOnFull = FALSE,

task_type = 'GPU',

NumGPUs = 1,

DebugMode = FALSE,

# Metadata args

OutputSelection = c('Importances', 'EvalPlots', 'EvalMetrics', 'Score_TrainData'),

ModelID = 'Test_Model_1',

model_path = normalizePath('./'),

metadata_path = normalizePath('./'),

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

ReturnModelObjects = TRUE,

# Data args

data = data,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = 'Adrian',

FeatureColNames = names(data)[!names(data) %in%

c('IDcol_1', 'IDcol_2','Adrian')],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c('IDcol_1','IDcol_2'),

TransformNumericColumns = 'Adrian',

Methods = c('BoxCox', 'Asinh', 'Asin', 'Log',

'LogPlus1', 'Sqrt', 'Logit'),

# Model evaluation

eval_metric = 'RMSE',

eval_metric_value = 1.5,

loss_function = 'RMSE',

loss_function_value = 1.5,

MetricPeriods = 10L,

NumOfParDepPlots = ncol(data)-1L-2L,

# Grid tuning args

PassInGrid = NULL,

GridTune = FALSE,

MaxModelsInGrid = 30L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 60*60,

BaselineComparison = 'default',

# ML args

langevin = FALSE,

diffusion_temperature = 10000,

Trees = 1000,

Depth = 9,

L2_Leaf_Reg = NULL,

RandomStrength = 1,

BorderCount = 128,

LearningRate = NULL,

RSM = 1,

BootStrapType = NULL,

GrowPolicy = 'SymmetricTree',

model_size_reg = 0.5,

feature_border_type = 'GreedyLogSum',

sampling_unit = 'Object',

subsample = NULL,

score_function = 'Cosine',

min_data_in_leaf = 1)

XGBoost Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoXGBoostRegression(

# GPU or CPU

TreeMethod = 'hist',

NThreads = parallel::detectCores(),

LossFunction = 'reg:squarederror',

# Metadata args

OutputSelection = c('Importances', 'EvalPlots', 'EvalMetrics', 'Score_TrainData'),

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "Test_Model_1",

EncodingMethod = "binary",

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

DebugMode = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c('IDcol_1','IDcol_2','Adrian')],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c('IDcol_1','IDcol_2'),

TransformNumericColumns = 'Adrian',

Methods = c('Asinh','Asin','Log','LogPlus1','Sqrt','Logit'),

# Model evaluation args

eval_metric = 'rmse',

NumOfParDepPlots = 3L,

# Grid tuning args

PassInGrid = NULL,

GridTune = FALSE,

grid_eval_metric = 'r2',

BaselineComparison = 'default',

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

Verbose = 1L,

# ML args

Trees = 50L,

eta = 0.05,

max_depth = 4L,

min_child_weight = 1.0,

subsample = 0.55,

colsample_bytree = 0.55)

LightGBM Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoLightGBMRegression(

# Metadata args

OutputSelection = c('Importances','EvalPlots','EvalMetrics','Score_TrainData'),

model_path = normalizePath('./'),

metadata_path = NULL,

ModelID = 'Test_Model_1',

NumOfParDepPlots = 3L,

EncodingMethod = 'credibility',

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

DebugMode = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = 'Adrian',

FeatureColNames = names(data)[!names(data) %in% c('IDcol_1', 'IDcol_2','Adrian')],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c('IDcol_1','IDcol_2'),

TransformNumericColumns = NULL,

Methods = c('Asinh','Asin','Log','LogPlus1','Sqrt','Logit'),

# Grid parameters

GridTune = FALSE,

grid_eval_metric = 'r2',

BaselineComparison = 'default',

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

PassInGrid = NULL,

# Core parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#core-parameters

input_model = NULL, # continue training a model that is stored to file

task = 'train',

device_type = 'CPU',

NThreads = parallel::detectCores() / 2,

objective = 'regression',

metric = 'rmse',

boosting = 'gbdt',

LinearTree = FALSE,

Trees = 50L,

eta = NULL,

num_leaves = 31,

deterministic = TRUE,

# Learning Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#learning-control-parameters

force_col_wise = FALSE,

force_row_wise = FALSE,

max_depth = NULL,

min_data_in_leaf = 20,

min_sum_hessian_in_leaf = 0.001,

bagging_freq = 0,

bagging_fraction = 1.0,

feature_fraction = 1.0,

feature_fraction_bynode = 1.0,

extra_trees = FALSE,

early_stopping_round = 10,

first_metric_only = TRUE,

max_delta_step = 0.0,

lambda_l1 = 0.0,

lambda_l2 = 0.0,

linear_lambda = 0.0,

min_gain_to_split = 0,

drop_rate_dart = 0.10,

max_drop_dart = 50,

skip_drop_dart = 0.50,

uniform_drop_dart = FALSE,

top_rate_goss = FALSE,

other_rate_goss = FALSE,

monotone_constraints = NULL,

monotone_constraints_method = 'advanced',

monotone_penalty = 0.0,

forcedsplits_filename = NULL, # use for AutoStack option; .json file

refit_decay_rate = 0.90,

path_smooth = 0.0,

# IO Dataset Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#io-parameters

max_bin = 255,

min_data_in_bin = 3,

data_random_seed = 1,

is_enable_sparse = TRUE,

enable_bundle = TRUE,

use_missing = TRUE,

zero_as_missing = FALSE,

two_round = FALSE,

# Convert Parameters

convert_model = NULL,

convert_model_language = 'cpp',

# Objective Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#objective-parameters

boost_from_average = TRUE,

alpha = 0.90,

fair_c = 1.0,

poisson_max_delta_step = 0.70,

tweedie_variance_power = 1.5,

lambdarank_truncation_level = 30,

# Metric Parameters (metric is in Core)

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#metric-parameters

is_provide_training_metric = TRUE,

eval_at = c(1,2,3,4,5),

# Network Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#network-parameters

num_machines = 1,

# GPU Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#gpu-parameters

gpu_platform_id = -1,

gpu_device_id = -1,

gpu_use_dp = TRUE,

num_gpu = 1)

H2O-GBM Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoH2oGBMRegression(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = normalizePath("./"),

metadata_path = file.path(normalizePath("./")),

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = 'Adrian',

FeatureColNames = names(data)[!names(data) %in% c('IDcol_1','IDcol_2','Adrian')],

WeightsColumn = NULL,

TransformNumericColumns = NULL,

Methods = c('Asinh','Asin','Log','LogPlus1','Sqrt','Logit'),

# ML grid tuning args

GridTune = FALSE,

GridStrategy = "Cartesian",

MaxRuntimeSecs = 60*60*24,

StoppingRounds = 10,

MaxModelsInGrid = 2,

# Model args

Trees = 50,

LearnRate = 0.10,

LearnRateAnnealing = 1,

eval_metric = "RMSE",

Alpha = NULL,

Distribution = "poisson",

MaxDepth = 20,

SampleRate = 0.632,

ColSampleRate = 1,

ColSampleRatePerTree = 1,

ColSampleRatePerTreeLevel = 1,

MinRows = 1,

NBins = 20,

NBinsCats = 1024,

NBinsTopLevel = 1024,

HistogramType = "AUTO",

CategoricalEncoding = "AUTO")

H2O-DRF Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoH2oDRFRegression(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1L, parallel::detectCores() - 2L),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation:

eval_metric = "RMSE",

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data Args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumn = NULL,

TransformNumericColumns = NULL,

Methods = c("Asinh", "Asin", "Log", "LogPlus1", "Sqrt", "Logit"),

# Grid Tuning Args

GridStrategy = "Cartesian",

GridTune = FALSE,

MaxModelsInGrid = 10,

MaxRuntimeSecs = 60*60*24,

StoppingRounds = 10,

# ML Args

Trees = 50,

MaxDepth = 20,

SampleRate = 0.632,

MTries = -1,

ColSampleRatePerTree = 1,

ColSampleRatePerTreeLevel = 1,

MinRows = 1,

NBins = 20,

NBinsCats = 1024,

NBinsTopLevel = 1024,

HistogramType = "AUTO",

CategoricalEncoding = "AUTO")

H2O-GLM Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoH2oGLMRegression(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation:

eval_metric = "RMSE",

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = NULL,

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments:

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

RandomColNumbers = NULL,

InteractionColNumbers = NULL,

WeightsColumn = NULL,

TransformNumericColumns = NULL,

Methods = c("Asinh", "Asin", "Log", "LogPlus1", "Sqrt", "Logit"),

# Model args

GridTune = FALSE,

GridStrategy = "Cartesian",

StoppingRounds = 10,

MaxRunTimeSecs = 3600 * 24 * 7,

MaxModelsInGrid = 10,

Distribution = "gaussian",

Link = "identity",

TweedieLinkPower = NULL,

TweedieVariancePower = NULL,

RandomDistribution = NULL,

RandomLink = NULL,

Solver = "AUTO",

Alpha = NULL,

Lambda = NULL,

LambdaSearch = FALSE,

NLambdas = -1,

Standardize = TRUE,

RemoveCollinearColumns = FALSE,

InterceptInclude = TRUE,

NonNegativeCoefficients = FALSE)

H2O-AutoML Example

# Create some dummy correlated data with numeric and categorical features

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoH2oMLRegression(

# Compute management

MaxMem = "32G",

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

IfSaveModel = "mojo",

# Model evaluation

eval_metric = "RMSE",

NumOfParDepPlots = 3,

# Metadata arguments

model_path = NULL,

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

# Data arguments

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

TransformNumericColumns = NULL,

Methods = c("Asinh", "Asin", "Log", "LogPlus1", "Logit"),

# Model args

GridTune = FALSE,

ExcludeAlgos = NULL,

Trees = 50,

MaxModelsInGrid = 10)

H2O-GAM Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Define GAM Columns to use - up to 9 are allowed

GamCols <- names(which(unlist(lapply(data, is.numeric))))

GamCols <- GamCols[!GamCols %in% c("Adrian","IDcol_1","IDcol_2")]

GamCols <- GamCols[1L:(min(9L,length(GamCols)))]

# Run function

TestModel <- RemixAutoML::AutoH2oGAMRegression(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation:

eval_metric = "RMSE",

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = NULL,

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments:

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

InteractionColNumbers = NULL,

WeightsColumn = NULL,

GamColNames = GamCols,

TransformNumericColumns = NULL,

Methods = c("Asinh", "Asin", "Log", "LogPlus1", "Sqrt", "Logit"),

# Model args

num_knots = NULL,

keep_gam_cols = TRUE,

GridTune = FALSE,

GridStrategy = "Cartesian",

StoppingRounds = 10,

MaxRunTimeSecs = 3600 * 24 * 7,

MaxModelsInGrid = 10,

Distribution = "gaussian",

Link = "Family_Default",

TweedieLinkPower = NULL,

TweedieVariancePower = NULL,

Solver = "AUTO",

Alpha = NULL,

Lambda = NULL,

LambdaSearch = FALSE,

NLambdas = -1,

Standardize = TRUE,

RemoveCollinearColumns = FALSE,

InterceptInclude = TRUE,

NonNegativeCoefficients = FALSE)

Binary Classification

click to expand

The Auto_Classifier() models handle a multitude of tasks. In order:Classification Description

CatBoost Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 10000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoCatBoostClassifier(

# GPU or CPU and the number of available GPUs

task_type = 'GPU',

NumGPUs = 1,

TrainOnFull = FALSE,

DebugMode = FALSE,

# Metadata args

OutputSelection = c('Score_TrainData', 'Importance', 'EvalPlots', 'Metrics', 'PDF'),

ModelID = 'Test_Model_1',

model_path = normalizePath('./'),

metadata_path = normalizePath('./'),

SaveModelObjects = FALSE,

ReturnModelObjects = TRUE,

SaveInfoToPDF = FALSE,

# Data args

data = data,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = 'Adrian',

FeatureColNames = names(data)[!names(data) %in%

c('IDcol_1','IDcol_2','Adrian')],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c('IDcol_1','IDcol_2'),

# Evaluation args

ClassWeights = c(1L,1L),

CostMatrixWeights = c(1,0,0,1),

EvalMetric = 'AUC',

grid_eval_metric = 'MCC',

LossFunction = 'Logloss',

MetricPeriods = 10L,

NumOfParDepPlots = ncol(data)-1L-2L,

# Grid tuning args

PassInGrid = NULL,

GridTune = FALSE,

MaxModelsInGrid = 30L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

BaselineComparison = 'default',

# ML args

Trees = 1000,

Depth = 9,

LearningRate = NULL,

L2_Leaf_Reg = NULL,

model_size_reg = 0.5,

langevin = FALSE,

diffusion_temperature = 10000,

RandomStrength = 1,

BorderCount = 128,

RSM = 1,

BootStrapType = 'Bayesian',

GrowPolicy = 'SymmetricTree',

feature_border_type = 'GreedyLogSum',

sampling_unit = 'Object',

subsample = NULL,

score_function = 'Cosine',

min_data_in_leaf = 1)

XGBoost Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoXGBoostClassifier(

# GPU or CPU

TreeMethod = "hist",

NThreads = parallel::detectCores(),

# Metadata args

OutputSelection = c("Importances", "EvalPlots", "EvalMetrics", "PDFs", "Score_TrainData"),

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "Test_Model_1",

EncodingMethod = "binary",

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in%

c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumnName = NULL,

IDcols = c("IDcol_1","IDcol_2"),

# Model evaluation

LossFunction = 'reg:logistic',

CostMatrixWeights = c(1,0,0,1),

eval_metric = "auc",

grid_eval_metric = "MCC",

NumOfParDepPlots = 3L,

# Grid tuning args

PassInGrid = NULL,

GridTune = FALSE,

BaselineComparison = "default",

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

Verbose = 1L,

# ML args

Trees = 500L,

eta = 0.30,

max_depth = 9L,

min_child_weight = 1.0,

subsample = 1,

colsample_bytree = 1,

DebugMode = FALSE)

LightGBM Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoLightGBMClassifier(

# Metadata args

OutputSelection = c("Importances","EvalPlots","EvalMetrics","Score_TrainData"),

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "Test_Model_1",

NumOfParDepPlots = 3L,

EncodingMethod = "credibility",

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

DebugMode = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c("IDcol_1","IDcol_2"),

# Grid parameters

GridTune = FALSE,

grid_eval_metric = 'Utility',

BaselineComparison = 'default',

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

PassInGrid = NULL,

# Core parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#core-parameters

input_model = NULL, # continue training a model that is stored to file

task = "train",

device_type = 'CPU',

NThreads = parallel::detectCores() / 2,

objective = 'binary',

metric = 'binary_logloss',

boosting = 'gbdt',

LinearTree = FALSE,

Trees = 50L,

eta = NULL,

num_leaves = 31,

deterministic = TRUE,

# Learning Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#learning-control-parameters

force_col_wise = FALSE,

force_row_wise = FALSE,

max_depth = NULL,

min_data_in_leaf = 20,

min_sum_hessian_in_leaf = 0.001,

bagging_freq = 0,

bagging_fraction = 1.0,

feature_fraction = 1.0,

feature_fraction_bynode = 1.0,

extra_trees = FALSE,

early_stopping_round = 10,

first_metric_only = TRUE,

max_delta_step = 0.0,

lambda_l1 = 0.0,

lambda_l2 = 0.0,

linear_lambda = 0.0,

min_gain_to_split = 0,

drop_rate_dart = 0.10,

max_drop_dart = 50,

skip_drop_dart = 0.50,

uniform_drop_dart = FALSE,

top_rate_goss = FALSE,

other_rate_goss = FALSE,

monotone_constraints = NULL,

monotone_constraints_method = "advanced",

monotone_penalty = 0.0,

forcedsplits_filename = NULL, # use for AutoStack option; .json file

refit_decay_rate = 0.90,

path_smooth = 0.0,

# IO Dataset Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#io-parameters

max_bin = 255,

min_data_in_bin = 3,

data_random_seed = 1,

is_enable_sparse = TRUE,

enable_bundle = TRUE,

use_missing = TRUE,

zero_as_missing = FALSE,

two_round = FALSE,

# Convert Parameters

convert_model = NULL,

convert_model_language = "cpp",

# Objective Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#objective-parameters

boost_from_average = TRUE,

is_unbalance = FALSE,

scale_pos_weight = 1.0,

# Metric Parameters (metric is in Core)

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#metric-parameters

is_provide_training_metric = TRUE,

eval_at = c(1,2,3,4,5),

# Network Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#network-parameters

num_machines = 1,

# GPU Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#gpu-parameters

gpu_platform_id = -1,

gpu_device_id = -1,

gpu_use_dp = TRUE,

num_gpu = 1)

H2O-GBM Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

TestModel <- RemixAutoML::AutoH2oGBMClassifier(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = normalizePath("./"),

metadata_path = file.path(normalizePath("./")),

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumn = NULL,

# ML grid tuning args

GridTune = FALSE,

GridStrategy = "Cartesian",

MaxRuntimeSecs = 60*60*24,

StoppingRounds = 10,

MaxModelsInGrid = 2,

# Model args

Trees = 50,

LearnRate = 0.10,

LearnRateAnnealing = 1,

eval_metric = "auc",

Distribution = "bernoulli",

MaxDepth = 20,

SampleRate = 0.632,

ColSampleRate = 1,

ColSampleRatePerTree = 1,

ColSampleRatePerTreeLevel = 1,

MinRows = 1,

NBins = 20,

NBinsCats = 1024,

NBinsTopLevel = 1024,

HistogramType = "AUTO",

CategoricalEncoding = "AUTO")

H2O-DRF Example

# Create some dummy correlated data

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

TestModel <- RemixAutoML::AutoH2oDRFClassifier(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1L, parallel::detectCores() - 2L),

IfSaveModel = "mojo",

H2OShutdown = FALSE,

H2OStartUp = TRUE,

# Metadata arguments:

eval_metric = "auc",

NumOfParDepPlots = 3L,

# Data arguments:

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Model evaluation:

data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2", "Adrian")],

WeightsColumn = NULL,

# Grid Tuning Args

GridStrategy = "Cartesian",

GridTune = FALSE,

MaxModelsInGrid = 10,

MaxRuntimeSecs = 60*60*24,

StoppingRounds = 10,

# Model args

Trees = 50L,

MaxDepth = 20,

SampleRate = 0.632,

MTries = -1,

ColSampleRatePerTree = 1,

ColSampleRatePerTreeLevel = 1,

MinRows = 1,

NBins = 20,

NBinsCats = 1024,

NBinsTopLevel = 1024,

HistogramType = "AUTO",

CategoricalEncoding = "AUTO")

H2O-GLM Example

# Create some dummy correlated data with numeric and categorical features

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

# Run function

TestModel <- RemixAutoML::AutoH2oGLMClassifier(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation args

eval_metric = "auc",

NumOfParDepPlots = 3,

# Metadata args

model_path = NULL,

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in%

c("IDcol_1", "IDcol_2","Adrian")],

RandomColNumbers = NULL,

InteractionColNumbers = NULL,

WeightsColumn = NULL,

# ML args

GridTune = FALSE,

GridStrategy = "Cartesian",

StoppingRounds = 10,

MaxRunTimeSecs = 3600 * 24 * 7,

MaxModelsInGrid = 10,

Distribution = "binomial",

Link = "logit",

RandomDistribution = NULL,

RandomLink = NULL,

Solver = "AUTO",

Alpha = NULL,

Lambda = NULL,

LambdaSearch = FALSE,

NLambdas = -1,

Standardize = TRUE,

RemoveCollinearColumns = FALSE,

InterceptInclude = TRUE,

NonNegativeCoefficients = FALSE)

H2O-AutoML Example

# Create some dummy correlated data with numeric and categorical features

data <- RemixAutoML::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = TRUE,