AdGuardHome


Don't forward multiple identical requests to upstream server, but serve all except the first request from cache


Prerequisites


- [x] I am running the latest version

- [x] I checked the documentation and found no answer

- [x] I checked to make sure that this issue has not already been filed

Problem Description

When a webpage is opened, multiple DNS requests are made at the same time.

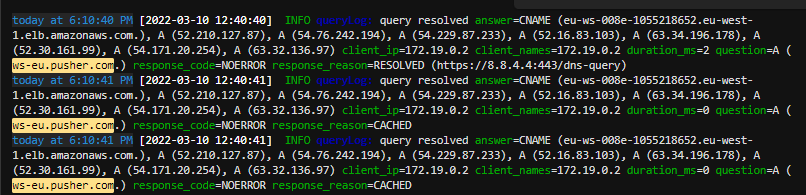

This is an excerpt of the log:

As you can see, multiple requests were made at 01:56:11. According to the log, 3 requests were forwarded to the upstream DNS servers because none was served from the cache. The request at 2:01:09 was answered from cache.

Proposed Solution

AdGuard Home tracks that it is already running a request for "administrator.de", waits until the answer arrives, and then serves the two other requests from cache instead of forwarding them.

Alternatives Considered

This would reduce upstream traffic considerably; in this case, to one third. That is amazing and would make AdGuard Home the best DNS resolver for home networks.

Additional Information

A possible implementation (you will probably find a better one): when a request is made, it is stored in memory, e.g. in "pendingRequests". When another request comes in, it is first checked whether there is a matching pending request. If so, the thread blocks until the pending request has finished. When the pending request is done, the name is resolved again in the normal way. Since the entry is now in the cache, it is served from the cache.
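A minimal sketch of the "pendingRequests" idea, written with Python's asyncio rather than AdGuard Home's actual Go code. The class name `CoalescingResolver`, the `upstream` callable, and the plain-dict cache without TTL handling are illustrative assumptions. Duplicates block on an `asyncio.Event` and, once woken, go through the normal lookup path again, which now hits the cache:

```python
import asyncio


class CoalescingResolver:
    """Hypothetical sketch: only the first request for a key goes upstream;
    concurrent duplicates wait for it, then resolve again via the cache."""

    def __init__(self, upstream):
        self.upstream = upstream   # assumed async callable: key -> answer
        self.cache = {}            # key -> answer (no TTL handling in this sketch)
        self.pending = {}          # key -> asyncio.Event for an in-flight lookup
        self.upstream_calls = 0

    async def resolve(self, key):
        while True:
            if key in self.cache:          # normal path: cache hit
                return self.cache[key]
            event = self.pending.get(key)
            if event is None:
                break                      # nothing in flight: this request goes upstream
            await event.wait()             # block until the pending request is done
            # loop again: resolve "the normal way", normally a cache hit now
        event = asyncio.Event()
        self.pending[key] = event
        try:
            self.upstream_calls += 1
            answer = await self.upstream(key)
            self.cache[key] = answer
            return answer
        finally:
            del self.pending[key]
            event.set()                    # wake all waiting duplicates


async def demo():
    async def fake_upstream(key):
        await asyncio.sleep(0.03)          # pretend the upstream needs ~30 ms
        name, qtype = key
        return f"{qtype} record for {name}"

    resolver = CoalescingResolver(fake_upstream)
    key = ("administrator.de", "A")
    answers = await asyncio.gather(*(resolver.resolve(key) for _ in range(3)))
    return answers, resolver.upstream_calls


answers, calls = asyncio.run(demo())
print(calls)  # 1
```

Three simultaneous lookups of `administrator.de` produce a single upstream call. If the upstream lookup fails, the woken waiters find neither a cache entry nor a pending lookup, so the next one simply retries upstream.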

My understanding of your suggestion is: check if the exact same request is already being processed, if so wait for the result and return it to all requesters at the same time, right?

I think this causes several problems. On the one hand, it is rare to have multiple identical requests at the same time; at least, they are a very small percentage of all requests. Most requests are processed quickly, so doing this check for every request would add unnecessary delay to all of them, since very few requests are actually identical and simultaneous. On the other hand, the performance overhead of the check itself also needs to be considered, because it applies to every request.

Therefore, the negative effects of this check far outweigh the possible benefits. I think this is inappropriate.

> My understanding of your suggestion is: check if the exact same request is already being processed, if so wait for the result and return it to all requesters at the same time, right?

Exactly.

> On the one hand, it is rare to have multiple identical requests at the same time, at least it's a very small percentage of all requests.

For me, about 2.3% of the requests could be fulfilled from cache with the new implementation. Note that I have set the cache size to 20 MB and the cache is already quite full. With an empty cache, e.g. right after restarting AdGuard Home, the rate would be around 4%. This was measured from only one log file, and since I have quite a lot of automated queries that never produce identical requests, the values for typical users would be a bit higher. Please try it with your own real-world data, using default settings where users are only browsing and no automated requests are made.

> And in most cases the request will be processed in a short time, doing this check for all requests would cause unnecessary delays to all requests, since very few requests are actually identical and simultaneous.

The question is how much time this check would really need. If a new request is made and can't be fulfilled from the cache, I would check whether it is already in "pendingQueries" and put it into a dict/list "pendingQueries". If it was not already in "pendingQueries", the lookup to the upstream server is done. Every result that comes back from the upstream server is matched against "pendingQueries", and all matching requests are answered. This algorithm sounds pretty simple and should be pretty fast. I'm using free DoT servers like https://blog.uncensoreddns.org/ and some others. The more results AdGuard Home serves from cache, the fewer requests are forwarded to these servers and the less they cost to run. That is why I had the idea to implement this caching algorithm.

So I'm not sure that implementing this algorithm would have that many negative effects.
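The "pendingQueries" variant can be sketched in the same way. This is again an illustrative asyncio sketch with hypothetical names (`PendingQueries`, `upstream`), not AdGuard Home code: duplicates are parked as futures in a dict keyed by the query, and the single upstream result is fanned out to all of them. The extra work per request is one dictionary lookup:

```python
import asyncio


class PendingQueries:
    """Hypothetical sketch: duplicate queries wait in a "pendingQueries" dict
    and the single upstream answer is handed to all of them."""

    def __init__(self, upstream):
        self.upstream = upstream   # assumed async callable: key -> answer
        self.pending = {}          # key -> list of futures waiting for that key
        self.upstream_calls = 0

    async def query(self, key):
        fut = asyncio.get_running_loop().create_future()
        waiters = self.pending.get(key)    # the extra per-request cost: one dict lookup
        if waiters is not None:
            waiters.append(fut)            # already in flight: just wait for the result
            return await fut
        self.pending[key] = [fut]          # first query for this key: go upstream
        self.upstream_calls += 1
        # Note: cancellation of this first query is not handled in this sketch.
        try:
            answer = await self.upstream(key)
        except Exception as exc:
            for waiter in self.pending.pop(key):
                waiter.set_exception(exc)  # propagate the failure to every waiter
        else:
            for waiter in self.pending.pop(key):
                waiter.set_result(answer)  # match the result against pending queries
        return await fut


async def demo():
    async def fake_upstream(key):
        await asyncio.sleep(0.03)          # pretend the upstream needs ~30 ms
        return f"answer for {key}"

    pq = PendingQueries(fake_upstream)
    answers = await asyncio.gather(*(pq.query("administrator.de A") for _ in range(3)))
    return answers, pq.upstream_calls


answers, calls = asyncio.run(demo())
print(calls)  # 1
```

Here waiters receive the upstream answer directly rather than re-reading a cache, so the approach works even without a cache in between.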

Attached is a Python script you can use to analyse the JSON query log files and get your own stats. It also prints the cache hit ratio.

```python
#### Config section #####
filename = "querylog.json.1"
identicalQueriesTime = 50  # ms; queries within this time range are considered identical
allidenticalQueries = False  # set this to True to also count cached results as identical; this shows how many queries are identical overall
printQueries = False  # print all identical queries to examine them further
##### Config section end ####
```

The configuration section should be fairly self-explanatory.
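The attached script is not reproduced in this thread, so the function below is only a hypothetical sketch of the kind of counting the config options describe, not the author's script. It assumes the log entries have already been parsed into dicts with the made-up keys `t_ms`, `client`, `name`, `qtype`, and `cached`; mapping them from the real `querylog.json` fields is left out. A query counts as identical if an identical query (same client, name, and type) was seen within `window_ms` before it:

```python
def count_identical(entries, window_ms=50, include_cached=False):
    """Hypothetical analysis sketch (not the attached script).

    entries: dicts with the assumed keys t_ms, client, name, qtype, cached.
    window_ms plays the role of identicalQueriesTime and include_cached the
    role of allidenticalQueries. Returns (identical, total, cache_hits).
    """
    last_seen = {}  # (client, name, qtype) -> time of the previous counted query
    identical = total = cache_hits = 0
    for e in sorted(entries, key=lambda x: x["t_ms"]):
        total += 1
        if e["cached"]:
            cache_hits += 1
            if not include_cached:
                continue  # by default only uncached queries are considered
        key = (e["client"], e["name"].lower(), e["qtype"])
        prev = last_seen.get(key)
        if prev is not None and e["t_ms"] - prev <= window_ms:
            identical += 1  # an identical query was seen within the window
        last_seen[key] = e["t_ms"]
    return identical, total, cache_hits


def entry(t_ms, client, cached):
    return {"t_ms": t_ms, "client": client, "name": "administrator.de",
            "qtype": "A", "cached": cached}


log = [entry(0, "192.168.1.10", False), entry(5, "192.168.1.10", False),
       entry(8, "192.168.1.10", False), entry(200, "192.168.1.10", True),
       entry(210, "192.168.1.10", True), entry(300, "192.168.1.11", False)]

identical, total, hits = count_identical(log)
print(identical, total, hits)                     # 2 6 2
print(f"cache hit ratio: {hits / total:.1%}")
print(count_identical(log, include_cached=True))  # (3, 6, 2)
```

With the defaults, the two uncached duplicates at 5 ms and 8 ms are counted; with `include_cached=True`, the cached repeat at 210 ms is counted as well.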

The second query is made before the first result can be cached; that's probably the reason.

Do you have an easier solution than my proposed algorithm?

I do not. Personally, it's a non-issue to me. Perhaps the devs are able to fix this.

FWIW, I recall seeing the same behavior with a versatile resolver like Unbound.

> For me it is about 2.3 % of the requests that could be fulfilled with cache with new implementation. You should know, that I have set cachesize to 20 MB and cache is already quite filled. With an empty cache like when restarting AdGuard rate would be around 4 %.

Actually, that's still a really, really small percentage, and I think you may be misunderstanding something. Let me explain more clearly: these requests must be uncached and, at the same time, of the same type and exactly equal. Is it really worth affecting all other requests for so few requests?

Now that we've talked about the extra time and resource overhead that such a check might take, let's look at what other bad effects it might have. Even when the check hits, could it cause unnecessary blocking? And what about EDNS Client Subnet? It is perfectly reasonable and common for exactly the same request to get completely different results for different requesters; that's how EDNS, CDNs, load balancers, and geo-based DNS work. How are you going to handle these requests?

Now consider what happens if we don't check and simply forward these few requests to the upstream. Everything stays fine and simple. These few identical requests will not have any real impact on the upstream or abuse it, whereas such checks might affect all downstream clients.

I have a lot of reasons not to do this, but I think these are enough. If any developer is willing to implement this feature in AdGuard Home, I hope it will not become the default behavior.

As far as I understood agneevX, this behaviour is already implemented in blocky and seems to work well. Perhaps it would be easy to port this feature, since blocky is also written in Go.

> Let me explain more clearly, these requests must be uncached and, at the same time, of the same type, are exactly equal.

Can you explain why these requests must be uncached? The requests originate from the same system. Mainly Windows / Firefox shows this behaviour of sending the same request multiple times.

> Is it really worth it to affect all other requests for so few requests?

How would the other requests be affected? I'm quite sure the additional algorithm won't add any significant latency.

> It is perfectly reasonable and common for exactly the same request to have completely different results for different requesters, that's how EDNS and CDN and load balancer and geo based DNS works. How are you going to handle these requests?

In the example above, the requester was always the same, so I think we had a misunderstanding. I didn't look for examples where different systems requested the same name resolution at the same time, and I think such cases are really rare. If a different system requests name resolution, the cache is used if it is already populated. This is the expected behaviour.
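One conservative way to address the EDNS Client Subnet concern is to merge only queries whose coalescing key matches exactly, including the requesting client and any ECS subnet. The sketch below is hypothetical (`CoalesceKey` and `make_key` are illustrative names, not AdGuard Home APIs); it normalises the query name because DNS names compare case-insensitively:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CoalesceKey:
    """Hypothetical key: two in-flight queries share one upstream answer
    only if their keys are equal."""
    client: str                        # requesting client (the same-client case discussed here)
    qname: str                         # lower case, without trailing dot
    qtype: str
    qclass: str = "IN"
    ecs_subnet: Optional[str] = None   # EDNS Client Subnet carried by the query, if any


def make_key(client, qname, qtype, qclass="IN", ecs_subnet=None):
    # DNS names compare case-insensitively, so normalise before comparing.
    return CoalesceKey(client, qname.lower().rstrip("."), qtype.upper(),
                       qclass.upper(), ecs_subnet)


a = make_key("192.168.1.10", "Administrator.de.", "a")
b = make_key("192.168.1.10", "administrator.de", "A")
c = make_key("192.168.1.11", "administrator.de", "A")
d = make_key("192.168.1.10", "administrator.de", "A", ecs_subnet="203.0.113.0/24")
print(a == b, a == c, a == d)  # True False False
```

Because the dataclass is frozen, the key is hashable and can index a pending-queries dict directly. Dropping `client` from the key would also merge identical queries from different clients while still keeping different ECS subnets apart.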

> As far as I understood agneevX this behaviour is already implemented in blocky and seems to work well. Perhaps it is very easy to copy this feature since blocky is also written in Go.

Glad to know that, thanks for the information. I'm going to try it.

> Can you explain why these requests must be uncached?

About this: if the request already exists in the cache, then it should be served directly from the cache, right? So the situation we are discussing is narrowed down.

> The additional algorithm won't add any significant latency, I'm quite sure.

I can't be sure of this, since exact data is not available right now, but I'm sure that any delay greater than 10 ms would have a huge impact.

> In the example above the requester was always the same, so I think we had some misunderstanding.

Yes, I think so. Obviously the situation we are discussing is a little different. I'm a little confused about why the same client would initiate the same request at the same time. Sure, this behavior does need to be handled somehow.

And again, thanks for your information. Hope you don't get me wrong :)

> About this, if the request already exists in the cache, then it should be served directly from the cache, right?

Yes, right, and that's why I had the idea: when several requests for the same domain are made, AdGuard Home forwards the first request and then answers the other requests that arrived more or less simultaneously from the cache built after the first request completed.

> About this, if the request already exists in the cache, then it should be served directly from the cache, right?

Yes, and AdGuard Home already does that: if the entry exists in the cache, it answers from the cache.

> I can't be sure of this given that exact data is not available right now, but I'm sure that any delay greater than 10ms gonna be a huge impact.

Suppose the first request is made at 0 ms and a second request for the same domain from the same client is made at 5 ms. The upstream server takes 30 ms to answer a request (including the TCP three-way handshake and so on). If forwarded separately, the second request would be answered at 35 ms. Waiting for the first request to complete and then answering the second request from cache would actually be faster: it would be answered at about 30 ms.
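The timing argument above can be checked with a toy model. The function name `answer_times` is illustrative, and the model assumes that every upstream lookup takes a fixed `upstream_rtt_ms` greater than zero, that the arrivals are sorted and all for the same name and type from the same client, and that cache hits and the pending check take no time:

```python
def answer_times(arrivals_ms, upstream_rtt_ms, coalesce):
    """Toy model: when is each of a series of identical requests answered?"""
    first_done = arrivals_ms[0] + upstream_rtt_ms  # the first lookup fills the cache here
    times = []
    for t in arrivals_ms:
        if t >= first_done:
            times.append(t)                    # cache already populated: answered at once
        elif coalesce:
            times.append(first_done)           # wait for the in-flight lookup, then cache hit
        else:
            times.append(t + upstream_rtt_ms)  # forwarded upstream again
    return times


print(answer_times([0, 5], 30, coalesce=False))  # [30, 35]
print(answer_times([0, 5], 30, coalesce=True))   # [30, 30]
```

In this model, a coalesced duplicate is never answered later than it would be if forwarded, and it saves one upstream query.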

> I'm a little confused why the same request is initiated by the same client at the same time? Sure, this behavior does need to be handled some way.

Thanks, now we're talking about the same issue. All identical requests counted in this post https://github.com/AdguardTeam/AdGuardHome/issues/4364#issuecomment-1062387101 came from the same client. I also think that this should be handled somehow, so we finally agree.

> And again, thanks for your information. Hope you don't get me wrong :)

Everything is fine. I also didn't expect the same client to start the same DNS resolution request multiple times simultaneously. So I understand that you thought these were requests from different clients that happened to coincide.

> I also didn't expect that the same client starts the same DNS resolution request multiple times simultaneously.

This. I've never understood this behavior. At first I thought clients were sending the same query to all DNS servers received via DHCP. Then I changed the config so that DHCP returned a single server, yet clients still tend to send duplicate queries of the same type.

This is definitely a bug in AGH, because the first query here took 11 ms, which is impossible since the DNS server is ~40 ms away. The second query took a more sensible 47 ms.

If I look at the logs from the upstream, I see only one query:

So yes, there is an extra query recorded. CC @ainar-g

I've split this into a separate bug report, because it's bigger than this issue: #4386.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

Still an issue.