stable-diffusion-webui

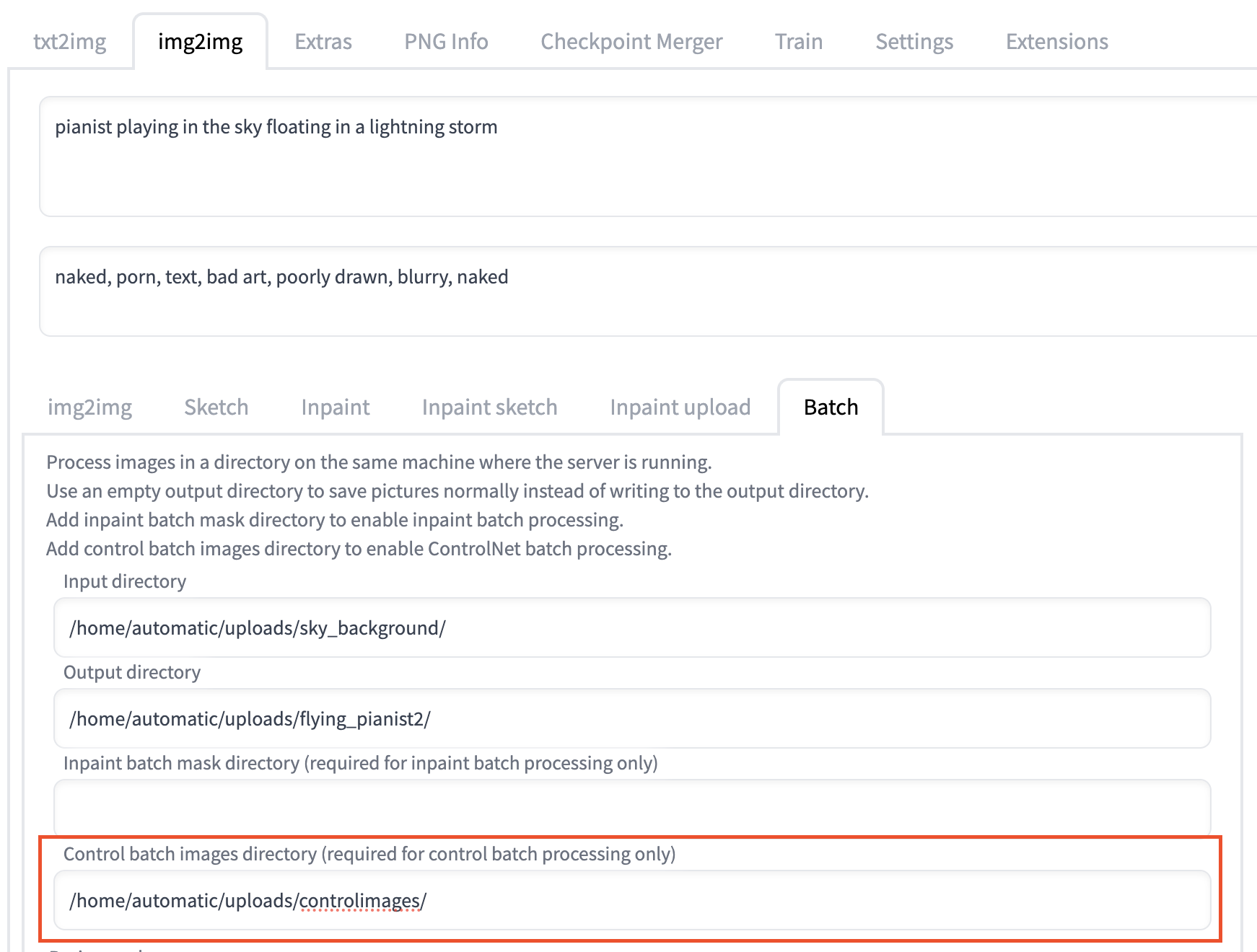

Enable ControlNet batch support

Describe what this pull request is trying to achieve.

Enable ControlNet extension batch support for control images.

Additional notes and description of your changes

Currently there's only batch support for inpainting masks. I simply adapted the following inpainting batch support pull request (https://github.com/AUTOMATIC1111/stable-diffusion-webui/pull/7295/files), but for ControlNet control images instead.

This enables using 1 control image per 1 img2img input image, which helps to create videos combining different techniques.
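As a rough illustration of the "1 control image per 1 input image" idea, here is a minimal sketch of how the two folders could be paired. It assumes a control image is matched to an input image by file name (ignoring the extension). The function name `pair_control_images` and the suffix list are hypothetical and are not taken from this PR's code.

```python
from pathlib import Path

# Assumption: these are the image types we care about.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def pair_control_images(input_dir, control_dir):
    """Return (input_path, control_path) pairs matched by file stem.

    Inputs without a matching control image are paired with None.
    """
    controls = {
        p.stem: p
        for p in sorted(Path(control_dir).iterdir())
        if p.suffix.lower() in IMAGE_SUFFIXES
    }
    inputs = sorted(
        p for p in Path(input_dir).iterdir()
        if p.suffix.lower() in IMAGE_SUFFIXES
    )
    return [(p, controls.get(p.stem)) for p in inputs]
```

Matching by name rather than by position keeps the pairing stable if one folder is missing a frame, at the cost of requiring consistent file names.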

This is to add support to the ControlNet extension Mikubill/sd-webui-controlnet

This change is only usable once the following pull request is approved in the extension repo: https://github.com/Mikubill/sd-webui-controlnet/pull/383

UPDATE: extension pull request has been approved and merged.

Environment this was tested in

- OS: Linux

- Browser: Chrome

- Graphics card: NVIDIA RTX 3090 24GB

- Extension used: https://github.com/Mikubill/sd-webui-controlnet

Screenshots or videos of your changes

Let's go. This is a crucial feature for unlocking ControlNet's potential.

Btw, the ControlNet extension can still support multiple layers of control.

For multiple layers of batch control, if the auto1111 PR is approved, we could modify the extension to read subfolders with a specific naming scheme (e.g. layer0, layer1, etc.) if they are found inside the main folder. That way the user would not need a separate folder option per layer, only a single folder with a predefined structure, e.g.:

AUTO1111 folder config input dir:

/home/diffusing/controlimages

and inside that, we could have:

- /home/diffusing/controlimages/layer00/img001.png
- /home/diffusing/controlimages/layer00/img002.png
- /home/diffusing/controlimages/layer00/imgxxx.png
- /home/diffusing/controlimages/layer01/img001.png
- /home/diffusing/controlimages/layer01/img002.png
- /home/diffusing/controlimages/layer01/imgxxx.png
- /home/diffusing/controlimages/layer02/img001.png
- /home/diffusing/controlimages/layer02/img002.png
- /home/diffusing/controlimages/layer02/imgxxx.png

for 3 layers. If no subfolder is found, then use the same folder for all layers.
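A minimal sketch of the folder lookup described above, assuming subfolders are named `layer` followed by digits. The function name `find_layer_dirs` is hypothetical. Falling back to the main folder for a single missing layer is an extra assumption; the proposal only covers the case where no subfolder is found at all.

```python
import re
from pathlib import Path

# Assumption: layer folders look like "layer0", "layer00", "layer01", ...
LAYER_DIR = re.compile(r"^layer(\d+)$")


def find_layer_dirs(root, num_layers):
    """Return one control-image folder per ControlNet layer."""
    root = Path(root)
    found = {}
    for child in root.iterdir():
        match = LAYER_DIR.match(child.name)
        if child.is_dir() and match:
            found[int(match.group(1))] = child
    if not found:
        # No layer subfolders: use the same folder for all layers.
        return [root] * num_layers
    # Assumption: a layer without its own subfolder falls back to the root.
    return [found.get(i, root) for i in range(num_layers)]
```

With the example structure above, `find_layer_dirs("/home/diffusing/controlimages", 3)` would return the `layer00`, `layer01` and `layer02` folders in that order.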

I could work on this commit as soon as this PR is approved.

Added to what I asked you in the other thread, this may be relevant to this PR. Can you add a box for masks, just like the one Img2Img already has? That would be incredibly useful, even without considering the possibility of frame scheduling.

Hello A T A R A, someone had the same issue, but I wasn't able to replicate it, and neither was he on a second attempt. You can see his comment here: https://www.reddit.com/r/StableDiffusion/comments/11c2ya1/i_just_added_controlnet_batch_support_in/ja21ck5/?utm_source=share&utm_medium=ios_app&utm_name=iossmf&context=3

If you're able to replicate it, please let me know the steps so I have something to work with. I'll try again too with a fresh install, just in case.

On Sat, 4 Mar 2023 at 01:16 A T A R A @.***> wrote:

Heya, I switched to your branch and tried to test it. I get an error:

Traceback (most recent call last):
  File "D:\BACKUP\WORKSPACE\stable-diffusion-webui\modules\call_queue.py", line 56, in f
    res = list(func(*args, **kwargs))
  File "D:\BACKUP\WORKSPACE\stable-diffusion-webui\modules\call_queue.py", line 37, in f
    res = func(*args, **kwargs)
  File "D:\BACKUP\WORKSPACE\stable-diffusion-webui\modules\img2img.py", line 181, in img2img
    process_batch(p, img2img_batch_input_dir, img2img_batch_output_dir, img2img_batch_inpaint_mask_dir, img2img_batch_control_input_dir, args)
  File "D:\BACKUP\WORKSPACE\stable-diffusion-webui\modules\img2img.py", line 92, in process_batch
    if processed_image.mode == 'RGBA':
AttributeError: 'numpy.ndarray' object has no attribute 'mode'

I set the ControlNet dir properly. Not sure what I am missing.

Added to what I asked you in the other thread, this may be relevant to this PR. Can you add a box for masks, just like the one Img2Img already has? That would be incredibly useful, even without considering the possibility of frame scheduling.

I'm not sure what you mean by this. Still waiting for approval of the other repository before modifying anything else. I'll go step by step and see how people use it before making modifications.

Of course. One step at a time. I'm just trying to contribute ideas. I mean that each layer could accept its own folder of masks, just as Img2Img does. That would need an extra option for the path to the masks, or the mask could be enabled automatically if, for example, a subfolder called masks is found in the directory.

with the new changes allowing easier controlnet control by external scripts, this is much easier to do... Should be redone to use the new controlnet argument changing method in external_code.py

Interesting. I'm about to make an extension to my deflicker, and adding controlnet could be very helpful. Unfortunately all my programming depends on ChatGPT, so I don't know how to do it.

with the new changes allowing easier controlnet control by external scripts, this is much easier to do... Should be redone to use the new controlnet argument changing method in external_code.py

Hello, can you provide me a link to these new changes so I can see them? I have time on weekends to look at this kind of stuff. If it's easy and it doesn't depend on the auto1111 webui approving anything, I can try your suggestion.

This should be possible to do within an extension. Use on_before_component/on_after_component to place the UI element. If something needs to be added to the repo to support this functionality, that can be done - a callback, or something, but not editing the code in a way that explicitly mentions the extension.

Ok. Thanks for the feedback, will try your suggestions.

with the new changes allowing easier controlnet control by external scripts, this is much easier to do... Should be redone to use the new controlnet argument changing method in external_code.py

Hello, can you provide me some link to these new changes so I can see them?

I'll hopefully be posting an early beta of a working extension that implements batch loading of multiple Controlnet layers. I'll send you a link once it's posted.

Cool, just saw this on_before/after_component. The ball is in your court now.

Um, I'm not using that. If you want to go that route, good luck. I'm using the external_code method to control CN.

While your fork is the only working solution for batch processing with ControlNet, may I request a small (I hope) but crucial bug fix that would make it fully usable in production?

For some reason Automatic1111 uses the same seed for every image in the batch. When a sequence is processed with the same seed, it causes unwanted visual patterns like this: https://imgbox.com/LCUoww4s These artifacts make the sequence unacceptable for use in video production. If you could fix this issue, it would make your fork a great working solution until the feature is implemented in the main branch.

Thank you!

Hi sssttttas, I haven't worked on this to avoid duplicating efforts, but I will take a look when I have time.

Can you give me a detailed step by step reproduction of your workflow to help me understand better?

- Prepare a PNG sequence. Even 2 images will be enough to see whether the seed increments. They can even be plain black squares, because you will check the seed in the metadata.

- Go to the image2image tab, then to the Batch sub-tab.

- Put the path to your sequence folder in the "Input directory".

- Leave the "Output directory" empty. Generated images will then be saved to your default output directory. If you specify a custom folder you will encounter another bug: the images will not have metadata, so you will not be able to see which seeds they used.

- Sampler, prompt, CFG, etc. are not important.

- Make sure the seed is set to -1.

- Run "Generate".

- Go to your default output folder.

- Drag and drop the first generated image to the PNG info tab.

- Remember the seed.

- Drag and drop another image to the PNG info tab.

- Compare seeds. They will be the same.

Desired behavior: incremented seed for each image in the batch.

If you are able to fix it, it would be great if you made a separate PR for this issue.
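To make the desired behavior concrete, here is a minimal sketch of per-image seed assignment. It assumes that a seed of -1 means "pick a random seed", as in the steps above, and that the random base seed is drawn once and then incremented. The function name `seeds_for_batch` and the 32-bit range are hypothetical choices for illustration, not the webui's actual code.

```python
import random


def seeds_for_batch(seed, count):
    """Return one seed per image: seed, seed + 1, seed + 2, ..."""
    if seed == -1:
        # Assumption: draw a single random base seed, then increment from it.
        seed = random.randrange(2**32)
    return [seed + i for i in range(count)]


print(seeds_for_batch(100, 3))  # [100, 101, 102]
```

Incrementing from one base seed keeps a run reproducible from a single number while still giving every frame its own initial noise.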

Hi sssttttas, I replicated your steps and I'm not sure why incrementing the seed would avoid the issue you mentioned.

Reading your phrase (When a sequence is processed with the same seed it causes unwanted visual patterns), it doesn't make sense to me why using a different seed would avoid that.

In fact, if you use a different seed, the variation would be even bigger between 2 sequenced images.

A seed is basically the initial "noise" the model will use to start the processing based on your prompt. If you go to txt2img, without any prompt or change at all and use 1 sampling step, you will see this initial "seed" image.
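A toy illustration of that point, using Python's standard `random` module as a stand-in for the model's noise generator (an assumption; the webui generates its noise differently): the same seed always yields the same noise values, and a different seed yields different ones. The function name `initial_noise` is hypothetical.

```python
import random


def initial_noise(seed, size=8):
    """Return a small list of Gaussian noise values determined by the seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


frame_a = initial_noise(1234)
frame_b = initial_noise(1234)  # same seed as frame_a
frame_c = initial_noise(1235)  # different seed

print(frame_a == frame_b)  # True: identical starting noise
print(frame_a == frame_c)
```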

It makes sense for batch processing to use the same seed for all images inside the folder if you ask me.

Now, if you want to have different seeds per image, that also can be considered a new "feature", but I don't agree that this should be the default behaviour.

Regarding the output directory and metadata bug, you're correct. That's happening here too. My guess is that not many people use or view the image metadata, but it's quite useful in case you don't remember the prompts or configuration used for a specific image. It's reported here too: https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/8046

tl;dr An incremental seed won't fix the unwanted visual patterns (unless there's something I didn't understand in your request), and using the same seed as the default behaviour makes sense. I won't make the change, considering this PR is not approved and it won't solve your problem.

cool, just saw this on_before/after_component, ball is on your court now

Um, I'm not using that. If you want to go that route, good luck.

I'm using the external_code method to control CN.

Were you able to implement this? How did it go?

Yes, I have it working, but I needed to step away from it for a bit. I'll likely publish some working code this week. My version only does pose and canny models right now, as I am (re)building it modularly to make it far more flexible. For example, it offers a few different canny processors, not just the usual one.

But it works well, and it does batches just fine. I can give it a directory and even a search word to match on, and it'll batch process and batch generate.

Rulyone,

Thanks for reviewing my request.

Reading your phrase (When a sequence is processed with the same seed it causes unwanted visual patterns), it doesn't make sense to me why using a different seed would avoid that.

The initial noise predisposes details in the final image. When applied to a sequence, it makes similar details appear in the same place across all frames. This causes an effect similar to looking through a dirty car window: the objects are moving, but the dirt spots stay in place. Here's another sample with more distinct dots that are not moving. In the original sequence, the camera flies through the field, and objects move very fast.

https://imgbox.com/GHutmwYN

My idea was that the different seeds would help to get rid of this unwanted effect. I don't see any pros in the fixed seed, because the original image and controlnet are good enough in making the result images consistent in shape and color.

But you are right, maybe it should be a separate feature.

BTW, the master branch of Mikubill/sd-webui-controlnet no longer works correctly with your version of the webui: it adds a ghost of the first frame of the sequence fed to ControlNet to all result frames.

"UPDATE: extension pull request has been approved and merged." So is this feature now available in batch img2img processing? I haven't seen any updates. tnx

What's the current status? I would really love having ControlNet batch support :)

This is not necessary. See https://github.com/Mikubill/sd-webui-controlnet/pull/683 for updates on the implementation of multi-cn batch.

The changes in this PR add a UI element, which should be done in the extension itself rather than in the main repo, so I'm going to close this PR. Reopen it if there's something else.