[Bug]: Batch size changes image using same seed with DPM++ SDE Karras

Is there an existing issue for this?

- [X] I have searched the existing issues and checked the recent builds/commits

What happened?

The generated image changes, despite the same seed and settings, when using DPM++ SDE Karras with different batch sizes.

Steps to reproduce the problem

Select DPM++ SDE Karras, set a fixed seed, and generate a batch, then generate a single image. The image comes out different even though the same seed and settings are shown.

What should have happened?

The images should have been the same, I believe.

Commit where the problem happens

0b5dcb3d7ce397ad38312dbfc70febe7bb42dcc3

What platforms do you use to access UI ?

Windows

What browsers do you use to access the UI ?

Mozilla Firefox

Command Line Arguments

@echo off

set PYTHON=

set GIT=

set VENV_DIR=

set COMMANDLINE_ARGS=--xformers --deepdanbooru --medvram --no-half-vae

call webui.bat

Additional information, context and logs

No response

Thanks! I was going to report this too, it affects both SDE samplers.

It seems to change for every change in batch size.

I also tried changing the Eta noise seed delta, with the same results.

Is this also the case with --xformers and with --disable-opt-split-attention enabled?

Yes. I normally just use --xformers, but tested with the extra switch.

Either way, it is deterministic if you use the same seed and batch size. Change either one and the image changes, unlike with the other k-diffusion samplers.

I also see this bug. Exact same parameters and seed, but entirely different images if you change the batch size.

If you patch the SDE sampler PR into a webui build from 1 to 2 weeks prior, we can get a better idea of whether it was always like this.

Thanks for volunteering 👍

I was using it, but I can't recall whether the behavior was the same.

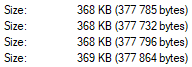

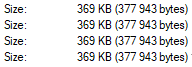

I can confirm that this is not a problem on the old commit 828438b4a190759807f9054932cae3a8b880ddf1 (DPM++ SDE Karras doesn't exist in that version), but on the latest version I also get different results with the same seed, even with a different sampler: DPM++ 2M Karras. The differences are hard to tell by eye, but the file sizes in bytes show that the problem exists:

Latest commit 4b3c5bc24bffdf429c463a465763b3077fe55eb8:

Old commit 828438b4a190759807f9054932cae3a8b880ddf1:
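Byte counts only reveal a difference when the sizes happen to differ; a content hash is a stricter check. Below is a minimal sketch using Python's standard `hashlib`. The function names and any file paths are illustrative. Because webui can embed generation parameters in the saved PNG, compare only files produced with identical settings this way.

```python
import hashlib
from pathlib import Path


def digest_bytes(data: bytes) -> str:
    """SHA-256 hex digest of raw bytes."""
    return hashlib.sha256(data).hexdigest()


def same_file_contents(path_a, path_b) -> bool:
    """True only if both files are byte-for-byte identical."""
    # Equal sizes do not prove equal images; equal digests (practically) do.
    return digest_bytes(Path(path_a).read_bytes()) == digest_bytes(Path(path_b).read_bytes())
```

For example, `same_file_contents("old_commit.png", "new_commit.png")` (placeholder names) returns `False` as soon as a single byte differs.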

I thought the problem was one very small mistake made in https://github.com/AUTOMATIC1111/stable-diffusion-webui/pull/5065 (I found this completely by accident, and I'm not sure whether this is a safe change or whether it creates more bugs): 01f2ed684450b650f5802bce5c7bf061062781c7

```diff
--- a/modules/sd_samplers.py
+++ b/modules/sd_samplers.py
@@ -342,7 +342,7 @@ class TorchHijack:
     def __getattr__(self, item):
         if item == 'randn_like':
-            return self.randn_like
+            return self.sampler_noises.randn_like
         if hasattr(torch, item):
             return getattr(torch, item)
```

But it looks like the problem is bigger than that, because this fix doesn't solve it completely.
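One possible reason the change has no effect is that Python only calls `__getattr__` when normal attribute lookup fails. The hunk returns `self.randn_like`, and that would recurse back into `__getattr__` unless `randn_like` is also defined as a regular method. If it is, that method is found first, the `'randn_like'` branch is never reached, and editing it changes nothing. Below is a minimal stdlib sketch of the delegation pattern, with `math` standing in for `torch`. The `ModuleHijack` class is hypothetical, not the webui code.

```python
import math
from collections import deque


class ModuleHijack:
    """Delegate attribute lookups to a module, but serve randn_like from a queue."""

    def __init__(self, module, queued_noises, fallback):
        self.module = module
        self.queued = deque(queued_noises)  # pre-generated noise, consumed in order
        self.fallback = fallback            # used when the queue is empty or lengths differ

    def randn_like(self, x):
        # Defined on the class, so normal lookup finds it and __getattr__ is not consulted.
        if self.queued:
            noise = self.queued.popleft()
            if len(noise) == len(x):
                return noise
        return self.fallback(x)

    def __getattr__(self, item):
        # Only reached for names that normal lookup could not find,
        # so an `item == "randn_like"` branch here would be dead code.
        if hasattr(self.module, item):
            return getattr(self.module, item)
        raise AttributeError(f"{type(self).__name__!r} object has no attribute {item!r}")
```

Here `ModuleHijack(math, [[0.1, 0.2]], fallback=lambda x: [0.0] * len(x)).sqrt(9.0)` is forwarded to `math.sqrt`, while `randn_like` pops the queued noise first.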

Now I want to find the commit after which this bug appeared (it will take time).

PS: no COMMANDLINE_ARGS

Commit ce6911158b5b2f9cf79b405a1f368f875492044d breaks consistency. Can anyone else confirm this by checking out c833d5bfaae05de41d8e795aba5b15822673ef04?

It looks like this issue is unique to this particular sampler, which lives in https://github.com/crowsonkb/k-diffusion. It uses a different kind of noise sampler from the other samplers in the same repository, called BrownianTreeNoiseSampler: https://github.com/crowsonkb/k-diffusion/blob/master/k_diffusion/sampling.py#L546

If I make the following change to stable-diffusion-webui/repositories/k-diffusion/k_diffusion/sampling.py, the sampler gives stable results across all batch sizes.

```diff
@@ -543,7 +543,7 @@ def sample_dpmpp_2s_ancestral(model, x, sigmas, extra_args=None, callback=None,
 def sample_dpmpp_sde(model, x, sigmas, extra_args=None, callback=None, disable=None, eta=1., s_noise=1., noise_sampler=None, r=1 / 2):
     """DPM-Solver++ (stochastic)."""
     sigma_min, sigma_max = sigmas[sigmas > 0].min(), sigmas.max()
-    noise_sampler = BrownianTreeNoiseSampler(x, sigma_min, sigma_max) if noise_sampler is None else noise_sampler
+    noise_sampler = default_noise_sampler(x)
     extra_args = {} if extra_args is None else extra_args
     s_in = x.new_ones([x.shape[0]])
     sigma_fn = lambda t: t.neg().exp()
```

This isn't a very good fix, though: the sampler might need BrownianTreeNoiseSampler to operate optimally. But at the very least, it looks like this sampler has always worked this way. There might be some way to coax it into behaving differently, but I don't know off-hand.
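Here is some context on what the Brownian tree adds. k-diffusion's default noise sampler draws fresh noise on every call, so the noise at a step depends on how many draws came before it. A Brownian-tree sampler instead takes each step's noise from the increment of one fixed random path between the step's two time points, so the noise essentially depends only on the tree's seed and the step endpoints. Below is a hypothetical, scalar, stdlib-only illustration of that idea. `ToyBrownianTree` is not torchsde's `BrownianTree` or k-diffusion's `BrownianTreeNoiseSampler`, which work on whole tensors and map sigmas to times.

```python
import math
import random


class ToyBrownianTree:
    """Hypothetical Brownian path W(t) on [0, 1], fixed entirely by one seed.

    W is defined on the dyadic grid k / 2**depth. Each midpoint is drawn by
    Brownian-bridge bisection from an RNG seeded by (seed, node index), so a
    query returns the same value regardless of what was queried before.
    """

    def __init__(self, seed: int, depth: int = 10):
        self.seed = seed
        self.n = 2 ** depth

    def _rng(self, node: int) -> random.Random:
        # Combine integers only, so the derived seed is identical on every run.
        return random.Random(self.seed * (self.n + 1) + node)

    def w(self, t: float) -> float:
        k = round(t * self.n)  # snap t onto the dyadic grid
        left, right = 0, self.n
        w_left, w_right = 0.0, self._rng(self.n).gauss(0.0, 1.0)  # W(0) = 0, W(1) ~ N(0, 1)
        while k not in (left, right):
            mid = (left + right) // 2
            # Brownian bridge: given W(left) and W(right), W(mid) has mean at the
            # average and variance (interval length) / 4.
            std = math.sqrt((right - left) / self.n / 4)
            w_mid = self._rng(mid).gauss((w_left + w_right) / 2, std)
            if k < mid:
                right, w_right = mid, w_mid
            else:
                left, w_left = mid, w_mid
        return w_left if k == left else w_right

    def noise(self, t0: float, t1: float) -> float:
        """Normalised increment (W(t1) - W(t0)) / sqrt(|t1 - t0|) for one step."""
        return (self.w(t1) - self.w(t0)) / math.sqrt(abs(t1 - t0))
```

The tree's seed fixes every value, so the same seed gives the same step noise whether a schedule takes one coarse step over an interval or several finer ones. That is why the seed passed to the tree matters so much here.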

It looks like you need to pass it a seed (it generates a random one when none is given), but that is deep down the pipeline and I don't really know how it hooks up.

https://github.com/crowsonkb/k-diffusion/blob/master/k_diffusion/sampling.py#L72
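The effect of falling back to a random seed can be modelled with a toy pipeline. This rests on an assumption that has not been verified against webui or k-diffusion: the fallback seed is drawn from a shared RNG whose state at that point depends on how many random numbers the batch has already consumed. Under that assumption the tree seed, and therefore the image, is deterministic for a fixed seed and batch size but changes with the batch size, whereas an explicitly passed seed does not. The function names are hypothetical, and stdlib `random` stands in for torch.

```python
import random


def tree_seed(rng, seed=None):
    """Return the explicit seed, or draw one from rng when none is given."""
    if seed is None:
        # Fallback, similar in spirit to drawing a random seed without a hint:
        # the result depends on rng's current state.
        seed = rng.randrange(2 ** 63 - 1)
    return seed


def simulate_batch(first_seed, batch_size, seed=None):
    """Toy pipeline: seed a shared RNG, consume one draw per image, then request a tree seed."""
    rng = random.Random(first_seed)
    for _ in range(batch_size):
        rng.random()  # stand-in for generating each image's initial noise
    return tree_seed(rng, seed)
```

With no explicit seed, `simulate_batch(123, 1)` and `simulate_batch(123, 4)` return different tree seeds. Passing `seed=99` makes both return 99.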

Update:

Due to my lack of Python/Torch skills, I spent an hour getting nothing but errors. As a last resort, I just fixed the seed to a constant.

And then I get perfectly reproducible results regardless of the batch size. But when I shift the seed, it does not reproduce :(

Going to play a little more.

Update:

I tried increasing the seed by 1 and then seeing whether I could get the same image.

And I have no idea what I am doing. Will leave this to the experts.

@JaySmithWpg doing a quick search for "brown" or "brownian" in the paper that I think these DPM++ samplers came from, and nothing comes up. Does that mean it is not necessary for these samplers to operate optimally? It seems like if the BrownianTree were an essential part of these samplers, it would be noted in that paper. Perhaps we could switch back to the default noise sampler.

It would be good to know why this sampler used the BrownianTree noise sampler instead of the same one used by the others in the same repository. Why did they make that change? Perhaps someone just liked the BrownianTree noise sampler better, for no good reason?

Any good solutions to this yet, other than changing the Brownian noise sampler to the default one?

I have this issue as well

The same thing happens to me when I use DPM adaptive.

Update:

I was wrong, the changes kept happening because I was also changing batch position. The batch size issue only concerns DPM++ SDE Karras.

Just hit this myself. I've been using DPM++ SDE Karras for the most part since it was added and had not noticed the issue (mostly because I was either generating batches or using scripts such as StylePile to generate batches), and I hadn't tried to generate a single image out of a batch with highres fix until today.

I had tried disabling all extensions and swapping between 2.0- and 1.5-based models to see whether it was a 2.0 issue. I gave up, checked here and found this post, then swapped over to DPM++ 2M Karras and got consistent results again between batches and individual generations.

Unfortunately don't know enough to help with the issue, but I like the SDE sampler and hope it gets addressed eventually.

I found this thread: https://github.com/crowsonkb/k-diffusion/issues/25

So, as far as I understand this, the Brownian tree noise sampler is used to actually make convergence more stable.

So that sounds like we have to keep the Brownian tree noise sampler. But there has to be a way to make the seed repeatable in a batch.

Since the problematic code actually provides a way to use an external seed, instead of generating one, I think it "just" needs to be passed down.

I've attempted to do this and it seems to work. If you want to try it out, there are two changes needed:

AlUlkesh/stable-diffusion-webui@20a1fa7 AlUlkesh/k-diffusion@c110d3f

This keeps images constant, regardless of batch size.

However:

- You still have to keep the same batch position. So if, for example, you are interested in repeating the 3rd image in a batch with size = 20, you will still have to make a batch of at least size = 3.

- With this change, old seeds for DPM++ SDE Karras obviously produce different results now. If that is necessary, perhaps a new settings option for old/new version could be implemented.

- This requires a change in k-diffusion, which is separate from stable-diffusion-webui.
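The batch-position caveat can be illustrated with a toy model that uses stdlib `random` in place of torch. Both functions are hypothetical and are not the extension's code. Suppose one noise stream is seeded once per batch and split by position. Then image i can be reproduced from the first seed and its position, whatever the total batch size, but not from its own seed alone. Seeding one independent stream per image, for example from `p.all_seeds`, would remove that dependency. Whether the Brownian tree can be set up that way is not established in this thread.

```python
import random


def whole_batch_noise(first_seed, batch_size, n=3):
    """One stream seeded once per batch; image i gets the i-th chunk of draws."""
    rng = random.Random(first_seed)
    return [[rng.random() for _ in range(n)] for _ in range(batch_size)]


def per_image_noise(seeds, n=3):
    """One independent stream per image, seeded with that image's own seed."""
    noises = []
    for s in seeds:
        rng = random.Random(s)
        noises.append([rng.random() for _ in range(n)])
    return noises
```

With `whole_batch_noise`, the 3rd image of a batch of 20 matches the 3rd image of a batch of 3, but not a single image started from its own seed. With `per_image_noise`, the 3rd image of `[123, 124, 125]` matches a batch of just `[125]`.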

What are these arguments for? Where can I find the full list of arguments?

--deepdanbooru --medvram --no-half-vae

You can find them here: https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Command-Line-Arguments-and-Settings

BTW --deepdanbooru is not necessary anymore, it's integrated now.

I just packaged this solution into an extension. You can install it from here: https://github.com/AlUlkesh/sd_dpmpp_sde_no_random

@ClashSAN Can you please add this extension to the Wiki, when you get a chance? Thanks.

{ "name": "sd_dpmpp_sde_no_random", "url": "https://github.com/AlUlkesh/sd_dpmpp_sde_no_random", "description": "Prevent DPM++ SDE Karras from always using a random seed", "tags": ["script"] }

I think this issue can be closed now.

@AlUlkesh so using your extension, if I make a batch of 8, and I want to reproduce the 8th image again, I have to do the whole batch of 8 again? I'm not sure that is a full solution. I usually make small images on my first runs, and then use hires fix to upscale the ones I like (using their seed). But using this method, I'd have to upscale all 8 images before getting to the one I like in the batch?

Yes, that is correct.

Even if I use the previous seed as entropy for the Brownian Tree I get a changed image in that case. I think that's because the whole batch is processed as a single entity.

@Jonseed I think I could implement an option that would set the number of steps for all images in a batch to 1, except for the final one. That would mean minimal time spent on the preceding images. Would that solve your issue?

@AlUlkesh that would definitely help! You could even set it to throw away all those other images prior to the desired image in the batch, so it would effectively be like you are just generating the image you want.

Perhaps it could look to see what seed you want and automatically set step to 1 for all other images in the batch to get to the one you want. So if you want image 5 of an 8 batch, it would set step to 1 for images 1-4, and then it would be much easier to get to image 5.

Do you have to set the seed for the first image in the batch, or can you use the seed for the specific image in the batch?

Ah, that's a good concept. So I could put a new field in the main UI, with "SDE seed" or whatever. The script could then check all images if that seed is reached, do as little generation as possible until it finds it and then only fully do the desired seed and stop after that.
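The search described here can be expressed as a small planning step. `plan_steps` is a hypothetical helper, not part of the extension. Given the per-image seeds of a batch and the seed of the image to keep, it returns a step count for each image up to and including the target: 1 step for every image before it and the full count for the target, after which generation stops. It only computes the plan. Whether images in one jointly sampled batch can actually use different step counts is not settled in this thread.

```python
def plan_steps(batch_seeds, target_seed, full_steps):
    """Step count per image, stopping after the image whose seed is target_seed."""
    # list.index raises ValueError if the target is not part of this batch.
    position = batch_seeds.index(target_seed)
    return [1] * position + [full_steps]
```

For the example of wanting image 5 of an 8-image batch starting at seed 123, `plan_steps(list(range(123, 131)), 127, 20)` gives `[1, 1, 1, 1, 20]`.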

Yes, the way I see it is that torchsde needs "all data" up to the desired image to make similar ones. "All" being more than, but including, the seed.

Ideally you could just use the seed field that is already in the UI. Your extension could check to see if it is a SDE gen, find the initial seed, walk through all the undesired seeds with 1 step, and then fully generate the desired image in the batch.

I don't think I can do that. The field that is already in the UI is the origin for the first seed, that one is still needed.

Hmm, how do you know what the initial seed is for an image generated in a batch?

Well, as far as I can tell from the code, the seed value from the UI is stored in p.seed, while p.all_seeds contains all seeds for the batch. For example, with a batch size of 3:

UI seed = 123

p.seed = 123

p.all_seeds = [123, 124, 125]
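Written out, the relationship in this example is a consecutive range of seeds, and an image's batch position follows directly from it. The sketch below assumes seeds are assigned consecutively as shown and does not model other seed settings. Both helper names are hypothetical.

```python
def batch_seeds(seed, batch_size):
    """Consecutive per-image seeds, as in the p.all_seeds example above."""
    return [seed + i for i in range(batch_size)]


def batch_position(seed, target_seed, batch_size):
    """0-based position of target_seed in the batch whose UI seed is `seed`."""
    seeds = batch_seeds(seed, batch_size)
    if target_seed not in seeds:
        raise ValueError(f"seed {target_seed} is not in the batch starting at {seed}")
    return seeds.index(target_seed)
```

So `batch_seeds(123, 3)` reproduces `[123, 124, 125]`, and `batch_position(123, 125, 3)` is 2 (the third image). The UI seed must stay at 123 for that position to mean the same image.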

So if you do your first lowres pass, that's how it would look. If we then do a second run with hires fix but only want to save image number 3, you cannot change these seeds; otherwise you get different images.

That's why I think the UI's seed needs to be unchanged, while a new field can contain the "seed for image to save".