Allow TI training using 6GB VRAM when xformers is available

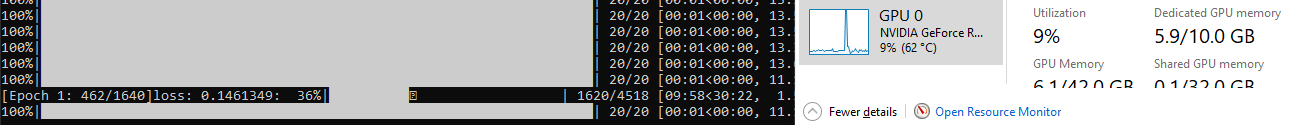

Pic of me training TI at 512x512 using <6GB VRAM

This PR consists of 2 parts.

- Added a setting that allows cross attention optimizations to be used during TI training. When the xformers cross attention optimization is available, this saves around 1.5GB of VRAM during training. I know there have been past reports of bad results when using cross attention optimizations for TI training, but I tested it myself with both the xformers and the InvokeAI optimizations and did not run into any issues.

- Changed the "Unload VAE and CLIP to RAM..." option to also unload the VAE to RAM during TI training. This is safe (also tested by me) and saves around 0.25GB of VRAM during training.
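To illustrate how the two settings interact, here is a minimal sketch of the setup step before training starts. It is not the code from this PR: `TrainingOptions`, `StubModule`, `StubModel`, and `prepare_for_ti_training` are hypothetical names, the stubs stand in for torch modules and only track device placement, and it assumes the training images are encoded to latents before the loop starts, so the VAE sits idle while training runs.

```python
from dataclasses import dataclass, field


@dataclass
class TrainingOptions:
    # Hypothetical names for the two settings described in this PR.
    use_xattention_optimizations: bool = False
    unload_vae_to_ram: bool = False


@dataclass
class StubModule:
    # Stand-in for a torch.nn.Module; it only records which device it is on.
    name: str
    device: str = "cuda"

    def to(self, device: str) -> "StubModule":
        self.device = device
        return self


@dataclass
class StubModel:
    vae: StubModule = field(default_factory=lambda: StubModule("vae"))
    unet: StubModule = field(default_factory=lambda: StubModule("unet"))
    # Name of the cross attention implementation currently in use.
    attention: str = "xformers"


def prepare_for_ti_training(model: StubModel, opts: TrainingOptions) -> StubModel:
    """Apply the two memory-saving settings before a (hypothetical) TI training loop."""
    if not opts.use_xattention_optimizations:
        # Setting off: swap back to the unoptimized attention implementation.
        model.attention = "default"
    if opts.unload_vae_to_ram:
        # Assumes latents were already encoded, so the VAE is idle during training
        # and can be parked in system RAM to free VRAM.
        model.vae.to("cpu")
    return model


if __name__ == "__main__":
    model = prepare_for_ti_training(
        StubModel(),
        TrainingOptions(use_xattention_optimizations=True, unload_vae_to_ram=True),
    )
    print(model.attention, model.vae.device, model.unet.device)  # xformers cpu cuda
```

With both settings off, the sketch falls back to unoptimized attention and keeps the VAE on the GPU; only the UNet needs to stay on the GPU in either case.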

When both options are enabled, I was able to get TI training down below 6GB VRAM.