Allow save / load control of optimizer state dict

Saving and loading the optimizer state is recommended, especially for Adam and its variants.

This patch adds a way to save and load the optimizer state. It also supports user-selected optimizer types, such as "SGD" and "Adam".

If selecting the optimizer type is enabled, this line has to be changed for safety:

`if hypernetwork.optimizer_state_dict:`

to something like

`if hypernetwork.optimizer_name == hypernetwork_optimizer_type and hypernetwork.optimizer_state_dict:`

to prevent loading the wrong state dict when the optimizer types do not match.
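The check above can be sketched in isolation. This is only an illustration: `HypernetworkStub` and `state_dict_to_restore` are hypothetical names, and the stub carries just the two attributes mentioned in this description, not the real hypernetwork class.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class HypernetworkStub:
    """Hypothetical stand-in holding only the two fields the check reads."""
    optimizer_name: Optional[str] = None
    optimizer_state_dict: Optional[Dict[str, Any]] = None


def state_dict_to_restore(
    hypernetwork: HypernetworkStub, selected_optimizer_type: str
) -> Optional[Dict[str, Any]]:
    """Return the saved state dict only if it is safe to load, else None."""
    if not hypernetwork.optimizer_state_dict:
        # Nothing was saved (None or empty): start with a fresh optimizer.
        return None
    if hypernetwork.optimizer_name != selected_optimizer_type:
        # Saved state belongs to a different optimizer type: do not load it.
        return None
    return hypernetwork.optimizer_state_dict


saved = HypernetworkStub(optimizer_name="Adam", optimizer_state_dict={"state": {0: "moments"}})
matching = state_dict_to_restore(saved, "Adam")      # the saved dict
mismatched = state_dict_to_restore(saved, "SGD")     # None
nothing_saved = state_dict_to_restore(HypernetworkStub(), "Adam")  # None
```

Returning `None` on a mismatch means the caller simply continues with a freshly created optimizer instead of failing.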

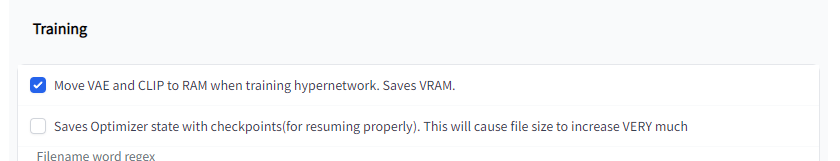

Users will see a new option in the Training section:

This option should only be enabled when they plan to continue training in the future.

Training can continue without a saved optimizer state, but some users reported that training sometimes blew up when it was continued from a checkpoint, which is probably bad luck with the optimizer.

When releasing an HN, it is recommended to turn off the option (with the Apply button) before saving or interrupting training.

Here is a file size comparison for a standard (1, 2, 1) network: the size difference is roughly 3x.
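The roughly 3x figure matches a back-of-the-envelope count for Adam, which keeps two extra buffers (first and second moment estimates) per parameter. The sketch below is only arithmetic under stated assumptions: the helper names are hypothetical, the input dimension of 768 is an illustrative choice, and step counters and serialization overhead are ignored.

```python
from typing import Sequence


def linear_param_count(n_in: int, n_out: int) -> int:
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def network_param_count(dim: int, layer_structure: Sequence[float] = (1, 2, 1)) -> int:
    """Parameter count of a stack of linear layers whose widths are dim * multiplier."""
    widths = [int(dim * m) for m in layer_structure]
    return sum(linear_param_count(a, b) for a, b in zip(widths, widths[1:]))


def checkpoint_value_count(param_count: int, state_buffers_per_param: int) -> int:
    """Stored values: the parameters plus the optimizer buffers kept per parameter."""
    return param_count * (1 + state_buffers_per_param)


params = network_param_count(768)  # assumed dimension, for illustration only
with_adam = checkpoint_value_count(params, state_buffers_per_param=2)
print(with_adam / params)  # 3.0
```

Plain SGD without momentum keeps no per-parameter buffers, so the same count with `state_buffers_per_param=0` gives no size increase.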

Current Task

- [x] Save and load optimizer state dict. People complained about the optimizer not resuming properly; this was because we did not save the optimizer state dict.

- [x] Generalized way to save / load optimizers. This generalizes the optimizer resuming process. It does not necessarily mean that more optimizer options will be offered immediately.
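On the save side, the two items above could fit together roughly as follows. This is a sketch, not the patch itself: `build_checkpoint`, the `"weights"` key, and the `save_optimizer_state` flag are hypothetical names standing in for the new Training option and the saved file layout.

```python
from typing import Any, Dict, Optional


def build_checkpoint(
    weights: Dict[str, Any],
    optimizer_name: str,
    optimizer_state_dict: Optional[Dict[str, Any]],
    save_optimizer_state: bool,
) -> Dict[str, Any]:
    """Assemble what gets written to disk (hypothetical layout)."""
    return {
        "weights": weights,
        # The optimizer type is always recorded so a later load can compare it.
        "optimizer_name": optimizer_name,
        # The state is dropped when the option is off, e.g. for a released HN.
        "optimizer_state_dict": optimizer_state_dict if save_optimizer_state else None,
    }


for_resuming = build_checkpoint({"w": [0.1]}, "Adam", {"state": {}, "step": 10}, True)
for_release = build_checkpoint({"w": [0.1]}, "Adam", {"state": {}, "step": 10}, False)
```

Storing the optimizer name next to the state keeps the process generic: nothing in the payload is specific to one optimizer type.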

Future jobs:

- [ ] Fix the hypernetwork multiplier value while training. As far as I can tell from the code, the hypernetwork multiplier can be changed while training is running.

- [ ] Add an option to specify the standard deviation and scale multiplier for initialization, plus nonzero bias initialization. Related: https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/2740 Analyzed data: Colab. In short, Xavier and Kaiming have too large a standard deviation in weight initialization compared to normal. Rather than using magic numbers, the std should be parameterized; Xavier can then be used normally if we scale it (this is called `gain` in the PyTorch parameters).

- [ ] Add an option to fix weight initialization seeds. This is for reproducing results.

- [ ] Add an option to specify the dropout structure. A few examples have shown that a 1, 2, 2[Dropout], 1 structure is promising. These networks were actually produced by a bug, and the same structure can no longer be built once the bug is fixed. Instead of removing the functionality entirely, we need to offer a detailed way to specify dropout. Example: [0, 0.1, 0.15, 0] applies dropout at the second and third layers. The sequence should follow the layer structure, and the first and last values should always be 0.
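The dropout rules in the last item can be sketched as a small validator. This is a sketch under assumptions: `validate_dropout_structure` is a hypothetical name, and the layer structure `(1, 2, 2, 1)` is an assumed match for the four-entry example.

```python
from typing import List, Sequence


def validate_dropout_structure(
    layer_structure: Sequence[float], dropout_structure: Sequence[float]
) -> List[int]:
    """Check a dropout spec and return the 0-based positions that use dropout."""
    if len(dropout_structure) != len(layer_structure):
        raise ValueError("dropout structure must have one entry per layer structure entry")
    if dropout_structure[0] != 0 or dropout_structure[-1] != 0:
        raise ValueError("first and last dropout values must be 0")
    for p in dropout_structure:
        if not 0 <= p < 1:
            raise ValueError(f"dropout probability {p} is outside [0, 1)")
    return [i for i, p in enumerate(dropout_structure) if p > 0]


# The example from the list: dropout at the second and third positions.
positions = validate_dropout_structure((1, 2, 2, 1), [0, 0.1, 0.15, 0])
print(positions)  # [1, 2]
```

Rejecting a nonzero first or last value up front keeps an invalid spec from silently producing a network that differs from what the user asked for.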

Optional

- [ ] Quick-start in page / Offering references of previously trained HNs

- [ ] Emphasize the importance of dataset quality

- [ ] Grouping activations by type

- [ ] Generalized ways to evaluate HNs properly

- [ ] Hyperparameter tuning pipeline

- [ ] Add ways to use multiple hypernetworks sequentially or in parallel