[Bug]: Loss diverged when training a hypernetwork

Is there an existing issue for this?

- [X] I have searched the existing issues and checked the recent builds/commits

What happened?

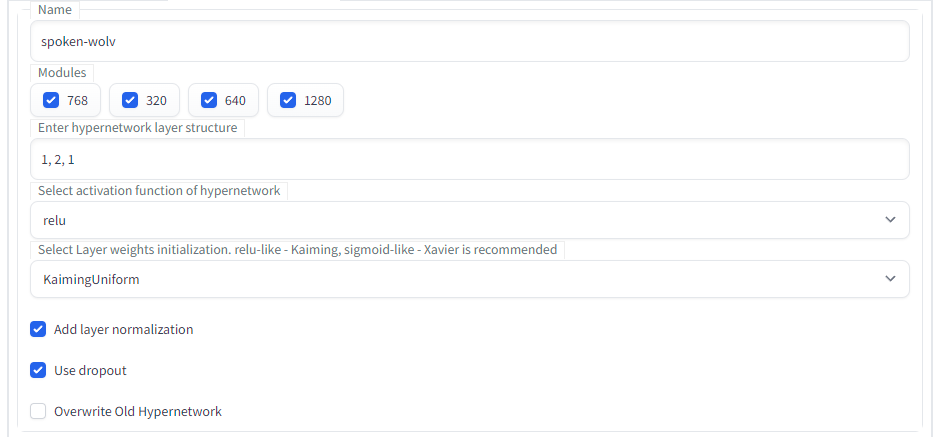

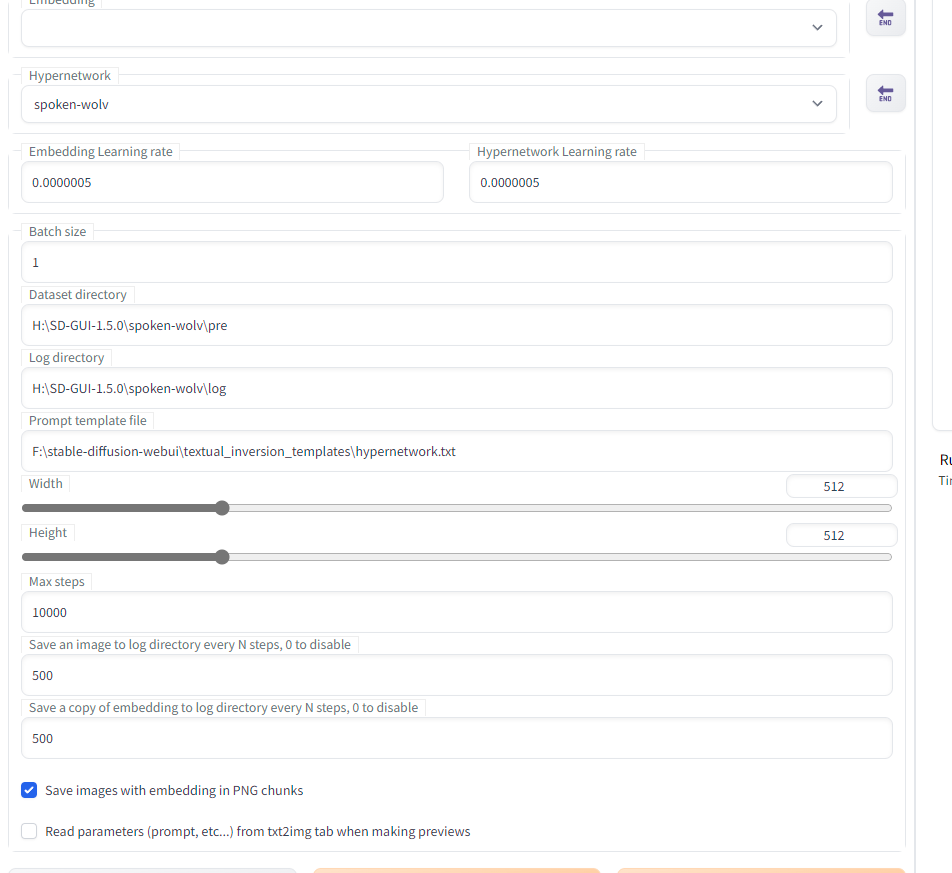

[1.0, 2.0, 1.0]
Activation function is relu
Weight initialization is KaimingUniform
Layer norm is set to True
Dropout usage is set to True
Preparing dataset...
100%|████████████████████████████████████████████████████████████████████████████████| 654/654 [00:34<00:00, 18.80it/s]
Mean loss of 327 elements
Training at rate of 5e-07 until step 10000
0%| | 0/10000 [00:00<?, ?it/s]
Applying xformers cross attention optimization.
Error completing request
Arguments: ('spoken-wolv', '0.0000005', 1, 'H:\SD-GUI-1.5.0\spoken-wolv\pre', 'H:\SD-GUI-1.5.0\spoken-wolv\log', 512, 512, 10000, 500, 500, 'F:\stable-diffusion-webui\textual_inversion_templates\hypernetwork.txt', False, '', '', 20, 0, 7, -1.0, 512, 512) {}
Traceback (most recent call last):
  File "F:\stable-diffusion-webui\modules\ui.py", line 221, in f
    res = list(func(*args, **kwargs))
  File "F:\stable-diffusion-webui\webui.py", line 63, in f
    res = func(*args, **kwargs)
  File "F:\stable-diffusion-webui\modules\hypernetworks\ui.py", line 49, in train_hypernetwork
    hypernetwork, filename = modules.hypernetworks.hypernetwork.train_hypernetwork(*args)
  File "F:\stable-diffusion-webui\modules\hypernetworks\hypernetwork.py", line 432, in train_hypernetwork
    raise RuntimeError("Loss diverged.")
RuntimeError: Loss diverged.

Steps to reproduce the problem

What should have happened?

Training should run properly without the loss diverging.

Commit where the problem happens

Commit hash: 737eb28faca8be2bb996ee0930ec77d1f7ebd939

What platforms do you use to access the UI?

Windows

What browsers do you use to access the UI?

Google Chrome

Command Line Arguments

No response

Additional information, context and logs

No response

This is a safety feature that stops training once an attempt has already failed, rather than letting it continue. Note that ML is not perfect: you'll run into many failures and some successes. There is no 100% working example currently, but I'd suggest using Xavier Normal instead of a Kaiming initialization (your log shows KaimingUniform).
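To make the two points above concrete, here is a minimal sketch in plain Python. It is not the webui's actual code: `check_loss`, `xavier_normal_std`, and `kaiming_std` are hypothetical helper names, and the width of 768 is assumed purely for illustration. The first helper shows the kind of guard that raises `RuntimeError` once the loss is no longer finite; the other two compare the standard deviation of the initial weights under the textbook Xavier and Kaiming formulas for the `[1.0, 2.0, 1.0]` layer structure from the log.

```python
import math


def check_loss(loss: float, step: int) -> None:
    """Abort training as soon as the loss is NaN or infinite."""
    if not math.isfinite(loss):
        raise RuntimeError(f"Loss diverged at step {step}.")


def xavier_normal_std(fan_in: int, fan_out: int, gain: float = 1.0) -> float:
    """Standard deviation of Xavier (Glorot) normal initialization."""
    return gain * math.sqrt(2.0 / (fan_in + fan_out))


def kaiming_std(fan_in: int, gain: float = math.sqrt(2.0)) -> float:
    """Standard deviation of Kaiming (He) initialization in fan-in mode.

    The normal and uniform variants share this standard deviation;
    a gain of sqrt(2) is the usual choice for ReLU.
    """
    return gain / math.sqrt(fan_in)


# Assumed width, for illustration only.
dim = 768
# Layer structure [1.0, 2.0, 1.0] gives dim -> 2*dim -> dim.
layer_shapes = [(dim, 2 * dim), (2 * dim, dim)]

init_stds = [
    (fan_in, fan_out, xavier_normal_std(fan_in, fan_out), kaiming_std(fan_in))
    for fan_in, fan_out in layer_shapes
]

for fan_in, fan_out, xavier, kaiming in init_stds:
    print(f"{fan_in}->{fan_out}: xavier_normal std={xavier:.4f}, kaiming std={kaiming:.4f}")
```

With these gains, Xavier Normal starts from smaller weights than Kaiming for every layer, which may make early divergence less likely, though it is no guarantee. If it still diverges, the guard stops the run instead of letting it continue on a meaningless loss.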