(Hypernet) Xavier/He initializations and activation functions (Sigmoid, Tanh, etc.)

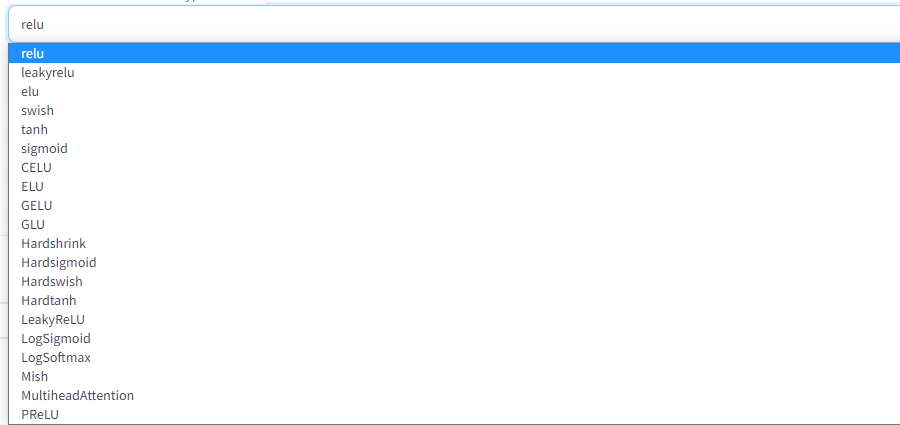

As you expected, `activation_dict` was meant to support variable activation functions, so it should offer every available activation function.
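To illustrate the idea of a name-to-activation lookup, here is a minimal sketch in plain Python. The actual contents of `activation_dict` are not shown in this description, so the keys, the scalar stand-in functions, and the `get_activation` helper below are all hypothetical.

```python
import math

# Hypothetical scalar stand-ins for the activations a hypernetwork layer might use.
def relu(x):
    return x if x > 0 else 0.0

def leaky_relu(x, negative_slope=0.01):
    return x if x > 0 else negative_slope * x

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Assumed registry layout: lowercase name -> callable. The real keys may differ.
activation_dict = {
    "linear": lambda x: x,
    "relu": relu,
    "leakyrelu": leaky_relu,
    "sigmoid": sigmoid,
    "tanh": math.tanh,
}

def get_activation(name):
    """Look up an activation by name, case-insensitively (hypothetical helper)."""
    key = name.lower()
    if key not in activation_dict:
        raise ValueError(f"Unknown activation {name!r}; choose from {sorted(activation_dict)}")
    return activation_dict[key]
```

With a registry like this, exposing a new activation in the UI only requires adding one more entry to the dictionary.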

Yes. (would people be interested in weird activation functions? 🤔 more ML data scientists?)

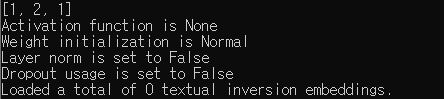

Also added a way to peek at hypernetwork information for debugging.

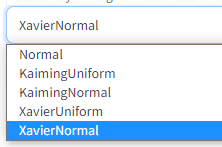

The currently supported options are Normal (with bias initialization tweaked to use `normal_` instead of `zeros_`), Xavier, and He (each in normal / uniform variants).
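For readers unfamiliar with how Xavier and He differ, the sketch below computes their standard scaling factors using only the Python standard library. It is not the code from this PR: the function names, the `scheme` / `distribution` strings, and the example layer sizes are assumptions made for illustration, and the He gain of sqrt(2) is the usual choice for ReLU.

```python
import math
import random

def xavier_std(fan_in, fan_out, gain=1.0):
    # Xavier (Glorot): std = gain * sqrt(2 / (fan_in + fan_out))
    return gain * math.sqrt(2.0 / (fan_in + fan_out))

def he_std(fan_in, gain=math.sqrt(2.0)):
    # He (Kaiming), fan_in mode: std = gain / sqrt(fan_in)
    return gain / math.sqrt(fan_in)

def uniform_bound(std):
    # U(-a, a) has standard deviation a / sqrt(3), so a = sqrt(3) * std.
    return math.sqrt(3.0) * std

def init_weights(fan_in, fan_out, scheme="xavier", distribution="normal", seed=0):
    """Return a fan_out x fan_in weight matrix as nested lists (illustrative only)."""
    if scheme == "xavier":
        std = xavier_std(fan_in, fan_out)
    elif scheme == "he":
        std = he_std(fan_in)
    else:
        raise ValueError(f"Unknown scheme {scheme!r}")
    rng = random.Random(seed)
    if distribution == "normal":
        draw = lambda: rng.gauss(0.0, std)
    elif distribution == "uniform":
        bound = uniform_bound(std)
        draw = lambda: rng.uniform(-bound, bound)
    else:
        raise ValueError(f"Unknown distribution {distribution!r}")
    return [[draw() for _ in range(fan_in)] for _ in range(fan_out)]
```

For example, `init_weights(320, 640, scheme="he", distribution="uniform")` draws every weight from a range bounded by `uniform_bound(he_std(320))`. The normal and uniform variants of one scheme share the same target variance and differ only in the shape of the distribution.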

For ReLU / Leaky ReLU, He (or Kaiming) initialization is known to sometimes give better results.

For Sigmoid / Tanh, Xavier initialization is known to sometimes give better results.
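The rule of thumb above could be encoded as a default suggestion, as in this small sketch. The `suggest_init` helper and the strings it returns are hypothetical. It is only a heuristic starting point, not a claim that the suggested scheme will train better.

```python
def suggest_init(activation_name):
    """Suggest a weight initialization family for an activation (heuristic only)."""
    # Normalize names such as "Leaky ReLU" or "leaky_relu" to "leakyrelu".
    key = activation_name.lower().replace(" ", "").replace("_", "")
    if key in {"relu", "leakyrelu"}:
        return "He"
    if key in {"sigmoid", "tanh"}:
        return "Xavier"
    # No well-known pairing: fall back to the plain Normal option.
    return "Normal"
```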

These changes do not mean that any one option is definitely better. They just offer more options to try.

We will need a proper testing tool, such as a hyperparameter tuning setup, to prove anything in the future.