stable-diffusion-webui


Colab can't use GPU

Describe the bug: `sd_models` was changed, and now we can't use the GPU on Colab to load a big model. We have to remove the parameter `map_location="cpu"` from the `torch.load` call; otherwise the RAM fills up.

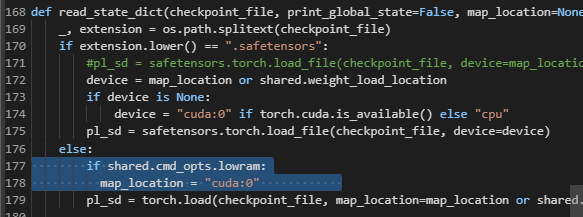

I may have misunderstood the problem, but try setting the `--lowram` command-line option (#2407).

I'm experiencing the same issue. If you load a large model on Colab, the RAM usage will continue to increase until it reaches 12GB, at which point Colab will terminate any running code.


Try setting the `--lowram` command-line option.


I did that already; I forgot to mention it, sorry.

I got it to load by adding these lines to `sd_models`:

If needed, launch with `--lowram`: it loads the model weights into VRAM instead of CPU RAM, so it uses more VRAM but less CPU RAM.
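The trade-off described above can be sketched in Python. This is a minimal, hypothetical sketch and not the webui's actual code. `build_parser`, `weight_load_location`, and the `cuda_available` parameter are illustrative names, and the sketch assumes the fix comes down to choosing the `map_location` argument of `torch.load`. The sketch uses only the standard library, and the PyTorch call appears only as a comment.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical minimal parser: the real webui defines many more options.
    parser = argparse.ArgumentParser(description="checkpoint loading sketch")
    parser.add_argument(
        "--lowram",
        action="store_true",
        help="load checkpoint weights straight to VRAM instead of CPU RAM",
    )
    return parser


def weight_load_location(lowram: bool, cuda_available: bool) -> str:
    """Pick the device string to pass as torch.load(..., map_location=...).

    "cpu" keeps every tensor of the checkpoint in system RAM, which is what
    exhausts Colab's RAM for large models. "cuda" moves the tensors to the
    GPU as they are loaded, trading VRAM for CPU RAM. Without a GPU the
    only option is "cpu".
    """
    if lowram and cuda_available:
        return "cuda"
    return "cpu"


# Usage with a fixed argument list (instead of sys.argv) so the sketch runs anywhere.
args = build_parser().parse_args(["--lowram"])
location = weight_load_location(args.lowram, cuda_available=True)
print(f"map_location={location!r}")  # prints map_location='cuda'

# With PyTorch installed, the hypothetical loading step would then be:
#   import torch
#   state = torch.load("model.ckpt", map_location=location)
```

Falling back to `"cpu"` when no GPU is present keeps the flag harmless on CPU-only machines. On Colab with a GPU runtime, `--lowram` selects `"cuda"`.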