Using more VRAM on high-VRAM GPUs

I'm using an A10G with 24 GB of VRAM, yet I still run into memory problems when generating 512x768 images with a batch size of 2 (and without Highres. fix)!

RuntimeError: CUDA out of memory. Tried to allocate 2.25 GiB (GPU 0; 22.49 GiB total capacity; 4.94 GiB already allocated; 12.71 GiB free; 7.24 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
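The error text already carries the numbers needed to reason about it. Below is a small sketch that pulls them out, assuming the older PyTorch OOM wording quoted above (newer PyTorch releases phrase the message differently, so the regex would need adjusting). `parse_oom` and `fragmentation_hint` are hypothetical helpers, and the 2x threshold used to stand in for "reserved >> allocated" is an arbitrary assumption, not a PyTorch rule.

```python
import re

# Conversion factors to GiB for the units PyTorch prints in this message.
_TO_GIB = {"KiB": 1 / 1024**2, "MiB": 1 / 1024, "GiB": 1.0}
_NUM = r"([\d.]+) ([KMG]iB)"

# Matches the older "Tried to allocate ... reserved in total by PyTorch" wording.
_OOM_RE = re.compile(
    rf"Tried to allocate {_NUM} .*?"
    rf"{_NUM} total capacity; "
    rf"{_NUM} already allocated; "
    rf"{_NUM} free; "
    rf"{_NUM} reserved in total by PyTorch"
)
_FIELDS = ("requested", "total", "allocated", "free", "reserved")


def parse_oom(message):
    """Return the message's figures in GiB as a dict, or None if it does not match."""
    m = _OOM_RE.search(message)
    if m is None:
        return None
    groups = m.groups()
    return {
        name: float(groups[2 * i]) * _TO_GIB[groups[2 * i + 1]]
        for i, name in enumerate(_FIELDS)
    }


def fragmentation_hint(values, ratio=2.0):
    """Heuristic for the message's own hint: reserved much larger than allocated."""
    return values["reserved"] >= ratio * values["allocated"]
```

For the message above this yields 2.25 GiB requested against 12.71 GiB free, and 7.24 GiB reserved versus 4.94 GiB allocated, so by this (assumed) threshold the "reserved >> allocated" hint does not obviously apply.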

It seems like it doesn't even try to take advantage of the large amount of VRAM I have available, which I suspect is the problem. It would be great if there were a way to specify how much VRAM to use, or to have it automatically make better use of the available VRAM.
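There is no single "use N GB of VRAM" switch in PyTorch, but the error message itself names one knob: the `PYTORCH_CUDA_ALLOC_CONF` environment variable. Its `max_split_size_mb` option stops the caching allocator from splitting blocks larger than that size, which is aimed at fragmentation; `garbage_collection_threshold` is another option documented for recent PyTorch versions. A minimal sketch, assuming the variable is set from Python before `torch` is imported; `build_alloc_conf` is a hypothetical helper and 512 is an arbitrary example value, not a tuned recommendation.

```python
import os


def build_alloc_conf(max_split_size_mb=None, garbage_collection_threshold=None):
    """Build a PYTORCH_CUDA_ALLOC_CONF value ("key:value" pairs joined by commas)."""
    parts = []
    if max_split_size_mb is not None:
        parts.append(f"max_split_size_mb:{int(max_split_size_mb)}")
    if garbage_collection_threshold is not None:
        parts.append(f"garbage_collection_threshold:{garbage_collection_threshold}")
    return ",".join(parts)


if __name__ == "__main__":
    # The allocator reads this when CUDA is first initialized, so set it
    # before importing torch (or at least before the first CUDA allocation).
    os.environ["PYTORCH_CUDA_ALLOC_CONF"] = build_alloc_conf(max_split_size_mb=512)
    print(os.environ["PYTORCH_CUDA_ALLOC_CONF"])
```

For a batch-file launch, the cmd equivalent would be a `set PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:512` line before the webui starts. Whether it helps in this particular case is untested here.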

Do you have the correct GPU selected at the command line? My 3090 with 24 GB runs batch size 2 just fine (although batch count is really preferable):
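Why batch count is gentler on memory than batch size: batch count runs batches one after another, while batch size grows every tensor in a single pass. A rough sketch of the largest single tensor, the self-attention score matrix at the UNet's highest resolution, under these assumptions: a Stable Diffusion 1.x model with 8 attention heads, fp16 (2 bytes per element), a latent downsampling factor of 8, conditional and unconditional CFG passes batched together, and no attention splitting. `attention_scores_gib` is a hypothetical helper.

```python
def attention_scores_gib(width, height, batch_size, heads=8, cfg_copies=2,
                         bytes_per_elem=2, downsample=8):
    """Size in GiB of one full tokens x tokens attention score tensor (assumptions above)."""
    tokens = (width // downsample) * (height // downsample)  # latent grid positions
    n_bytes = batch_size * cfg_copies * heads * tokens * tokens * bytes_per_elem
    return n_bytes / 2**30


if __name__ == "__main__":
    # Two 512x768 images: one batch of 2 versus two sequential batches of 1.
    print("batch size 2:", attention_scores_gib(512, 768, batch_size=2), "GiB")
    print("batch size 1:", attention_scores_gib(512, 768, batch_size=1), "GiB")
```

Under these assumptions a 512x768 image gives 64 x 96 = 6144 tokens, and batch size 2 comes out to exactly 2.25 GiB, the same figure as the failed allocation in the original error. That match is suggestive rather than proof. With batch count 2 and batch size 1, the peak for this tensor halves to 1.125 GiB.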

a man wearing a cheese hat made from cheese

Negative prompt: bad anatomy, extra legs, extra arms, poorly drawn hands, poorly drawn feet, disfigured, head out of frame

Steps: 30, Sampler: DPM2 a, CFG scale: 7, Seed: 2064794882, Face restoration: CodeFormer, Size: 512x1024, Model hash: 402dc090, Batch size: 2, Batch pos: 0, Denoising strength: 0.69

Time taken: 85.50s

Torch active/reserved: 11299/15398 MiB, Sys VRAM: 17936/24576 MiB (72.98%)
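A status line like the one above can be reproduced from PyTorch's memory statistics. This is a sketch of one way to do it, not necessarily how the webui computes its own line: it assumes the "active/reserved" figures are peak values from `torch.cuda.memory_stats` and that "Sys VRAM" is device-wide usage from `torch.cuda.mem_get_info`. `format_vram_line` and `query_torch_mib` are hypothetical helpers.

```python
def format_vram_line(active_mib, reserved_mib, sys_used_mib, sys_total_mib):
    """Format memory figures (in MiB) in the same shape as the status line above."""
    pct = 100 * sys_used_mib / sys_total_mib
    return (f"Torch active/reserved: {active_mib}/{reserved_mib} MiB, "
            f"Sys VRAM: {sys_used_mib}/{sys_total_mib} MiB ({pct:.2f}%)")


def query_torch_mib(device=0):
    """Return (active peak, reserved peak, used, total) in MiB, or None without CUDA."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    mib = 1024 ** 2
    free, total = torch.cuda.mem_get_info(device)  # device-wide, includes other processes
    stats = torch.cuda.memory_stats(device)         # may be empty before any allocation
    return (stats.get("active_bytes.all.peak", 0) // mib,
            stats.get("reserved_bytes.all.peak", 0) // mib,
            (total - free) // mib,
            total // mib)


if __name__ == "__main__":
    figures = query_torch_mib()
    print(format_vram_line(*figures) if figures else "CUDA not available")
```

Read this way, the line above shows roughly 4 GiB held in PyTorch's cache (reserved minus active) and about 2.5 GiB of VRAM in use outside PyTorch's allocator, such as the CUDA context.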

My setup is an Amazon EC2 g5.xlarge instance, so there's only one GPU, and since the error I pasted reports 22.49 GiB total capacity, I'd say it's using the right one. I run the vanilla batch file with no modifications of any sort.

Ah, missed that. Can't help you then.

I just tried a different instance with the same A10G with 24 GB VRAM but this time with 32 GB of RAM instead of only 16 GB and all the problems are gone. Which is very strange because at no point does the whole system use more than 8 GB of RAM, so it seems like a problem with such a configuration. With 32 GB of RAM I can make all kinds of crazy huge images and max out VRAM whereas with 16 GB of RAM I get OOM errors very easily.

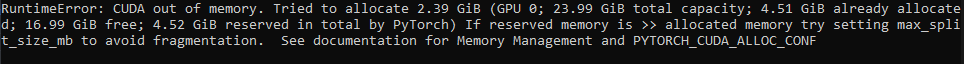

I'm using a 3090 Ti with 24 GB of VRAM, and my system has 16 GB of RAM. That should be more than enough to generate a single 960x960 image with batch size 1, but I get CUDA out of memory errors even though the error shows lots of free VRAM. I tried both with and without --medvram --opt-split-attention, with no luck. It makes no sense that I have 16.99 GB of VRAM free but it somehow cannot allocate 2.39 GB. I shouldn't need to buy another 16 GB of RAM just to run this at the level expected for my card.
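As its name suggests, `--opt-split-attention` is about not materializing the whole attention score matrix at once. The general idea can be sketched in plain Python: process queries in chunks, so only `chunk_size x len(k)` scores exist at any moment, while every output row comes out the same as with full attention. This toy version is an illustration of the technique only. The webui's real implementation works on GPU tensors and chooses its own chunking, and `attention` here is a hypothetical helper whose `peak` value merely counts score entries as a stand-in for memory.

```python
import math


def _softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v, chunk_size=None):
    """Scaled dot-product attention over lists of vectors, queries processed in chunks.

    Returns (outputs, peak), where peak is the largest number of score entries
    held at once.
    """
    chunk = chunk_size or len(q)
    scale = 1.0 / math.sqrt(len(q[0]))
    out, peak = [], 0
    for start in range(0, len(q), chunk):
        # Only this chunk's rows of the len(q) x len(k) score matrix exist at once.
        scores = [[scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
                  for qi in q[start:start + chunk]]
        peak = max(peak, len(scores) * len(k))
        for row in scores:
            w = _softmax(row)
            out.append([sum(wj * vj[d] for wj, vj in zip(w, v))
                        for d in range(len(v[0]))])
    return out, peak
```

The trade-off is more, smaller operations in exchange for a lower peak: with 8 queries and 8 keys, a chunk size of 2 keeps 16 scores alive instead of 64.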


I think the system-RAM observation above (more RAM making the OOM errors go away) may be related to my problem. It's very strange that the CUDA OOM error reports free VRAM, yet adding system RAM can partly remedy the issue. I hope the actual underlying cause is found soon.

I don't know if this is still an issue. If it is, and it only affects certain cards, please open a new issue and link back to this one if they are related.