ElegantRL

Massively Parallel Deep Reinforcement Learning. 🔥

Traceback (most recent call last): File "/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd.py", line 1491, in _exec pydev_imports.execfile(file, globals, locals) # execute the script File "/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_imps/_pydev_execfile.py", line 18, in execfile exec(compile(contents+"\n", file, 'exec'), glob, loc) File...

I get the following error while running elegantrl_models.py: TypeError: __init__() got an unexpected keyword argument 'agent', on line 58 of /agents/elegantrl_models.py. I understand "agent" argument in Arguments class...

Test env: Colab, SAC, env_num == 1. In train/run.py, line 93: `trajectory, step = agent.explore_env(env, args.num_seed_steps * args.num_steps_per_episode, True)`. Error message: explore_one_env() takes 3 positional arguments but 4 were given...
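
The arity mismatch in the report above can be reproduced in isolation. This is only a sketch of the error's shape: `ToyAgent` and its method body are hypothetical stand-ins, not ElegantRL code. A bound method that declares two parameters besides `self` accepts 3 positional arguments in total, so passing a third value at the call site makes 4.

```python
class ToyAgent:
    """Hypothetical stand-in agent; not the library's implementation."""

    def explore_one_env(self, env, target_step):
        # Declares self + 2 parameters, i.e. 3 positional arguments in total.
        return [], target_step


agent = ToyAgent()
message = ""
try:
    # A third value (as with the trailing `True` in the report) makes
    # 4 positional arguments once `self` is counted.
    agent.explore_one_env("dummy-env", 10, True)
except TypeError as err:
    message = str(err)

print(message)
```

Under this reading, either the caller drops the extra argument or the method gains a matching parameter; which of the two the library intends is not stated in the report.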

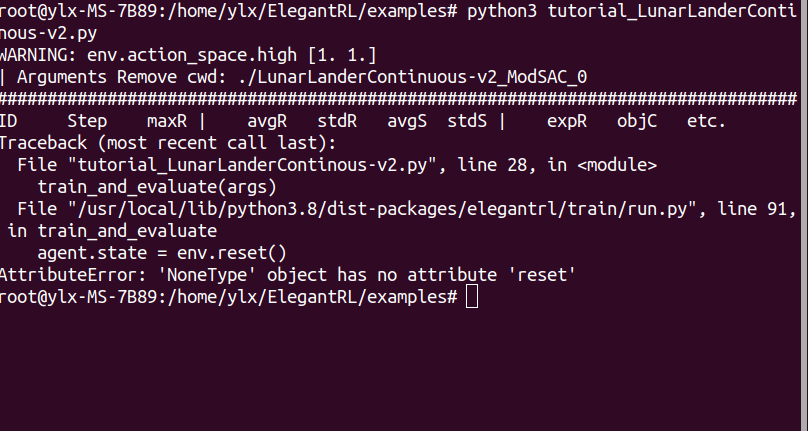

None of the examples in the official website tutorials run. For example:

1. https://elegantrl.readthedocs.io/en/latest/tutorial/LunarLanderContinuous-v2.html

```
https://github.com/AI4Finance-Foundation/ElegantRL/blob/master/examples/tutorial_LunarLanderContinous-v2.py
```

2. https://elegantrl.readthedocs.io/en/latest/algorithms/dqn.html

```
import torch
from elegantrl.run import train_and_evaluate
from elegantrl.config import Arguments
from elegantrl.train.config import build_env
from elegantrl.agents.AgentDQN import AgentDQN
```

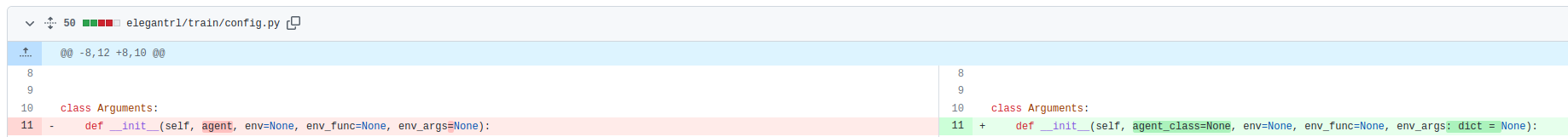

Hello, following this commit: https://github.com/AI4Finance-Foundation/ElegantRL/commit/c1d66a81f0fcc804f2dc3d13b8834a6cad1494bc a breaking change was introduced: the constructor of `Arguments` now expects `agent_class` instead of `agent`. However, FinRL and FinRL-Meta are still using the old...
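
One way downstream code could tolerate the keyword rename described above is a small wrapper that accepts the legacy `agent` keyword and forwards it as `agent_class`. This is a sketch under assumptions: the `Arguments` class below is a hypothetical stand-in (its `env` parameter is invented for illustration), and `make_arguments` and `DummyAgent` are not part of ElegantRL, FinRL, or FinRL-Meta.

```python
class Arguments:
    """Hypothetical stand-in: takes `agent_class`, as the issue describes."""

    def __init__(self, agent_class=None, env=None):
        self.agent_class = agent_class
        self.env = env  # `env` is an assumed parameter, for illustration only


def make_arguments(**kwargs):
    """Build Arguments, treating the legacy `agent` keyword as an alias."""
    if "agent" in kwargs:
        if "agent_class" in kwargs:
            raise TypeError("pass either `agent` or `agent_class`, not both")
        kwargs["agent_class"] = kwargs.pop("agent")
    return Arguments(**kwargs)


class DummyAgent:
    """Placeholder agent class, for illustration only."""


# Old-style call sites keep working through the wrapper.
args = make_arguments(agent=DummyAgent, env="dummy-env")
print(args.agent_class is DummyAgent)
```

The wrapper keeps the alias in one place, so callers can migrate to `agent_class` gradually instead of all at once.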

https://github.com/AI4Finance-Foundation/ElegantRL/blob/8b14594e8e9c8c94dc6591d691e8e636f9b8587c/elegantrl/train/replay_buffer.py#L35 I see that the replay buffer was updated on the main branch: next_state was added, and mask was removed and replaced with done. However, when I look at DQN's explore_one_env() function, next_state is not passed in, and the convert_trajectory(traj_list, last_done) function used afterwards still uses mask?

https://github.com/AI4Finance-Foundation/ElegantRL/blob/5dbfb6037b4201aa2f8b5b6b855b04a303b18581/elegantrl/train/replay_buffer.py#L309 https://github.com/AI4Finance-Foundation/ElegantRL/blob/5dbfb6037b4201aa2f8b5b6b855b04a303b18581/elegantrl/train/replay_buffer.py#L275

Environment: Colab. ElegantRL models: SAC, ModSAC, and TD3 were tested, and all raise the same error below. Issue: __init__() takes 4 positional arguments but 5 were given 31 trained_model = agent.train_model(model=model, 32 cwd=cwd, ---> 33 total_timesteps=break_step) 34 else: 35...

1. In train/run.py, line 35, "if_use_per=args.if_use_per": args has no attribute "if_use_per". 2. In train/evaluator.py, the code should import wandb and call "wandb.init()". 3. In demo_IsaacGym.py, if I change the Agent such...
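
For the missing-attribute report in item 1, a defensive read with `getattr` avoids the AttributeError when a config object lacks the field. This is a minimal sketch: the `SimpleNamespace` config and its `batch_size` field are hypothetical, and the fallback value `False` is an assumption rather than the library's documented default.

```python
from types import SimpleNamespace

# Hypothetical config object that lacks the `if_use_per` attribute.
args = SimpleNamespace(batch_size=256)

# Fall back to an assumed default instead of raising AttributeError.
if_use_per = getattr(args, "if_use_per", False)
print(if_use_per)
```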