Bert-Chinese-Text-Classification-Pytorch

Chinese text classification with BERT and ERNIE

A. Darius B. The Glorious Executioner C. Gragas D. Fiddlesticks

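Set the number of output classes equal to the number of labels. _Originally posted by @daonna in https://github.com/649453932/Bert-Chinese-Text-Classification-Pytorch/issues/17#issuecomment-569576271_ I have a similar problem: my class file contains 22 lines and the labels run from 0 to 21, but I still get an error.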
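One thing worth checking in a case like this is whether every label in the data actually falls inside the range implied by the class list, and whether the class file contains blank or duplicate lines. The following is a minimal sketch of such a sanity check, assuming one class name per line and integer labels; `check_labels` and its arguments are hypothetical names, not part of the repository.

```python
def check_labels(class_names, labels):
    """Return a list of problems; an empty list means labels and classes agree."""
    problems = []
    # Drop blank lines, which silently change the class count.
    names = [n.strip() for n in class_names if n.strip()]
    if len(names) != len(class_names):
        problems.append("class list contains blank lines")
    if len(set(names)) != len(names):
        problems.append("class list contains duplicate names")
    num_classes = len(names)
    # Valid labels are 0 .. num_classes - 1 inclusive.
    bad = sorted({y for y in labels if not 0 <= y < num_classes})
    if bad:
        problems.append(
            "labels outside 0..{}: {}".format(num_classes - 1, bad)
        )
    return problems
```

Running it over the class file lines and the label column of each data split shows whether the class count passed to the model matches the data.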

Prediction code for a single text sample

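```python
import torch
from importlib import import_module

# Maps the predicted class index to its label name.
key = {
    0: 'finance',
    1: 'realty',
    2: 'stocks',
    3: 'education',
    4: 'science',
    5: 'society',
    6: 'politics',
    7: 'sports',
    8: 'game',
    9: 'entertainment',
    # ... (the snippet is truncated here in the source)
}
```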

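When I run the conversion with the project's bundled convert_tf_checkpoint_to_pytorch.py, I get the error shown in the screenshot above. What should I do? Any help would be appreciated. The official BERT conversion script raises the same error ...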

Is this model's maximum sequence length 512?
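The standard BERT-base checkpoints are trained with 512 position embeddings, so a token sequence longer than that (counting `[CLS]` and `[SEP]`) has to be truncated or split before it reaches the model. Below is a minimal sketch of fixed-length padding and truncation, assuming the token ids have already been computed; `pad_or_truncate`, `pad_size`, `pad_id`, and `max_positions` are illustrative names rather than the repository's own.

```python
def pad_or_truncate(token_ids, pad_size=32, pad_id=0, max_positions=512):
    """Return (ids, mask, seq_len) with ids cut or padded to exactly pad_size."""
    if not 0 < pad_size <= max_positions:
        raise ValueError("pad_size must be between 1 and max_positions")
    # Simple head truncation: tokens past pad_size are dropped,
    # including a trailing [SEP] if one was present.
    ids = list(token_ids[:pad_size])
    seq_len = len(ids)
    # The mask marks real tokens with 1 and padding with 0.
    mask = [1] * seq_len + [0] * (pad_size - seq_len)
    ids += [pad_id] * (pad_size - seq_len)
    return ids, mask, seq_len
```

Keeping `pad_size` well below the limit for short texts also reduces memory use, since attention cost grows with sequence length.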

The first few thousand steps are stable: about half of the GPU memory is in use, training runs normally, and the loss decreases. After that, GPU memory usage suddenly keeps growing until it runs out, and the error is almost always raised at this point. Any help would be appreciated.
  File "/home/anaconda3/envs/python2.7/lib/python2.7/site-packages/torch/nn/modules/module.py", line 532, in __call__
    result = self.forward(*input, **kwargs)
  File "/home/embed.py", line 84, in forward
    text_encoded_layer, _ = self.bert_model(text_var, text_segments_ids, output_all_encoded_layers=False)
  File "/home/anaconda3/envs/python2.7/lib/python2.7/site-packages/torch/nn/modules/module.py", line 532, in __call__...

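How are the per-class probability values in the model's prediction output computed? I want to normalize the value for each class to the 0-1 range, but I don't know how to do the conversion.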
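Classification models of this kind typically emit one raw score (logit) per class, and a softmax turns those scores into values that each lie in the 0-1 range and sum to 1. Here is a minimal sketch in plain Python, assuming the model output for one text is a list of logits; `softmax` is a hand-written helper, not a function from the repository. With PyTorch tensors, `torch.softmax(logits, dim=-1)` performs the same computation.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    # Subtracting the maximum keeps exp() from overflowing on large scores
    # and does not change the result.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits):
    """Return (index of the most likely class, its probability)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs[best]
```

The predicted class is the same whether the argmax is taken over the logits or over the probabilities, since softmax preserves ordering.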

When I run the program, the line `from pytorch_pretrained import BertModel, BertTokenizer` fails with `No module named 'pytorch_pretrained'`. What is the problem?
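In this repository `pytorch_pretrained` appears to be a package directory shipped alongside the scripts rather than a pip-installable library, so the import would typically fail when the script is started from a different working directory. The sketch below checks for that situation under this assumption; `ensure_importable` and `repo_root` are hypothetical names.

```python
import importlib
import importlib.util
import os
import sys


def ensure_importable(package, repo_root):
    """Make `package` importable if it exists as a directory under repo_root.

    Returns True when the package can be found afterwards, False otherwise.
    """
    if importlib.util.find_spec(package) is not None:
        return True
    if os.path.isdir(os.path.join(repo_root, package)):
        # Put the repository root on the import path, then re-check.
        sys.path.insert(0, repo_root)
        importlib.invalidate_caches()
        return importlib.util.find_spec(package) is not None
    return False
```

Calling `ensure_importable("pytorch_pretrained", "/path/to/repo")` before the failing import, with the path adjusted to the local checkout, shows whether the package directory is present at all.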

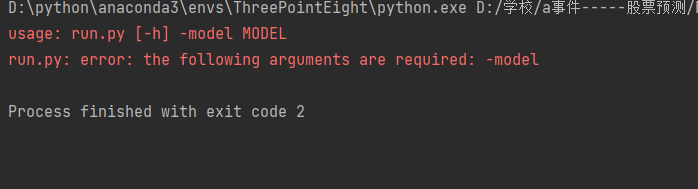

Problem running the code

Has anyone run into the problem below? How can it be fixed? Please let me know.