1k-validators-be


For the candidates JSON, 'valid' and 'validity' are sometimes not aligned

I run the KSM & DOT 1KV Prometheus exporters.

The underlying data comes from here: https://kusama.w3f.community/candidates and https://polkadot.w3f.community/candidates

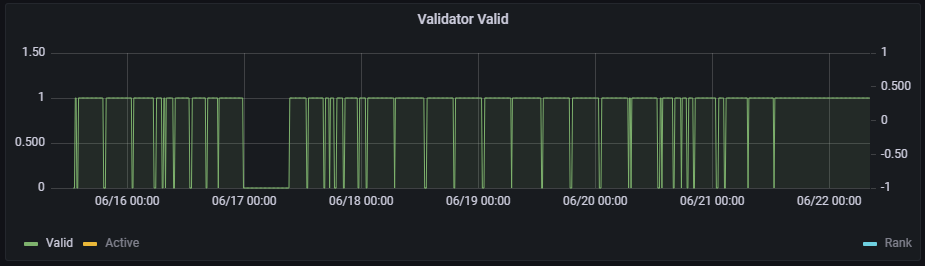

During monitoring, we see the `valid` indicator flip to false and then back to true.

There seems to be no reason for it being invalid:

```javascript
const moment = require('moment')

// `state`, `t` and `Candidate` come from the exporter's own context
const c = new Candidate(state.candidates.find(cand => cand.stash === t.stash))

// ...

console.log('INVALID: '
  + '- ' + moment().format('YYYY.MM.DD HH:mm:ss') + ': \n'
  + '- ' + c.stash + ' \n'
  + JSON.stringify(c.valid) + ' \n'
  + JSON.stringify(c.validity) + ' \n'
  + JSON.stringify(c.invalidityReasons))
```

In the output below, `valid` is false, every `validity` item is true, and `invalidityReasons` is undefined:

```
INVALID: - 2022.06.22 05:55:32:
- Cad3MXUdmKLPyosPJ67ZhkQh7CjKjBFvb4hyjuNwnfaAGG5
false
[{"valid":true,"type":"UNCLAIMED_REWARDS","details":"","updated":1655418639661,"_id":"62abaf0f165c821b6440a08a"},
{"valid":true,"type":"CLIENT_UPGRADE","details":"","updated":1655868432836,"_id":"62b28c1034bf709febd28263"},
{"valid":true,"type":"IDENTITY","details":"","updated":1655870963143,"_id":"62b295f334bf709febdacb3d"},
{"valid":true,"type":"CONNECTION_TIME","details":"","updated":1655875568941,"_id":"62b2a7f0bf178f1d6b28d0af"},
{"valid":true,"type":"ACCUMULATED_OFFLINE_TIME","details":"","updated":1655875569007,"_id":"62b2a7f1bf178f1d6b28d0cc"},
{"valid":true,"type":"COMMISION","details":"","updated":1655875569024,"_id":"62b2a7f1bf178f1d6b28d0db"},
{"valid":true,"type":"SELF_STAKE","details":"","updated":1655875569070,"_id":"62b2a7f1bf178f1d6b28d0e9"},
{"valid":true,"type":"BLOCKED","details":"","updated":1655875569101,"_id":"62b2a7f1bf178f1d6b28d0f7"},
{"valid":true,"type":"VALIDATE_INTENTION","details":"","updated":1655875680818,"_id":"62b2a860bf178f1d6b292cc8"},
{"valid":true,"type":"ONLINE","details":"","updated":1655876542206,"_id":"62b2abbec4087169bf27fa0e"}]
undefined
```
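A monitoring check for exactly the mismatch shown above could look like the sketch below. It assumes each candidate object carries the `stash`, `valid` and `validity` fields seen in the output; `findMisaligned` is a hypothetical helper, not part of the 1KV backend, and the extra sample stashes are made-up placeholders.

```javascript
// Hypothetical helper: stashes whose global `valid` flag is false even though
// every entry in their (non-empty) `validity` list reports valid: true.
function findMisaligned (candidates) {
  return candidates
    .filter(c => c.valid === false)
    .filter(c => Array.isArray(c.validity) && c.validity.length > 0)
    .filter(c => c.validity.every(v => v.valid === true))
    .map(c => c.stash)
}

// Sample shaped like the output above; 'stash-B' and 'stash-C' are made-up placeholders.
const sample = [
  { stash: 'Cad3MXUdmKLPyosPJ67ZhkQh7CjKjBFvb4hyjuNwnfaAGG5', valid: false,
    validity: [{ valid: true, type: 'ONLINE' }, { valid: true, type: 'IDENTITY' }] },
  { stash: 'stash-B', valid: false, validity: [{ valid: false, type: 'ONLINE' }] },
  { stash: 'stash-C', valid: true, validity: [{ valid: true, type: 'ONLINE' }] }
]

// Only the first stash is reported: stash-B has a failing check, stash-C is valid.
console.log(findMisaligned(sample))
```

Empty `validity` lists are skipped on purpose, since a candidate with no checks recorded yet is not evidence of a mismatch.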

We get around this with a small helper function, but it's not ideal.

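```javascript
// cross-check valid with validity: trust `valid` when it is true,
// otherwise treat the candidate as valid if no validity entry has failed
function checkValid (valid, validity) {
  return valid || validity.every(v => v.valid !== false)
}
```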

The helper was deployed to the Prometheus exporters yesterday (21/06 at 12:40), and you can see that from that point onwards the data shows the candidate as valid constantly.

I think the `valid` boolean flag can't be trusted: after a restart it stays false until the backend has iterated through all candidates, which can take anywhere from 2 to 5 minutes. We've also seen crashes caused by querying the 1KV backend endpoints too frequently, hence @wpank's suggestion to convert it to a microservice architecture.

> We've also seen crashes due to too frequent querying of the 1kv backend endpoints

Ok, we limit calls to one every 10 minutes and cache the result. Do I need to reduce that even further?
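Limiting calls to one every 10 minutes amounts to a time-to-live (TTL) cache in front of the request. Below is a minimal sketch, assuming the request is wrapped in an async `loader` function; `cachedLoader` is a hypothetical name, not the exporters' actual code, and the usage comment assumes Node 18+ for the global `fetch`.

```javascript
// Hypothetical TTL cache: calls `loader` at most once per `ttlMs` and shares a
// single in-flight request between concurrent callers. `now` is injectable for tests.
function cachedLoader (loader, ttlMs, now = () => Date.now()) {
  let value
  let fetchedAt = -Infinity
  let inFlight = null

  return async function get () {
    if (now() - fetchedAt < ttlMs) return value // still fresh: serve from cache
    if (inFlight) return inFlight               // a refresh is already running

    const p = Promise.resolve()
      .then(() => loader())
      .then(v => { value = v; fetchedAt = now(); return v })
    inFlight = p
    const clear = () => { inFlight = null }
    p.then(clear, clear) // reset once settled; a failed load is retried on the next call
    return p
  }
}

const TEN_MINUTES = 10 * 60 * 1000

// Usage (Node 18+ for the global fetch):
// const getCandidates = cachedLoader(
//   () => fetch('https://kusama.w3f.community/candidates').then(r => r.json()),
//   TEN_MINUTES)
// const candidates = await getCandidates()
```

Sharing the in-flight promise matters as much as the TTL: without it, several scrapes arriving while the cache is empty would all hit the backend at once.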

I think state updates [c|sh]ould be based on a planned cycle. Relying on a system restart is less than ideal.
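A planned refresh cycle, rather than relying on a restart, might be scheduled roughly as follows. This is a sketch under the assumption that the refresh work is a single async function; `startCycle` and `refreshAll` are hypothetical names. Each run is scheduled only after the previous one finishes, so a slow pass over all candidates never overlaps the next.

```javascript
// Hypothetical scheduler: runs `task` immediately, then again `intervalMs`
// after each run finishes, so a slow run never overlaps the next one.
// Errors are passed to `onError` and do not stop the cycle.
function startCycle (task, intervalMs, onError = console.error) {
  let timer = null
  let stopped = false

  async function run () {
    try {
      await task()
    } catch (err) {
      onError(err)
    }
    if (!stopped) timer = setTimeout(run, intervalMs)
  }

  run()

  // Call the returned function to end the cycle.
  return function stop () {
    stopped = true
    if (timer !== null) clearTimeout(timer)
  }
}

// Usage: refresh every 10 minutes until stop() is called.
// `refreshAll` is a hypothetical async function that reloads candidate state.
// const stop = startCycle(refreshAll, 10 * 60 * 1000)
```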

Moving to a microservice architecture is something I can help with. Perhaps we should make a project proposal?

Instead of loading data for all candidates, we might be able to reduce traffic and load by promoting direct calls for individual candidates. I found this service entirely by 'hacking' around. The data from the direct call is not the same, but it might be an easy interim fix:

https://kusama.w3f.community/candidate/HyLisujX7Cr6D7xzb6qadFdedLt8hmArB6ZVGJ6xsCUHqmx
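Fetching a single candidate instead of the whole list could look like the sketch below. The URL pattern is taken from the Kusama link above; that Polkadot exposes the same `/candidate/<stash>` path is an assumption, as are the helper names. The response shape of this endpoint is not documented here (and, as noted, differs from the list). Node 18+ is assumed for the global `fetch`.

```javascript
// Hypothetical helper: builds the per-candidate URL seen above.
// The polkadot variant assumes the same path as kusama (unverified).
function candidateUrl (network, stash) {
  if (!['kusama', 'polkadot'].includes(network)) {
    throw new Error(`unknown network: ${network}`)
  }
  return `https://${network}.w3f.community/candidate/${encodeURIComponent(stash)}`
}

// Hypothetical fetcher (Node 18+ global fetch); throws on non-2xx responses.
async function fetchCandidate (network, stash) {
  const res = await fetch(candidateUrl(network, stash))
  if (!res.ok) throw new Error(`HTTP ${res.status} for ${stash}`)
  return res.json()
}
```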

I vaguely remember @wpank mentioning that users should check whether any of the `validity[].valid` fields is false, instead of trusting the global `valid` flag.
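Following that advice, a consumer can derive validity from the `validity` entries and report which checks failed, instead of reading the global `valid` flag. A minimal sketch: `failedChecks` and `isValid` are hypothetical names, the entry shape (`valid`, `type`, `details`) is copied from the output earlier in this thread, and the sample candidate and its failure are made up for illustration.

```javascript
// Hypothetical helpers: derive a candidate's status from its validity entries.
// A candidate with no validity entries counts as valid here; adjust if that
// should be treated as "unknown" instead.
function failedChecks (candidate) {
  return (candidate.validity || [])
    .filter(v => v.valid === false)
    .map(v => ({ type: v.type, details: v.details }))
}

function isValid (candidate) {
  return failedChecks(candidate).length === 0
}

// Made-up example candidate with one failing check.
const candidate = {
  stash: 'example-stash',
  valid: false,
  validity: [
    { valid: true, type: 'ONLINE', details: '' },
    { valid: false, type: 'SELF_STAKE', details: 'example reason' }
  ]
}

console.log(isValid(candidate), failedChecks(candidate))
```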

> Instead of loading data for all candidates, we might be able to reduce traffic / load by promoting the use of direct calls for individual candidates. I found this service completely by 'hacking' around... the data from the direct call is not the same, but it might be an easy interim fix.
>
> https://kusama.w3f.community/candidate/HyLisujX7Cr6D7xzb6qadFdedLt8hmArB6ZVGJ6xsCUHqmx

We have a public endpoint people can use:

- http://api.metaspan.io/api/kusama/candidate

- http://api.metaspan.io/api/kusama/candidate/HyLisujX7Cr6D7xzb6qadFdedLt8hmArB6ZVGJ6xsCUHqmx

The data is cached for 10 minutes, which should reduce the load on the 1KV backend.

@dcolley I know that Polkachu does some caching of the data, but from time to time he may pull it while the API is down. Are you doing anything to make sure the cached data is correct and not a 404? I made this dashboard a while back that shows the API uptime: https://ccris02.github.io/1KV_API/

@ccris02 I implemented a check for 404 and even 500 responses today. If the upstream server is not available, the service serves the cached copy instead. The response includes a timestamp giving the date and time of the data.
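The fallback described here, serving the last good copy when upstream fails, might be sketched as follows. `staleOnError` is a hypothetical name and the upstream request is injected so it can be stubbed. This sketch falls back on any non-2xx status or network error, not only 404 and 500, and writes the timestamp in ISO 8601, which is an assumption about the format.

```javascript
// Hypothetical stale-on-error wrapper: `fetchUpstream` is any function that
// resolves to a fetch-style Response. Fresh data is wrapped with a timestamp;
// on a non-2xx status (404, 500, ...) or a network error, the last good copy
// is served instead. With nothing cached yet, the error is rethrown.
function staleOnError (fetchUpstream) {
  let lastGood = null // { updatedAt, candidates }

  return async function get () {
    try {
      const res = await fetchUpstream()
      if (!res.ok) throw new Error(`upstream HTTP ${res.status}`)
      const candidates = await res.json()
      lastGood = { updatedAt: new Date().toISOString(), candidates }
    } catch (err) {
      if (lastGood === null) throw err
    }
    return lastGood
  }
}

// Usage (Node 18+ for the global fetch):
// const getCandidates = staleOnError(() => fetch('https://kusama.w3f.community/candidates'))
```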

The data format simply wraps the upstream response as follows:

```
{
  "updatedAt": "YYYY/MM/DDTHH:mm:ssZ",
  "candidates": [
    //...
  ]
}
```
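A consumer of this wrapper might check how old the data is before trusting it. A sketch, assuming `updatedAt` can be parsed by `Date.parse`: ISO 8601 strings parse reliably, whereas a slash-separated form like the pattern above may not, so that is worth verifying against the live service. `isStale` and the 15-minute threshold are hypothetical.

```javascript
// Hypothetical check: is the wrapped payload older than `maxAgeMs`?
// An unparseable timestamp is treated as stale.
function isStale (payload, maxAgeMs, now = Date.now()) {
  const ts = Date.parse(payload.updatedAt)
  if (Number.isNaN(ts)) return true
  return now - ts > maxAgeMs
}

const payload = { updatedAt: '2022-06-22T05:55:32Z', candidates: [] }
const FIFTEEN_MINUTES = 15 * 60 * 1000

// 4 min 28 s old at 06:00:00, so not stale
console.log(isStale(payload, FIFTEEN_MINUTES, Date.parse('2022-06-22T06:00:00Z'))) // false
```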