kvm-guest-drivers-windows

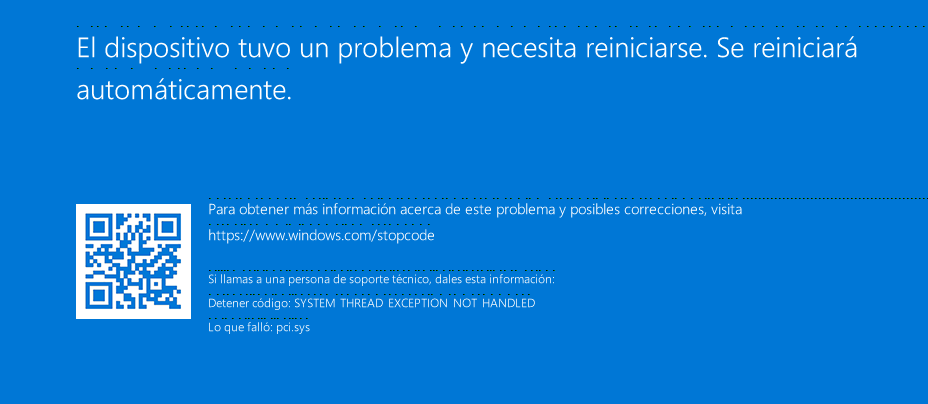

virtio-scsi: Windows Server 2022 BSOD on startup after installing all updates

Describe the bug: Windows Server 2022 Standard installed on a virtio-scsi disk gets a BSOD on startup after installing all Windows updates.

To Reproduce Steps to reproduce the behaviour:

- Install an evaluation version using an ISO file from this link: https://www.microsoft.com/en-us/evalcenter/download-windows-server-2022

- Use a virtio scsi device for Windows with virtio-win-0.1.229.iso from https://fedorapeople.org/groups/virt/virtio-win/direct-downloads/

- Install all Windows updates without changing any other option.

- Reboot the VM to apply the Windows updates.

Expected behavior Normal boot to a usable Windows.

Screenshots

Host:

- Distro: Proxmox 7.3

- Kernel version 5.15.74

- QEMU version 7.2

- QEMU command line: /usr/bin/kvm -id 60000 -name windows-server-2022-test,debug-threads=on -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/60000.qmp,server=on,wait=off -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5 -mon chardev=qmp-event,mode=control -pidfile /var/run/qemu-server/60000.pid -daemonize -smbios type=1,uuid=219bdc09-e434-4c0a-83e1-63fdecd28ed9 -smp 1,sockets=1,cores=8,maxcpus=8 -device host-x86_64-cpu,id=cpu2,socket-id=0,core-id=1,thread-id=0 -device host-x86_64-cpu,id=cpu3,socket-id=0,core-id=2,thread-id=0 -device host-x86_64-cpu,id=cpu4,socket-id=0,core-id=3,thread-id=0 -device host-x86_64-cpu,id=cpu5,socket-id=0,core-id=4,thread-id=0 -device host-x86_64-cpu,id=cpu6,socket-id=0,core-id=5,thread-id=0 -device host-x86_64-cpu,id=cpu7,socket-id=0,core-id=6,thread-id=0 -device host-x86_64-cpu,id=cpu8,socket-id=0,core-id=7,thread-id=0 -nodefaults -boot menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg -vnc unix:/var/run/qemu-server/60000.vnc,password=on -no-hpet -cpu host,+hv-tlbflush,hv_ipi,hv_relaxed,hv_reset,hv_runtime,hv_spinlocks=0x1fff,hv_stimer,hv_synic,hv_time,hv_vapic,hv_vpindex,+kvm_pv_eoi,+kvm_pv_unhalt -m size=1024,slots=255,maxmem=4194304M -object memory-backend-ram,id=ram-node0,size=1024M -numa node,nodeid=0,cpus=0-7,memdev=ram-node0 -object memory-backend-ram,id=mem-dimm0,size=512M -device pc-dimm,id=dimm0,memdev=mem-dimm0,node=0 -object memory-backend-ram,id=mem-dimm1,size=512M -device pc-dimm,id=dimm1,memdev=mem-dimm1,node=0 -object memory-backend-ram,id=mem-dimm2,size=512M -device pc-dimm,id=dimm2,memdev=mem-dimm2,node=0 -object memory-backend-ram,id=mem-dimm3,size=512M -device pc-dimm,id=dimm3,memdev=mem-dimm3,node=0 -object memory-backend-ram,id=mem-dimm4,size=512M -device pc-dimm,id=dimm4,memdev=mem-dimm4,node=0 -object memory-backend-ram,id=mem-dimm5,size=512M -device pc-dimm,id=dimm5,memdev=mem-dimm5,node=0 -object 
memory-backend-ram,id=mem-dimm6,size=512M -device pc-dimm,id=dimm6,memdev=mem-dimm6,node=0 -object memory-backend-ram,id=mem-dimm7,size=512M -device pc-dimm,id=dimm7,memdev=mem-dimm7,node=0 -object memory-backend-ram,id=mem-dimm8,size=512M -device pc-dimm,id=dimm8,memdev=mem-dimm8,node=0 -object memory-backend-ram,id=mem-dimm9,size=512M -device pc-dimm,id=dimm9,memdev=mem-dimm9,node=0 -object memory-backend-ram,id=mem-dimm10,size=512M -device pc-dimm,id=dimm10,memdev=mem-dimm10,node=0 -object memory-backend-ram,id=mem-dimm11,size=512M -device pc-dimm,id=dimm11,memdev=mem-dimm11,node=0 -object memory-backend-ram,id=mem-dimm12,size=512M -device pc-dimm,id=dimm12,memdev=mem-dimm12,node=0 -object memory-backend-ram,id=mem-dimm13,size=512M -device pc-dimm,id=dimm13,memdev=mem-dimm13,node=0 -object iothread,id=iothread-virtioscsi0 -device pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e -device pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f -device pci-bridge,id=pci.3,chassis_nr=3,bus=pci.0,addr=0x5 -device vmgenid,guid=e342a64d-ed14-444e-80fd-2f345c8a6893 -device piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2 -device usb-tablet,id=tablet,bus=uhci.0,port=1 -chardev socket,id=tpmchar,path=/var/run/qemu-server/60000.swtpm -tpmdev emulator,id=tpmdev,chardev=tpmchar -device tpm-tis,tpmdev=tpmdev -device VGA,id=vga,bus=pci.0,addr=0x2,edid=off -device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3,free-page-reporting=on -iscsi initiator-name=iqn.1993-08.org.debian:01:bb1c51cd390 -device virtio-scsi-pci,id=virtioscsi0,bus=pci.3,addr=0x1,iothread=iothread-virtioscsi0 -drive file=/dev/rbd-pve/d2e57a63-c384-4ec7-9a76-3acfa9442b5c/SSD-HC-TEST/vm-60000-disk-0,if=none,id=drive-scsi0,discard=on,format=raw,cache=none,aio=io_uring,detect-zeroes=unmap -device scsi-hd,bus=virtioscsi0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,rotation_rate=1,bootindex=100 -device ahci,id=ahci0,multifunction=on,bus=pci.0,addr=0x7 -drive 
file=/mnt/pve/cephfs/template/iso/SERVER_EVAL_x64FRE_es-es_2022.iso,if=none,id=drive-sata0,media=cdrom,aio=io_uring -device ide-cd,bus=ahci0.0,drive=drive-sata0,id=sata0,bootindex=101 -drive file=/mnt/pve/cephfs/template/iso/virtio-win-0.1.229.iso,if=none,id=drive-sata1,media=cdrom,aio=io_uring -device ide-cd,bus=ahci0.1,drive=drive-sata1,id=sata1,bootindex=102 -netdev type=tap,id=net0,ifname=tap60000i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on -device virtio-net-pci,mac=22:E6:58:F6:6C:8E,netdev=net0,bus=pci.0,addr=0x12,id=net0,rx_queue_size=1024,tx_queue_size=1024 -rtc driftfix=slew,base=localtime -machine type=pc-i440fx-7.1+pve0 -global kvm-pit.lost_tick_policy=discard

VM:

- Windows version: Windows Server 2022 Standard Edition

- Which driver has a problem: virtio-scsi

Additional context The problem occurs with UEFI or without it.

@cosmedd Would it be possible to share the crash dump file?

Thanks, Vadim.

Hi @cosmedd ,

Could you tell me which update patches you installed? I tried in my test environment and could not reproduce it. Here are the details of my tests:

Used versions:

- kernel-5.14.0-249.el9.x86_64

- qemu-kvm-7.2.0-8.el9.x86_64

- virtio-win-0.1.229-1.noarch

- seabios-bin-1.16.1-1.el9.noarch

- Guest OS: Windows 2022 standard version

qemu-commands:

cat boot.sh

/usr/libexec/qemu-kvm

-name windows-server-2022-test,debug-threads=on -no-shutdown

-smbios type=1,uuid=219bdc09-e434-4c0a-83e1-63fdecd28ed9

-smp 1,sockets=1,cores=8,maxcpus=8

-device host-x86_64-cpu,id=cpu2,socket-id=0,core-id=1,thread-id=0

-device host-x86_64-cpu,id=cpu3,socket-id=0,core-id=2,thread-id=0

-device host-x86_64-cpu,id=cpu4,socket-id=0,core-id=3,thread-id=0

-device host-x86_64-cpu,id=cpu5,socket-id=0,core-id=4,thread-id=0

-device host-x86_64-cpu,id=cpu6,socket-id=0,core-id=5,thread-id=0

-device host-x86_64-cpu,id=cpu7,socket-id=0,core-id=6,thread-id=0

-device host-x86_64-cpu,id=cpu8,socket-id=0,core-id=7,thread-id=0

-nodefaults -boot menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg

-vnc :0

-no-hpet

-cpu host,+hv-tlbflush,hv_ipi,hv_relaxed,hv_reset,hv_runtime,hv_spinlocks=0x1fff,hv_stimer,hv_synic,hv_time,hv_vapic,hv_vpindex,+kvm_pv_eoi,+kvm_pv_unhalt

-m size=1024,slots=255,maxmem=4194304M

-object memory-backend-ram,id=ram-node0,size=1024M

-numa node,nodeid=0,cpus=0-7,memdev=ram-node0 -object memory-backend-ram,id=mem-dimm0,size=512M

-device pc-dimm,id=dimm0,memdev=mem-dimm0,node=0

-object memory-backend-ram,id=mem-dimm1,size=512M

-device pc-dimm,id=dimm1,memdev=mem-dimm1,node=0

-object memory-backend-ram,id=mem-dimm2,size=512M

-device pc-dimm,id=dimm2,memdev=mem-dimm2,node=0

-object memory-backend-ram,id=mem-dimm3,size=512M

-device pc-dimm,id=dimm3,memdev=mem-dimm3,node=0

-object memory-backend-ram,id=mem-dimm4,size=512M

-device pc-dimm,id=dimm4,memdev=mem-dimm4,node=0

-object memory-backend-ram,id=mem-dimm5,size=512M

-device pc-dimm,id=dimm5,memdev=mem-dimm5,node=0

-object memory-backend-ram,id=mem-dimm6,size=512M

-device pc-dimm,id=dimm6,memdev=mem-dimm6,node=0

-object memory-backend-ram,id=mem-dimm7,size=512M

-device pc-dimm,id=dimm7,memdev=mem-dimm7,node=0

-object memory-backend-ram,id=mem-dimm8,size=512M

-device pc-dimm,id=dimm8,memdev=mem-dimm8,node=0

-object memory-backend-ram,id=mem-dimm9,size=512M

-device pc-dimm,id=dimm9,memdev=mem-dimm9,node=0

-object memory-backend-ram,id=mem-dimm10,size=512M

-device pc-dimm,id=dimm10,memdev=mem-dimm10,node=0

-object memory-backend-ram,id=mem-dimm11,size=512M

-device pc-dimm,id=dimm11,memdev=mem-dimm11,node=0

-object memory-backend-ram,id=mem-dimm12,size=512M

-device pc-dimm,id=dimm12,memdev=mem-dimm12,node=0

-object memory-backend-ram,id=mem-dimm13,size=512M

-device pc-dimm,id=dimm13,memdev=mem-dimm13,node=0

-object iothread,id=iothread-virtioscsi0 -device pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e

-device pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f

-device pci-bridge,id=pci.3,chassis_nr=3,bus=pci.0,addr=0x5

-device vmgenid,guid=e342a64d-ed14-444e-80fd-2f345c8a6893

-device piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2

-device usb-tablet,id=tablet,bus=uhci.0,port=1

-device VGA,id=vga,bus=pci.0,addr=0x2,edid=off

-device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3,free-page-reporting=on

-device virtio-scsi-pci,id=virtioscsi0,bus=pci.3,addr=0x1,iothread=iothread-virtioscsi0

-drive file=/dev/sdb,if=none,id=drive-scsi0,discard=on,format=raw,cache=none,detect-zeroes=unmap

-device scsi-hd,bus=virtioscsi0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,rotation_rate=1,bootindex=100

-device ahci,id=ahci0,multifunction=on,bus=pci.0,addr=0x7

-drive file=/home/kvm_autotest_root/iso/ISO/Win2022/windows_server_2022_x64_official_dvd.iso,if=none,id=drive-sata0,media=cdrom

-device ide-cd,bus=ahci0.0,drive=drive-sata0,id=sata0,bootindex=101

-drive file=/usr/share/virtio-win/virtio-win-0.1.229.iso,if=none,id=drive-sata1,media=cdrom

-device ide-cd,bus=ahci0.1,drive=drive-sata1,id=sata1,bootindex=102

-netdev type=tap,id=net0,ifname=tap60000i0,vhost=on

-device virtio-net-pci,mac=22:E6:58:F6:6C:8E,netdev=net0,bus=pci.0,addr=0x12,id=net0,rx_queue_size=1024,tx_queue_size=1024

-rtc driftfix=slew,base=localtime

-machine type=pc-i440fx-rhel7.6.0

-global kvm-pit.lost_tick_policy=discard

-monitor stdio

Thanks~ Peixiu

I also tested with a Ceph RBD device as the SCSI system disk, to get closer to your command line, but could not reproduce it either. Windows Server 2022 Standard installs successfully and Windows Update also completes without problems.

Used commands:

-device virtio-scsi-pci,id=virtioscsi0,bus=pci.3,addr=0x1,iothread=iothread-virtioscsi0

-drive file=/dev/rbd0,if=none,id=drive-scsi0,discard=on,format=raw,cache=none,detect-zeroes=unmap

-device scsi-hd,bus=virtioscsi0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,rotation_rate=1,bootindex=100 \

I found two obvious differences between my test environment and yours:

- The kernel version: you run Proxmox 7.3 with kernel version 5.15.74, I run RHEL 9.2 with kernel-5.14.0-249.

- The machine type: -machine type=pc-i440fx-7.1+pve0 is not supported on a RHEL 9.2 host, so I used -machine type=pc-i440fx-rhel7.6.0 instead.

Just Highlight~ Thanks~

And BTW, how reproducible is this issue? Is it 100%? @cosmedd

Thank you~ Peixiu

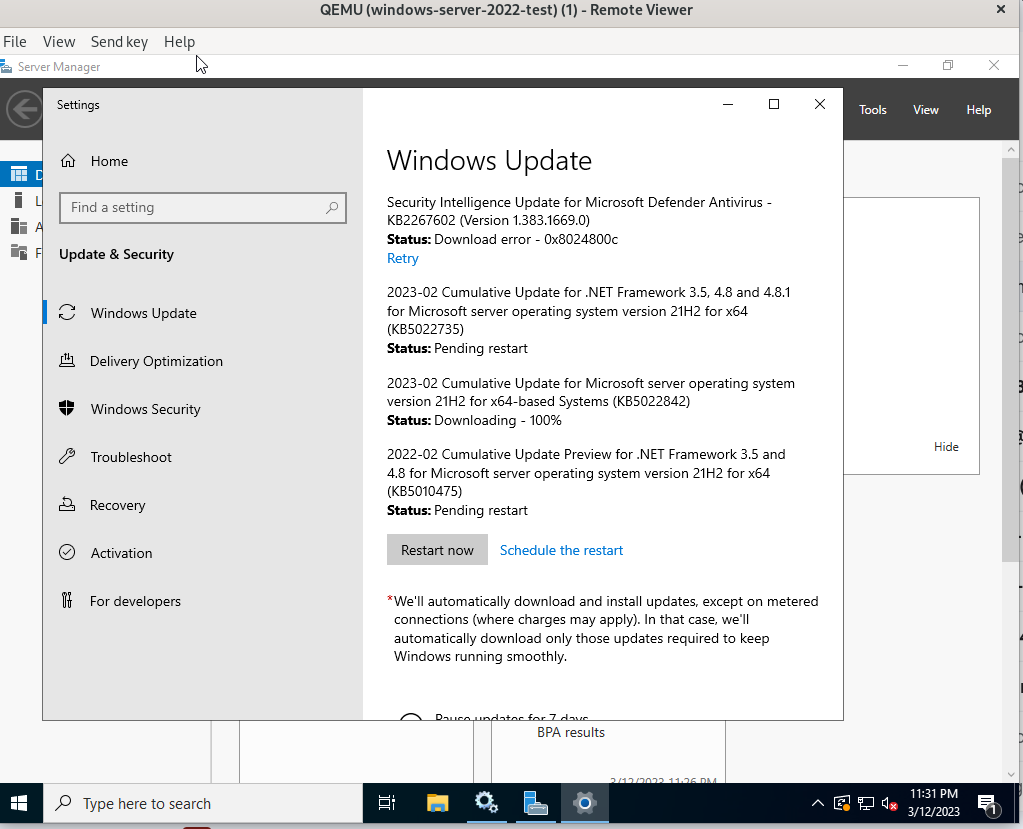

Upgrades applied:

I have made a mistake with the "QEMU command line" provided. This is the right one:

- /usr/bin/kvm -id 60000 -name windows-server-2022-test,debug-threads=on -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/60000.qmp,server=on,wait=off -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5 -mon chardev=qmp-event,mode=control -pidfile /var/run/qemu-server/60000.pid -daemonize -smbios type=1,uuid=219bdc09-e434-4c0a-83e1-63fdecd28ed9 -smp 8,sockets=2,cores=8,maxcpus=16 -nodefaults -boot menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg -vnc unix:/var/run/qemu-server/60000.vnc,password=on -no-hpet -cpu host,+hv-tlbflush,hv_ipi,hv_relaxed,hv_reset,hv_runtime,hv_spinlocks=0x1fff,hv_stimer,hv_synic,hv_time,hv_vapic,hv_vpindex,+kvm_pv_eoi,+kvm_pv_unhalt -m 8192 -object memory-backend-ram,id=ram-node0,size=4096M -numa node,nodeid=0,cpus=0-7,memdev=ram-node0 -object memory-backend-ram,id=ram-node1,size=4096M -numa node,nodeid=1,cpus=8-15,memdev=ram-node1 -object iothread,id=iothread-virtioscsi0 -device pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e -device pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f -device pci-bridge,id=pci.3,chassis_nr=3,bus=pci.0,addr=0x5 -device vmgenid,guid=e342a64d-ed14-444e-80fd-2f345c8a6893 -device piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2 -device usb-tablet,id=tablet,bus=uhci.0,port=1 -chardev socket,id=tpmchar,path=/var/run/qemu-server/60000.swtpm -tpmdev emulator,id=tpmdev,chardev=tpmchar -device tpm-tis,tpmdev=tpmdev -device VGA,id=vga,bus=pci.0,addr=0x2,edid=off -device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3,free-page-reporting=on -iscsi initiator-name=iqn.1993-08.org.debian:01:1e5475de3cc -device virtio-scsi-pci,id=virtioscsi0,bus=pci.3,addr=0x1,iothread=iothread-virtioscsi0 -drive file=/dev/rbd-pve/d2e57a63-c384-4ec7-9a76-3acfa9442b5c/SSD-HC-TEST/vm-60000-disk-0,if=none,id=drive-scsi0,discard=on,format=raw,cache=none,aio=io_uring,detect-zeroes=unmap -device 
scsi-hd,bus=virtioscsi0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0 -device ahci,id=ahci0,multifunction=on,bus=pci.0,addr=0x7 -drive file=/mnt/pve/cephfs/template/iso/SERVER_EVAL_x64FRE_es-es_2022.iso,if=none,id=drive-sata0,media=cdrom,aio=io_uring -device ide-cd,bus=ahci0.0,drive=drive-sata0,id=sata0 -drive file=/mnt/pve/cephfs/template/iso/virtio-win-0.1.229.iso,if=none,id=drive-sata1,media=cdrom,aio=io_uring -device ide-cd,bus=ahci0.1,drive=drive-sata1,id=sata1 -netdev type=tap,id=net0,ifname=tap60000i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on -device virtio-net-pci,mac=22:E6:58:F6:6C:8E,netdev=net0,bus=pci.0,addr=0x12,id=net0,rx_queue_size=1024,tx_queue_size=1024 -rtc driftfix=slew,base=localtime -machine type=pc-i440fx-7.1+pve0 -global kvm-pit.lost_tick_policy=discard

The crash is reproduced when using 2 cpu sockets. If after applying Windows updates only 1 socket is configured on the machine, the problem is not reproduced: Windows boots normally.

However if SATA is configured for storage no BSOD occurs when using 2 cpu sockets.

About the crash dump file, could you tell me where it should be? I have tried to enable the generation of a memory dump but it did not create any file.

I see the same issue with virtio-win-0.1.229 on Windows 11 21H2. The system gets a BSOD when I try to install the virtio-disk driver.

Hi @smasharov ,

Could you share your VM's QEMU command line? You can get it with the command "ps aux| grep qemu". Which kernel/qemu/ovmf versions are you using? Are you using RHEL?

Thanks a lot~ Peixiu

I use bhyve under FreeBSD 13.1-Release

@smasharov Since it is FreeBSD, can you try limiting the maximum transfer length by adding ",max_sectors=32" to virtio-scsi-pci device's parameters:

In your case it should be something like this: -device virtio-scsi-pci,id=virtioscsi0,bus=pci.3,addr=0x1,iothread=iothread-virtioscsi0,max_sectors=32 -drive file=/dev/rbd-pve/d2e57a63-c384-4ec7-9a76-3acfa9442b5c/SSD-HC-TEST/vm-60000-disk-0,if=none,id=drive-scsi0,discard=on,format=raw,cache=none,aio=io_uring,detect-zeroes=unmap -device scsi-hd,bus=virtioscsi0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0

If it helps to solve the problem, you can then try increasing the number of sectors. For more information, please see the following issue: https://github.com/virtio-win/kvm-guest-drivers-windows/issues/747

Best, Vadim.

In my case it looks like -s 6:0,virtio-blk,/dev/ada0s1 and I do not see an option to add the max_sectors directive. I am pretty sure that one of the old versions worked fine. I will try to find the exact last version that worked.

I tried to reproduce this issue with 2 sockets on a RHEL host, but could not reproduce it either. After the Windows 2022 VM installed all updates, the system restarted normally and no BSOD occurred.

Commands as follows:

cat 893-cnv.sh

/usr/libexec/qemu-kvm

-name guest=default_peixiu-2022-seabios-03,debug-threads=on

-machine pc-q35-rhel9.2.0,usb=off,smm=on,dump-guest-core=off

-accel kvm

-cpu Cascadelake-Server,ss=on,vmx=on,pdcm=on,hypervisor=on,tsc-adjust=on,umip=on,pku=on,md-clear=on,stibp=on,arch-capabilities=on,xsaves=on,ibpb=on,ibrs=on,amd-stibp=on,amd-ssbd=on,rdctl-no=on,ibrs-all=on,skip-l1dfl-vmentry=on,mds-no=on,pschange-mc-no=on,tsx-ctrl=on,hle=off,rtm=off,hv-time=on,tsc-frequency=1995312000,hv-relaxed=on,hv-vapic=on,hv-spinlocks=0x1fff,hv-vpindex=on,hv-runtime=on,hv-synic=on,hv-stimer=on,hv-stimer-direct=on,hv-reset=on,hv-frequencies=on,hv-reenlightenment=on,hv-tlbflush=on,hv-ipi=on

-m 8192

-smp 8,sockets=2,cores=8,maxcpus=16

-uuid c9c40bff-ff08-5217-98d0-cb3fadb91b7b

-smbios type=1,manufacturer="Red Hat",product="OpenShift Virtualization",version=4.13.0,uuid=c9c40bff-ff08-5217-98d0-cb3fadb91b7b,sku=4.13.0,family="Red Hat"

-no-user-config

-nodefaults

-rtc base=utc,driftfix=slew

-global kvm-pit.lost_tick_policy=delay

-no-hpet

-no-shutdown

-boot menu=on

-device '{"driver":"pcie-root-port","port":16,"chassis":1,"id":"pci.1","bus":"pcie.0","multifunction":true,"addr":"0x2"}'

-device '{"driver":"pcie-root-port","port":17,"chassis":2,"id":"pci.2","bus":"pcie.0","addr":"0x2.0x1"}'

-device '{"driver":"pcie-root-port","port":19,"chassis":4,"id":"pci.4","bus":"pcie.0","addr":"0x2.0x3"}'

-device '{"driver":"pcie-root-port","port":20,"chassis":5,"id":"pci.5","bus":"pcie.0","addr":"0x2.0x4"}'

-device '{"driver":"pcie-root-port","port":21,"chassis":6,"id":"pci.6","bus":"pcie.0","addr":"0x2.0x5"}'

-device '{"driver":"qemu-xhci","id":"usb","bus":"pci.2","addr":"0x0"}'

-device pci-bridge,id=pci.3,chassis_nr=3,bus=pcie.0,addr=0x5

-device virtio-scsi-pci,id=scsi0,bus=pci.3,addr=0x0

-drive file=/home/kvm_autotest_root/images/win2022-64-virtio-scsi.qcow2,if=none,id=drive-scsi0,discard=on,format=qcow2,cache=none,detect-zeroes=unmap

-device scsi-hd,bus=scsi0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=disk0

-netdev '{"type":"tap","id":"hostua-default"}'

-device '{"driver":"e1000e","netdev":"hostua-default","id":"ua-default","mac":"02:45:83:00:00:51","bus":"pci.1","addr":"0x0","romfile":""}'

-device '{"driver":"usb-tablet","id":"ua-tablet","bus":"usb.0","port":"1"}'

-device '{"driver":"virtio-balloon-pci-non-transitional","id":"balloon0","bus":"pci.5","addr":"0x0"}'

-device VGA,id=vga,bus=pcie.0,addr=0x6,edid=off

-vnc :0

-msg timestamp=on

Versions: kernel-5.14.0-295.el9.x86_64 qemu-kvm-8.0.0-0.rc1.el9.candidate.x86_64 seabios-bin-1.16.1-1.el9.noarch virtio-win-0.1.229-1.noarch

Thanks~ Peixiu

Hi @peixiu

I noticed that you have not activated the iothread mode on virtio-scsi

Hi @cosmedd, thanks for pointing that out. I re-tested with iothread, but unfortunately I could not reproduce it in my environment either~

The SCSI-device-related QEMU commands are as follows:

-device pci-bridge,id=pci.3,chassis_nr=3,bus=pcie.0,addr=0x5

-object iothread,id=iothread-virtioscsi0

-device virtio-scsi-pci,id=scsi0,bus=pci.3,addr=0x0,iothread=iothread-virtioscsi0

-drive file=/home/kvm_autotest_root/images/win2022-64-virtio-scsi-02.qcow2,if=none,id=drive-scsi0,discard=on,format=qcow2,cache=none,detect-zeroes=unmap

-device scsi-hd,bus=scsi0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=disk0 \

My steps:

- On a RHEL 9.2 host, boot a VM with a command line similar to the one in my last comment and install a fresh Windows 2022 OS on it. During installation, install the virtio-win-0.1.229 driver for the virtio-scsi-pci device.

- After the OS installation finishes, run Windows Update: check for updates --> download updates --> install all updates.

- Restart the VM. The VM restarted normally.

Could you check my steps again?

Thanks~ Peixiu

Hi,

We at Proxmox have a user who encountered this problem. I can also reproduce the bluescreen with Windows Server 2022, virtio-win-0.1.229 and KB5023705 (mentioned in @cosmedd's screenshot), but only if I define NUMA nodes and if the number of vCPUs is chosen small enough that there is a CPU socket without any vCPUs. I think this is also the case in the command line posted by @cosmedd, which triggers the bluescreen, but not in the command line posted by @peixiu, which does not trigger the bluescreen. To trigger the bluescreen, there needs to be at least one virtio device, either virtio-scsi or virtio-blk.

For example, the bluescreen can be triggered by defining 2 sockets with 4 cores each (= 8 cores total), but only 4 vCPUs total, so the second socket has no cores. Windows Server 2022 is installed on a virtio-scsi disk. See the full command at the end of my post -- the relevant parts:

-smp '4,sockets=2,cores=4,maxcpus=8' \

-m 4096 \

-object 'memory-backend-ram,id=ram-node0,size=2048M' \

-numa 'node,nodeid=0,cpus=0-3,memdev=ram-node0' \

-object 'memory-backend-ram,id=ram-node1,size=2048M' \

-numa 'node,nodeid=1,cpus=4-7,memdev=ram-node1' \

-object 'iothread,id=iothread-virtioscsi0' \

Without KB5023705, this VM boots and works fine. If I install KB5023705 and reboot, I get the bluescreen from the initial post on boot ("What failed: pci.sys"). This also happens after installing KB5026370 instead.
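The trigger condition described above (a NUMA node that has no vCPU present at boot) can be sketched as a small check. This is only an illustrative Python sketch, not part of QEMU or virtio-win: the helper names `parse_cpu_range` and `empty_numa_nodes` are made up for this example, and it assumes that with `-smp N,...,maxcpus=M` the vCPUs present at boot are the indices 0 to N-1.

```python
def parse_cpu_range(spec):
    """Expand a -numa cpus= value such as '0-3' or '5' into a set of vCPU indices."""
    first, _, last = spec.partition("-")
    return set(range(int(first), int(last or first) + 1))


def empty_numa_nodes(boot_vcpus, node_cpus):
    """Return the NUMA node IDs that contain none of the vCPUs present at boot.

    boot_vcpus: the first number given to -smp (assumed: indices 0..boot_vcpus-1 are present).
    node_cpus:  mapping of NUMA node ID -> set of vCPU indices assigned by -numa.
    """
    present = set(range(boot_vcpus))
    return sorted(node for node, cpus in node_cpus.items() if not (cpus & present))


# Topology from the relevant parts quoted above: cpus=0-3 on node 0, cpus=4-7 on node 1.
topology = {0: parse_cpu_range("0-3"), 1: parse_cpu_range("4-7")}

print(empty_numa_nodes(4, topology))  # -smp 4,...: node 1 has no present vCPU
print(empty_numa_nodes(5, topology))  # -smp 5,...: vCPU 4 lands on node 1
```

With 4 vCPUs the check reports node 1 as empty; with 5 vCPUs it reports no empty node, which matches the observation that configuring 5 vCPUs avoids the bluescreen.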

The VM boots again if I do one of the following things:

- Configure 5 vCPUs instead of 4:

-smp '5,sockets=2,cores=4,maxcpus=8'

- Disable NUMA.

- Move all disks from virtio-blk/virtio-scsi to e.g. IDE or SATA. In that case, a bluescreen can be triggered in a running VM by hotplugging a virtio-blk or virtio-scsi disk.

I'm not sure what to make of this, though -- it looks like there is some interaction between the Windows update and virtio-win that leads to the bluescreen? Could it be related to the issue that was recently fixed by 1a515221348b5213fad367f426a9a38d24217442?

Let me know if I can help tracking this down -- I'm happy to provide more information or test things.

Full command line for the VM triggering the bluescreen (on a PVE host):

/usr/bin/kvm \

-id 143 \

-name 'win2022-server-bios,debug-threads=on' \

-no-shutdown \

-chardev 'socket,id=qmp,path=/var/run/qemu-server/143.qmp,server=on,wait=off' \

-mon 'chardev=qmp,mode=control' \

-chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' \

-mon 'chardev=qmp-event,mode=control' \

-pidfile /var/run/qemu-server/143.pid \

-daemonize \

-smbios 'type=1,uuid=7f6d8248-e7a3-471e-a7b4-c894a8b3ac90' \

-smp '4,sockets=2,cores=4,maxcpus=8' \

-nodefaults \

-boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' \

-vnc 'unix:/var/run/qemu-server/143.vnc,password=on' \

-no-hpet \

-cpu 'kvm64,enforce,hv_ipi,hv_relaxed,hv_reset,hv_runtime,hv_spinlocks=0x1fff,hv_stimer,hv_synic,hv_time,hv_vapic,hv_vpindex,+kvm_pv_eoi,+kvm_pv_unhalt,+lahf_lm,+sep' \

-m 4096 \

-object 'memory-backend-ram,id=ram-node0,size=2048M' \

-numa 'node,nodeid=0,cpus=0-3,memdev=ram-node0' \

-object 'memory-backend-ram,id=ram-node1,size=2048M' \

-numa 'node,nodeid=1,cpus=4-7,memdev=ram-node1' \

-object 'iothread,id=iothread-virtioscsi0' \

-device 'pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e' \

-device 'pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f' \

-device 'pci-bridge,id=pci.3,chassis_nr=3,bus=pci.0,addr=0x5' \

-device 'vmgenid,guid=a2aa3308-779e-46dc-868d-d444cf1d31cd' \

-device 'piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2' \

-device 'usb-tablet,id=tablet,bus=uhci.0,port=1' \

-device 'VGA,id=vga,bus=pci.0,addr=0x2,edid=off' \

-device 'virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3,free-page-reporting=on' \

-iscsi 'initiator-name=iqn.1993-08.org.debian:01:d96ec51abbf7' \

-device 'virtio-scsi-pci,id=virtioscsi0,bus=pci.3,addr=0x1,iothread=iothread-virtioscsi0' \

-drive 'file=/dev/pve/vm-143-disk-0,if=none,id=drive-scsi0,format=raw,cache=none,aio=io_uring,detect-zeroes=on' \

-device 'scsi-hd,bus=virtioscsi0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,bootindex=100' \

-rtc 'driftfix=slew,base=localtime' \

-machine 'type=pc-i440fx-7.2+pve0' \

-global 'kvm-pit.lost_tick_policy=discard'

The VM boots again if I do one of the following things ...

The command line above assigns vCPUs { 0, 1, 2, 3 } to NUMA node 0, and no vCPUs to NUMA node 1. As it turns out, another workaround is to change the NUMA topology such that at least one vCPU is assigned to NUMA node 1.

Two examples:

- Assign vCPUs { 0, 2 } to NUMA node 0, and vCPUs { 1, 3 } to NUMA node 1:

-numa 'node,nodeid=0,cpus=0,cpus=2,cpus=4,cpus=6,memdev=ram-node0' \

-numa 'node,nodeid=1,cpus=1,cpus=3,cpus=5,cpus=7,memdev=ram-node1' \

- Assign vCPU 0 to NUMA node 0 and vCPUs { 1, 2, 3 } to NUMA node 1:

-numa 'node,nodeid=0,cpus=0,memdev=ram-node0' \

-numa 'node,nodeid=1,cpus=1-7,memdev=ram-node1' \

Either of the two options makes the VM boot for me.
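To see how the `-numa` options in the two examples map onto the 4 vCPUs that are actually present, the option strings can be parsed directly. The following Python sketch is illustrative only: `parse_numa_option` and `present_vcpus_per_node` are hypothetical helpers written for this comment, they handle only the `nodeid=` and `cpus=` fields used here, and they again assume that `-smp 4,...` makes vCPUs 0 to 3 present.

```python
def parse_numa_option(option):
    """Parse a '-numa node,...' value into (node ID, set of assigned vCPU indices).

    Only the nodeid= and cpus= fields are interpreted; cpus= may repeat and
    may be a single index ('2') or a range ('1-7').
    """
    nodeid = None
    cpus = set()
    for field in option.split(","):
        key, _, value = field.partition("=")
        if key == "nodeid":
            nodeid = int(value)
        elif key == "cpus":
            first, _, last = value.partition("-")
            cpus.update(range(int(first), int(last or first) + 1))
    return nodeid, cpus


def present_vcpus_per_node(boot_vcpus, numa_options):
    """Map each NUMA node ID to the sorted list of present vCPUs assigned to it."""
    present = set(range(boot_vcpus))
    result = {}
    for option in numa_options:
        nodeid, cpus = parse_numa_option(option)
        result[nodeid] = sorted(cpus & present)
    return result


example_1 = [
    "node,nodeid=0,cpus=0,cpus=2,cpus=4,cpus=6,memdev=ram-node0",
    "node,nodeid=1,cpus=1,cpus=3,cpus=5,cpus=7,memdev=ram-node1",
]
example_2 = [
    "node,nodeid=0,cpus=0,memdev=ram-node0",
    "node,nodeid=1,cpus=1-7,memdev=ram-node1",
]

print(present_vcpus_per_node(4, example_1))
print(present_vcpus_per_node(4, example_2))
```

In both examples every NUMA node ends up with at least one present vCPU, which is the property the workaround relies on.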

Also, I think I managed to extract a backtrace of the bluescreen in question. Here it is, in case it helps. Unfortunately it's a German ISO -- "Division durch Null" translates to "Division by Zero".

SYSTEM_THREAD_EXCEPTION_NOT_HANDLED (7e)

This is a very common BugCheck. Usually the exception address pinpoints

the driver/function that caused the problem. Always note this address

as well as the link date of the driver/image that contains this address.

Arguments:

Arg1: ffffffffc0000094, The exception code that was not handled

Arg2: fffff804099e72ac, The address that the exception occurred at

Arg3: fffffb042b428dd8, Exception Record Address

Arg4: fffffb042b4285f0, Context Record Address

Debugging Details:

------------------

KEY_VALUES_STRING: 1

Key : Analysis.CPU.mSec

Value: 10874

Key : Analysis.DebugAnalysisManager

Value: Create

Key : Analysis.Elapsed.mSec

Value: 68800

Key : Analysis.Init.CPU.mSec

Value: 1781

Key : Analysis.Init.Elapsed.mSec

Value: 174487

Key : Analysis.Memory.CommitPeak.Mb

Value: 71

Key : WER.OS.Branch

Value: fe_release_svc_prod2

Key : WER.OS.Timestamp

Value: 2022-07-07T18:32:00Z

Key : WER.OS.Version

Value: 10.0.20348.859

BUGCHECK_CODE: 7e

BUGCHECK_P1: ffffffffc0000094

BUGCHECK_P2: fffff804099e72ac

BUGCHECK_P3: fffffb042b428dd8

BUGCHECK_P4: fffffb042b4285f0

EXCEPTION_RECORD: fffffb042b428dd8 -- (.exr 0xfffffb042b428dd8)

ExceptionAddress: fffff804099e72ac (pci!PciGetMessageAffinityMask+0x00000000000000c0)

ExceptionCode: c0000094 (Integer divide-by-zero)

ExceptionFlags: 00000000

NumberParameters: 0

CONTEXT: fffffb042b4285f0 -- (.cxr 0xfffffb042b4285f0)

rax=0000000000000000 rbx=0000000000000000 rcx=b6f4317bdee00000

rdx=0000000000000000 rsi=0000000000000000 rdi=0000000000000001

rip=fffff804099e72ac rsp=fffffb042b429010 rbp=0000000000000000

r8=0000000000000000 r9=fffffb042b428f18 r10=0000000000000001

r11=fffffb042b428f50 r12=0000000000000000 r13=0000000000000007

r14=0000000000000000 r15=fffffb042b429170

iopl=0 nv up ei pl zr na po nc

cs=0010 ss=0018 ds=002b es=002b fs=0053 gs=002b efl=00010246

pci!PciGetMessageAffinityMask+0xc0:

fffff804`099e72ac f7356a47feff div eax,dword ptr [pci!PciGroupZeroNumaNodeCount (fffff804`099cba1c)] ds:002b:fffff804`099cba1c=00000000

Resetting default scope

PROCESS_NAME: System

ERROR_CODE: (NTSTATUS) 0xc0000094 - {AUSNAHME} Ganze Zahl-Fehler:Division durch Null

EXCEPTION_CODE_STR: c0000094

EXCEPTION_STR: 0xc0000094

LOCK_ADDRESS: fffff804062500c0 -- (!locks fffff804062500c0)

Resource @ nt!PiEngineLock (0xfffff804062500c0) Exclusively owned

Contention Count = 14

Threads: ffffac01e7c2c100-01<*>

1 total locks

PNP_TRIAGE_DATA:

Lock address : 0xfffff804062500c0

Thread Count : 1

Thread address: 0xffffac01e7c2c100

Thread wait : 0x1c78

STACK_TEXT:

fffffb04`2b429010 fffff804`099e71c4 : ffffac01`ec98a1b0 fffffb04`2b4290c0 00000000`00000007 fffffb04`2b429160 : pci!PciGetMessageAffinityMask+0xc0

fffffb04`2b429050 fffff804`099e7713 : ffff8286`b64af10a 00000000`00000000 00000000`00000007 ffffac01`ec98a538 : pci!PciGetMessagePolicy+0x114

fffffb04`2b4290e0 fffff804`099dfdd5 : 00000000`00000001 ffff8286`b64af0f8 fffffb04`2b429200 00000000`00000000 : pci!PciPopulateMsiRequirements+0xe7

fffffb04`2b429160 fffff804`099df71a : ffffac01`ea84a5a0 00000000`00000000 ffffac01`ec98a1b0 00000000`00000000 : pci!PciBuildRequirementsList+0x695

fffffb04`2b4293a0 fffff804`099932c9 : ffffac01`e7ba7a20 00000000`c0000034 00000000`00000000 fffffb04`2b429530 : pci!PciDevice_QueryResourceRequirements+0x3a

fffffb04`2b4293d0 fffff804`05841185 : ffffac01`ea84a5a0 ffffac01`ea84a5a0 ffffac01`ec150e10 00000000`00000000 : pci!PciDispatchPnpPower+0x2e9

fffffb04`2b429440 fffff804`095522f2 : ffffac01`ec150e10 ffffac01`ea84a5a0 ffff8286`b58f1eb0 ffff8286`b6013600 : nt!IofCallDriver+0x55

fffffb04`2b429480 fffff804`095e8723 : ffffac01`e7b4f050 ffffac01`ea84a5a0 00000000`00000000 00000000`00000000 : ACPI!ACPIDispatchForwardIrp+0x82

fffffb04`2b4294c0 fffff804`09551263 : ffffac01`e7b4f050 ffffac01`ea84a6b8 00000000`00000000 fffff804`05600000 : ACPI!ACPIFilterIrpQueryResourceRequirements+0x63

fffffb04`2b429520 fffff804`05841185 : 00000000`00000007 ffffac01`ec150e10 00000000`00000000 fffffb04`2b429680 : ACPI!ACPIDispatchIrp+0x253

fffffb04`2b4295a0 fffff804`05cff248 : 00000000`c00000bb ffffac01`ec150e10 00000000`00000000 ffffac01`ec7f5cc0 : nt!IofCallDriver+0x55

fffffb04`2b4295e0 fffff804`05d612d5 : ffffac01`ec98a060 fffffb04`2b429730 ffffffff`80002060 fffffb04`2b429768 : nt!IopSynchronousCall+0xf8

fffffb04`2b429650 fffff804`05d610fb : 00000000`00000000 00000000`00000000 00000000`00000000 00000000`00000000 : nt!PpIrpQueryResourceRequirements+0x49

fffffb04`2b4296e0 fffff804`05d8b5a5 : 00000000`00000000 00000000`00000000 00000000`00000000 ffffffff`80002060 : nt!PiQueryResourceRequirements+0x3b

fffffb04`2b429760 fffff804`05c3c86c : fffffb04`2b4299c8 ffffac01`ec7f5cc0 ffffac01`ec7f5cc0 00000000`00000001 : nt!PiProcessNewDeviceNode+0xead

fffffb04`2b429930 fffff804`05d9a627 : ffffac01`ec7f5cc0 fffffb04`2b4299c8 ffffac01`e78f0ca0 00000000`00000001 : nt!PiProcessNewDeviceNodeAsync+0xf4

fffffb04`2b429960 fffff804`05d840e2 : ffffac01`ebe84001 fffff804`05850e00 fffffb04`2b429a60 ffffac01`00000002 : nt!PipProcessDevNodeTree+0x43b

fffffb04`2b429a10 fffff804`0596725d : 00000001`00000003 ffffac01`e78f0ca0 ffffac01`ebe84060 ffffac01`ebe84060 : nt!PiProcessReenumeration+0x92

fffffb04`2b429a60 fffff804`05861261 : ffffac01`e7c2c100 fffff804`0633d640 ffffac01`e7957ce0 ffffac01`00000000 : nt!PnpDeviceActionWorker+0x47d

fffffb04`2b429b20 fffff804`059580d5 : ffffac01`e7c2c100 00000000`00000000 ffffac01`e7c2c100 01d7441a`5b072f06 : nt!ExpWorkerThread+0x161

fffffb04`2b429d30 fffff804`05a21588 : ffffda81`af792180 ffffac01`e7c2c100 fffff804`05958080 00000000`005c0070 : nt!PspSystemThreadStartup+0x55

fffffb04`2b429d80 00000000`00000000 : fffffb04`2b42a000 fffffb04`2b424000 00000000`00000000 00000000`00000000 : nt!KiStartSystemThread+0x28

SYMBOL_NAME: pci!PciGetMessageAffinityMask+c0

MODULE_NAME: pci

IMAGE_NAME: pci.sys

IMAGE_VERSION: 10.0.20348.1487

STACK_COMMAND: .cxr 0xfffffb042b4285f0 ; kb

BUCKET_ID_FUNC_OFFSET: c0

FAILURE_BUCKET_ID: 0x7E_pci!PciGetMessageAffinityMask

OS_VERSION: 10.0.20348.859

BUILDLAB_STR: fe_release_svc_prod2

OSPLATFORM_TYPE: x64

OSNAME: Windows 10

FAILURE_ID_HASH: {a2e74222-f9b5-76db-ad0f-9ef2fe067a30}

Followup: MachineOwner
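Two facts in the dump above can be read out mechanically: the low 32 bits of bugcheck Arg1 are the NTSTATUS exception code, and the faulting `div` line shows the value of its memory operand after the final `=`. The Python sketch below is only a reading aid for this particular dump; the helper names are made up for this comment, and the line parsing assumes the exact WinDbg text layout shown above.

```python
# NTSTATUS value for an integer divide-by-zero exception.
STATUS_INTEGER_DIVIDE_BY_ZERO = 0xC0000094


def exception_code_from_arg1(arg1_hex):
    """Return the 32-bit exception code held in the low half of bugcheck Arg1."""
    return int(arg1_hex, 16) & 0xFFFFFFFF


def operand_value_from_disassembly(line):
    """Return the memory operand value WinDbg prints after the last '=' on the line."""
    return int(line.rsplit("=", 1)[1], 16)


arg1 = "ffffffffc0000094"
faulting_line = (
    "fffff804`099e72ac f7356a47feff div eax,dword ptr "
    "[pci!PciGroupZeroNumaNodeCount (fffff804`099cba1c)] "
    "ds:002b:fffff804`099cba1c=00000000"
)

code = exception_code_from_arg1(arg1)
divisor = operand_value_from_disassembly(faulting_line)

print(hex(code), code == STATUS_INTEGER_DIVIDE_BY_ZERO)
print("divisor read from pci!PciGroupZeroNumaNodeCount:", divisor)
```

The divisor read from `pci!PciGroupZeroNumaNodeCount` is 0 at the time of the crash, which is consistent with the divide-by-zero exception code. What that variable counts internally is not documented in this thread, so any link to the empty NUMA node remains an inference from the symbol name.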

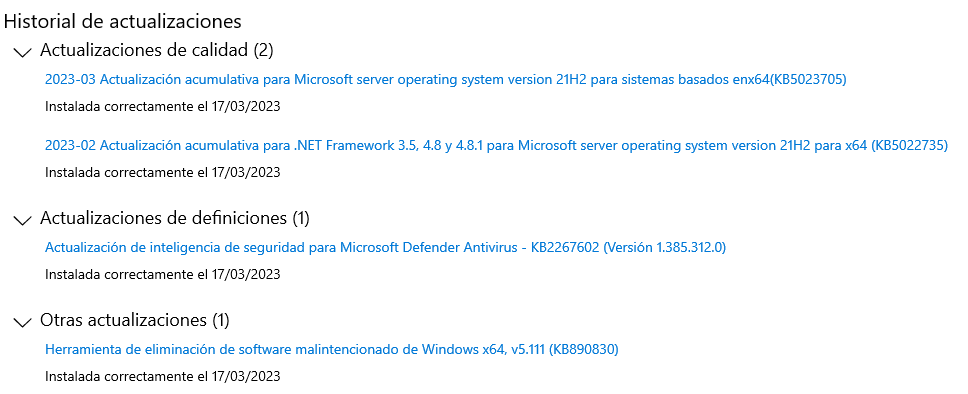

I also reproduced this bug on a RHEL host. The only difference is in the installed Windows update patches; this is the status of what I installed:

After I uninstalled KB5026370, the issue could no longer be reproduced.

Thanks~ Peixiu