morphops

Please help me!!!

Thanks for the awesome repo.

I have a question. I warped my image using grid points and noisy grid points, and it works perfectly. Now I have many points on the original image, and I want to get their corresponding coordinates on the transformed image. How can I do that?

My current solution is to compare each point against the whole transformed grid and take the nearest grid location as the corresponding coordinate.

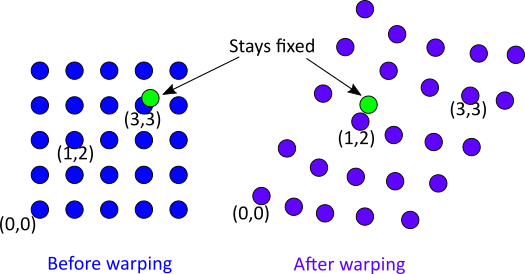

For example the picture below.

.

.

My code

import os
import torch
from PIL import Image
import cv2
import numpy as np
from sklearn.metrics.pairwise import euclidean_distances
import morphops as mops
import torchvision


def grid_points_2d(width, height, device):
    """
    Create 2d grid points. Distribute points across a width and height,
    with the resulting coordinates constrained to [-1, 1].
    Returns a tensor of shape (width * height, 2).
    """
    xx, yy = torch.meshgrid(
        [torch.linspace(-1.0, 1.0, height, device=device),
         torch.linspace(-1.0, 1.0, width, device=device)])
    return torch.stack([yy, xx], dim=-1).contiguous().view(-1, 2)


def noisy_grid(width, height, noise_scale=0.1, device=None):
    """
    Make uniform grid points, and add noise except for edge points.
    """
    grid = grid_points_2d(width, height, device)
    mod = torch.zeros([height, width, 2], device=device)
    # Create the noise on the same device as the grid.
    noise = (torch.rand([height - 2, width - 2, 2], device=device) - 0.5) * noise_scale
    mod[1:height - 1, 1:width - 1, :] = noise
    return grid + mod.reshape(-1, 2)


DEVICE = torch.device("cpu")

points = torch.tensor([0.33145134998962683,0.37168062334870805,0.291440680477757,0.36570985028286535,0.5959663317625441,0.35376830415117994,0.6433863845173527,0.3268998253548878,0.6737648558134021,0.3224217455555058,0.7078480187309208,0.32988521188780917,0.7330399217569128,0.34705118445210686,0.705625203758039,0.36645619691609566,0.667096410894757,0.3701879300822474,0.6367179395987077,0.36421715701640467,0.3603479446370884,0.7135073813682026,0.4099908123647787,0.6829071694057588,0.46852493998399575,0.6597704237756183,0.4996443496043389,0.6672338901079217,0.5307637592246821,0.6605167704088487,0.589297886843899,0.6829071694057588,0.6359770012744138,0.716492767901124,0.5841113185738419,0.7403758601644947,0.5441006490619721,0.7530637529294105,0.4981624729557511,0.7575418327287925,0.4529652351738241,0.7538100995626408,0.41369550398624816,0.7388831668980341,0.37442577279867223,0.7105219948352812,0.459633680092469,0.6978341020703654,0.4996443496043389,0.6963414088039048,0.5403959574405026,0.6970877554371352,0.6263448030585934,0.7142537280014329,0.5389140807919149,0.7135073813682026,0.4996443496043389,0.7157464212678936,0.46037461841676297,0.7127610347349723,0.32552384339527574,0.34555849118564624,0.6730239174891082,0.34406579791918557]).reshape((-1,2))

cur_path = os.path.abspath(os.path.dirname(__file__))
img = Image.open(cur_path + "/boris_johnson.jpg")
w, h = img.size

# Draw the original points (scaled to pixel coordinates) on a BGR copy of the image.
img_numpy_origin = np.array(img)[:, :, ::-1].copy()
_points = points.numpy().copy()
_points[:, 0] *= w
_points[:, 1] *= h
cv2.drawContours(img_numpy_origin, _points.reshape((-1, 1, 2)).astype(int), -1, (0, 255, 0), 3)
cv2.imshow("img origin", img_numpy_origin)

# Warp a dense grid (one location per pixel) with the TPS defined by X -> Y.
dense_grid = grid_points_2d(w, h, DEVICE)
X = grid_points_2d(7, 11, DEVICE)
Y = noisy_grid(7, 11, 0.15, DEVICE)
warped_C = mops.tps_warp(X, Y, dense_grid)

# For each point (rescaled to [-1, 1]), find the nearest warped grid location.
dis = euclidean_distances(points.cpu().numpy() * 2 - 1, warped_C)
dis = np.argmin(dis, 1)
Y, X = np.unravel_index(dis, (h, w))
new_point = np.array([[x, y] for x, y in zip(X, Y)]).reshape((-1, 2)).astype(int)

# Sample the image through the warped grid and draw the matched points on the result.
ten_img = torchvision.transforms.ToTensor()(img).to(DEVICE)
ten_wrp_b = torch.grid_sampler_2d(ten_img[None, ...], torch.from_numpy(warped_C).reshape((1, h, w, 2)).float(), 0, 0, False)  # [1, 738, 512, 2]
img_torch = torchvision.transforms.ToPILImage()(ten_wrp_b[0].cpu())
img_numpy_warped = np.array(img_torch)[:, :, ::-1].copy()
cv2.drawContours(img_numpy_warped, new_point.reshape((-1, 1, 2)).astype(int), -1, (0, 0, 255), 3)
cv2.imshow("img", img_numpy_warped)
cv2.waitKey()

This takes a lot of time and memory. Is there another way that relies on the matrices (W, A, U, P) instead?
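On the memory cost specifically: the search above builds a full distance matrix of shape (N, h * w) before taking the argmin. A minimal sketch of the same nearest-location search that handles one query point at a time, so that only O(h * w) memory is live at once, might look like the following. The function name `nearest_warped_pixel` is hypothetical, and the sketch assumes the warped grid is stored row-major over an (h, w) image, as in the code above.

```python
import numpy as np


def nearest_warped_pixel(points, warped_grid, h, w):
    """For each query point, return the (x, y) pixel whose warped location is closest.

    points:      (N, 2) array, in the same coordinate system as warped_grid
    warped_grid: (h * w, 2) array, row-major over an (h, w) image (assumed layout)
    """
    points = np.asarray(points, dtype=np.float64)
    warped_grid = np.asarray(warped_grid, dtype=np.float64)
    out = np.empty((len(points), 2), dtype=np.int64)
    for i, p in enumerate(points):
        # Squared distances from this one point to every warped grid location.
        d2 = np.sum((warped_grid - p) ** 2, axis=1)
        row, col = np.unravel_index(np.argmin(d2), (h, w))
        out[i] = (col, row)  # stored as (x, y)
    return out


# Tiny check on an unwarped 3 x 4 grid with coordinates in [-1, 1].
h, w = 3, 4
ys, xs = np.meshgrid(np.linspace(-1, 1, h), np.linspace(-1, 1, w), indexing="ij")
grid = np.stack([xs, ys], axis=-1).reshape(-1, 2)
result = nearest_warped_pixel(np.array([[1.0, -1.0], [-1.0, 1.0]]), grid, h, w)
print(result)  # top-right corner -> (3, 0), bottom-left corner -> (0, 2)
```

This is still a brute-force search, so it reduces peak memory but not the total amount of arithmetic.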

Hi there, I'm wondering if just updating to the latest version might do it.

The distance matrix calculation in the TPS warp used to be a painfully slow nested loop that got replaced by the fast scipy cdist in #10.

So yeah, hopefully it just needs an update. Let me know!

I think you replied to the wrong issue thread by mistake.

Hi sorry, I did mean to reply here but perhaps did it too hastily.

So if I understand correctly, there is significant slowdown and memory usage when you run the line warped_pts = mops.tps_warp(X, Y, dense_grid) with a dense_grid of shape (M,2) where M is very big?

I mean that I want to compute the new points from the variable points, but without searching through all grid points as below:

dis = euclidean_distances(points.cpu().numpy()*2-1,(warped_C))
dis = np.argmin(dis,1)
Y,X = np.unravel_index(dis,(h,w))
new_point = np.array([[x,y]for x,y in zip(X,Y)]).reshape((-1,2)).astype(int)

I want to calculate them directly from the available matrices, like K and P in your library. I've been stuck on this for a few days now. Sorry, my English is not very good.

From what I understand about thin plate splines and the function mops.tps_warp, it finds the new pixel coordinates that replace those in dense_grid. What I want to know is: once I have the matrices W and A, is there a way to find the new coordinates of my points after the warp, as I did in the picture in the description?
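To make the question about W and A concrete, here is a minimal NumPy sketch of the textbook 2D thin plate spline: fit W and A from landmark pairs, then evaluate the spline at arbitrary points. The names `tps_fit` and `tps_apply` are hypothetical, and the kernel U(r) = r^2 log r is the standard textbook choice; morphops' internal kernel and matrix layout may differ. The sketch also assumes, based on how the dense grid is fed to the sampler in the code above, that a feature at source location p appears in the warped image at the location q where f(q) = p. Under that assumption, a spline fitted in the opposite direction (Y -> X) gives an approximation of q. It is exact only at the landmarks, not a true inverse.

```python
import numpy as np


def _U(r):
    # Textbook 2D TPS kernel r^2 * log(r), with U(0) defined as 0.
    out = np.zeros_like(r)
    mask = r > 0
    out[mask] = r[mask] ** 2 * np.log(r[mask])
    return out


def _pairwise_dist(a, b):
    return np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)


def tps_fit(src, dst):
    """Solve [[K, P], [P^T, 0]] [W; A] = [dst; 0] for W (n, 2) and A (3, 2)."""
    n = len(src)
    K = _U(_pairwise_dist(src, src))
    P = np.hstack([np.ones((n, 1)), src])
    L = np.zeros((n + 3, n + 3))
    L[:n, :n] = K
    L[:n, n:] = P
    L[n:, :n] = P.T
    rhs = np.vstack([dst, np.zeros((3, 2))])
    params = np.linalg.solve(L, rhs)
    return params[:n], params[n:]


def tps_apply(pts, src, W, A):
    """Evaluate f(p) = U(|p - src_j|) W + [1, p] A for each row p of pts."""
    U = _U(_pairwise_dist(pts, src))
    P = np.hstack([np.ones((len(pts), 1)), pts])
    return U @ W + P @ A


# Illustrative landmarks: a 5 x 5 grid in [-1, 1] and a slightly perturbed copy.
rng = np.random.default_rng(0)
gx, gy = np.meshgrid(np.linspace(-1, 1, 5), np.linspace(-1, 1, 5))
X = np.stack([gx.ravel(), gy.ravel()], axis=1)
Y = X + (rng.random(X.shape) - 0.5) * 0.05

W_f, A_f = tps_fit(X, Y)  # forward spline f: X -> Y
W_g, A_g = tps_fit(Y, X)  # reverse spline g: Y -> X (approximate inverse of f)

p = np.array([[0.3, -0.2]])        # a point in source coordinates
q = tps_apply(p, Y, W_g, A_g)      # approximate solution of f(q) = p
residual = float(np.linalg.norm(tps_apply(q, X, W_f, A_f) - p))
print(q, residual)
```

If `mops.tps_warp(X, Y, pts)` maps the X landmarks onto the Y landmarks as it is used in this thread, then swapping its first two arguments and passing the points in [-1, 1] coordinates would be the library analogue of the reverse fit. That has not been verified here, and the residual printed above shows how far the approximation is from an exact inverse for this toy deformation.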

Ah, so if I understand correctly, you would like to warp points in addition to dense_grid using the TPS warp from X to Y, correct?

You could try to dig out the matrices manually, but perhaps there is a simpler route:

warped_combined = mops.tps_warp(X, Y, np.vstack((dense_grid,_points)))
warped_C = warped_combined[:len(dense_grid)]
new_point = warped_combined[len(dense_grid):].astype(int)

Basically, I combined the dense_grid and _points input lists (with the _ in front, as it appears points is normalized) into one list, warped the combined list, and split the combined warped output back into the two separate outputs. Would something like this work?

No, I'm sorry, but that's not what I meant. I mean:

Let's say there is a point on the left eye in the original image (left image), and I want the corresponding point on the left eye in the warped image (right image). The function mops.tps_warp can't help me do that. Could you take another look?

Maybe I've got it now, does the below picture look right? Before the warp the nearest pixel is (3,3), but after it is (1,2). And you'd like to find that nearest pixel (1,2) after the warp correct?

(I'm using green as an element of _points, blue as the pixels of the original image, and purple as the warped locations of the original pixels. Hopefully it makes sense:))