unionml


Integration: BentoML for Model Serving

User Story: As an ML Engineer I want to serve my UnionML- or Flyte-trained model using BentoML

```python
# bentoml configuration so that
from unionml import Model

# UnionML deployment journey
# This will create a CRD in Flyte cluster that is a BentoML endpoint users can hit
# - TODO: create an endpoint via backend plugin
model = Model(...)
model.serve(bentoml_service, ..., auto_deploy=YataiConfig(...), approve_before_deploy=True)
model.remote_train(...)  # this actually deploys to bentoml
```

```python
# This is the Flyte journey:
from typing import List

import torch.nn as nn
from flytekit import task, workflow
from unionml import Model


@task
def produce_model() -> nn.Module:  # training inputs elided
    ...
    return MyModel  # user-defined nn.Module (not shown)


# assume that a flyte execution has produced
model = Model()


@model.predictor
def predictor(model_obj: nn.Module, features: List[float]):
    ...


@workflow
def batch_predict_wf(features: List[float]):
    m = produce_model()
    return model.predict_task(m=m, features=features)  # proposed API


@workflow
def deploy_model():
    m = produce_model()
    model.deploy_task(m=m)  # proposed API
```

TODO:

- [ ] create design doc for how the integration will work

- [ ] implement integration

Will this need a backend plugin?

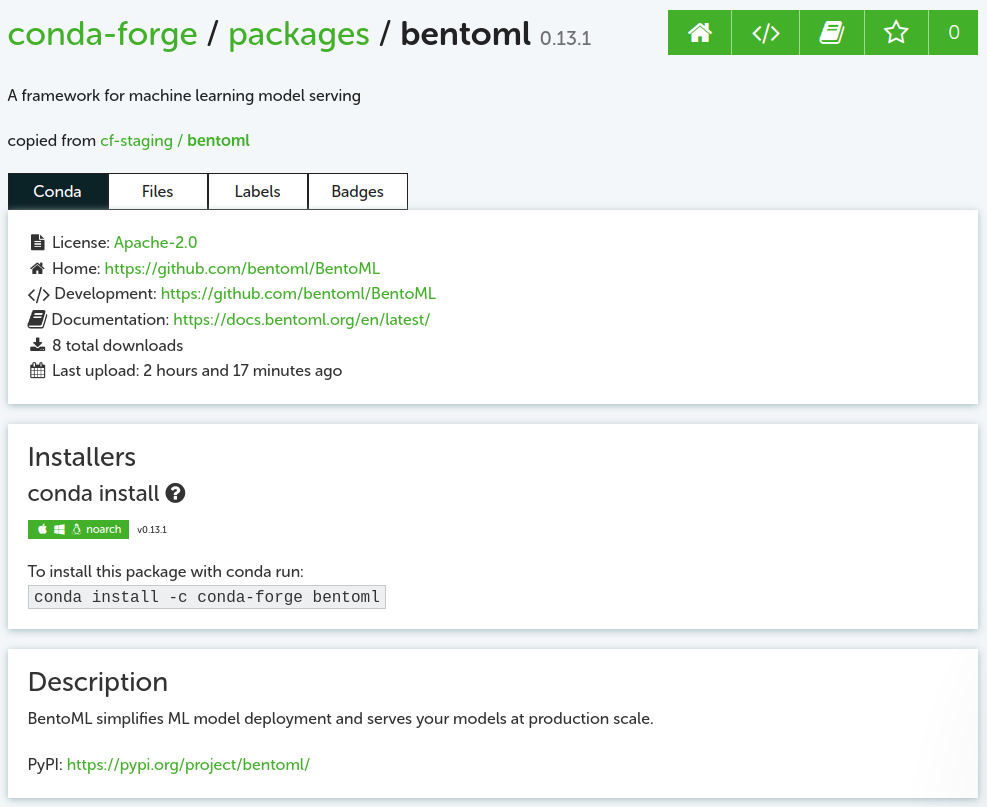

Once you start implementing this, bentoml will become a dependency. At that point, bentoml will also need to be available on the conda-forge channel so that unionml can be packaged on conda-forge.

I have already started adding bentoml to conda-forge.

- https://github.com/conda-forge/staged-recipes/pull/19457

@cosmicBboy :tada: It is now available on conda-forge!

- Conda: https://anaconda.org/conda-forge/bentoml

- Feedstock: https://github.com/conda-forge/bentoml-feedstock

Design Doc

The UnionML <> BentoML integration should facilitate the creation of bentos, the model-packaging entity that can be served locally, on Yatai, or on a target cloud infrastructure such as AWS Lambda.

This will require an adaptor class that converts UnionML functions/constructs into BentoML constructs.

This would mean:

- saving UnionML-trained models into the local BentoML model store: https://docs.bentoml.org/en/latest/tutorial.html#saving-models-with-bentoml

- translating `unionml.Model.predict_task` to the bentoml service API.

- building the resulting bento.

- containerizing it, plus convenience functions for running the bento in a docker container.

- creating a `unionml init` template that contains the minimum working code/config for training a model with unionml and serving it locally with bentoml, with instructions on how to deploy the model to one or a few cloud targets (e.g. AWS Lambda and/or SageMaker).
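The adaptor described above could start as something like the following sketch, written in plain Python so it runs without bentoml installed. `ServiceSpec` and `to_service_spec` are hypothetical names, not UnionML or BentoML APIs; the sketch only shows how the model name, the feature loader, and the predictor might map onto a service name and a single API handler, which a real adaptor would then register on a `bentoml.Service` via `@svc.api`.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ServiceSpec:
    """Hypothetical container for what an adaptor would hand to bentoml."""

    name: str                      # bentoml service name, taken from model.name
    handler: Callable[[Any], Any]  # function a real adaptor would wrap with @svc.api


def to_service_spec(
    model_name: str,
    model_obj: Any,
    feature_loader: Callable[[Any], Any],
    predictor: Callable[[Any, Any], Any],
) -> ServiceSpec:
    def handler(raw_features: Any) -> Any:
        # Reuse the UnionML-defined logic: parse the request payload with the
        # feature loader, then call the predictor on the trained model object.
        features = feature_loader(raw_features)
        return predictor(model_obj, features)

    return ServiceSpec(name=model_name, handler=handler)


# Toy stand-ins: the "model" is a scale factor and features arrive as strings.
spec = to_service_spec(
    "digits_classifier",
    2.0,
    feature_loader=lambda raw: [float(x) for x in raw],
    predictor=lambda m, feats: [m * x for x in feats],
)
print(spec.name, spec.handler(["1", "2"]))  # digits_classifier [2.0, 4.0]
```

Closing over the trained model object keeps the handler free of bentoml-specific code, so the same UnionML functions back both batch and online prediction.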

Deployment Documentation: UnionML shouldn't document the specific ways in which bentoml models can be deployed. Once a UnionML user has trained a model and successfully served it locally, simply point them to the Yatai and bentoctl docs.

User-facing API

Given this UnionML App:

```python
from typing import List

import pandas as pd
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score

from unionml import Dataset, Model

dataset = Dataset(name="digits_dataset", test_size=0.2, shuffle=True, targets=["target"])
model = Model(name="digits_classifier", init=LogisticRegression, dataset=dataset)


@dataset.reader
def reader() -> pd.DataFrame:
    return load_digits(as_frame=True).frame


@model.trainer
def trainer(estimator: LogisticRegression, features: pd.DataFrame, target: pd.DataFrame) -> LogisticRegression:
    return estimator.fit(features, target.squeeze())


@model.predictor
def predictor(estimator: LogisticRegression, features: pd.DataFrame) -> List[float]:
    return [float(x) for x in estimator.predict(features)]


@model.evaluator
def evaluator(estimator: LogisticRegression, features: pd.DataFrame, target: pd.DataFrame) -> float:
    return float(accuracy_score(target.squeeze(), predictor(estimator, features)))
```

We can then configure bentoml like so:

```python
from unionml.bentoml import BentoMLService

# initialize a service with the model object, along with other bento-related configuration
bentoml_service = BentoMLService(model, ...)  # Service name will be model.name

# BentoMLService exposes a svc attribute that is the underlying bentoml.Service instance
bentoml_service.svc

# build the bento locally
# https://docs.bentoml.org/en/latest/concepts/bento.html#bento-build-options
# this will create a local bento "digits_classifier:<tag>"
# and may also output a bentofile.yaml
bentoml_service.build(**kwargs)

# create docker container, which will create a docker image "digits_classifier:<tag>"
bentoml_service.containerize(**kwargs)

# alternatively, just use a bentofile.yaml in the project directory
```

Then serve locally with:

```shell
$ bentoml serve bentoml_integration:bentoml_service.svc --reload
```

Or with docker:

```shell
$ docker run -it --rm -p 3000:3000 digits_classifier:<tag> serve --production
```
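Once the bento is running on port 3000, clients can send features over HTTP. The sketch below only builds the request with the standard library and does not send it. It assumes the API function is named `predict` (in bentoml 1.x an API's route defaults to its function name) and that the `PandasDataFrame` input descriptor accepts JSON records; `build_predict_request` and the feature column names are hypothetical placeholders.

```python
import json
import urllib.request
from typing import Any, Dict, List


def build_predict_request(
    rows: List[Dict[str, Any]], host: str = "localhost", port: int = 3000
) -> urllib.request.Request:
    """Build (but do not send) a POST request to the bento's predict endpoint."""
    body = json.dumps(rows).encode("utf-8")  # one JSON object per dataframe row
    return urllib.request.Request(
        f"http://{host}:{port}/predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder feature row; the real digits features have 64 pixel columns.
req = build_predict_request([{"feature_a": 0.0, "feature_b": 1.0}])

# With a server running, the request could be sent with:
# with urllib.request.urlopen(req) as resp:
#     predictions = json.loads(resp.read())
```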

For deploying to Yatai or with bentoctl, point users to the respective bentoml guides.

Handling Data Descriptors

This is an additional spec for how to handle the svc.api input/output data descriptors.

UnionML should have reasonable defaults for translating the `Dataset.feature_loader` and `Model.predictor` function type annotations into `bentoml.io` data descriptors, but for cases the defaults can't handle, unionml should provide a fallback mechanism.

Say we have a bentoml definition like this:

```python
@svc.api(input=bentoml.io.PandasDataFrame(), output=bentoml.io.JSON())
def predict(dataframe: pd.DataFrame) -> List[float]:
    ...
```

To infer the data descriptors automatically, unionml will use the type of the predictor's `features` argument (or the `feature_loader` return type) for the input descriptor, and the predictor's return type for the output descriptor:

```python
@dataset.feature_loader
def feature_loader(features: pd.DataFrame) -> ...:  # translate to bentoml.io.PandasDataFrame()
    ...


@model.predictor
def predictor(..., features: pd.DataFrame) -> List[float]:  # translate to bentoml.io.JSON()
    ...
```
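The inference step described above could be sketched with `typing.get_type_hints` and `typing.get_origin`. This is a minimal sketch, not UnionML's implementation: the type-to-descriptor table is a hypothetical default, and descriptor names are returned as strings so the code runs without bentoml or pandas installed. `pd.DataFrame` is matched by its qualified name (`pandas.core.frame.DataFrame`) rather than by importing pandas.

```python
import typing
from typing import Any, Callable, List, Optional, Tuple

# Hypothetical default mapping from Python types to bentoml.io descriptor names.
_BY_QUALNAME = {
    "pandas.core.frame.DataFrame": "PandasDataFrame",
    "numpy.ndarray": "NumpyNdarray",
}
_BY_BUILTIN = {list: "JSON", dict: "JSON", str: "Text"}


def infer_descriptor(tp: Any) -> Optional[str]:
    """Return a descriptor name for a type annotation, or None if unsupported."""
    if tp is None:
        return None
    origin = typing.get_origin(tp) or tp  # e.g. List[float] -> list
    if origin in _BY_BUILTIN:
        return _BY_BUILTIN[origin]
    key = f"{getattr(origin, '__module__', '')}.{getattr(origin, '__qualname__', '')}"
    return _BY_QUALNAME.get(key)


def infer_io(predictor: Callable[..., Any]) -> Tuple[Optional[str], Optional[str]]:
    """Infer (input, output) descriptors from the predictor's annotations."""
    hints = typing.get_type_hints(predictor)
    return infer_descriptor(hints.get("features")), infer_descriptor(hints.get("return"))


def predictor(estimator: Any, features: List[List[float]]) -> List[float]:
    return [sum(row) for row in features]


print(infer_io(predictor))  # ('JSON', 'JSON')
```

Returning `None` for an unmapped type, rather than guessing, gives the fallback mechanism a clear signal for when the user has to supply descriptors explicitly.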

But users can fall back to specifying the descriptors explicitly:

```python
bentoml_service.predict_api(input=bentoml.io.PandasDataFrame(), output=bentoml.io.JSON())
```
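The fallback can be read as a precedence rule: explicitly passed descriptors win, inferred ones fill in the rest, and anything still missing is an error. A minimal sketch under that assumption, with `resolve_descriptors` as a hypothetical helper and descriptor names as plain strings; the `input`/`output` keyword names mirror the `predict_api` call above.

```python
from typing import Optional, Tuple


def resolve_descriptors(
    inferred_input: Optional[str],
    inferred_output: Optional[str],
    input: Optional[str] = None,
    output: Optional[str] = None,
) -> Tuple[str, str]:
    """Prefer explicit descriptors; fall back to the inferred ones."""
    resolved_input = input if input is not None else inferred_input
    resolved_output = output if output is not None else inferred_output
    if resolved_input is None or resolved_output is None:
        raise ValueError(
            "could not infer bentoml.io descriptors from type annotations; "
            "pass input=... and output=... explicitly"
        )
    return resolved_input, resolved_output


# Inference handled the output but not the input, so the user supplies it.
print(resolve_descriptors(None, "JSON", input="PandasDataFrame"))  # ('PandasDataFrame', 'JSON')
```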

Alternative

Alternatively, UnionML could use the `feature_loader` component to create a custom `IODescriptor`, so that the function's logic can be reused in the bentoml service.

UnionML Runners

A UnionML app should use a custom runner that calls the `Model.predict` method instead of one of the built-in bentoml runners, so that the unionml-defined logic is reused.